Lesser-documented IBM Tivoli Monitoring features

I implement IBM monitoring products, and I wondered how far open source has come compared with IBM's solutions for monitoring hardware and software. So I installed the most popular open source monitoring systems and read their documentation. I was mainly interested in solution architecture. The following open source products caught my attention: Zabbix, Nagios, and NetXMS. They seemed to be the most popular and the most frequently mentioned. All of them can be compared with IBM Tivoli Monitoring (ITM), which is the core of service monitoring at IBM. As a result, I decided to describe the poorly documented architecture of ITM, which is an advantage in large installations.

It is worth mentioning that ITM is not the only IBM product with this functionality today. A product called IBM Application Performance Management appeared recently, but I will cover its architecture another time.

Because of the way ITM works, it is not recommended for monitoring a large amount of network equipment. There are all sorts of unusual cases, but IBM Tivoli Network Manager is normally used for that.

I will mention Zabbix. I have often come across it at customer sites and heard a lot about it. Once, a customer surprised me with a requirement to receive data from the agent every 10 seconds. He was very disappointed that in ITM you cannot create triggers on an average over a period (for the sake of argument you could build a workaround, but why?). He was familiar with Zabbix.

In Zabbix (and similarly in NetXMS), triggers analyze historical data. This is very cool, but I have never needed it. The Zabbix agent passes data to the server (directly or through a Zabbix proxy). The data is stored in a database. Triggers are then evaluated against it, with help from a powerful macro system. Hence the hardware performance requirements just to run the basic functionality.
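To make the idea of a trigger over historical data concrete, here is a minimal sketch in Python. It is not Zabbix code; the class `HistoryTrigger` and its parameters are hypothetical names chosen for illustration. It only shows that the server has to keep every sample for the window before it can evaluate an average.

```python
from collections import deque
from statistics import mean


class HistoryTrigger:
    """Hypothetical server-side trigger: fires when the average over a window exceeds a threshold."""

    def __init__(self, window_seconds, threshold):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self.samples = deque()  # (timestamp, value) pairs, oldest first

    def add_sample(self, timestamp, value):
        # The server stores every sample it receives from the agent.
        self.samples.append((timestamp, value))
        # Drop samples that have fallen out of the time window.
        while self.samples and self.samples[0][0] <= timestamp - self.window_seconds:
            self.samples.popleft()

    def fired(self):
        # The condition is evaluated over stored history, not over one value.
        if not self.samples:
            return False
        return mean(value for _, value in self.samples) > self.threshold
```

A single spike does not fire such a trigger, while a sustained high load does. The cost is that all samples must reach the server and be stored there.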

ITM works differently. The ITM server receives all data exclusively from agents; it has no built-in SNMP polling or anything similar. The server is a multi-threaded application with a built-in database. The distinctive feature of ITM is how triggers work (ITM calls them situations, but I will call them triggers to keep the terminology consistent). Triggers are executed on the agent. Moreover, these triggers are SQL queries with a condition. The server compiles the triggers into binary SQL code and hands it to the agent for execution. The agent is architected so that it looks like a database.

All kinds of metrics are already built into the agent (the ability to receive data from applications and scripts was added relatively recently) and are described as tables in a relational database. The agent executes the SQL query at the specified interval. The layer that I loosely call the "database" makes the necessary calls to the operating system (OS) and presents the data as a table. The OS is queried no more often than once every 30 seconds, so the data in a table is refreshed no more often than every 30 seconds. It follows that the agent can execute many similar triggers without greatly affecting the load, because it will not collect data more often than every 30 seconds. It is also interesting that the agent does not bother the server as long as the SQL query it executes returns 0 rows. As soon as the query returns one or more rows, all of them go to the server (the trigger condition has occurred). The server, in turn, puts the data in a temporary table until a separate thread processes it, checks additional conditions, and generates an event in the system. Anticipating the question: how does an agent handle event data, such as logs? This works fine too; the agent sends such data to the server immediately, in active mode.
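The agent-side behavior described above can be sketched as follows. This is an illustration only: ITM does not use SQLite, and the names `AgentTable`, `evaluate`, the `disk` table, and its columns are assumptions made up for the example. The sketch shows three points from the text: the trigger is an SQL query with a condition, the underlying table is refreshed at most once per 30 seconds, and the server is contacted only when the query returns rows.

```python
import sqlite3
import time

REFRESH_SECONDS = 30  # minimum interval between OS queries, as described in the text


class AgentTable:
    """Hypothetical agent-side table backed by an in-memory SQLite database."""

    def __init__(self, collect, clock=time.monotonic):
        self.collect = collect  # assumed callable returning (mount, used_pct) rows from the OS
        self.clock = clock
        self.last_refresh = None
        self.db = sqlite3.connect(":memory:")
        self.db.execute("CREATE TABLE disk (mount TEXT, used_pct REAL)")

    def _refresh_if_stale(self):
        now = self.clock()
        if self.last_refresh is not None and now - self.last_refresh < REFRESH_SECONDS:
            return  # data is fresh enough; do not query the OS again
        self.db.execute("DELETE FROM disk")
        self.db.executemany("INSERT INTO disk VALUES (?, ?)", self.collect())
        self.last_refresh = now

    def run_trigger(self, sql):
        self._refresh_if_stale()
        return self.db.execute(sql).fetchall()


def evaluate(table, sql, send_to_server):
    """Run one trigger; contact the server only if the query returned rows."""
    rows = table.run_trigger(sql)
    if rows:
        send_to_server(rows)
    return rows
```

Running many triggers against the same table within 30 seconds reuses the cached rows, which is why additional similar triggers add little load in this model.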

Hence the conclusion: the ITM server database does not contain history, only live data. Triggers are executed on the agent side, so the agent takes on part of the load.

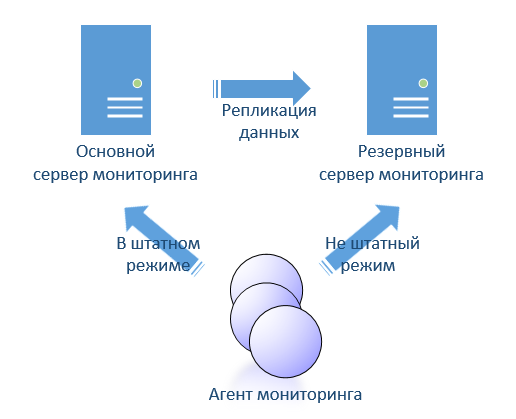

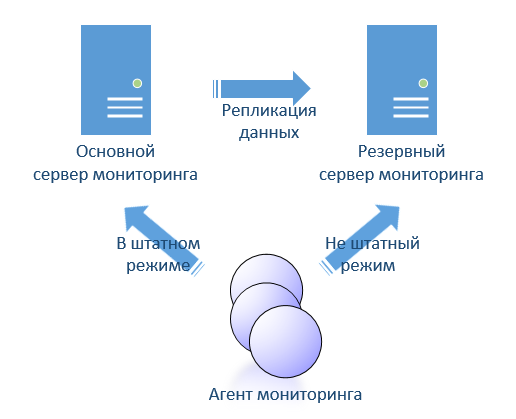

While looking at open source, I also immediately wondered how fault tolerance is implemented. I did not find what I was looking for. Since ITM allows you to set up hot standby (hot redundancy), I would like to see something similar in open source. In ITM this is implemented quite simply: two servers replicate their databases from active to passive. Both servers are specified in the agent settings, and agents switch between the two servers automatically.
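The agent side of this scheme can be sketched roughly as below. This is not ITM code; `AgentConnection` and the `connect` callable are hypothetical, and the real switching logic may differ. The sketch assumes the agent stays with its current server while it is reachable and moves to the other configured server otherwise.

```python
class AgentConnection:
    """Hypothetical agent that knows both servers and switches between them."""

    def __init__(self, servers, connect):
        self.servers = list(servers)  # both servers from the agent settings
        self.connect = connect        # assumed callable: True if the server accepts the agent
        self.active = None

    def ensure_connected(self):
        # Prefer the server we are already attached to, then try the others in order.
        candidates = [self.active] if self.active is not None else []
        candidates += [s for s in self.servers if s != self.active]
        for server in candidates:
            if self.connect(server):
                self.active = server
                return server
        self.active = None  # neither server is reachable right now
        return None
```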

History collection in ITM is implemented by the same triggers, except that in the system they are marked as historical and are configured separately. According to the history settings, the server sends the agent historical SQL queries to execute, but these are unconditional (like select * from table). The result of such a query is all the data in the agent's tables. This data is appended to a file. The agent periodically sends the historical data to a special warehouse proxy agent, which in turn writes it to a dedicated database, usually called the Warehouse. If the agent loses contact with the server or with the proxy agent, nothing terrible happens apart from the history file growing. The agent hands the history to the proxy as soon as it can. The ITM server does not have access to the warehouse database, and therefore triggers over historical data are not possible.
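The buffering behavior can be illustrated with a short sketch. The file format (JSON lines), the class `HistoryBuffer`, and the `upload` callable are assumptions for the example and say nothing about how ITM actually stores its history files. The point is that rows accumulate locally and are removed only after the warehouse proxy has accepted them.

```python
import json
import os


class HistoryBuffer:
    """Hypothetical local history file that survives proxy outages."""

    def __init__(self, path):
        self.path = path

    def append(self, rows):
        # Result of an unconditional query: every row is appended to the file.
        with open(self.path, "a", encoding="utf-8") as f:
            for row in rows:
                f.write(json.dumps(row) + "\n")

    def flush(self, upload):
        """Send buffered rows via upload(rows); keep the file if the upload fails."""
        if not os.path.exists(self.path):
            return 0
        with open(self.path, encoding="utf-8") as f:
            rows = [json.loads(line) for line in f if line.strip()]
        if not rows or not upload(rows):
            return 0  # proxy unreachable: the file just keeps growing
        os.remove(self.path)
        return len(rows)
```

If `upload` returns `False`, nothing is lost; the next successful `flush` delivers everything collected in the meantime.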

I like open source. Affordable solutions have their advantages and disadvantages. I have the feeling that the choice of architecture was determined by where each solution was originally used. The ITM core originated inside another company, apparently sometime in the early 90s. I suppose that in those days memory was scarce and processors were weak by modern standards. That is why sophisticated resource-saving solutions were sought.
