MIT course "Computer Systems Security". Lecture 19: “Anonymous Networks”, part 3 (lecture from the creator of the Tor network)

- Transfer

- Tutorial

Massachusetts Institute of Technology. Lecture course # 6.858. "Security of computer systems." Nikolai Zeldovich, James Mykens. year 2014

Computer Systems Security is a course on the development and implementation of secure computer systems. Lectures cover threat models, attacks that compromise security, and security methods based on the latest scientific work. Topics include operating system (OS) security, capabilities, information flow control, language security, network protocols, hardware protection and security in web applications.

Lecture 1: “Introduction: threat models” Part 1 / Part 2 / Part 3

Lecture 2: “Control of hacker attacks” Part 1 / Part 2 / Part 3

Lecture 3: “Buffer overflow: exploits and protection” Part 1 /Part 2 / Part 3

Lecture 4: “Privilege Separation” Part 1 / Part 2 / Part 3

Lecture 5: “Where Security System Errors Come From” Part 1 / Part 2

Lecture 6: “Capabilities” Part 1 / Part 2 / Part 3

Lecture 7: “Native Client Sandbox” Part 1 / Part 2 / Part 3

Lecture 8: “Network Security Model” Part 1 / Part 2 / Part 3

Lecture 9: “Web Application Security” Part 1 / Part 2/ Part 3

Lecture 10: “Symbolic execution” Part 1 / Part 2 / Part 3

Lecture 11: “Ur / Web programming language” Part 1 / Part 2 / Part 3

Lecture 12: “Network security” Part 1 / Part 2 / Part 3

Lecture 13: “Network Protocols” Part 1 / Part 2 / Part 3

Lecture 14: “SSL and HTTPS” Part 1 / Part 2 / Part 3

Lecture 15: “Medical Software” Part 1 / Part 2/ Part 3

Lecture 16: “Attacks through a side channel” Part 1 / Part 2 / Part 3

Lecture 17: “User authentication” Part 1 / Part 2 / Part 3

Lecture 18: “Private Internet viewing” Part 1 / Part 2 / Part 3

Lecture 19: “Anonymous Networks” Part 1 / Part 2 / Part 3

Suppose you use Bayesian probability, arguing in this way: “would the court have arranged the evidence that this guy was caught because of a bad OPSEC? Yes, they did. That is, I need reports that would say that he was caught due to non-compliance with the information security of operations on the Internet. ” Thus, for the “double construction” I would need reports that the guy got into a bad OPSEC, because this kind of evidence is quite accessible in a regular police investigation, without mentioning the information obtained from intelligence.

We cannot draw conclusions about this case from any public reports, nevertheless, it seems that this guy was detained precisely because of non-compliance with the rules of OPSEC, that is, what you would have been looking for, trying to catch someone who is involved in illegal things. So, as I said earlier, do not use my network to violate any laws. In addition, if your life or liberty depends on using Tor or any other security product, do not use these products in isolation.

Consider creating multiple lines of defense if your life or freedom is at stake, or if a system crash is completely unacceptable to you. This is true for Tor, TLS, and PGP. Software is an unfinished job.

That's all I wanted to say about the abuse on the Internet. I have 25 minutes left for hidden services.

Anonymity of the respondent is a much more complex problem than the anonymity of the initiator. The anonymity of the initiator is what you get when Alice wants to buy socks, but at the same time remains anonymous for the seller of socks.

Respondent's anonymity is when Alice wants to publish her poems on the Internet and run a web server with her poetry, but does not want anyone to find out where this web server is located, because poetry is so embarrassing. So yes, in fact, there is a hidden service with my bad poems. No, I do not think anyone has already published them. No, I'm not going to tell anyone where he is. I'm just waiting for it to become public.

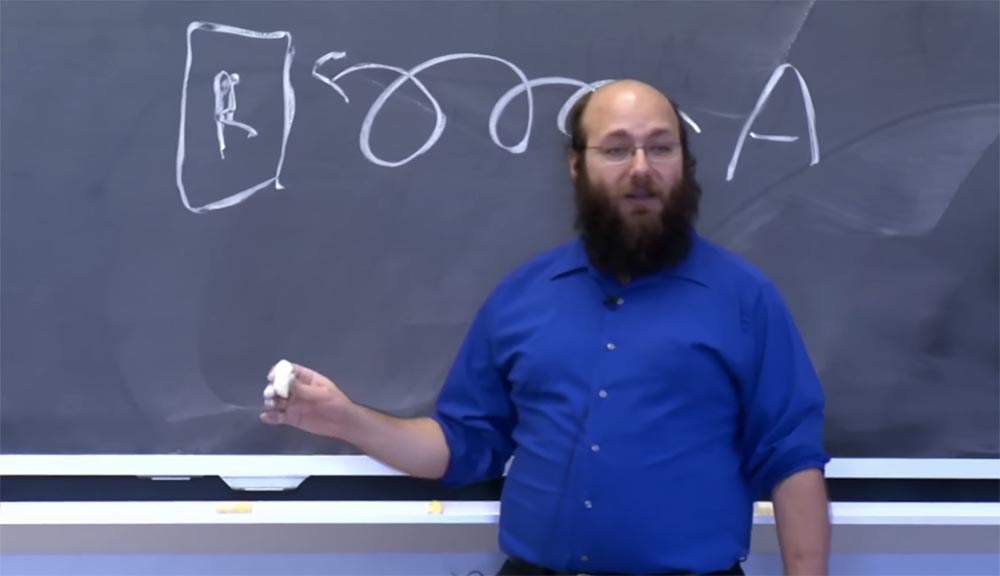

Suppose Alice wants to publish her poems. I will put it on the right, because she is the respondent. Alice can pave the way - this spiral line depicts several repeaters - to the Tor network, this R, and ask him: “please accept these connections!”. So now anyone who connects to this transponder can say they want to talk to Alice. There were projects that worked that way.

However, there are some problems. One problem is that this relay can be a “man in the middle”, which intercepts all traffic if there is not a well-known TLS key here. The second problem may be that the person who owns this verse and who is also shy of his poems and does not want to be a public distributor of poetry, owns this repeater, because it is so terrible!

Other people who own a transponder R, who hate poetry so much that they want to subject this connection to their own censorship, can also affect this. Finally, this R transponder can simply be the target of an attack.

Therefore, you want Alice to switch between different repeaters all the time, and none of them could affect her unencrypted traffic. This is quite doable.

But if we have many different repeaters, what does Alice have to say to people? There should be something like a public key here, because if it just accesses the network: “repeater X, repeater Y, repeater Z”, this will not work because the network changes every 5 minutes and it’s hard to get the correct repeater .

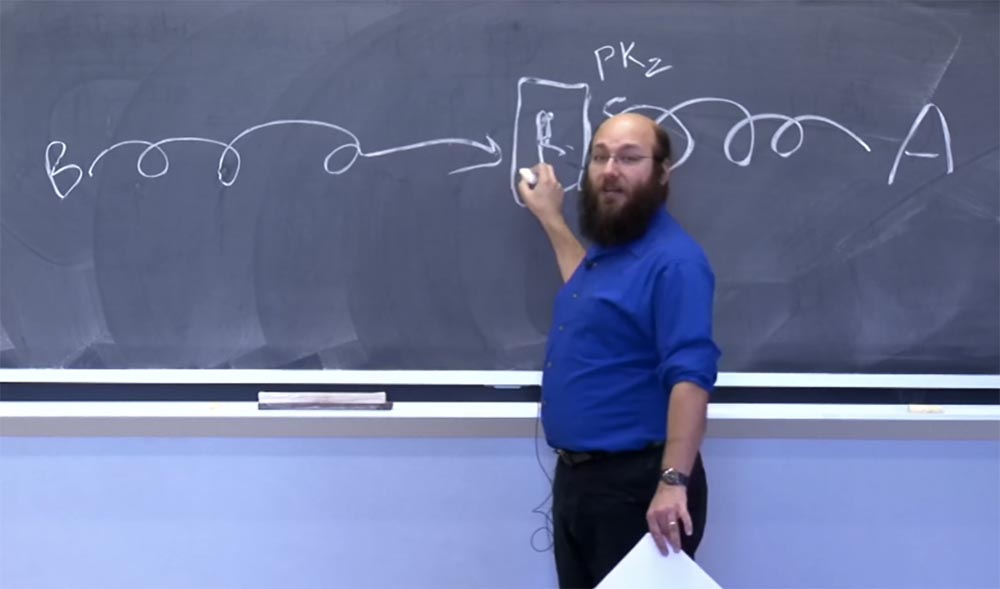

Suppose she informs all members of the network with a public key, and when she comes here, to R, she says: “this is Alice, I will prove it with my public key”. Thus, R knows that the PKz public key launches a specific hidden service — Alice’s poetry. Therefore, if someone else says, "Hey, connect me with the public key Z", then you can do a handshake with Alice with the help of a shared key. And this is the same handshake that is used in the Tor chain.

Now Bob will be able to read Alice’s verses, making another connection through the Tor network. Knowing the PKz key, Bob will be able to tell the relay: "Hey, connect me with Alice with this PKz." After the repeater makes a joint handshake, Bob and Alice will have a shared key, which they can use to encrypt throughout the connection.

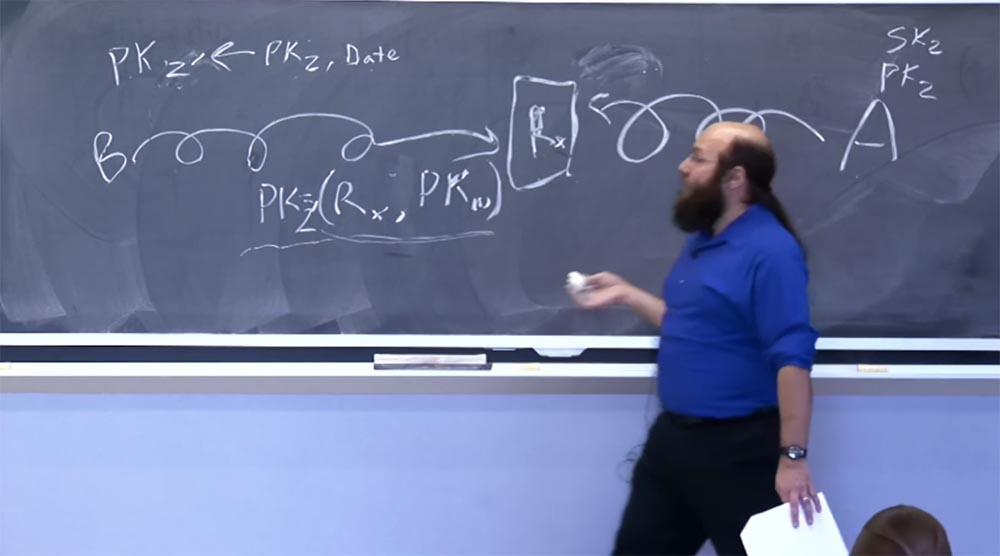

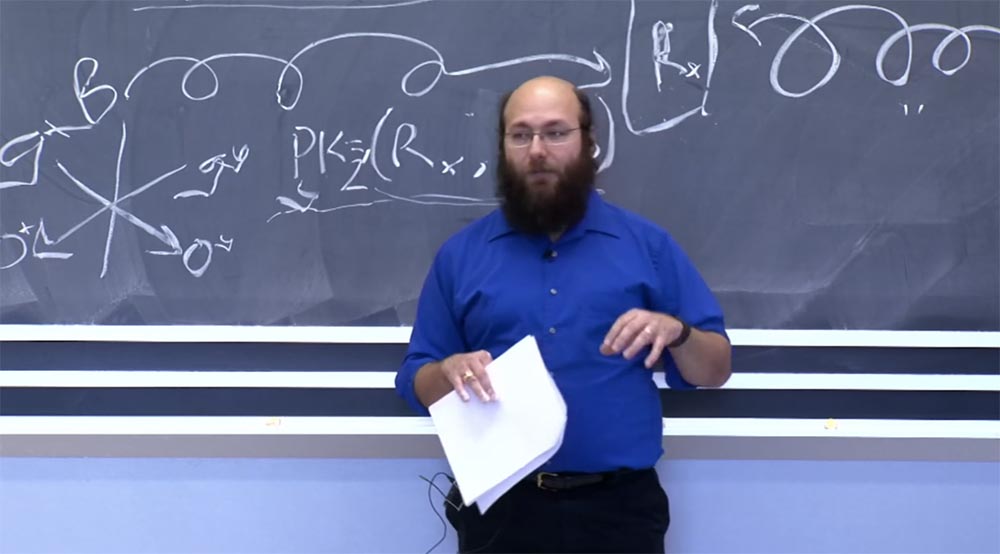

There is something that I missed, namely, how do Bob know how to get here? How does this repeater recognize the PKz public key? We can add some kind of directory system to the system where Alice anonymously via Tor uploads a signed application stating that PKz is on X relay. In this case, Bob asks the directory system to give him this signed statement about PKz, through which Bob finds out where he needs go. We could do even better — let Alice supply another public key, let's call it PKw, and the statement she uploads to the directory and which says that if you want to talk to the public key service Z, then you should go to the Rx repeater. using the public key W.

In this case, the public key Z is not published on the Rx transponder. You could go ahead and encrypt the expression (Rx, PKw) with some shared secret known to Alice and Bob. If you do this, the directory service and people who can contact the directory service will not know how to connect to Alice using this PKz key.

Student: if it is not encrypted, then the Rx relay can learn that it is running the service for Alice, right?

Nick Mathewson: No, not for Alice. He can only find out what the PKz key is using if this expression is not encrypted. We have a solution how to create such a system, it has not been built yet, but it will be cool.

Suppose you do not want to use a centralized directory for this. In this case, we actually use DHT, which is not perfect and is subject to censorship, but we are trying to make it possible to use censorship less and less. I could tell you more about this, but I’m afraid I don’t have time to cover the remaining topics.

There is another problem: if you use one of these service directories and you have a complete list of keys, then you can connect to all those who have not encrypted their connections to find out what they have there. This is called an enumeration attack, or “enumeration attack”. We did not mention this in our newspaper, not because we do not care, but because we are not yet ready to withstand such attacks.

I hope that in 2014 we will find a solution in which Alice and Bob share the key PKz, but this expression (Rx, PKw) is not signed with the key PKz. It is signed by PKz 1 , which is derived from PKz, and, say, dates:

PKz 1 → PKz, Date

If you know PKz and the date, you can get PKz 1 . If, as Alice, you know the secret of SKz, you can create messages that are signed by PKz 1 .

But if you only see PKz 1, even knowing the date, you can not re-get PKz. We have evidence of the possibility of this solution, and if you want to know how it works, then write to me and I will send them to you. This is a cool trick. We are not the first to come up with this idea. But this is the way in which we are going to solve the problem of enumeration attack in the current year, if I really find time to put this solution into practice. That's all for hidden services.

Consider the issue of attacks and defense. Until now, the largest category of attacks that we have seen is attacks at the application level. If you run an application through Tor, and it sends unencrypted traffic like a regular HTTP connection, then the hostile exit point node, like any other node that allows HTTP connections, can monitor and modify this traffic. This is attack number 1 in our system. You can resist it using encrypted traffic.

Fortunately, over the past few years, encryption has been on the rise, and more and more traffic is being encrypted by an excellent free certification authority, which EFF, Mozilla and Cisco reported a day or two ago. I hope that in 2015 unencrypted traffic will be even less than it was this year. So this approach solves this problem.

More interesting attacks include things like traffic tagging, or traffic marking. We made a mistake in our previous implementation of the integrity check. Our early implementation of the integrity check ended with an integrity check between the Alice program and the exit point node, but it turned out that this was not enough. Because if the first R1 repeater “confuses” the traffic in such a way that it creates a pattern that can detect the exit point node, then for the first and last repeater it will serve as a way to find out that they are on the same common path, in the same chain, and identify Alice

Of course, if the first and the last repeater cooperate in one way or another, then in any case they will be able to identify Alice by comparing traffic, but we hope that it will not be easy for them and with time the process of correlating traffic will become harder than we think.

In fact, it would be good to end this type of attack once and for all. For this we have two solutions. One of the expected results of such attacks is to periodically break the chains, because the attacker on the first hub made a mistake regarding control over the last hub.

Therefore, every Tor client checks for a strange bounce rate. An effective long-term solution to the problem is to make the “fuss” with the template on the first hub not create more than 1 bit of information on the last hub. You cannot avoid sending 1 bit of information, because the first hub can always simply disconnect the connection, but you can limit this information to the value of 1 bit. Or better to 2 bits, because then they will have the choice to damage the data or disconnect the connection. I had an idea how best to do this, so I’ll think about this problem.

Denial of service, or DOS, is also important. Last year there was an article about what the authors called the "sniper attack." You see traffic coming from a Tor node that you do not control, and therefore you want to drive everyone away from that node. To do this, you connect to this node, overflow all the buffers of its memory, and it “falls”. After that, you redirect the traffic you are interested in to the node you control, and if it hits the uncontrolled node, then repeat this technique several times until the desired result is achieved.

Our best solutions are designed to eliminate the possibility of a DOS attack on the memory, and our patches have virtually eliminated this possibility. Another option for solving problems of this kind is to make sure that the repeater has a large memory capacity. Therefore, do not use low capacity repeaters on the network. We also do this - if you try to start the Tor node on your phone, it will not receive authorization and will not be included in the list of trusted nodes.

We are also trying to develop chain planning algorithms, although it is very difficult to try to develop a network diagram of nodes that you do not control, so for now this is an unsolved problem.

Now tell me, is it better to tell you about interesting attacks or important attacks?

Student: about interesting!

Nick Mathewson:OK. Then let those who would like to write a program that uses cryptography raise their hands? Great, this is what you should learn! But never trust the implementation of your cryptography. Even if it’s right, it’s still wrong. A long time ago we had one of the worst security mistakes. We considered that each repeater could be a “man in the middle” in any chain of nodes, because we assumed that the correct implementation of the Diffie-Hellman algorithm would check if 0 passed as one of the inputs.

The authors of our Diffie-Hellman implementation assumed that the proper application would never pass a Diffie-Hellman implementation zero.

How does the correct Diffie-Hellman algorithm work? Suppose I have g x and you have g y. I know X, you know Y, and we both can calculate g xy . But if instead “man in the middle” replaces my g value with zero, then I calculate 0 x , you calculate 0 y , we will have the same key, and we can easily communicate with each other, but it will be the key that is known to the attacker, because that it is 0.

The unit works, P also works, P + 1 also works. Thus, you just need to make sure that your g x and g y values are in the range from 2 to P-1, if you implement the Diffie-Hellman algorithm in the form of z sub p.

I would like to talk more about censorship. After all, in fact, this is one of the areas where we can do the most good. In the beginning, we thought to make Tor look like a web client talking to a web server over HTTPS and make it difficult to block. It turns out that it is fantastically difficult and probably not worth doing.

Now we are using an approach that uses various plug-ins that allow using unaccounted repeaters as a bridge, and the user can use this scheme for various traffic conversions. We manage to make it so that new repeaters are added to the network faster than censors implement their blocking.

In fact, this is the case when none of the solutions is categorically workable. Probably, I did not formulate my idea this way. It would be more correct to say that none of these plug-ins is essentially unblocked by any modern technology, but they are good enough to keep traffic unblocked for 1-2 years in most countries or 6-7 months in China.

China currently has the most competent censors in the world, largely because it does not use outsourcing. Most other countries with aggressive censorship of the Internet transfer the functions of censors to European, American and Asian companies, whose interests are not really to sell effective software for censoring the Internet, but to force their customers to constantly participate in the race to update it.

For example, you can buy your censorship software in the United States. Technically speaking, US companies do not have the right to make censorship programs for third countries, but the US has developed a corporate firewall that scales up to 10 million users. I think this is unethical, but again, I am not a member of political organizations or a philosopher.

Paul Cyverson, one of the Tor authors, has a degree in philosophy, but even he cannot answer these questions. But he spends a lot of time not to answer them.

So what did I stop at? 90 minutes is a long time. On censored! So, Tor has repeatedly circumvented the censorship of various developers. They are trying to block the latest versions of Tor, but at the same time make a rather weak lock. Therefore, if we change 1 bit somewhere in the same node identifier, we can easily bypass such a lock.

We cannot prove that they deliberately make Tor bypass the blocking, that is, they sell blocking programs that do not work, in order to sell them one update after another. But it seems to us that it is so. So another reason not to work for censorship providers is not only that this is extremely unethical, but they also don’t provide good enough software.

If you are interested in writing one of these connected things for transporting traffic, this would be a great student project that allows you to have some fun and learn a little more about encryption and networking. And as long as you do it in a language that guarantees secure memory access, you will not be able to spoil your program much. The worst thing that can happen if you write a "weak" plug-in is that censorship will block the network in a month, and not in a year.

We have more topics that I forgot about - related development. Tor is the most popular system, but not the only one of its kind. Many other systems have really good ideas, and you should read them if you are really interested in studying all the anonymity materials on the Internet. The bibliography of freehaven.net/anonbib/ includes research and publications in this area, but not all research in this area is academic in nature.

You should also meet I2P, Gnunet, Freedom - “Freedom”, which no longer exists, and this is not a pun, Mixmaster, Mixminion, Sphynx through the letter Y, Sphinx through the letter I - this is something else, DC-net, especially with the work of Brian Ford and the team of the Dresden Technical University about trying to make DC-net more practical. These are powerful enough systems, but not ready for practical deployment. Why less attention is paid to them than Tor is an open topic, a question to which I have no firm answer.

The next topic is future work. One of the reasons why I’m talking about future work is not because I’d like everyone to know how cool I’m working on, but because I know that students have a lot of free time. And I sort of recruit myself future staff. You might think that I'm kidding. But when I first started working in this area, I complained that I was busy reviewing articles for one conference, writing software, correcting errors, answering letters. I complained about this to the head teacher, and he replied: "you will never have as much free time as today." Now you have a lot more free time than it will be in 10 years, so this is a great time to work on crazy programs.

So let me tell you about your future work at Tor. As I mentioned, the implementation of the idea:

PKz 1 → PKz, Date

It is the key task of upgrading our system of hidden services, the best that we could think of at that time. But since then a lot of research has been done, and perhaps some of them will suggest more effective ideas.

We also reorganized much of our cryptographic system. In 2003, we chose the RSA-1024 public key encryption algorithm, which at that time seemed like a good compromise between security and performance. Currently, we have replaced RSA-1024 with a stronger Ed25519 algorithm. But there are a few more things in the encryption protocol that I would like to replace, and we need to work on this.

I did not talk too much about choosing the path selection path, so I can’t talk about improvements to this process. But our path selection algorithms have been revised and over the past 5-6 years, tremendous research has been carried out on what we need to integrate into the system. Some research work has been done in the area of high and low latency traffic mixing.

Low latency traffic can block high latency traffic in terms of servicing a large number of users, while high latency traffic is still very well anonymized. It is not yet clear whether mixing this traffic will work as we like, and it is unclear whether someone will use it. But it is absolutely clear that if something does not change for the better or if there is some large funding for research in this area, I will not have time to work on it in 2015. But if someone else wants to solve this problem - welcome, it would be fun!

Our workload management algorithms were not justified on the basis of what we could crack together in a week. We improved them, but they could be optimized in the best way. There are some studies scaling to hundreds of thousands of nodes. The existing system design allows you to easily increase the network to 10 or 20 thousand nodes. But since we assume that every client knows about each node, and each node can be connected to any other node, this limits scaling to 100 thousand nodes, and we have to do something about it.

This creates the possibility of attacks based on the fact that the attacker finds out which clients know which nodes, and uses this to attack individual clients on the network. Therefore, simplified approaches to this problem are a bad idea, but you can approach the solution more seriously.

One more thing that can be done by increasing the network to 100 thousand nodes is to abandon the centralized directory authorization authorities and move on to some peer-to-peer development. I do not have much confidence in peer-to-peer projects that I know about, but maybe someone will be able to come up with a more advanced option.

Someone asked a question about adding traffic patterns or fake traffic to try to trick through traffic correlation. This is an exciting area of research that needs those who can take a more responsible approach to work than those who have done it so far.

In the research literature there are too many results on the demarcation of the traffic of two users on a network containing one repeater, because mathematically it was easier to do.

Because of these kinds of things, all the traffic analysis security tools that we know in this area and that are still compatible with wide viewing look good on paper. You can say: “Hurray, this will force the attacker to analyze three times the amount of traffic to calculate the user!”. But in reality, this only means that if the attacker used to take 2 seconds to collect traffic, now it will take 6. This is not sufficient protection, although it may have some positive effect on a real network.

Thus, we really would like to see some things that allow us to implement a template or fake traffic, confusing the hacker. But we don’t like to add “Voodoo” protection based on the assumption that it will bring something good, although in reality we cannot do it. We really like to have evidence that any changes we are going to make will really help us.

It seems I have no more time. After us there may be classes? Not? In that case, I will stay a little more in this audience. Thank you for coming to listen. I would answer your questions, but now it is 12:25, and people may have another lecture. But I will be there. David, thank you so much for coming!

Full version of the course is available here .

Thank you for staying with us. Do you like our articles? Want to see more interesting materials? Support us by placing an order or recommending to friends, 30% discount for Habr's users on a unique analogue of the entry-level servers that we invented for you: The whole truth about VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps from $ 20 or how to share the server? (Options are available with RAID1 and RAID10, up to 24 cores and up to 40GB DDR4).

VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps until January for free if you pay for a period of six months, you can order here .

Dell R730xd 2 times cheaper? Only here2 x Intel Dodeca-Core Xeon E5-2650v4 128GB DDR4 6x480GB SSD 1Gbps 100 TV from $ 249 in the Netherlands and the USA! Read about How to build an infrastructure building. class c using servers Dell R730xd E5-2650 v4 worth 9000 euros for a penny?