Technosphere Mail.Ru - 2 years

Hello, Habr! In February, the Technosphere project marks two years . Over the past year, there have been three major changes that have affected the learning process. The first of them concerned the selection of students - technical interviews. Previously, a student went to a technical interview, not knowing what tasks he would be offered to solve. Now we are sending the students a case, a technical problem, which must be solved in advance and on the spot to explain to teachers the solution. After adding the case, academic performance improved dramatically. The transfer to the second semester in the Technosphere was 27 out of 40 students, i.e. 67% instead of the usual 40-50%.

Secondly, a laboratory was created at the Technosphere, in which students are engaged in solving the practical problems of the Mail.Ru Group, as well as external customers. For example, they research targeting algorithms for advertising campaigns, and also try to create heuristics that can improve the quality of advertising results. In fact, a laboratory is an alternative to an internship at a company. In it, you can work on solving various practical problems from the market, but at the same time do not waste time on the road to the office, doing everything right at your faculty.

The third important step was the decision to switch to two-year education. This year we released the last group of children who studied under the annual program. The subjects they mastered during the year were: algorithms for the intellectual processing of large volumes of data, multithreaded programming in C / C ++, DBMS, Hadoop, methods for processing large volumes of data and information retrieval.

Now we would like to put an end to the annual training program by showing you one of the graduation projects on the subject of “Information Search”. During the semester, the children were given homework, which eventually resulted in a large final project. The rules were as follows:

- The guys were divided into teams of 2-3 people.

- Objective: to make a full search on one of the proposed sites. As planned, your search should consist of united homework + frontend + some kind of bun, for example spellchecker.

Svyatoslav Feldsherov

Svyatoslav FeldsherovOur search engine worked on the site povarenok.ru . In our project, I was engaged in collecting all the components together and making the web. Initially, all the tasks in the course were in Python 2.7, and somehow, by inertia, we started to do the project on it. Therefore, to create a web application, we took Django. Now there is a feeling that it was too much, and it was possible to do with something much simpler, but more on that later.

At the very beginning, we had a very simple idea: take already written homework, glue them and get an almost finished project. So we got the first search engine, on the project we called it draft. At that moment, everything turned out very conveniently: there was a Django application that was flying, a function was returned in the right place that returned the search results - and victory. But the whole construction began to wildly slow down when querying a popular word on a full index. We had a couple of attempts to speed up Python, but nothing good came of it. Then Denis took and rewrote the entire search part in C ++. Now, on my part, it looked like this: try searching in a fast search engine, if it lies, go to a Python search, albeit slowly, but it's better than nothing.

Another epic happened with snippets and a direct index. My first idea was just to take a summary of the recipe and show it as a snippet. At first it seemed like it worked pretty well. But if you delve into the site, you can find something like this: “This recipe was declared by the author, the talented chef Olechka-Sarochka, as“ The most delicious tiramisu. ” And I did not change the name. As if to avoid ... ". Such ingenuity of users has destroyed all my hopes for simple snippets. Somewhere at this point, Daniel rolled out his snippet builder, and again we got some margin of safety: if the snippet builder fell, then look for a summary, and if it isn't, then alas. By the way, this is where Python 2.7 is especially good when you suddenly come across a broken encoding on a page.

The next thing I had to deal with was the spellchecker, but there it was all without much adventure. And my last creation is a front-end to all this, a big, laborious piece for us, but there’s absolutely nothing to tell about it :). Who knows how, my bicycles are not interesting to him, and who does not know how, then we are clearly not the best example for training.

Denis Kuplyakov

Denis KuplyakovMy part of the course work consisted in the implementation of Boolean search with ranking on this database of loaded pages, that is, a text request came in and the output should be the URLs of the pages in descending order of relevance. I really want the search to work quickly: the response to the request is no longer than a second.

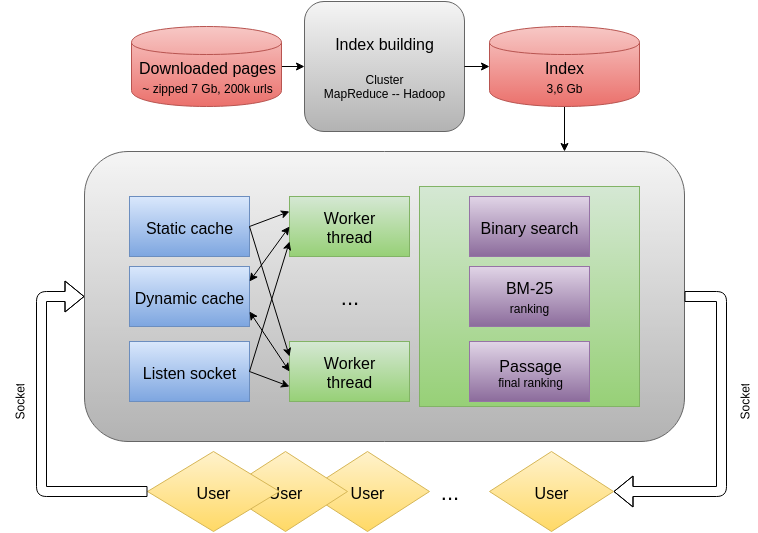

I decided to implement the search as a separate process with several processing threads, to use sockets to submit requests. I chose C ++ as the main programming language. This allowed for high speed and efficient memory management. As a result, the following architecture was developed:

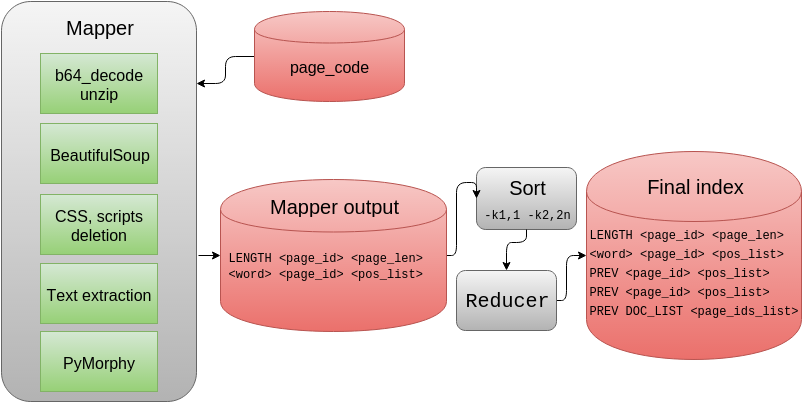

The initial data are the loaded pages of the site povarenok.ru. In total, there were approximately 200 thousand different URLs compressed by zip and recorded in Base64. To successfully search a large number of pages, you need to build a reverse index on them: each word is matched with a list of pages on which it is presented. Lists of positions of occurrences of words in the pages are also needed - they are used at the ranking stage. To build them fast enough, a training cluster with Hadoop was used. Processing was done in Python in streaming mode. Below is the general processing scheme:

To work with the contents of web pages, the Beautiful Soup library was used , which makes it easy to remove CSS, scripts and select text from the page. Words are brought back to normal using pymorphy2 .

After the Map operation, the output is records about the length (number of words) on the pages and lists of word positions. Words are encoded in Base64 to avoid encoding problems, lists are compressed using the simple-9 algorithm and also written to Base64.

MapReduce is configured to sort by the first two fields (the second is treated as a number), and then, at the Reduce stage, length records are saved, lists for words are grouped, the word itself is written once, and then a special PREV line is used (use the previous word). This trick reduced the index by 20 percent. LENGTH, DOC_LIST and PREV are special strings containing characters not used in Base64.

Networking was implemented using the libevent library. To create threads, we used std :: thread from C ++ 11. Incoming requests are queued, from where they are taken out by workers. For parallel use, the queue is “protected” by std :: mutex.

Boolean search. The incoming request is divided into words; for them, lists of pages are loaded from the index. Lists are sorted and quickly intersect. In order not to look for an analog of pymorphy2 for C ++, the user himself normalizes the words in the request (the requesting code uses Python). As a result, we get a list of pages that have all the words.

Draft Ranking: BM25.The resulting pages need to be ranked, but to do this efficiently for everyone immediately turns out to be computationally expensive and therefore long. For this reason, the Okapi BM25 algorithm first performs a rough ranking. Details about it can be found on Wikipedia . I used the standard parameters: k1 = 2.0, b = 0.75. From the index at this stage, we need for the pair (word, page) to get the length of the list of entries.

Final ranking: Passenger. This ranking applies only to the most relevant pages after the rough ranking (for example, the first three hundred). The idea of the algorithm: windows containing search words are highlighted in the text of the page, for each window the following characteristics are calculated:

- the proximity of the window to the top of the page;

- the ratio of words from the query to the total number of words in the window;

- summary TF-IDF of words in a window;

- the degree of similarity of the word order in the window to the order that was in the request.

These characteristics are reduced to one scale and are added up in a linear combination - the maximum such characteristic across all windows becomes the rank of the document. It would be right to select the coefficients to achieve the best result, but I used equal coefficients. From the index at this stage, you need to load the entry lists themselves for pairs (word, page).

Lowering coefficients based on site structure. An obvious consideration: pages with comments, jokes (yes, and there areon "Cook") are not as necessary to the user as recipes. To highlight the structure of the site, I associated each URL with a certain set of attributes based on the occurrence of certain segments in the address. Then he clustered. The result was a breakdown of URLs into groups: blogs, recipes, albums, etc. Then for some of them I introduced empirically selected reduction factors - they are multiplied by the ranks issued by BM25 and the passenger algorithm. This allowed us to lower the mobile version pages, comment pages, blogs, etc.

The bottleneck in the search is access to the hard drive. For everything to work quickly, you need to reduce their number. To do this, I tried to put as much information as possible into memory. The memory of our server was not enough to save everything, and in addition it is necessary to take into account that a lot of additional memory is required for organizing random access (pointers, etc.).

Static cache. At the beginning of the search, the entire index is read and loaded into memory:

- for each word, the offset of the list of pages in the index file;

- lengths of all pages;

- for all pairs (word, page) the length of the list of positions;

- for all pairs (word, page) the offset of this list in the index file.

The first allows you to quickly load lists from the hard drive at the boolean search stage. The second and third allow BM25 to be calculated without accessing the hard drive. The fourth is similar to the first, but to speed up the passenger algorithm.

Dynamic cache. Since the search works on the pages of the culinary site, some words such as “recipe”, “pie” are very common. Immediately the idea arises of caching access to the hard drive. To do this, using boost :: shared_lock and C ++ templates, I implemented a cache that can be used from various threads. The lifetime of records is limited by time, the crowding-out strategy is LRU .

Search Quality Assessment.For evaluation, we were given a set of 1000 pairs of queries and marker pages, which are considered the most relevant to each other. The quality can be judged by the average position of the marker among the results.

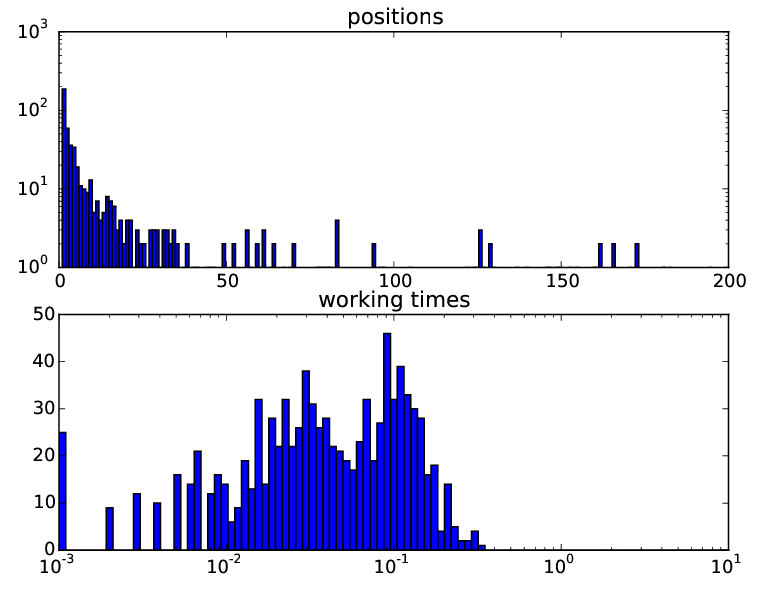

To automate testing, a Python script was written that sends requests in several threads and generates a report with graphs. This made it possible to quickly track the appearance of bugs and the effectiveness of modifications. Here is such statistics that version of the search that we presented in defense of the project had:

The top graph shows the distribution of the number of markers by position in the output. As you can see, most markers fall into the top 20.

The bottom graph shows the distribution of requests by execution time. The average request time was 58 ms, which, in combination with the other components of the project, made it possible to keep within a second of time for the request.

Daniil Polykovsky

Daniil PolykovskyMy responsibility was to develop three components of the project: the spellchecker, direct index and snippets.

The clerk was based on probability theory (Bayes formula). To correct errors, it was necessary to compare the "similarity" of the two words. For this, the modified Damerau - Levenshtein distance was used. The Russian language is characterized by errors associated with the doubling of certain letters. Such errors were considered more likely than, for example, missing a letter, and doubling the letter npunished significantly less than doubling the letter e . The guardian was also able to restore the register, which helped to improve its visual component. An interesting part of the development was the collection of word statistics. Initially, a dictionary was used, built on the site povarenok.ru, but later it was supplemented by a frequency dictionary of the Russian language (O. N. Lyashevskaya, S. A. Sharov, 2009). The result was a very accurate system that could very quickly fix up to two errors, including gluing and pasting words. By the time the project was defended, we could find one mistake in the guardian: the phrase "lordly liver" was replaced by "royal liver".

The second part was the breakdown of the text into sentences and the construction of snippets (summary of the page). For the breakdown, machine learning was used. The selection of signs turned out to be a very interesting problem, as a result, they managed to come up with about 20. The result was quite high, and it was still possible to improve by adding a few regular expressions. The training was conducted on the OpenCorpora data set, so there were errors associated with the words specific to the culinary theme: for example, the reduction of tsp. (teaspoon) was broken into two sentences. On many pages of the site povarenok.ru a summary of the article was marked out, which we could not help but use. If all the words from the query were found only in the page title, then it was shown as a snippet.

***

Perhaps in the future we will continue to publish graduation projects, stay tuned for updates to our blog!

The technosphere Mail.Ru is one of the many educational projects of the Mail.Ru Group, united on the IT.Mail.Ru platform , created for those who are interested in IT and strive to develop professionally in this field. It also presents the championships of the Russian Ai Cup, Russian Code Cup and Russian Developers Cup, educational projects of the Technopark at MSTU. Bauman and Technotrek at MIPT. In addition, at IT.Mail.Ru, you can use the tests to test your knowledge of popular programming languages, learn important news from the IT world, visit or watch broadcasts from relevant events and lectures on IT topics.