Docker and Kubernetes in security-demanding environments

- Translation

Translator's note: The original article was written by Christian Abdelmassih, an engineer from Sweden with a keen interest in enterprise architecture, IT security, and cloud computing. He recently received a master's degree in Computer Science and is eager to share his work: the part of his master's thesis that deals with isolating containerized applications [running in Kubernetes]. The "client" for which this research was prepared is none other than the police of his home country.

Container orchestration and cloud-native computing have become very popular in recent years. Their adaptation has reached such a level that even financial enterprises, banks, the public sector are interested in them. Compared to other companies, they are distinguished by extensive information security and IT security requirements.

One of the important aspects is how containers can be used in production-environments and at the same time maintain the system distinction between applications. Since such organizations use private cloud environments based on virtualization over bare metal, the loss of such isolation during migration to environments with orchestrated containers is unacceptable. Taking into account these limitations, my dissertation was written, and the Swedish Police is considered as a target client.

The specific issue of the research addressed in the thesis is:

This question requires careful study. To start with something, let's look at the common denominator - applications.

Vulnerabilities in web applications - a real zoo from attack vectors. The most significant risks of which are presented in OWASP Top 10 ( 2013 , 2017 ). Such resources help educate developers in reducing typical risks. However, even if developers wrote flawless code, the application may still be vulnerable — for example, via package dependencies.

David Gilbertson wrote a wonderful story about how you can achieve code injection through a malicious package distributed, for example, as part of NPM for Node.js based applications. You can use dependency scanners to detect vulnerabilities, but they only reduce the risk, but do not eliminate it completely.

Even if you create applications without third-party dependencies, it is still unrealistic to believe that the application will never become vulnerable.

Because of these risks, we cannot say that web applications are safe.

Instead, you should stick to the strategy of "deep defense" ( defense in depth , DID). Let's look at the next level: containers and virtual machines.

A container is an isolated user (user-space) environment, which is often implemented using kernel features. For example, Docker uses Linux namespaces, cgroups, and capabilities for this. In this sense, the isolation of Docker containers is very different from virtual machines running type 1 hypervisors (that is, running directly on hardware; bare-metal hypervisors) .

The distinction for such virtual machines can be implemented at such a low level as in real hardware, for example, via Intel VT . Docker containers, in turn, rely on the Linux kernel for the distinction. This distinction is very important to consider when it comes to attacking lower level (layer-below attacks).

If an attacker is able to execute code in a virtual machine or container, it can potentially reach below by executing exit attack outside (the escape attack) .

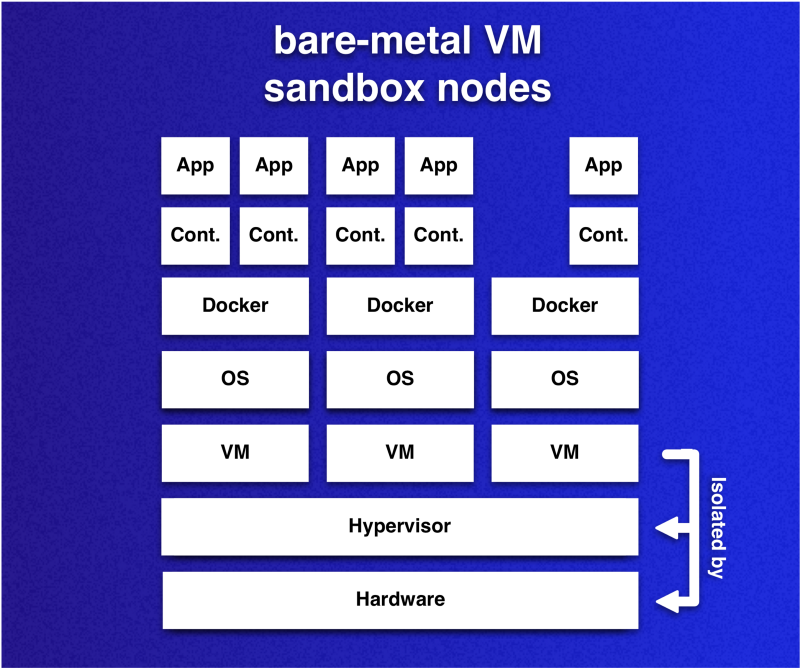

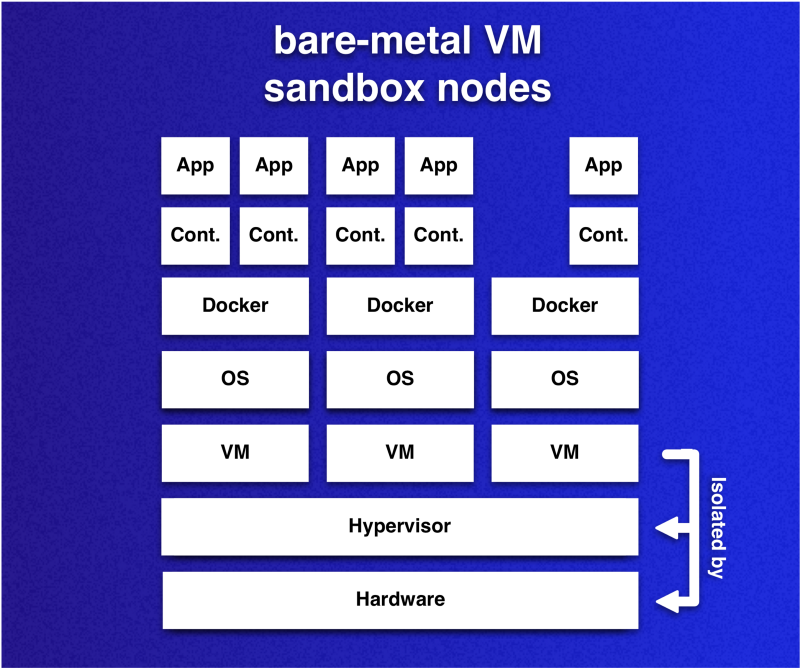

Depending on the use of containers or virtual machines on the hardware, the distinction is implemented at different levels of the infrastructure.

The possibility of such attacks was proven for the VMware ESXi hypervisor during the Pwn2Own 2017 hacker competition, as well as GeekPwn2018. The result was a pair of CVE ( CVE-2017–4902 , CVE-2018–6981 ), which can be used in layer-below attacks to exit virtual machines ( virtual machine escape ). Virtual machines on iron servers do not guarantee absolute security, even though they use iron-delimiting technologies.

On the other hand, if we look at the attack vectors standing on a bare-metal hypervisor compared to the Linux kernel, it is clear that the latter is much used on lshaya attack surface - because of its [Linux Kernel] size and the range of possibilities. B of lshaya attack surface means more potential attack vectors for cloud environments that use container insulation. This is evident in the increasing attention to the output of attacks container (container escape attacks) , which became possible by the exploit to the nucleus (e.g., CVE-2016-5195[those. Dirty COW - approx. trans.] , CVE-2017–1000405 ).

To increase isolation inside the container, you can use modules like SELinux or AppArmor . Unfortunately, such security mechanisms in the kernel do not prevent the escape attacks on the kernel itself. They will only limit the possible actions of the attacker, if going beyond the limits is not possible . If we want to deal with exits beyond the container, an isolation mechanism is needed outside the container or even the core. For example, a sandbox (sandbox) !

gVisor- sandbox for container executable environment, the code of which was opened by Google and which adds an additional core between the container and the OS kernel. This type of sandbox can improve the situation with attacks out of the container, which are carried out at the kernel level . However, core exploits are just one of the attacker's tools.

To see how other attacks can lead to similar results, you need to look at a more general picture of how containers are used in the cloud native era.

To manage containers running in environments with multiple nodes, implement orchestration, in which Kubernetes plays the leading role. As it turns out, orchestrator bugs can also affect the isolation of containers.

Tim Allclair made a wonderful presentation at KubeCon 2018, in which he noted some of the attack surface. In his report, he mentions an example ( CVE-2017–1002101 ), how orchestration bugs can affect isolation — in this case, through the possibility of mounting disk space outside the pod. Vulnerabilities of this type are very problematic, because can bypass the sandbox in which the container is wrapped.

By implementing Kubernetes, we expanded the attack surface. This includes systems hosted on the master Kubernetes. One of them is the API server Kubernetes, where they recently discovered a vulnerability that allows exceeding permissions ( CVE-2018–1002105 ). Since the surface of the Kubernetes master’s attack is beyond the scope of my thesis, this particular vulnerability is not taken into account.

Why are escape attacks so important? Containers provided the ability to run a variety of co-hosted applications in a single OS. So there was a risk associated with the isolation of applications. If a business-critical application and another vulnerable application are running on the same host, an attacker can gain access to the critical application through an attack of the vulnerable application.

Depending on the data with which the organization works, their leakage can harm not only the organization itself, but also individuals and the whole country. As you remember, we are talking about the public sector, finance, banks ... - a leak can seriously affect the lives of people.

So can container orchestration be used at all in such environments? Before embarking on further thinking, it is necessary to conduct a risk assessment.

Even before introducing security measures, it is important to think about what information the organization is actually trying to protect. The decision whether further steps are needed to prevent possible escape attacks on containers depends on the data with which the organization works and the services it provides.

In the future, this means that in order to reach the possibility of exiting the container on a correctly configured host protected by a sandbox for the container, the attacker must:

As you might guess, an organization that does not consider such a scenario valid is supposed to work with data or offer services that are very demanding for confidentiality , integrity and / or accessibility .

Since the thesis is devoted to such clients, the loss of system isolation by going beyond the container limits is impermissible, because its consequences are too great. What steps can be taken to improve insulation? To climb higher to the level in the isolation ladder, you should also look at the sandboxes, in which the OS kernel is wrapped, i.e. virtual machines!

Virtualization technologies that use hosted hypervisors will improve the situation, but we want to limit the attack surface.even more . Therefore, let's examine the hypervisors installed on the iron and see what they lead us to.

The study of the Swedish agency Swedish Defense Research Agency examined the risks of virtualization in relation to the Swedish Armed Forces (Swedish Armed Forces). His conclusion outlined the benefits of these technologies for the armed forces, even if there are strict security requirements and risks that virtualization brings.

In this regard, we can say that virtualization is used (to a certain extent) in the defense industry, since it carries acceptable risks.. Since agencies and enterprises in the defense industry are among the most demanding of IT security clients, we can also argue that acceptable risk for them means its validity for clients considered in the dissertation. And all this - despite the potential exits beyond the virtual machine, discussed above.

If we decide to use this type of sandbox for containers, we need to consider a few things in the context of cloud (cloud-native) specifics.

The idea is that the Kubernetes cluster nodes are virtual machines that use virtualization on hardware. Since virtual machines will play the role of sandboxes for containers that run in pods, each node can be considered as a sandbox-protected environment.

An important note about these sandboxes in the context of the previously mentioned container sandboxes: this approach allows you to place many containers in one sandbox. This flexibility allows to reduce overhead costs when compared with the case when each container has its own sandbox. As each sandbox brings its own OS, we want to reduce their number while maintaining isolation.

Virtual machines installed on hardware — cluster nodes — act as container sandboxes. Containers running in different VMs are delimited with the acceptable level of risk. However, this does not apply to containers running in one VM.

However, since Kubernetes is capable of changing the location of the pods for various reasons, which can spoil the idea of sandboxes used , it will be necessary to add restrictions to the mechanism of co-location of the pods. You can achieve the desired in many ways.

By the time this article was written, Kubernetes ( v1.13 ) supports three basic methods for controlling the planning and / or launching of pods:

Which method (s) to use depends on the application of the organization. However, it is important to note that the methods differ in their ability to discard pod'y after they entered the execution stage (execution) . Now only taints are capable of this - through action

The thesis proposes an idea to use a classification system showing how sensitivity affects co-location. The idea is to use a 1: 1 relationship between the container and the pod and determine the co-location of the pods based on the classification of containerized applications.

For simplicity and reusability, the following 3-step classification system is used:

To meet the collocation requirements for a variety of classified applications, taints and tolerations, with which each node is assigned a class, and PodAffinity are used to apply additional restrictions for pods with class P or PG applications.

This simplified example shows how taints and tolerations can be used to implement co-location control:

Pods 2 and 3 contain applications from the same private group, and the application on Pod 1 is more sensitive and requires a dedicated node.

However, Affinity rules will be required for classes P and PG, which ensure that requests for demarcation are fulfilled as the cluster grows and applications reside in it. Let's see how you can implement these rules. for different classes:

Affinity rules for class P applications require dedicated nodes. In this case, the pod will not be scheduled if the pod goes out without

For applications of the PG class, Affinity rules will do a co-location for pods that have a group identifier

This approach will allow us using the classification system to distinguish between containers, based on the relevant requirements of the containerized application.

What did we get?

We looked at how to implement sandboxes based on type 1 hypervisor (i.e., run on bare metal) to create nodes in Kubernetes clusters and presented a classification system defining the requirements for differentiating containerized applications. If we compare this approach with other solutions considered, it has advantages in terms of providing system isolation.

An important conclusion is that the isolation strategy limits the spread of escape attacks to the container. In other words, the exit from the container itself is not prevented, but its effects are mitigated. Obviously, with this comes additional difficulties that need to be considered when making such comparisons.

To take advantage of this method in a cloudy environment and provide scalability, additional requirements will be placed on automation. For example, to automate the creation of virtual machines and their use in the Kubernetes cluster. The most important thing is the implementation and verification of ubiquitous classification of applications.

This is the part of my dissertation dedicated to isolating a containerized application .

, Attack the services of other nodes, should be considered to prevent the attacker to log out from the container at one site distributed by network attacks (network A propagated attacks) . To address these risks, my thesis proposes cluster network segmentationand cloud architectures are presented, one of which has a hardware firewall .

Those interested can familiarize themselves with the full document - the text of the thesis in public access: “ Container Orchestration in Security Demanding Environments at the Swedish Police Authority ”.

Read also in our blog:

Container orchestration and cloud-native computing have become very popular in recent years. Their adoption has reached the point where even financial enterprises, banks, and the public sector are interested in them. Compared with other organizations, these stand out for their extensive information security and IT security requirements.

One important aspect is how containers can be used in production environments while preserving system-level isolation between applications. Because such organizations use private clouds built on bare-metal virtualization, losing that isolation when migrating to orchestrated containers is unacceptable. My thesis was written with these constraints in mind, with the Swedish Police as the target client.

The specific research question addressed in the thesis is:

How is application isolation maintained in Docker and Kubernetes, compared with virtual machines running on top of the ESXi hypervisor on bare metal?

This question requires careful study. To start somewhere, let's look at the common denominator: applications.

Web applications are messy

Vulnerabilities in web applications are a real zoo of attack vectors, the most significant of which are presented in the OWASP Top 10 (2013, 2017). Such resources help educate developers about reducing typical risks. However, even if developers write flawless code, the application may still be vulnerable, for example through package dependencies.

David Gilbertson wrote a wonderful story about how code injection can be achieved through a malicious package distributed, for example, via NPM for Node.js-based applications. Dependency scanners can detect known vulnerabilities, but they only reduce the risk; they do not eliminate it.

Even if you build applications without third-party dependencies, it is still unrealistic to believe that the application will never become vulnerable.

Because of these risks, we cannot say that web applications are safe.

Instead, you should follow a strategy of defense in depth (DiD). Let's look at the next level: containers and virtual machines.

Containers vs. Virtual Machines: A Tale of Isolation

A container is an isolated user-space environment, usually implemented with kernel features. Docker, for example, uses Linux namespaces, cgroups, and capabilities for this. In this sense, the isolation of Docker containers differs greatly from that of virtual machines run by type 1 hypervisors (that is, bare-metal hypervisors, which run directly on the hardware).

The isolation of such virtual machines can be implemented as low as the hardware itself, for example through Intel VT. Docker containers, in turn, rely on the Linux kernel for isolation. This difference is very important when it comes to attacks on the layer below (layer-below attacks).
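As a rough illustration of how these kernel features surface in practice, the sketch below shows a Kubernetes pod spec that keeps the default namespaces, drops all Linux capabilities, and sets cgroup-backed resource limits. The pod name, container name, and image are hypothetical placeholders, and the settings are one reasonable baseline rather than anything prescribed by the thesis.

```yaml
# Minimal sketch; names and image are placeholders, not from the thesis.
apiVersion: v1
kind: Pod
metadata:
  name: hardened-app
spec:
  # Namespaces: do not share the host's network, PID, or IPC namespaces
  # (these are the defaults, spelled out here for clarity).
  hostNetwork: false
  hostPID: false
  hostIPC: false
  containers:
  - name: app
    image: registry.example.com/app:1.0
    securityContext:
      # Capabilities: drop every Linux capability the process does not need.
      capabilities:
        drop: ["ALL"]
      allowPrivilegeEscalation: false
      runAsNonRoot: true
      readOnlyRootFilesystem: true
    resources:
      # Limits are enforced by the container runtime through cgroups.
      limits:
        cpu: "500m"
        memory: "256Mi"
```

All of these controls are enforced by the shared host kernel, which is exactly why a kernel-level exploit can undo them.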

If an attacker is able to execute code in a virtual machine or container, it can potentially reach below by executing exit attack outside (the escape attack) .

Depending on the use of containers or virtual machines on the hardware, the distinction is implemented at different levels of the infrastructure.

The feasibility of such attacks was demonstrated for the VMware ESXi hypervisor at the Pwn2Own 2017 hacking contest and at GeekPwn 2018. The result was a pair of CVEs (CVE-2017-4902, CVE-2018-6981) that can be used in layer-below attacks to escape from virtual machines (virtual machine escape). Virtual machines on bare-metal servers do not guarantee absolute security, even though they use hardware-level isolation technologies.

On the other hand, if we compare the attack vectors against a bare-metal hypervisor with those against the Linux kernel, it is clear that the latter has a much larger attack surface because of the kernel's size and range of features. A larger attack surface means more potential attack vectors for cloud environments that rely on container isolation. This shows in the growing attention paid to container escape attacks made possible by kernel exploits (for example, CVE-2016-5195 [i.e., Dirty COW; translator's note] and CVE-2017-1000405).

To strengthen isolation inside the container, you can use modules such as SELinux or AppArmor. Unfortunately, such in-kernel security mechanisms do not prevent escape attacks against the kernel itself. They only limit what an attacker can do as long as an escape is not possible. If we want to deal with container escapes, we need an isolation mechanism outside the container, or even outside the kernel. For example, a sandbox!

gVisor is a sandbox for container runtimes that was open-sourced by Google; it adds an additional kernel between the container and the OS kernel. This type of sandbox can improve the situation with container escape attacks carried out at the kernel level. However, kernel exploits are just one of the attacker's tools.

To see how other attacks can lead to similar results, we need to look at the bigger picture of how containers are used in the cloud-native era.
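For reference, here is a hedged sketch of how a gVisor sandbox is typically selected per pod. It assumes a cluster newer than the v1.13 discussed in this article (the stable `node.k8s.io/v1` RuntimeClass API), and nodes whose container runtime has a handler named `runsc` configured. The object names and image are hypothetical.

```yaml
# Assumption: gVisor (runsc) is installed on the nodes and registered
# with the container runtime under the handler name "runsc".
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: gvisor
handler: runsc
---
apiVersion: v1
kind: Pod
metadata:
  name: sandboxed-app            # hypothetical name
spec:
  runtimeClassName: gvisor       # run this pod's containers inside the sandbox
  containers:
  - name: app
    image: registry.example.com/app:1.0   # placeholder image
```

Pods that do not set runtimeClassName keep using the node's default runtime, so sandboxing can be applied selectively.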

The effect of container orchestration on isolation

To manage containers running in multi-node environments, orchestration is introduced, and Kubernetes plays the leading role here. As it turns out, orchestrator bugs can also affect container isolation.

Tim Allclair gave a wonderful presentation at KubeCon 2018 in which he pointed out parts of the attack surface. In his talk he mentions an example (CVE-2017-1002101) of how orchestration bugs can affect isolation, in this case through the ability to mount disk space from outside the pod. Vulnerabilities of this type are very problematic because they can bypass the sandbox in which the container is wrapped.

By introducing Kubernetes, we have expanded the attack surface. It includes the systems hosted on the Kubernetes master. One of them is the Kubernetes API server, where a privilege escalation vulnerability (CVE-2018-1002105) was recently discovered. Since the attack surface of the Kubernetes master is beyond the scope of my thesis, this particular vulnerability is not considered.

Why are escape attacks so important? Containers made it possible to run many co-hosted applications within a single OS, which introduced a risk related to application isolation. If a business-critical application and another, vulnerable application run on the same host, an attacker can gain access to the critical application by attacking the vulnerable one.

Depending on the data the organization works with, a leak can harm not only the organization itself but also individuals and the whole country. Remember that we are talking about the public sector, finance, and banks: a leak can seriously affect people's lives.

So can container orchestration be used at all in such environments? Before thinking any further, a risk assessment is needed.

What risk is acceptable?

Before introducing security measures, it is important to think about what information the organization is actually trying to protect. Whether further steps are needed to prevent possible container escape attacks depends on the data the organization works with and the services it provides.

Looking ahead, this means that to escape a container on a correctly configured host protected by a container sandbox, the attacker must:

- execute code in the container, for example through code injection or by exploiting a vulnerability in the application code;

- exploit another vulnerability, either a zero-day or one for which no patch has yet been applied, to escape the container despite the sandbox.

As you might guess, an organization that does not consider such a scenario acceptable presumably works with data or offers services with very high demands on confidentiality, integrity, and/or availability.

Since the thesis is devoted to such clients, losing system isolation through a container escape is unacceptable because the consequences are too great. What steps can be taken to improve isolation? To climb one step higher on the isolation ladder, we should also look at sandboxes that wrap the OS kernel, that is, virtual machines!

Virtualization technologies that use hosted hypervisors would improve the situation, but we want to limit the attack surface even further. So let's examine bare-metal hypervisors and see where they lead us.

Bare-metal hypervisors

A study by the Swedish Defense Research Agency examined the risks of virtualization with respect to the Swedish Armed Forces. Its conclusion outlined the benefits of these technologies for the armed forces, despite the strict security requirements and the risks that virtualization brings.

We can therefore say that virtualization is used (to a certain extent) in the defense industry because it carries acceptable risks. Since agencies and enterprises in the defense industry are among the clients most demanding of IT security, we can also argue that a risk acceptable to them is acceptable to the clients considered in the thesis. And all this despite the potential virtual machine escapes discussed above.

If we decide to use this type of sandbox for containers, there are a few things to consider in a cloud-native context.

Virtual machines as sandboxed nodes

The idea is that the Kubernetes cluster nodes are virtual machines running on bare-metal virtualization. Since the virtual machines act as sandboxes for the containers running in pods, each node can be regarded as a sandboxed environment.

An important note about these sandboxes compared with the container sandboxes mentioned earlier: this approach allows many containers to be placed in one sandbox. This flexibility reduces overhead compared with giving each container its own sandbox. Because each sandbox brings its own OS, we want to keep their number down while maintaining isolation.

Virtual machines on bare metal, which serve as the cluster nodes, act as container sandboxes. Containers running in different VMs are isolated with an acceptable level of risk. However, this does not hold for containers running in the same VM.

Kubernetes, though, can move pods for various reasons, which could undermine the sandboxing idea, so restrictions must be added to the pod co-location mechanism. This can be achieved in many ways.

At the time of writing, Kubernetes (v1.13) supports three main methods for controlling the scheduling and/or execution of pods:

- node selectors (nodeSelector);

- affinity and anti-affinity rules;

- taints and tolerations.

Which method(s) to use depends on the organization's applications. It is important to note, however, that the methods differ in their ability to evict pods after they have entered the execution stage. Currently only taints can do this, through the NoExecute effect. If this is not handled and some labels change, the result may be undesirable co-location.

Meeting co-location requirements

The thesis proposes using a classification system that expresses how sensitivity affects co-location. The idea is to use a 1:1 relationship between container and pod and to decide the co-location of pods based on the classification of the containerized applications.

For simplicity and reusability, the following three-level classification system is used:

- Class O: The application is not sensitive and has no isolation requirements. It can be placed on any node that does not belong to another class.

- Class PG (private group): The application, together with a set of other applications, forms a private group that requires a dedicated node. It can be placed only on class PG nodes that have the corresponding private group identifier.

- Class P (private): The application requires a private, separate node and can be placed only on empty nodes of its class (P).

To meet the co-location requirements of the various classified applications, taints and tolerations are used to assign a class to each node, and PodAffinity is used to apply additional restrictions to pods with class P or PG applications.

This simplified example shows how taints and tolerations can be used to implement co-location control:

Pods 2 and 3 contain applications from the same private group, while the application in Pod 1 is more sensitive and requires a dedicated node.
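The scenario just described could be expressed in manifests roughly as follows. This is a sketch under assumptions: the taint and label key `app-class`, its values, and all object names and images are hypothetical, and in practice nodes are usually tainted and labeled through provisioning tooling rather than by editing Node objects directly. Because a toleration only permits a pod to run on a tainted node, a nodeSelector is added to steer each pod to the node of its class.

```yaml
# Node reserved for class P; pods without a matching toleration are
# neither scheduled here nor allowed to keep running here (NoExecute).
apiVersion: v1
kind: Node
metadata:
  name: node-1
  labels:
    app-class: p
spec:
  taints:
  - key: app-class
    value: p
    effect: NoExecute
---
# Node reserved for private group 1 (class PG).
apiVersion: v1
kind: Node
metadata:
  name: node-2
  labels:
    app-class: pg-group-1
spec:
  taints:
  - key: app-class
    value: pg-group-1
    effect: NoExecute
---
# Pod 1: class P application, needs a dedicated node.
apiVersion: v1
kind: Pod
metadata:
  name: pod-1
spec:
  nodeSelector:
    app-class: p
  tolerations:
  - key: app-class
    operator: Equal
    value: p
    effect: NoExecute
  containers:
  - name: app
    image: registry.example.com/sensitive-app:1.0
---
# Pod 2: member of private group 1 (Pod 3 would look the same
# apart from its name and image).
apiVersion: v1
kind: Pod
metadata:
  name: pod-2
spec:
  nodeSelector:
    app-class: pg-group-1
  tolerations:
  - key: app-class
    operator: Equal
    value: pg-group-1
    effect: NoExecute
  containers:
  - name: app
    image: registry.example.com/group-app-a:1.0
```

Class O pods carry no toleration, so they can only land on untainted nodes.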

However, affinity rules are also required for classes P and PG to ensure that the isolation requirements continue to be met as the cluster grows and applications are deployed to it. Let's see how these rules can be implemented for the different classes:

    # Class P
    affinity:
      podAntiAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
        - topologyKey: "kubernetes.io/hostname"
          namespaces: ["default"]
          labelSelector:
            matchExpressions:
            - key: non-existing-key
              operator: DoesNotExist

The affinity rules for class P applications require dedicated nodes. In this case, the pod will not be scheduled onto a node that already runs a pod without the non-existing-key label. This works as long as none of the pods have this key.

    # Class PG
    affinity:
      podAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
        - topologyKey: "kubernetes.io/hostname"
          labelSelector:
            matchLabels:
              class-pg-group-1: foobar
      podAntiAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
        - topologyKey: "kubernetes.io/hostname"
          labelSelector:
            matchExpressions:
            - key: class-pg-group-1
              operator: DoesNotExist

For class PG applications, the affinity rules co-locate the pod with pods that have the group identifier class-pg-group-1 and keep it off nodes running pods without that identifier. This approach lets us use the classification system to isolate containers according to the requirements of each containerized application.
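To show how the pieces fit together, here is a sketch of a complete class PG pod that combines a toleration with the affinity rules for its group. The label class-pg-group-1: foobar comes from the rules in this article; the taint key `app-class`, its value, the pod name, and the image are hypothetical. Note that the pod carries the group label itself, since otherwise the rules of the other group members would treat it as a foreign pod.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pg-app-a                      # hypothetical name
  labels:
    class-pg-group-1: foobar          # group identifier matched by the rules below
spec:
  tolerations:
  - key: app-class                    # hypothetical taint key on class PG nodes
    operator: Equal
    value: pg-group-1
    effect: NoExecute
  affinity:
    podAffinity:
      # Only schedule next to pods of the same private group.
      requiredDuringSchedulingIgnoredDuringExecution:
      - topologyKey: "kubernetes.io/hostname"
        labelSelector:
          matchLabels:
            class-pg-group-1: foobar
    podAntiAffinity:
      # Never share a node with pods that lack the group identifier.
      requiredDuringSchedulingIgnoredDuringExecution:
      - topologyKey: "kubernetes.io/hostname"
        labelSelector:
          matchExpressions:
          - key: class-pg-group-1
            operator: DoesNotExist
  containers:
  - name: app
    image: registry.example.com/pg-app-a:1.0   # placeholder image
```

As the name requiredDuringSchedulingIgnoredDuringExecution suggests, these rules are only checked at scheduling time, which is why the NoExecute taint is still needed to evict pods that end up misplaced later.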

What did we get?

Conclusion

We looked at how to implement sandboxes based on a type 1 (bare-metal) hypervisor to create the nodes of Kubernetes clusters, and presented a classification system that defines the isolation requirements of containerized applications. Compared with the other solutions considered, this approach has advantages in terms of system isolation.

An important conclusion is that this isolation strategy limits the propagation of container escape attacks. In other words, the container escape itself is not prevented, but its consequences are mitigated. Obviously, this brings additional complexity that must be taken into account when making such comparisons.

To use this method in a cloud environment and keep it scalable, additional demands are placed on automation, for example automating the creation of virtual machines and their enrollment in the Kubernetes cluster. Most important of all is implementing and verifying the consistent classification of applications.

This is the part of my thesis dedicated to isolating containerized applications.

To prevent an attacker who has escaped a container on one node from attacking services on other nodes, network-propagated attacks must also be considered. To address these risks, my thesis proposes cluster network segmentation and presents cloud architectures, one of which includes a hardware firewall.
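The thesis itself should be consulted for the segmentation design it proposes. Purely as an illustration of the general idea, the sketch below uses Kubernetes NetworkPolicy objects to deny all incoming pod traffic in a namespace and then allow traffic only between members of one private group. It assumes a network plugin that enforces NetworkPolicy; the namespace and policy names are hypothetical.

```yaml
# Deny all ingress to every pod in the namespace by default.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: pg-group-1            # hypothetical namespace
spec:
  podSelector: {}                  # empty selector: applies to all pods
  policyTypes: ["Ingress"]
---
# Allow pods of the private group to receive traffic from each other.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-group
  namespace: pg-group-1
spec:
  podSelector:
    matchLabels:
      class-pg-group-1: foobar
  policyTypes: ["Ingress"]
  ingress:
  - from:
    - podSelector:
        matchLabels:
          class-pg-group-1: foobar
```

Policies like these are enforced on the nodes themselves, so they complement rather than replace segmentation done outside the cluster, such as the hardware firewall mentioned above.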

Those interested can read the full document; the text of the thesis is publicly available: "Container Orchestration in Security Demanding Environments at the Swedish Police Authority".
