The Basics of Multithreading in the .NET Framework

Multithreading is one of the most difficult topics in programming, and it is a constant source of problems. Without a clear understanding of the internal mechanisms, it is very hard to predict the behavior of an application that uses multiple threads. We will not repeat the mass of theory that is readily available online and in good books. Instead, we focus on the specific, most important issues that deserve special attention and should be kept in mind during development.

Threads

As everyone probably knows, a thread in the .NET Framework is represented by the Thread class. Developers can create new threads, give them meaningful names, change their priority, start them, wait for them to complete, or stop them.

Threads are divided into background and foreground threads. The main difference between them is that foreground threads keep the program alive. As soon as all foreground threads have finished, the system automatically stops all background threads and terminates the application. To determine whether the current thread is a background thread, read the following property:

Thread.CurrentThread.IsBackground

By default, a thread created with the Thread class is a foreground thread. To make it a background thread, set its IsBackground property to true.
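As a minimal sketch of the properties described above, the following creates a thread, names it, switches it to background mode, and waits for it. The class name ThreadBasicsDemo and the thread name "demo-worker" are made up for illustration.

```csharp
using System;
using System.Threading;

public static class ThreadBasicsDemo
{
    // Starts a named background thread, waits for it, and returns what it observed.
    public static string Run()
    {
        string observed = null;

        var worker = new Thread(() =>
        {
            Thread current = Thread.CurrentThread;
            observed = current.Name + ", background: " + current.IsBackground;
        });

        worker.Name = "demo-worker";  // a meaningful name helps when debugging
        worker.IsBackground = true;   // threads created this way are foreground by default
        worker.Start();
        worker.Join();                // block the caller until the worker finishes

        return observed;
    }
}
```

Calling ThreadBasicsDemo.Run() returns the string "demo-worker, background: True".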

In applications that have a user interface (UI), there is always at least one main (GUI) thread that is responsible for the state of the interface components. It is important to know that only this so-called “UI thread”, of which an application usually has exactly one (although not always), is allowed to change the state of a view.

Exceptions that occur in child threads also deserve a mention. An exception that goes unhandled in a child thread terminates the application with an unhandled exception, even if the code that starts the thread is wrapped in a try/catch block. Error handling must therefore be moved into the code of the child thread, where it is possible to respond to the specific exception.
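A small sketch of that advice: the try/catch lives inside the thread body, and the caught exception is handed back to the caller. The class and method names are hypothetical, and recording the exception in a local variable is just one possible way to react to it.

```csharp
using System;
using System.Threading;

public static class ThreadExceptionDemo
{
    // Runs a failing operation on a child thread and returns the exception it caught.
    public static Exception RunAndCapture()
    {
        Exception captured = null;

        var worker = new Thread(() =>
        {
            try
            {
                throw new InvalidOperationException("failure inside the worker");
            }
            catch (Exception ex)
            {
                // Handle (or record) the error on the thread where it happened.
                // A try/catch around worker.Start() would never see it.
                captured = ex;
            }
        });

        worker.Start();
        worker.Join();
        return captured;
    }
}
```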

When applying global exception handling (Application_Error in ASP.NET, Application.DispatcherUnhandledException in WPF, Application.ThreadException in WinForms, etc.), it is important to remember that these handlers catch ONLY exceptions that occurred on the UI thread; they will not catch exceptions from additional background threads. We can also subscribe to AppDomain.CurrentDomain.UnhandledException and hook into the handling of all unhandled exceptions within the application domain, but we cannot prevent the application from terminating.

Threads are expensive objects: they take up memory, can hold various system resources, and can be in different states. Creating them takes time. Compared to processes they are less resource intensive, but creating and destroying them is still rather costly. Moreover, the developer is responsible for releasing the resources held by a particular thread. For example, starting many threads to perform a large number of small tasks is inefficient, since the cost of starting the threads can exceed the benefit of using them. To make it possible to reuse threads that are already running and avoid the creation cost, the so-called thread pool (ThreadPool) was introduced.

Thread pool

Within each process, the CLR creates one additional abstraction called the thread pool. It is a set of threads that are idle and ready to perform useful work. When the application starts, the pool starts a minimum number of threads, which wait for new tasks. If there are not enough threads to perform the queued tasks efficiently, the pool launches new ones and reuses them in the same way. The pool is quite smart: it can determine the number of threads it needs, stop unnecessary ones, and start additional ones. You can set the maximum and minimum number of threads, but in practice this is rarely done.

The threads within the pool are divided into two groups: worker threads and I/O threads. Worker threads focus on CPU-bound work, while I/O threads focus on working with I/O devices: the file system, the network card, and others. Performing an I/O operation on a worker thread wastes resources, since the thread just sits waiting for the I/O operation to complete. Separate I/O threads are intended for such tasks. When you use the thread pool, this distinction is hidden from the developer. You can get the number of threads of each kind currently available in the pool using the code:

ThreadPool.GetAvailableThreads(out workerThreads, out completionPortThreads);

To determine whether the current thread was taken from the pool or created manually, check the property:

Thread.CurrentThread.IsThreadPoolThread

You can run a task on a thread taken from the pool using:

- ThreadPool class: ThreadPool.QueueUserWorkItem

- asynchronous delegates (a pair of delegate methods: BeginInvoke() and EndInvoke())

- the BackgroundWorker class

- TPL (Task Parallel Library, which we will talk about below)
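The first option in the list can be sketched as follows. The example queues one work item and reports what the executing thread says about itself; the class name ThreadPoolDemo is an assumption, and ManualResetEventSlim is used here only to wait for the work item to finish.

```csharp
using System;
using System.Threading;

public static class ThreadPoolDemo
{
    // Queues one work item and describes the thread that executed it.
    public static string Run()
    {
        string description = null;

        using (var done = new ManualResetEventSlim(false))
        {
            ThreadPool.QueueUserWorkItem(_ =>
            {
                Thread current = Thread.CurrentThread;
                description = "pool: " + current.IsThreadPoolThread +
                              ", background: " + current.IsBackground;
                done.Set();
            });

            done.Wait(); // wait until the pool thread has run the work item
        }

        // How many more pool threads could be started right now.
        int workerThreads, completionPortThreads;
        ThreadPool.GetAvailableThreads(out workerThreads, out completionPortThreads);
        Console.WriteLine("Available worker threads: " + workerThreads);
        Console.WriteLine("Available I/O threads: " + completionPortThreads);

        return description;
    }
}
```

The returned string is "pool: True, background: True"; the two printed numbers depend on the machine and the runtime configuration.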

The following constructs also use a thread pool, but do it implicitly, which is important to know and remember:

- WCF, Remoting, ASP.NET, ASMX Web Services

- System.Timers.Timer and System.Threading.Timer

- EAP (the event-based asynchronous pattern, we'll talk about it later)

- PLINQ

It is useful to keep in mind the following points:

- Threads from the pool cannot be assigned a name

- Threads from the pool are always background

- Blocking threads from the pool can lead to the launch of additional threads and performance degradation

- You can change the priority of a thread from the pool, but it will be reset to the default value (Normal) when the thread returns to the pool

Synchronization

When building a multithreaded application, it is necessary to ensure that every piece of shared data is protected from being modified by several threads at once. Given that the managed heap is one of the resources shared by threads, and that all threads in an AppDomain have parallel access to the shared application data, access to such data clearly needs to be synchronized. Synchronization ensures that at any point in time only one thread (or a specified number, in the case of a Semaphore) has access to a specific block of code. This guarantees that the data stays consistent and up to date at all times. Let's look at the available synchronization options and common problems. Four kinds of synchronization are usually distinguished:

- Blocking the calling code

- Constructs that restrict access to sections of code (locking)

- Signaling constructs

- Nonblocking synchronization

Blocking

Blocking means that one thread waits for another to complete, or simply pauses for some time. It is usually implemented with the Sleep() and Join() methods of the Thread class, the EndInvoke() method of asynchronous delegates, or tasks (Task) and their waiting mechanisms. The following constructs are examples of a poor approach to waiting:

while (!proceed);

while (DateTime.Now < nextStartTime);

Such constructs consume a lot of processor time without doing any useful work. Meanwhile, the OS and the CLR believe that our thread is busy with important calculations and allocate resources to it accordingly. This approach should always be avoided.

A similar example would be the following construction:

while (!proceed) Thread.Sleep(10);

Here the calling thread periodically goes to sleep for a short time, which is enough for the system to switch contexts and run other tasks in the meantime. This approach is much better than the previous one, but still not perfect. The main problem arises when the proceed flag has to be changed from different threads. Such a construct is an effective solution when we expect the loop condition to be satisfied very soon, after a small number of iterations. If there are many iterations, the system has to keep switching the context of this thread and spend additional resources on it.

Locking

Exclusive locking is used to make sure that only one thread at a time executes a particular section of code. This is necessary to keep the data consistent at any given time. The .NET Framework has quite a few mechanisms for restricting access to sections of code, but we will consider only the most popular ones and, along the way, analyze the most common errors associated with them.

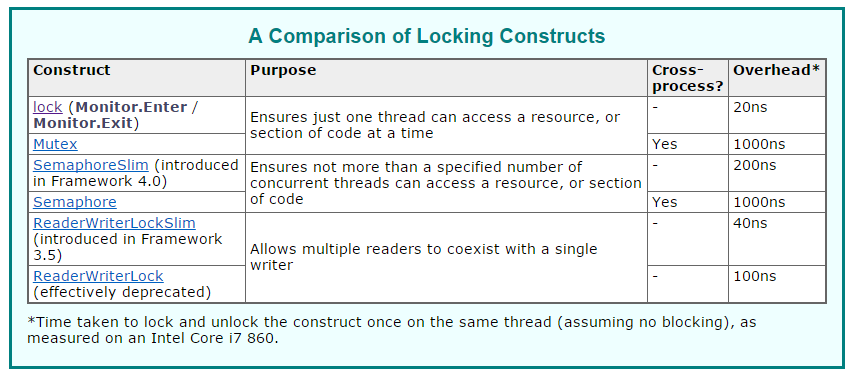

The table shows the most popular locking mechanisms. A Mutex can implement inter-process locking (not just locking between several threads of one process). A Semaphore differs from a Mutex in that it lets you specify the number of threads or processes that can access a specific section of code simultaneously. The lock construct, which is shorthand for a pair of calls to Monitor.Enter() and Monitor.Exit(), is used very often, so we will consider possible problems and recommendations for its use.
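To illustrate how a Semaphore limits the number of simultaneous entrants, here is a rough sketch. The class SemaphoreDemo, its parameters, and the 50 ms of simulated work are invented for this example; the method returns the highest level of concurrency it actually observed inside the protected section.

```csharp
using System;
using System.Threading;

public static class SemaphoreDemo
{
    // Runs threadCount threads through a section that admits at most
    // `limit` threads at once and returns the peak concurrency observed.
    public static int Run(int threadCount, int limit)
    {
        int current = 0, maxObserved = 0;
        var gate = new object(); // protects the two counters above

        using (var semaphore = new Semaphore(limit, limit))
        {
            var threads = new Thread[threadCount];
            for (int i = 0; i < threadCount; i++)
            {
                threads[i] = new Thread(() =>
                {
                    semaphore.WaitOne(); // blocks while `limit` threads are inside
                    try
                    {
                        lock (gate)
                        {
                            current++;
                            if (current > maxObserved) maxObserved = current;
                        }
                        Thread.Sleep(50); // simulated work
                        lock (gate) { current--; }
                    }
                    finally
                    {
                        semaphore.Release();
                    }
                });
                threads[i].Start();
            }

            foreach (Thread thread in threads) thread.Join();
        }

        return maxObserved;
    }
}
```

For example, SemaphoreDemo.Run(6, 2) never returns a value greater than 2, whatever the scheduling.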

Static class members written by developers are not automatically thread-safe, so access to such data must always be synchronized. The only exception is the static constructor: the CLR blocks all calls from other threads to the static members of the class until the static constructor has finished.

When locking with the lock keyword, remember the following rules:

- avoid locking on a type:

lock(typeof(object)) {…}

Each type object exists as a single instance within an application domain, so any other code can lock on it as well, which can lead to deadlocks.

- avoid locking on this:

lock(this) {…}

This approach can also lead to deadlocks.

- use a dedicated field of the class as the synchronization object:

lock(this.lockObject) {…}

- use Monitor.TryEnter(this.lockObject, 3000) when you suspect that the thread may be blocked for a long time. This construct gives up waiting for the lock after the specified time interval.

- use the Interlocked class for atomic operations instead of constructs such as:

lock (this.lockObject) { this.counter++; }
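The rules above can be put together in one small class. This is only a sketch: HitCounter and its members are hypothetical names, chosen to show a private lock object, Monitor.TryEnter with a timeout, and Interlocked for a simple counter.

```csharp
using System.Threading;

public sealed class HitCounter
{
    // Private, dedicated lock object: no outside code can lock on it.
    private readonly object lockObject = new object();
    private int hits;
    private string lastVisitor;

    public int Hits
    {
        get { return Volatile.Read(ref hits); }
    }

    public string LastVisitor
    {
        get { lock (lockObject) { return lastVisitor; } }
    }

    // A plain counter does not need a lock; Interlocked makes the increment atomic.
    public int RegisterHit()
    {
        return Interlocked.Increment(ref hits);
    }

    // Gives up instead of waiting forever if the lock cannot be taken in time.
    public bool TrySetLastVisitor(string name, int timeoutMilliseconds)
    {
        if (!Monitor.TryEnter(lockObject, timeoutMilliseconds)) return false;
        try
        {
            lastVisitor = name;
            return true;
        }
        finally
        {
            Monitor.Exit(lockObject);
        }
    }
}
```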

Signaling

This mechanism allows the thread to stop and wait until it receives a notification from another thread about the possibility of continuing to work.

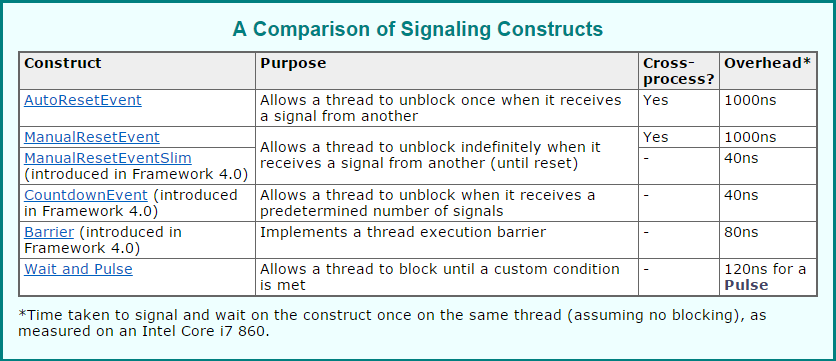

The table shows the most common constructs used for signaling. This approach is often more efficient than the previous ones.
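As one possible illustration of signaling, the sketch below replaces a hand-written polling loop with an event object. ManualResetEventSlim is chosen here as an assumption (the table is not reproduced in the text); the class name SignalingDemo and the 100 ms of simulated preparation are invented.

```csharp
using System.Threading;

public static class SignalingDemo
{
    // The consumer waits for a signal instead of polling a flag in a loop.
    public static string Run()
    {
        string message = null;

        using (var ready = new ManualResetEventSlim(false))
        {
            var producer = new Thread(() =>
            {
                Thread.Sleep(100);          // simulated preparation of the data
                message = "data is ready";
                ready.Set();                // notify the waiting thread
            });
            producer.Start();

            ready.Wait();                   // returns once Set() has been called
            producer.Join();
            return message;
        }
    }
}
```

SignalingDemo.Run() returns "data is ready" after the producer has signaled.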

Nonblocking synchronization

In addition to the mechanisms listed above, the .NET Framework provides constructs that can perform simple operations without blocking, pausing, or waiting for other threads. Because there are no locks and no context switches, the code runs faster, but it is very easy to make a mistake that leads to hard-to-find problems, and in the end your code can turn out even slower than with the ordinary lock approach. One option for such synchronization is the use of so-called memory barriers (Thread.MemoryBarrier()), which prevent optimizations such as caching values in CPU registers and reordering program instructions across the barrier.

Another approach is to mark the relevant fields of a class with the volatile keyword. It forces the compiler to generate memory barriers on every read of and write to a field marked volatile. This approach works well when there is a single thread, or when some threads only read while others only write. If a thread needs to read and then modify the value, use the lock statement instead.
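A typical case that fits the "one writer, one reader" description is a stop flag. The following is a sketch under that assumption; StoppableWorker and its members are hypothetical names.

```csharp
using System.Threading;

public sealed class StoppableWorker
{
    // Written by the controlling thread, read by the worker thread.
    private volatile bool stopRequested;
    private long iterations;

    public long Iterations
    {
        get { return Interlocked.Read(ref iterations); }
    }

    // Called from another thread to ask the loop to finish.
    public void Stop()
    {
        stopRequested = true;
    }

    // Loops until Stop() is called; the volatile read prevents the flag
    // from being cached outside the loop.
    public void Run()
    {
        while (!stopRequested)
        {
            Interlocked.Increment(ref iterations);
            Thread.Sleep(1); // simulated unit of work
        }
    }
}
```

The worker would normally be started with new Thread(worker.Run).Start() and stopped later by calling worker.Stop() from the controlling thread.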

Both of the features above are quite difficult to understand and require solid knowledge of memory models and of optimizations at different levels, so they are rarely used. Apply them very carefully, and only when you understand what you are doing and why.

The simplest and recommended approach for atomic operations is the Interlocked class mentioned above. Behind the scenes it also generates memory barriers, and we do not need to worry about additional locks. The class has quite a few methods for atomic operations, such as increment, decrement, exchange, compare-and-exchange, and so on.
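Beyond simple increments, the compare-and-exchange operation can be used to build small lock-free updates. The sketch below keeps a running maximum; MaxTracker is an invented name, and the retry loop is a common pattern rather than anything prescribed by the text.

```csharp
using System.Threading;

public sealed class MaxTracker
{
    private int max = int.MinValue;

    public int Max
    {
        get { return Volatile.Read(ref max); }
    }

    // Atomically raises the stored maximum to `candidate` if it is larger.
    public void Report(int candidate)
    {
        while (true)
        {
            int snapshot = Volatile.Read(ref max);
            if (candidate <= snapshot) return; // nothing to update

            // Write only if no other thread changed the value in the meantime;
            // otherwise loop and try again with the fresh value.
            if (Interlocked.CompareExchange(ref max, candidate, snapshot) == snapshot) return;
        }
    }
}
```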

Collections

It is useful to know that the System.Collections.Concurrent namespace defines quite a few thread-safe collections for different tasks. The most common are:

BlockingCollection

ConcurrentBag

ConcurrentDictionary

ConcurrentQueue

ConcurrentStack

In most cases, it makes no sense to implement your own similar collection - it is much simpler and more reasonable to use ready-made tested classes.
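For instance, counting words from several threads needs no explicit locks when a ConcurrentDictionary is used. This is a minimal sketch; the class ConcurrentCollectionsDemo and the method CountWords are hypothetical.

```csharp
using System.Collections.Concurrent;
using System.Threading.Tasks;

public static class ConcurrentCollectionsDemo
{
    // Counts occurrences of each word, processing the input in parallel.
    public static ConcurrentDictionary<string, int> CountWords(string[] words)
    {
        var counts = new ConcurrentDictionary<string, int>();

        Parallel.ForEach(words, word =>
        {
            // Inserts 1 for a new key or atomically replaces the existing count.
            counts.AddOrUpdate(word, 1, (key, existing) => existing + 1);
        });

        return counts;
    }
}
```

For the input { "a", "b", "a", "a" } the resulting dictionary maps "a" to 3 and "b" to 1.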

Asynchrony

Asynchrony deserves separate attention. On the one hand, it is closely related to running work on additional threads; on the other, it brings its own questions and theory, which are also worth considering.

Let us illustrate the difference between synchronous and asynchronous approaches.

Suppose you want pizza for lunch at the office, and you have two options:

1st, synchronous option: walk to the pizzeria, choose a pizza, place the order, wait until it is ready, then carry the pizza back to the office or eat it at the pizzeria, after which you return and continue working. While walking and waiting for the order you are in standby mode and cannot do any other useful work (for simplicity, this means office work that earns money and cannot be done outside the workplace).

2nd, asynchronous option: order the pizza by phone. After placing the order you are not blocked; you can do useful work at your desk while the order is being prepared and delivered to the office.

Evolution

As the .NET Framework evolved, many new approaches for starting asynchronous operations appeared. The first solution for asynchronous tasks was the APM (Asynchronous Programming Model). It is based on asynchronous delegates and a pair of methods named BeginOperationName and EndOperationName, which respectively begin and end the asynchronous operation OperationName. After calling BeginOperationName, the application can continue executing instructions on the calling thread while the asynchronous operation runs on another. For each call to BeginOperationName, the application must also call EndOperationName to get the results of the operation.

This approach can be found in many technologies and classes, but it is fraught with complication and redundancy of the code.
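A familiar instance of the Begin/End pair is Stream.BeginRead and Stream.EndRead. The sketch below uses a MemoryStream purely to stay self-contained (with in-memory data the read finishes almost at once); the class ApmDemo and the method ReadAll are invented names.

```csharp
using System;
using System.IO;
using System.Text;

public static class ApmDemo
{
    // Reads the given text back through the Begin/End method pair.
    public static string ReadAll(string text)
    {
        byte[] source = Encoding.UTF8.GetBytes(text);
        byte[] buffer = new byte[source.Length];

        using (var stream = new MemoryStream(source))
        {
            // Begin the operation; no callback and no state object are passed here.
            IAsyncResult pending = stream.BeginRead(buffer, 0, buffer.Length, null, null);

            // ... the caller is free to do other work at this point ...

            // Every Begin call needs a matching End call, which blocks until
            // the operation completes and returns its result.
            int bytesRead = stream.EndRead(pending);
            return Encoding.UTF8.GetString(buffer, 0, bytesRead);
        }
    }
}
```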

In version 2.0, a new model called EAP (Event-based Asynchronous Pattern) was introduced. A class that supports the event-based asynchronous pattern has one or more MethodNameAsync methods, which may mirror synchronous versions that perform the same action on the current thread. The class may also contain a MethodNameCompleted event and a MethodNameAsyncCancel method (or simply CancelAsync) to cancel the operation. This approach is common when working with services: Silverlight uses it to access the server side, and Ajax is essentially an implementation of the same approach. Beware of long chains of linked event calls, where the completion of one long-running operation starts the next, whose completion starts the next, and so on; this can lead to deadlocks and unexpected results. Exceptions and the results of the asynchronous operation are available only in the event handler, through the Error and Result properties of the event arguments.
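BackgroundWorker is one readily available class that follows this event-based style, with RunWorkerAsync and the RunWorkerCompleted event. The following sketch shows how Error and Result are read in the completion handler; the class EapDemo and the doubling "work" are made up, and the example assumes a console-style host without a SynchronizationContext.

```csharp
using System;
using System.ComponentModel;
using System.Threading;

public static class EapDemo
{
    // Doubles the input on a background thread and reports the outcome as text.
    public static string Run(int input)
    {
        string outcome = null;

        using (var finished = new ManualResetEventSlim(false))
        using (var worker = new BackgroundWorker())
        {
            worker.DoWork += (sender, e) =>
            {
                int value = (int)e.Argument;
                if (value < 0) throw new ArgumentOutOfRangeException("input");
                e.Result = value * 2;
            };

            worker.RunWorkerCompleted += (sender, e) =>
            {
                // Check Error first: reading Result after a failure would throw.
                outcome = e.Error != null
                    ? "error: " + e.Error.GetType().Name
                    : "result: " + e.Result;
                finished.Set();
            };

            worker.RunWorkerAsync(input); // returns immediately
            finished.Wait();              // blocking here only to keep the demo simple
        }

        return outcome;
    }
}
```

EapDemo.Run(21) returns "result: 42", while EapDemo.Run(-1) returns "error: ArgumentOutOfRangeException".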

The .NET Framework 4.0 introduced an advanced model called TAP (Task-based Asynchronous Pattern), which is based on tasks. TPL and PLINQ are also built on them, but we will talk about them in detail next time. This implementation of the asynchronous model is based on the Task and Task<TResult> types in the System.Threading.Tasks namespace, which represent arbitrary asynchronous operations. TAP is the recommended asynchronous pattern for developing new components.

It is important to understand that a thread (Thread) and a task (Task) are very different things. A Thread encapsulates a thread of execution, while a Task is a job (or simply an asynchronous operation) that can run in parallel. A free thread from the thread pool is used to execute the task. Upon completion, the thread is returned to the pool, and the user of the class receives the result of the task. If you need to start a long operation and do not want to occupy one of the pool threads for a long time, you can use the TaskCreationOptions.LongRunning option. Tasks can be created and started in various ways, and it is often unclear which one to choose. The difference is mainly in ease of use and in the number of settings available in a particular method.

The latest versions of the framework have new features, based on the same tasks, that simplify writing asynchronous code and make it more readable. The async and await keywords were introduced for this purpose; they mark asynchronous methods and the calls to them. Asynchronous code becomes very similar to synchronous code: we simply call the desired operation, and all the code that follows the call is automatically wrapped in a kind of “callback” that is invoked after the asynchronous operation completes. This approach also makes it possible to handle exceptions in a synchronous manner, to wait explicitly for an operation to complete, and to define actions to perform under particular conditions. For example, we can add code that runs only if the asynchronous operation throws an exception. But not everything is so simple, even with the mass of information available on this topic.
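As a rough sketch of the points above, the following shows two ways of starting tasks (Task.Run and Task.Factory.StartNew with TaskCreationOptions.LongRunning) and exception handling around await. The class TapDemo, its methods, and the arithmetic are invented for illustration.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class TapDemo
{
    // Computes a*a + b*b using two tasks started in different ways.
    public static async Task<int> SumOfSquaresAsync(int a, int b)
    {
        // Short CPU-bound work: a pool thread is a good fit.
        Task<int> left = Task.Run(() => a * a);

        // LongRunning hints that the work should not occupy a pool thread.
        Task<int> right = Task.Factory.StartNew(
            () => { Thread.Sleep(100); return b * b; },
            CancellationToken.None,
            TaskCreationOptions.LongRunning,
            TaskScheduler.Default);

        int[] results = await Task.WhenAll(left, right);
        return results[0] + results[1];
    }

    // Exceptions thrown inside the task surface at the await
    // and are handled with an ordinary try/catch.
    public static async Task<string> SafeDivideAsync(int x, int y)
    {
        try
        {
            int quotient = await Task.Run(() => x / y);
            return "ok: " + quotient;
        }
        catch (DivideByZeroException)
        {
            return "division by zero";
        }
    }
}
```

Awaiting TapDemo.SumOfSquaresAsync(3, 4) yields 25, and TapDemo.SafeDivideAsync(1, 0) yields "division by zero".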

async / await

We will review some basic guidelines for using these keywords, along with some interesting examples. The most common recommendation is to use asynchrony “all the way”: use only one approach within a particular call chain or block of functionality, and do not mix synchronous calls with asynchronous ones. A classic example of this problem:

public static class DeadlockDemo
{
    private static async Task DelayAsync()
    {
        await Task.Delay(1000);
    }

    public static void Test()
    {
        var delayTask = DelayAsync();
        delayTask.Wait();
    }
}

This code works fine in a console application, but when the DeadlockDemo.Test() method is called from the GUI thread, a deadlock occurs. The reason is how await handles contexts. By default, when an incomplete Task is awaited, the current context is captured and used to resume the method when the task completes. The context is the current SynchronizationContext unless it is null, as is the case in console applications; in that case it is the current TaskScheduler (the thread pool context). GUI and ASP.NET applications have a SynchronizationContext that allows only one piece of code to run at a time. When the await expression completes, it tries to execute the rest of the async method within the captured context. But that context already has a thread that is (synchronously) waiting for the async method to complete. Each is waiting for the other, which causes the deadlock.

It is also recommended to avoid async void methods (asynchronous methods that return nothing). Async methods can return Task, Task<TResult>, or void. The last option was kept for backward compatibility and makes it possible to write asynchronous event handlers. But keep in mind some specific differences of such methods, namely:

- Exceptions cannot be caught by standard means

- Since such methods do not return a task, our options for working with them are limited. For example, we cannot wait for them to complete with standard tools or build a chain of continuations, as we can with Task objects.

- Such methods are difficult to test, since they have differences in error handling and composition.
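The difference can be sketched as follows: a method that returns Task can be awaited inside try/catch, and an async void event handler (where one is unavoidable) can stay thin by handling errors itself. AsyncTaskDemo, FailAsync and OnClick are hypothetical names.

```csharp
using System;
using System.Threading.Tasks;

public static class AsyncTaskDemo
{
    // Returns Task, so callers can await it and observe its exception.
    private static async Task FailAsync()
    {
        await Task.Delay(10);
        throw new InvalidOperationException("failed");
    }

    // The exception surfaces at the await and is caught in the usual way.
    public static async Task<bool> CaughtAsync()
    {
        try
        {
            await FailAsync();
            return false;
        }
        catch (InvalidOperationException)
        {
            return true;
        }
    }

    // An async void handler cannot be awaited by its caller, so it has to
    // handle its own errors; the real work stays in Task-returning methods.
    public static async void OnClick(object sender, EventArgs e)
    {
        try
        {
            await FailAsync();
        }
        catch (InvalidOperationException ex)
        {
            Console.WriteLine("Handled: " + ex.Message);
        }
    }
}
```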

Always try to configure the context whenever possible. As already mentioned, the code inside the asynchronous method after await will require the synchronization context in which it was called. This is a very useful feature, especially in GUI applications, but sometimes it is not necessary. For example, when code does not need to access user interface elements. The previous deadlock example can be easily fixed by changing just one line:

await Task.Delay(1000).ConfigureAwait(false);

This recommendation is especially relevant when developing libraries that know nothing about the GUI.
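In library code this usually means adding ConfigureAwait(false) to every await. A minimal sketch, assuming a hypothetical helper TextLibrary.CountLinesAsync that never touches UI elements:

```csharp
using System.IO;
using System.Text;
using System.Threading.Tasks;

public static class TextLibrary
{
    // Counts the lines in a stream; the stream is disposed when reading ends.
    public static async Task<int> CountLinesAsync(Stream input)
    {
        using (var reader = new StreamReader(input, Encoding.UTF8))
        {
            int lines = 0;

            // ConfigureAwait(false): the continuation does not need the
            // caller's SynchronizationContext, so it is not captured.
            while (await reader.ReadLineAsync().ConfigureAwait(false) != null)
            {
                lines++;
            }

            return lines;
        }
    }
}
```

Because no await in the method resumes on the captured context, a caller that blocks on the returned task from a UI thread does not run into the deadlock described earlier, although awaiting the result is still the better choice.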

Consider a few more examples of the use of new keywords, as well as some features of their use:

1)

private static async Task Test()
{
    Thread.Sleep(1000);
    Console.Write("work");
    await Task.Delay(1000);
}

private static void Demo()
{
    var child = Test();
    Console.Write("started");
    child.Wait();
    Console.Write("finished");
}

“work” appears on the screen first, then “started”, and only then “finished”. At first glance, it seems that “started” should be displayed first. The reason is that a method marked with the async keyword does not start additional threads and runs synchronously until it reaches the await keyword inside. Only then is a Task object returned to the caller, with the rest of the method left pending. Also, do not forget that this code has the deadlock problem we examined above. To fix the output order in this example, replace the line with Thread.Sleep(...) with await Task.Delay(...).
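The effect of that fix can be checked with a small sketch that records the order of events instead of printing them. OrderingDemo and its log are invented for this purpose, the delay is shortened to 200 ms, and the blocking Wait() is acceptable only because the sketch assumes a console-style host without a SynchronizationContext.

```csharp
using System.Collections.Concurrent;
using System.Threading.Tasks;

public static class OrderingDemo
{
    // Fixed version of Test(): it awaits first, so control returns to the
    // caller before "work" is logged.
    private static async Task TestAsync(ConcurrentQueue<string> log)
    {
        await Task.Delay(200);
        log.Enqueue("work");
    }

    // Returns the recorded events in the order they happened.
    public static string Run()
    {
        var log = new ConcurrentQueue<string>();

        Task child = TestAsync(log);
        log.Enqueue("started");
        child.Wait(); // would deadlock on a UI thread, as discussed above
        log.Enqueue("finished");

        return string.Join(",", log);
    }
}
```

With the delay awaited up front, "started" is recorded well before the 200 ms elapse, so the sequence comes out as started, work, finished.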

2)

async Task Demo()
{
    Console.WriteLine("Before");
    Task.Delay(1000);
    Console.WriteLine("After");
}

One might assume that there will be a one-second pause before the second message, but this is not so: both messages are displayed without delay. This is because Task.Delay(), like many other asynchronous methods, returns a Task object, and we ignored it. We do not wait for it to complete in any of the possible ways, so both messages are displayed immediately.

3)

Console.WriteLine("Before");
await Task.Factory.StartNew(async () => { await Task.Delay(1000); });
Console.WriteLine("After");

As in the previous example, the output is not paused for one second. This is because StartNew() accepts a delegate and returns Task<T>, where T is the type returned by the delegate. In this example the delegate returns a Task, so the result is a Task<Task>. The await only waits for the outer task, which completes immediately and returns the inner Task created in the delegate; that inner task is then ignored. You can fix this problem by rewriting the code as follows:

await Task.Run(async () => { await Task.Delay(1000); });

4)

async Task TestAsync()
{
    await Task.Delay(1000);
}

void Handler()
{
    TestAsync().Wait();
}

Despite the use of the keywords, this code is not asynchronous: it runs synchronously, because we create a task and explicitly wait for it to complete. The calling thread is blocked until the running task completes.

Conclusion

As you can see, developers have quite a lot of options for building multithreaded applications. It is important not only to know the theory, but also to be able to apply effective approaches to specific problems. For example, direct use of the Thread class almost certainly indicates outdated code in the project, since the need to use it directly is very rare. In ordinary situations, using the pool is almost always the justified choice.

When using multithreading in applications with a GUI, do not forget about the additional restrictions it entails!

It is also worth remembering ready-made implementations such as the thread-safe collections. They eliminate the need to write additional code and prevent possible implementation errors. And do not forget about the peculiarities of the new keywords.