1155 vs 2011. Only old people go to battle

In 2017, AMD opened Pandora's box once again by launching mainstream processors with more than four cores whose per-core performance was at least comparable to modern Intel processors at equal frequencies. Full parity did not work out, but the gap is no longer 1.5x to 2x depending on the type of load, as it was in the FX days. In synthetic tests, Ryzen cores perform roughly on par with Ivy Bridge cores (the 3xxx series). The move turned out to be strong enough that the blue team had to hastily release six-core processors on the updated LGA1151 socket. What am I getting at? Since last year, fast processors with six or more cores have moved from the narrow HEDT niche into the consumer segment. Among other things it means

What is a modern mainstream 6-8-core processor? It means a frequency of 3.5-4.5 GHz, a relatively fast inter-core interconnect of one architecture or another, and dual-channel DDR4 memory with an effective frequency of 2.4 GHz or higher. At the same time, the cores of that same Ryzen set no performance records. But what if, instead of a modern and rather expensive platform, we take Intel's old LGA2011 platform, given that the supply of decommissioned E5 v1 and v2 server processors for this socket now clearly exceeds demand? Particularly enterprising Chinese manufacturers, seeing this situation, quickly set up mass production of motherboards for the platform, while the remaining components, processor and memory, are relatively inexpensive on the secondary market and do not wear out much with age. The per-channel bandwidth of registered DDR3 memory will be lower than that of DDR4,

I don't have a Ryzen platform at hand, so we will compare the configurations that are available, all of them from the Ivy Bridge generation. Admittedly, these platforms were hardly comparable when new (at least in price), but time is a great equalizer.

The following configurations took part in the test:

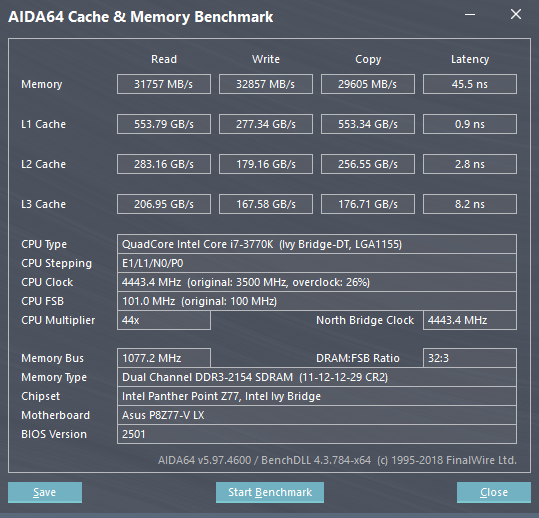

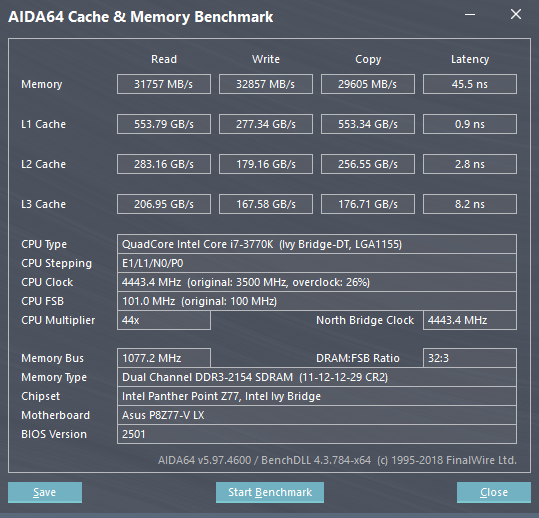

MB : ASUS P8Z77-V LX

RAM : 2 x DDR3 Kingston Hyper-X 8GB 1600 @ 2150MHz

CPU : Intel Core i7-3770K @ 4440MHz

4 cores, 8 threads, 8 MB L3 cache, nominal frequency 3.5 GHz with boost up to 3.9 GHz. Unlocked multiplier, dual-channel DDR3 memory controller.

The cost of this particular kit is unknown; it has been serving me since 2012.

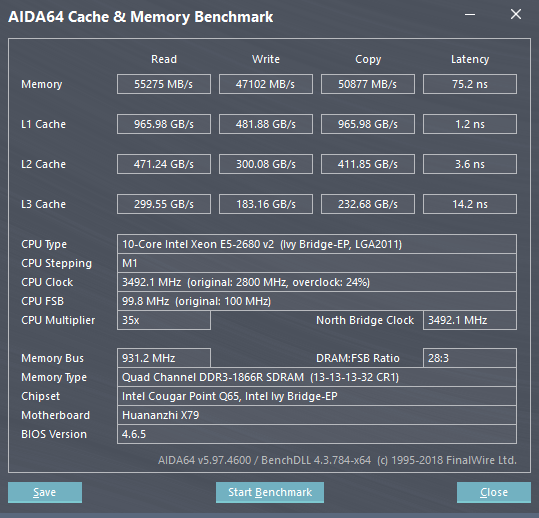

MB : Huanan X79 v2.49p (BIOS 4.6.5)

RAM : 4 x DDR3 Samsung 4GB 1333 @ 1866MHz

CPU : Intel Xeon E5-1650v2 @ 4100MHz

LGA2011, 6 cores, 12 threads, 12 MB L3 cache, nominal frequency 3.5 GHz with boost up to 3.9 GHz. Unlocked multiplier, quad-channel DDR3 memory controller with support for registered memory.

The kit cost 24 thousand rubles.

MB : Huanan X79 v2.49p

RAM : 4 x DDR3 Samsung 4GB 1333 @ 1866

CPU : Intel Xeon E5-2680v2

10 cores, 20 threads, 25 MB L3 cache, nominal frequency 2.8 GHz with boost up to 3.5 GHz. Locked multiplier, quad-channel DDR3 memory controller with support for registered memory.

The processor cost 14 thousand rubles; it was bought for experiments as an addition to the previous kit.

Outside the scoring is a Kaby Lake platform:

MB : Gigabyte Z270M-D3H

RAM: 2 x DDR4 Kingston Hyper-X 16GB 2400 @ 3200MHz

CPU : Intel Core i5-7600K @ 4500

4 cores, 4 threads, 6 MB L3 cache, nominal frequency 3.8 GHz with boost up to 4.2 GHz. Unlocked multiplier.

No direct measurements were taken on it, but I have a number of observations from using it. Where a comparison with it is appropriate, it is mentioned.

The following components were also used in all tests:

Drive: NVME SSD ADATA SX8000NP

Video card: Palit GeForce GTX1080Ti

Platform stability was checked with consecutive 10-15 minute runs of OCCT Large Data Set and the AVX version of Linpack. The first test is very good at exposing problems with memory or its controller, the second at exposing problems with the power delivery circuits and the cooling system.

The tests were conducted under Windows 10 Pro 1803 (build 17134.320).

Spectre and Meltdown mitigations were disabled on all test systems using the InSpectre utility.

All tests were run several times (at least three or four). The result of the first run was discarded, since it is noticeably more affected by I/O delays. The best result was taken; the remaining runs served to check for possible anomalies.
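The run-selection rule described above can be written down in a few lines. This is only a sketch: the function name `best_of_runs` and the sample numbers are made up for illustration, and "best" is taken to mean the maximum for scores and the minimum for times.

```python
def best_of_runs(results, higher_is_better=True):
    """Drop the first (warm-up) run, then return the best remaining result."""
    if len(results) < 2:
        raise ValueError("need a warm-up run plus at least one measured run")
    measured = results[1:]  # the first run is discarded (I/O delays)
    return max(measured) if higher_is_better else min(measured)


# Hypothetical numbers, not taken from the article's charts.
benchmark_scores = [1010, 1042, 1038, 1045]    # points, higher is better
export_seconds = [230.0, 212.5, 211.0, 213.2]  # seconds, lower is better

print(best_of_runs(benchmark_scores))
print(best_of_runs(export_seconds, higher_is_better=False))
```

The discarded runs are still worth a look: a large spread between them is exactly the kind of anomaly the extra runs are meant to catch.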

On the LGA1155 platform, the peak performance of NVMe drives in synthetic tests is limited by the bandwidth of the PCI Express 2.0 bus. On the Chinese motherboard, the SSD ran without interface restrictions in both the PCI-E slot and the M.2 slot.
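To put a number on that limit, here is a rough estimate of the theoretical x4 link bandwidth for both PCI Express generations. It uses only the published per-lane transfer rates and line encodings (5 GT/s with 8b/10b for 2.0, 8 GT/s with 128b/130b for 3.0); the helper name is made up, and real drives will land somewhat lower because of protocol overhead.

```python
# Per-lane transfer rate in GT/s and line-encoding efficiency per generation.
PCIE_GENERATIONS = {
    2: (5.0, 8 / 10),     # PCIe 2.0: 5 GT/s, 8b/10b encoding
    3: (8.0, 128 / 130),  # PCIe 3.0: 8 GT/s, 128b/130b encoding
}


def pcie_bandwidth_gb_s(generation, lanes):
    """Theoretical one-direction link bandwidth in GB/s (10^9 bytes)."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    gbit_per_lane = rate_gt_s * efficiency  # usable Gbit/s per lane
    return gbit_per_lane / 8 * lanes        # bits to bytes, times lane count


print(f"PCIe 2.0 x4: {pcie_bandwidth_gb_s(2, 4):.2f} GB/s")
print(f"PCIe 3.0 x4: {pcie_bandwidth_gb_s(3, 4):.2f} GB/s")
```

A 2.0 x4 link tops out at about 2 GB/s, roughly half of what a 3.0 x4 link offers, which is why a fast NVMe drive can hit the ceiling in sequential synthetic tests on the older platform.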

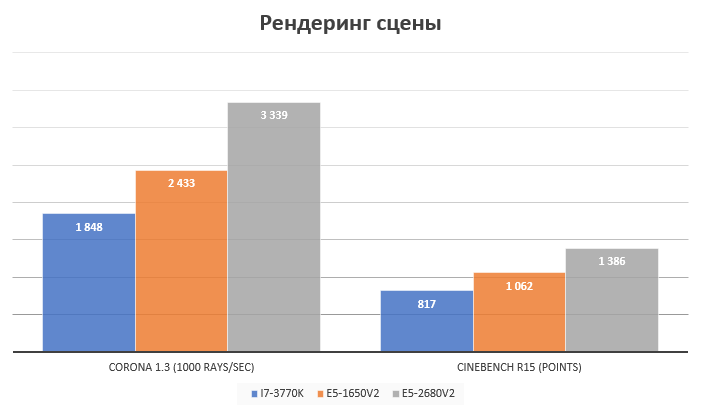

Rendering, along with video encoding, belongs to the class of tasks that parallelize almost perfectly and, in theory, scale almost linearly with the number of CPU cores. The tests confirmed this.

I took a set of paintball photos shot with a good old Nikon D60 in NEF format, 329 frames in total. Import time, preview building and other operations were not measured, because it is not clear how to measure them with sufficient accuracy. What was measured is the time needed to convert this batch of files to JPEG, estimated as the difference between the modification times of the first and the last exported JPEG. Not the most accurate metric, I agree: the 3-5 seconds at the start of the export are not counted. But the test is easy to automate, you do not have to sit with a stopwatch watching the progress as you would when trying to estimate import time, and all systems are on equal footing. The disk was definitely not a bottleneck: both the source files and the export folder were on the SSD.
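The measurement described above is easy to script. A minimal sketch, assuming the exported files sit in one folder and carry a `.jpg` extension (the function name and the pattern are my own choices, not something the tested applications dictate):

```python
from pathlib import Path


def export_duration_seconds(export_dir, pattern="*.jpg"):
    """Time between the earliest and latest modification time of exported files.

    Like the manual method, this ignores the few seconds that pass between
    starting the export and the first file being written.
    """
    mtimes = [p.stat().st_mtime for p in Path(export_dir).glob(pattern)]
    if len(mtimes) < 2:
        raise ValueError("need at least two exported files to measure a span")
    return max(mtimes) - min(mtimes)
```

Calling `export_duration_seconds(r"D:\export")` (a hypothetical path) after a batch export returns the span in seconds. Note that on case-sensitive file systems the pattern will not match `.JPG`.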

At first glance the results were rather strange. One would expect RAW development to parallelize well. Even if developing a single frame cannot be spread across several cores, there were more than three hundred pictures in the queue: just hand each frame to its own core. But judging by the CPU load, which ranged from 40% on the i7 down to 20% on the E5-2680v2, the old Lightroom cannot do this. The CPU load graph shows that it cannot use more than 4 threads, hence the minimal gain. It is surprising that there is any gain at all, since per-thread speed on the 10-core should be noticeably lower than on the overclocked i7. In short, this 2013-era application is tuned for processors with two to four threads at most and cannot benefit from modern (and not so modern) many-threaded processors.

After the disappointing Lightroom 5.7 results, I decided to check how things stand with the current version of Adobe's software.

Testing was again done with real-world settings. Hardware acceleration of UI rendering was enabled (judging by monitoring, Lightroom Classic can draw using DX12), as was OpenCL for Adobe Camera RAW: absent compatibility problems, no one in their right mind would disable these options, and I am testing the performance of the system as a whole in specific tasks.

Adobe Lightroom Classic CC performed much better than the 2013 version. Besides being much faster on the i7, the latest version turned the extra pair of cores into a reduction of batch processing time by almost a minute (55 seconds, to be exact), that is, by about 26%. Adding another 4 cores gave a further speedup of 51 seconds, or 30%. That is excellent in itself, and comparing the i7 with the E5-2680v2 is downright impressive: the ten-core does the job exactly 2 times faster than the quad-core. Scaling is better than linear, given that the i7 runs at 4.44 GHz while the E5-2680v2 squeezes out 3.2 GHz in boost when all cores are loaded.
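The "better than linear" claim can be checked with simple arithmetic. The sketch below assumes the i7 export took 211 seconds (the Lightroom CC figure quoted in the Corel comparison) and reconstructs the two Xeon times from the quoted savings of 55 and 51 seconds, so treat the absolute times as approximate. "Cores times GHz" is a crude yardstick of my own choosing: it ignores Hyper-Threading, memory channels and the OpenCL share of the work.

```python
# Times reconstructed from figures quoted in the text (approximate).
t_i7 = 211.0             # i7-3770K, 4 cores @ 4.44 GHz
t_1650 = t_i7 - 55.0     # E5-1650v2: 55 s faster than the i7
t_2680 = t_1650 - 51.0   # E5-2680v2: another 51 s faster


def speedup(t_base, t_new):
    """How many times faster the new configuration finishes the batch."""
    return t_base / t_new


# Crude compute budget: cores multiplied by all-core frequency in GHz.
budget_i7 = 4 * 4.44
budget_2680 = 10 * 3.2

print(round(speedup(t_i7, t_2680), 2))      # measured speedup: 2.01
print(round(budget_2680 / budget_i7, 2))    # cores x GHz ratio: 1.8
print(round(100 * (t_i7 - t_1650) / t_i7))  # first step saves 26 percent
```

By this yardstick the ten-core has about 1.8 times the raw compute of the overclocked i7 yet finishes about 2 times faster, which matches the observation that scaling here is slightly better than linear.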

The difference in behavior between versions of the same application clearly shows that you cannot speed up software simply by throwing a bunch of cores at it. The software has to be up to it as well.

Yes, the new Lightroom Classic CC is good, but the trial will end soon, and I have no desire to shell out 900 rubles a month for a subscription. I would rather pay the full price once, but Adobe does not offer that option. The last Lightroom that could be bought rather than rented is version 6. But there is no reason to expect that version to be updated, nor to expect a noticeable discount when upgrading from 5.7 to 6.x. Not interested.

So what can replace it? Nikon Capture NX? Maybe, but it consistently crashed on me after processing 2-3 frames from the queue, so no tests could be run. It seems one of the recent Windows 10 updates broke it. In any case, even if its multithreading is fine, there is no point in expecting it to get OpenCL support, and without that it is doomed to lose to Lightroom Classic by a wide margin.

RawTherapee? Not even funny. Despite its rich functionality, I had to force myself to use this miracle of open source, which a UX designer's hand has obviously never touched. Freezing for 15 seconds after a click on a folder with no progress indication at all? Processing time several times longer than even Lightroom 5.7 (I could not get a measurement: after 5 minutes RawTherapee had chewed through about a third of the batch, and I got tired of waiting)? That is normal. Probably. For someone. Maybe. But I would rather pay for a decent interface and normal speed. What paid alternatives are there for Windows? Who else makes graphics software?

Corel, with its Corel AfterShot Pro 3. And with bold claims along the lines of “Corel AfterShot Pro 3 is up to 4x faster than Adobe Lightroom”. Interesting already.

Corel did not deliver on the fourfold speed, of course, but on the I7-3770K Corel AfterShot Pro 3 beats Adobe Lightroom CC by about 84 seconds out of 211, or 40%. On the E5-1650v2 there is no trace of fourfold superiority over Lightroom either; moreover, the gap narrowed, but it still won by 36 seconds, or 24%, on 300+ images. On 20 threads, however, an unpleasant surprise awaited me: batch processing time grew by a factor of one and a half compared to 12 threads. WTF?! And with HT disabled? On 10 threads it works quite normally and finishes half a minute sooner than on the six-core. And if you simply cap the number of threads by hand and turn HT back on? About the same. In other words, with more than 10 threads AfterShot Pro starts to stumble badly out of nowhere. Well, that is a result too, and further proof that multi-core CPUs need appropriate software to show what they can do. Corel AfterShot Pro 3 was clearly tuned for 4-8 threads.

The choice of games is driven not by how representative the sample is, but by what I had at hand among games with built-in benchmarks that give more or less stable and repeatable results. There is no other logic behind it.

All games were run at maximum settings except for anti-aliasing. AA was disabled to avoid hitting the fill rate limit. The averaged minimum FPS was used as the main indicator of how comfortable the game is on the tested hardware at maximum settings. The "very rare events" metric, the 0.1% low FPS, is more an indicator of random events: if microfreezes do not come in a steady stream every few seconds, it seems to me that comfort is not particularly affected. Naturally, I checked the frame-time graph, and if a game did have microfreeze problems, they were noted. Measurements were taken from the game's built-in benchmark; where the benchmark does not report statistics explicitly, as in GTA V, RivaTuner Statistics Server was used to record the performance figures.
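For readers unfamiliar with the "1% low" and "0.1% low" metrics, here is one common way to derive them from a frame-time log. This is a sketch under an assumption: the lows are defined as the average FPS over the slowest 1% (or 0.1%) of frames. Monitoring tools may use a different definition, such as a percentile of the frame-time distribution, so the numbers need not match their output exactly.

```python
def fps_stats(frame_times_ms):
    """Average, minimum and 'low' FPS figures from per-frame times in ms."""
    n = len(frame_times_ms)
    if n == 0:
        raise ValueError("empty frame-time log")
    slowest_first = sorted(frame_times_ms, reverse=True)

    def low(fraction):
        # Average FPS over the slowest `fraction` of frames (at least one).
        k = max(1, int(n * fraction))
        return 1000.0 * k / sum(slowest_first[:k])

    return {
        "avg": 1000.0 * n / sum(frame_times_ms),
        "min": 1000.0 / slowest_first[0],
        "low_1": low(0.01),
        "low_0_1": low(0.001),
    }


# Synthetic trace: 990 frames at 10 ms plus 10 stutter frames at 20 ms.
trace = [10.0] * 990 + [20.0] * 10
for name, value in fps_stats(trace).items():
    print(f"{name}: {value:.1f} FPS")
```

On this synthetic trace the average stays near 99 FPS while the 1% low drops to 50 FPS, which shows why the lows are a better indicator of perceived smoothness than the average.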

GTA V is a relatively middle-aged multiplatform game that uses the DX11 graphics API on Windows. Since it came out on consoles, its multithreading support is pretty good, and its demands on per-core performance are moderate, because the game supports previous-generation consoles. Since the built-in test does not output statistics, RivaTuner Statistics Server was used to collect the results. To make the processor's job harder, the options that extend detail and shadow draw distance were enabled.

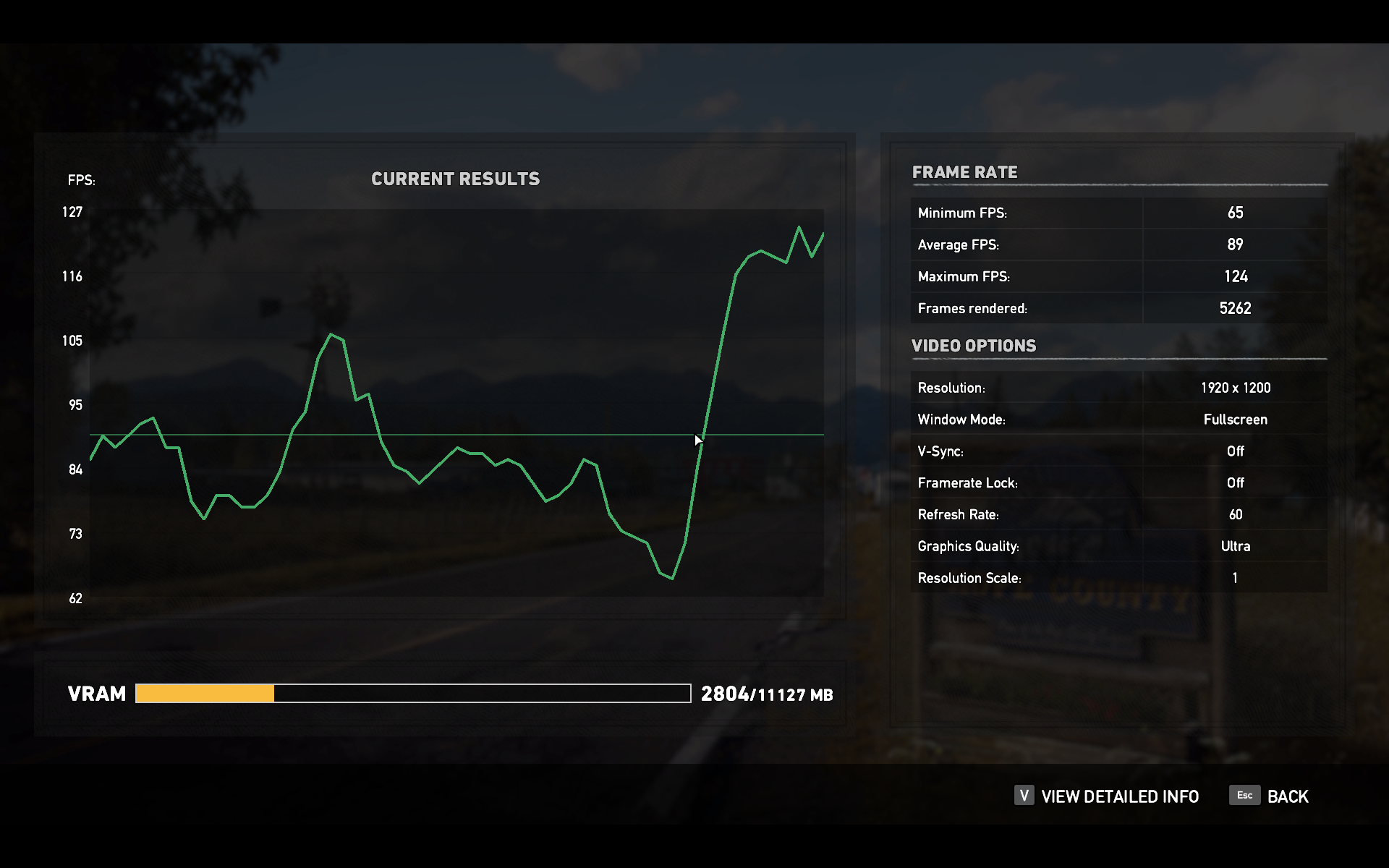

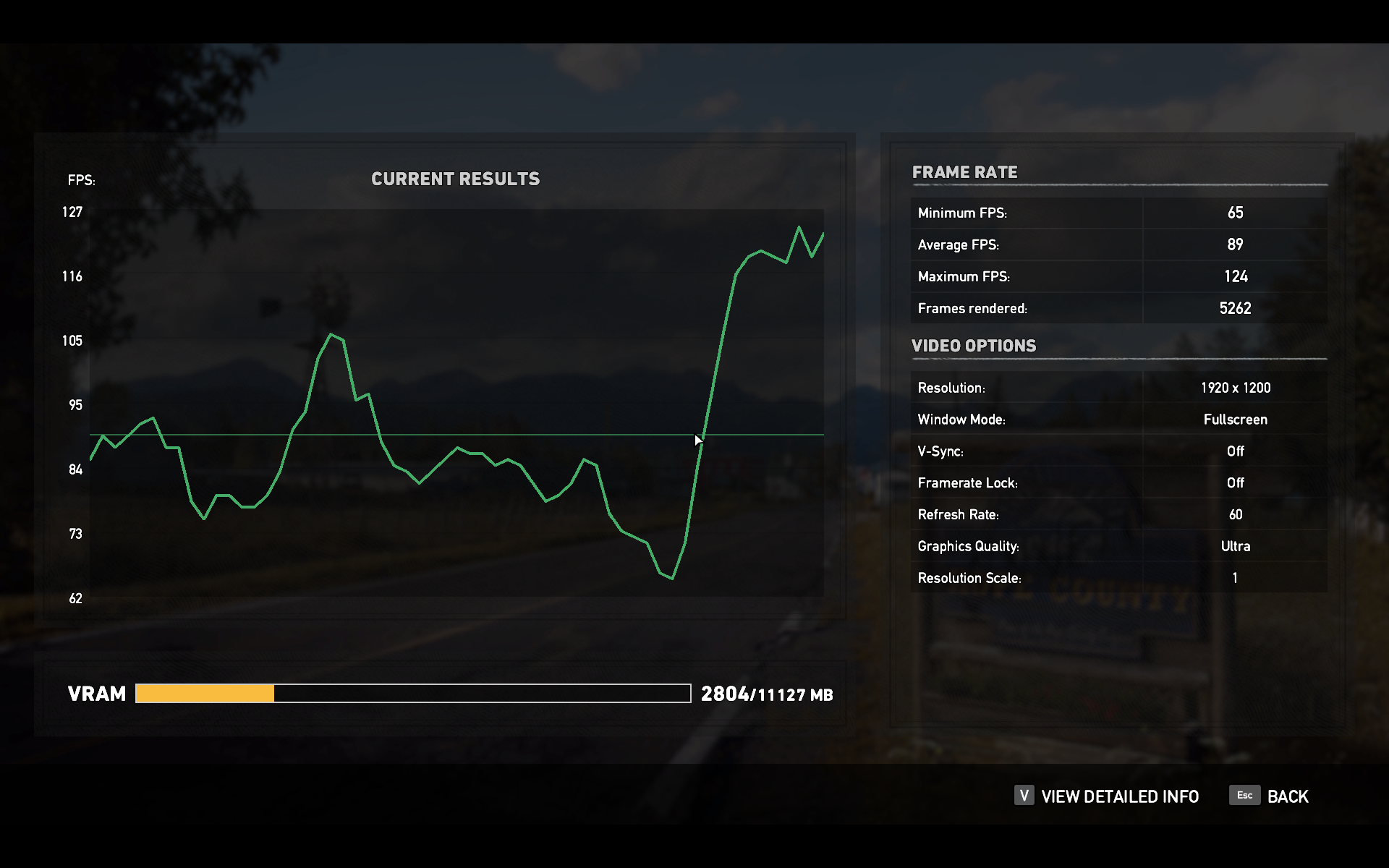

Far Cry 5 is a multiplatform game released this year; on PC it again runs under DX11. It targets PC and the latest generation of consoles. Multithreading is there, but the game likes fast cores and, for some reason, Hyper-Threading. At least, when HT was disabled on the E5-2680v2 platform, frame times in the CPU-bound test became very jerky, with inexplicable dips in arbitrary places, and freezes occurred every 5-6 seconds during the test run. When the bottleneck was shifted away from the CPU by raising the rendering resolution, the game, despite a lower average FPS, played noticeably more comfortably thanks to the absence of microfreezes and jerks. Average and minimum FPS did not change. After the latest patch with HD textures was released, I retested the game on two configurations and found no difference in performance at all. Yes, VRAM consumption rises from 2.

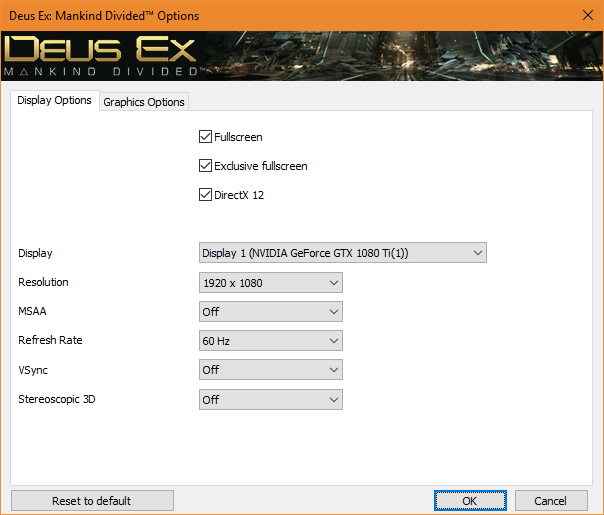

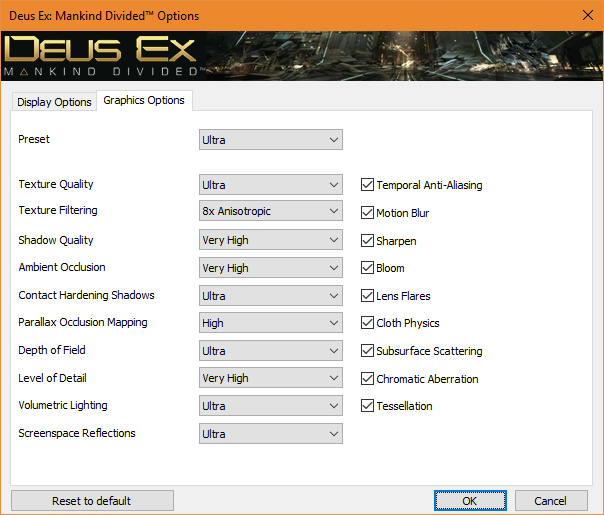

The only game here that formally supports DX12. It was tested in that mode despite its utter pointlessness. In theory, DX12 should reduce CPU dependence. In practice, it brings nothing but a drop of 15-20 frames per second, without changing the in-game graphics one iota. The test turned out somewhat artificial, but so be it.

The remaining games are outside the scoring, because the repeatability of representative game segments in them is close to zero. There is a general trend, though it cannot be expressed in exact minimum FPS figures.

Games on id Tech 6: Doom, Wolfenstein II: The New Colossus

The id Software engine was a pleasant surprise. With the Vulkan API, id Tech 6 makes use of every CPU core it can reach. On the E5-2680v2, the load was spread evenly across all 20 threads, which no other engine in these tests managed. In Doom, the frame counter hit the built-in limit of 200 frames per second at maximum settings on all CPUs.

Wolfenstein II: The New Colossus behaves similarly in that it can make good use of 20 threads, but it has no 200 FPS cap and has more complex graphics, so the results differ: FPS ranges from 170 to 230 on both Xeons. On the i7 the spread is wider, from 150 to 270. The minimum FPS in geometry-heavy scenes is lower, but where performance is bound by per-core speed, FPS soars. Overall, all processors provided an equally comfortable experience. Telling a 150 FPS minimum from 170 by eye is hard.

Cities Skylines

A classic one-and-a-half-thread game with zero optimization for modern realities. It needs nothing but a pair of fast cores, so its performance on the E5-2680v2 was depressing: FPS drops below 25, the simulation stutters, everything is bad. On the E5-1650v2 @ 4100 things are more or less fine, with FPS around 30; on the I7-3770K @ 4444 it is around 35-40 at maximum zoom in a large city. The I5-7600K with its stable 50-60 FPS is out of reach.

Single-threaded game: DosBox + Carmageddon + nGlide

A heavy single-threaded load, double emulation, everything we love. This is the only type of load that caused problems when run under the Balanced power plan on a Xeon. On the one hand, the load is purely single-threaded; on the other hand, however I tweaked the settings, with HT enabled or without it, DosBox could not fully load a core. As a result, boost does not kick in, the frequency wanders between 1.2 and 3.5 GHz, and everything lags and stutters terribly. I could not figure out what the problem is.

This is best seen in the video:

Switching to the High performance power plan cures it, but the core load still never exceeds 70-80%. The video card load is laughable, and in this mode there is no difference at all between the integrated Intel graphics and the GTX1080Ti, yet the FPS still fluctuates and sags.

The minimum FPS sometimes falls below 30 on all systems, and on the E5-2680v2 it sometimes falls below 20. The i7 is noticeably more comfortable than the E5-1650v2: the frame rate does not sag below 25, there are no microfreezes, and the average FPS is 5-7 frames per second higher. The E5-1650v2 is somewhere in the middle. The I5-7600K @ 4500 is again out of reach.

The conclusions are, by and large, as expected. Both Xeons performed quite well, though not without caveats.

The E5-2680v2 predictably did well in applications optimized for multi-threaded CPUs and held up in relatively modern games, provided they are optimized for multi-core CPUs. Although it trailed higher-clocked processors in games, it still lets you play games of the last two or three years at maximum settings, and in work applications it simply tears the competition apart. Another advantage of this processor is that it runs cool. Its temperature never exceeded 50 degrees in any test, even though the cooler fan ran at minimum speed and never even thought of spinning up.

The overclocked E5-1650v2 proved to be the most versatile processor. Slightly behind ordinary desktop models in games, it beats them quite seriously in video and graphics work. But it also draws a lot of power and heats both itself and the power delivery circuitry with gusto.

I7-3770K. Despite its impressive age, this processor, when overclocked, still significantly outperforms last year's chips at stock in the same price range. And in half the cases it beats them overclocked, too. In most cases it provides about the same or better gaming performance, and working on it is noticeably more comfortable than on that same I5-7600K. The advantage in numbers is not striking, but system responsiveness under load is much higher on the old i7 than on the modern quad-core (whose closest current analog is the I3-8100).

It feels like the most versatile option for the LGA2011 platform would be one of the eight-core Xeons, the E5-2667v2 or E5-2687Wv2, with a sufficiently high boost frequency, since the Xeon E5-2680v2 was clearly limited by per-thread speed in some scenarios. But that means another 4-8 thousand rubles on top of its price, which makes such a configuration very questionable. On the other hand, that same E5-2680v2 lets you build, for relatively little money, a twenty-core, forty-thread system with a large amount of RAM, though in that case you need to understand clearly what such a monster is being built for (for example, here ).

PS: and yes... the Chinese motherboard lived just long enough for me to finish the tests. During yet another unsuccessful attempt to overclock the E5-1650v2, it hung under OCCT and never booted again. It served as my main system for 3 weeks, and as a workstation platform it gave no trouble at all. Blaming the Chinese in this case would be strange: overclocking is a lottery you can lose, and hardware sometimes suffers.


Testing participants


Testing method


Testing

Synthetic tests

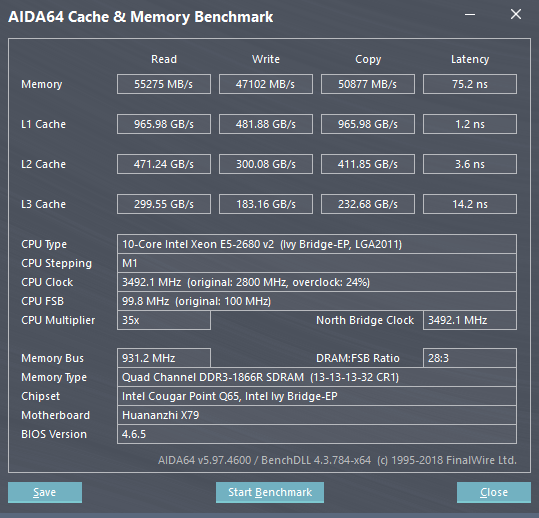

AIDA64 memtest

Performance and memory latency

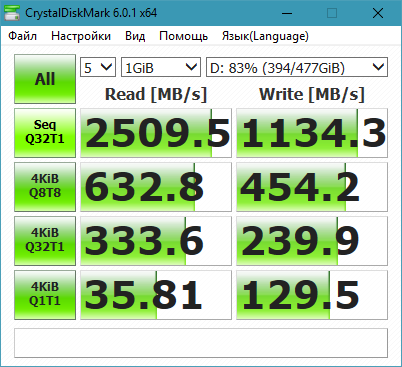

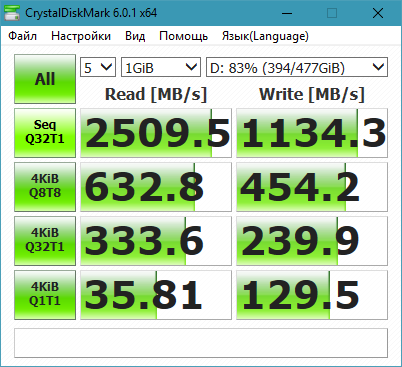

CrystalDiskMark

NVME Drive Performance

I7 3770K, MB ASUS P8Z77-V, NVME SSD @ PCI-E 2.0 x4

E5-1650v2, HUANAN X79 2.49p, NVME SSD @ PCI-E 3.0 x4

E5-1650v2, HUANAN X79 2.49p, NVME SSD @ M2


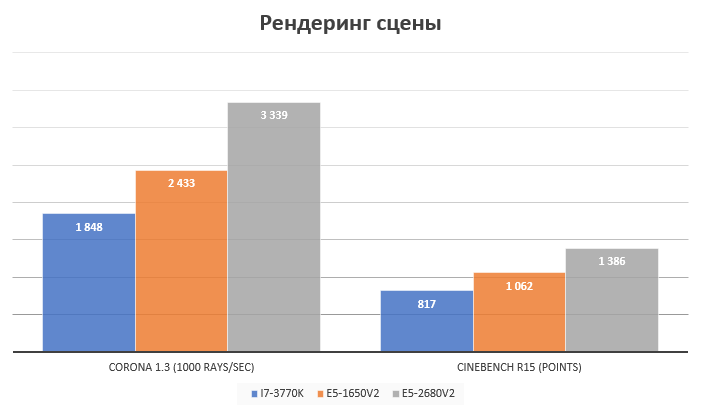

Rendering

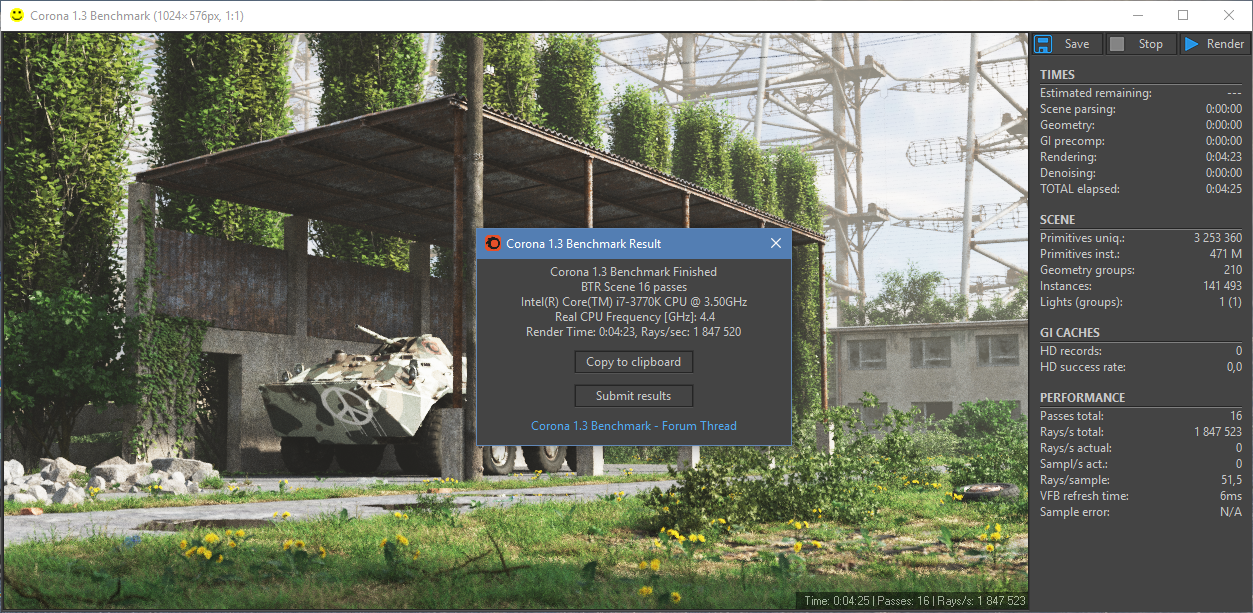

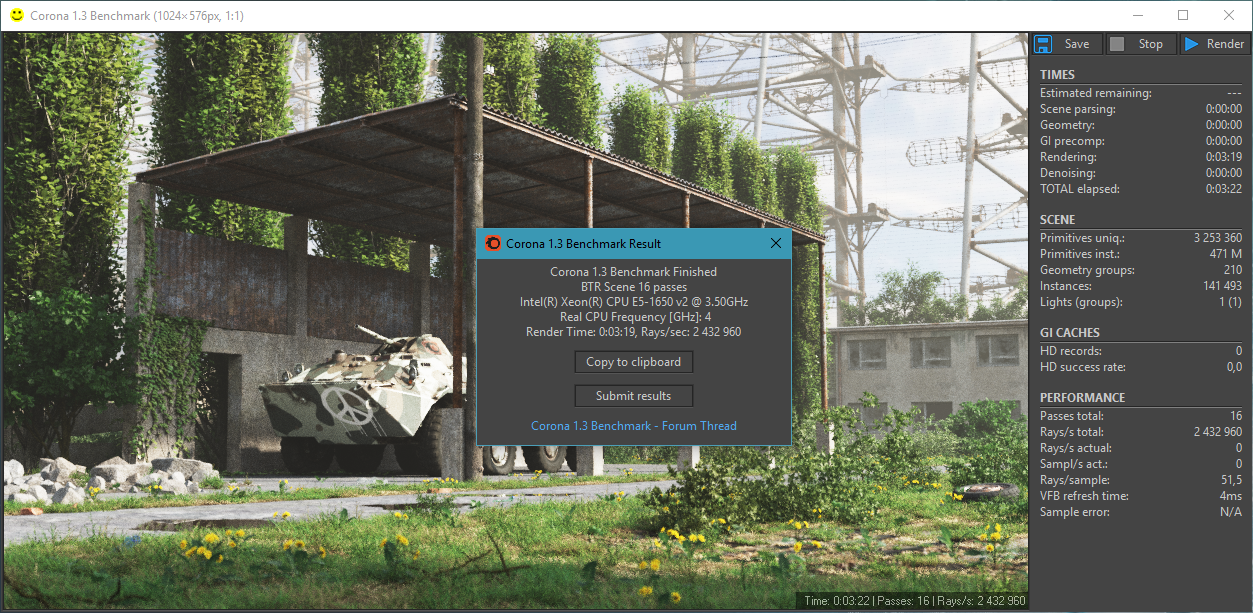


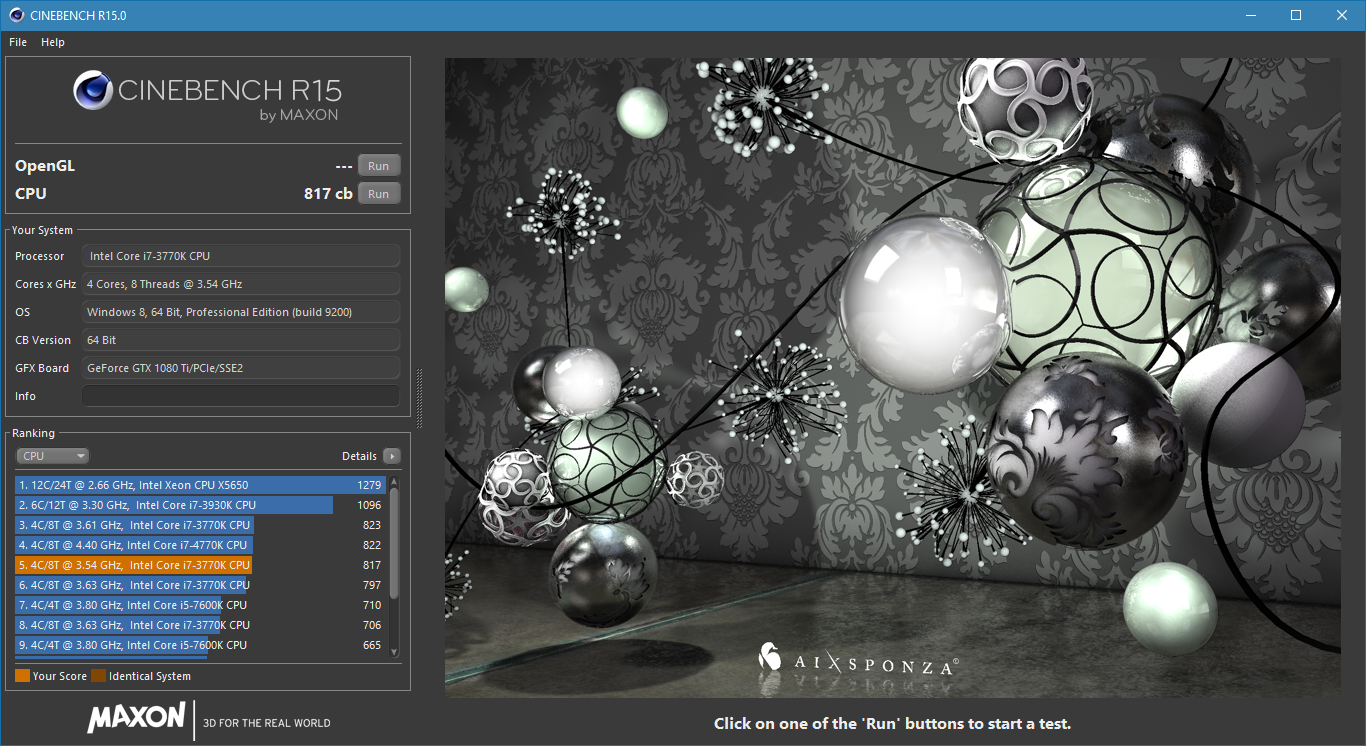

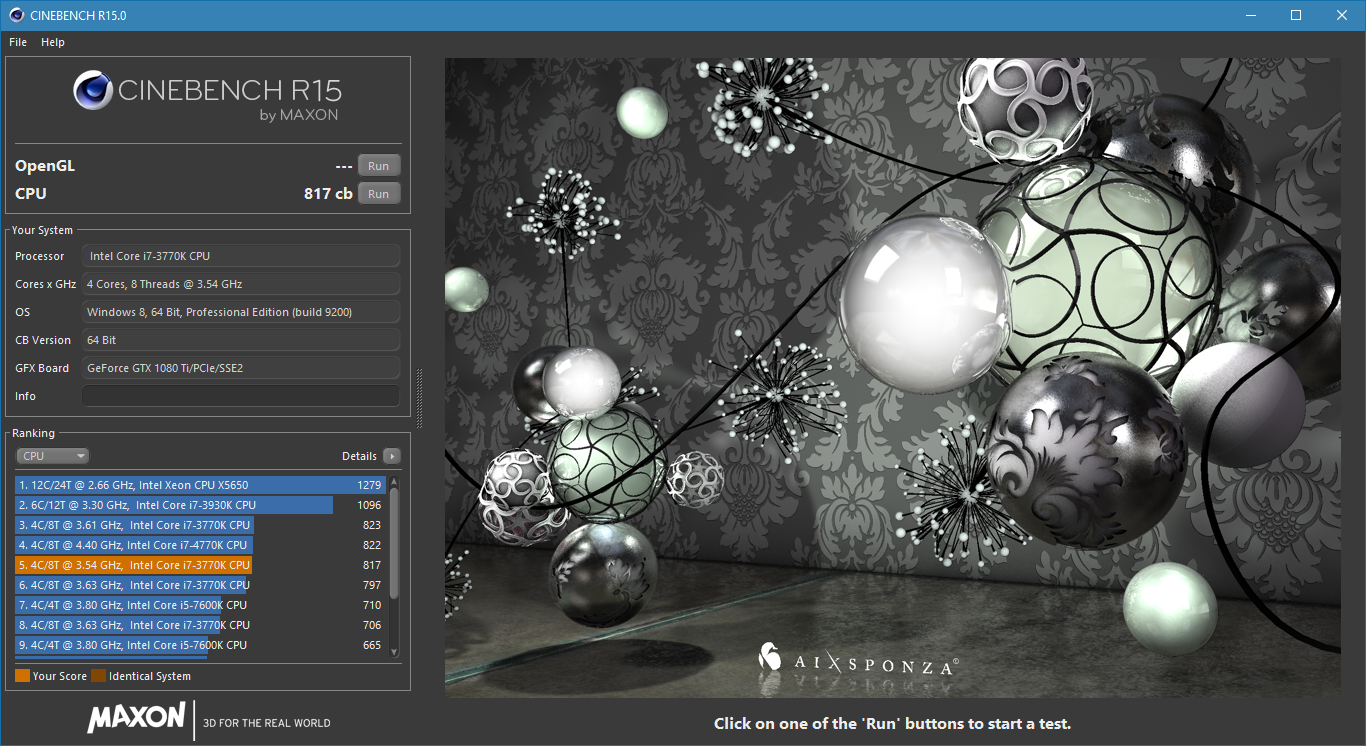

Cinebench R15.0

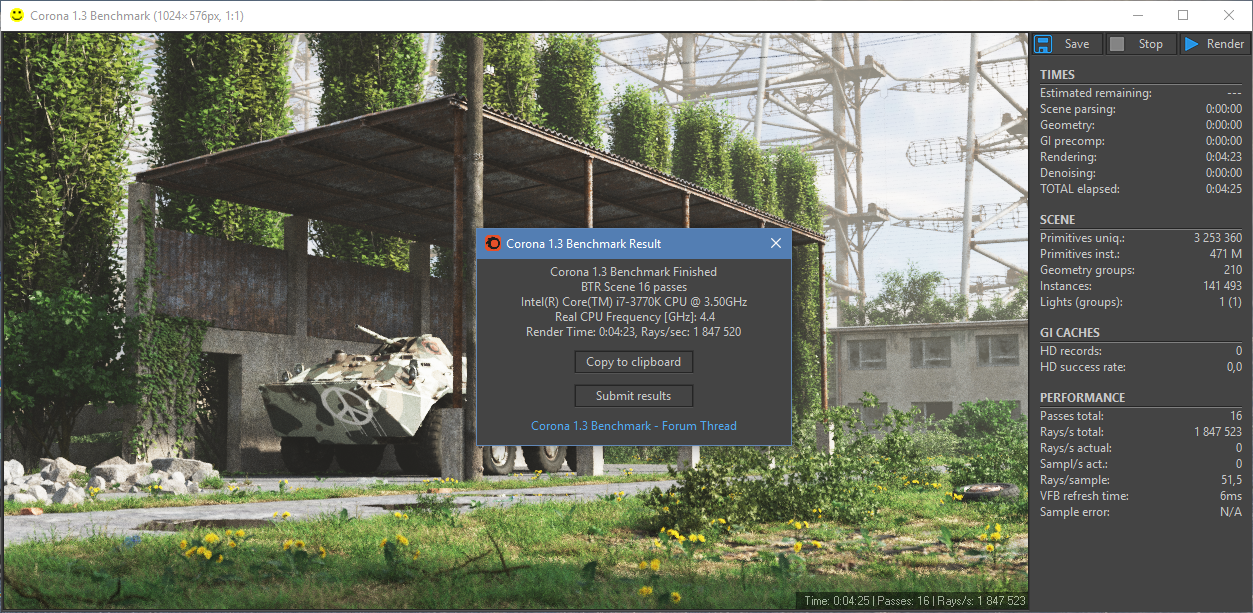

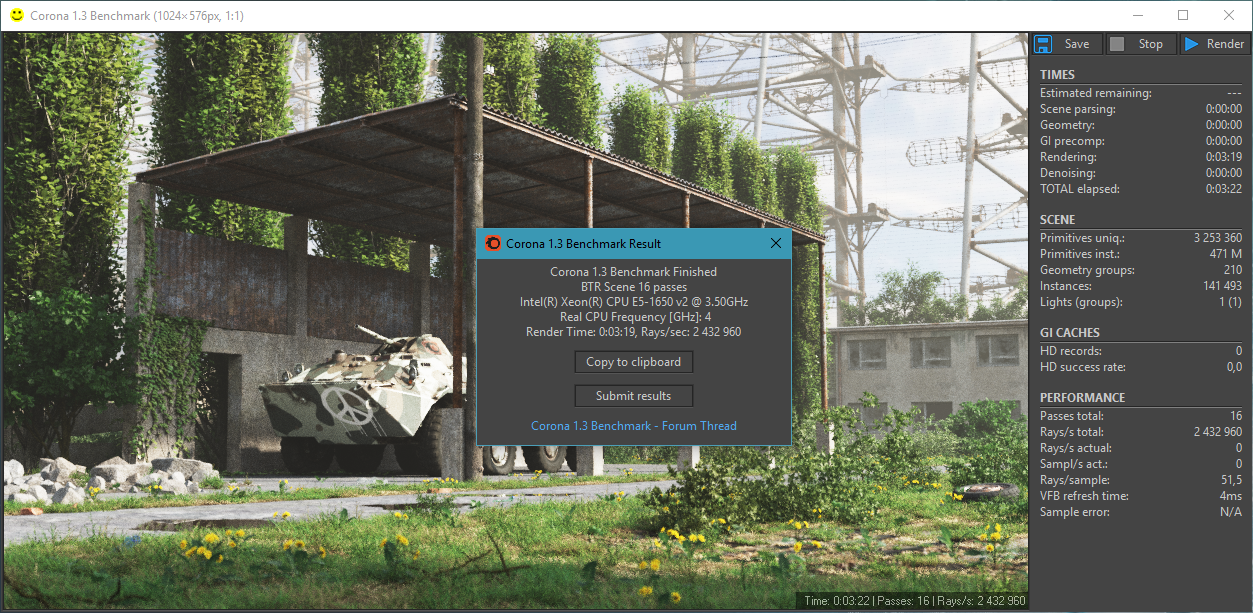

Corona 1.3 benchmark

Synthetic gaming performance test

3D Mark Time Spy

Photo processing software

Testing method


Adobe Lightroom 5.7


Adobe Lightroom Classic CC (7.5)


Corel AfterShot Pro 3


Games


Testing method


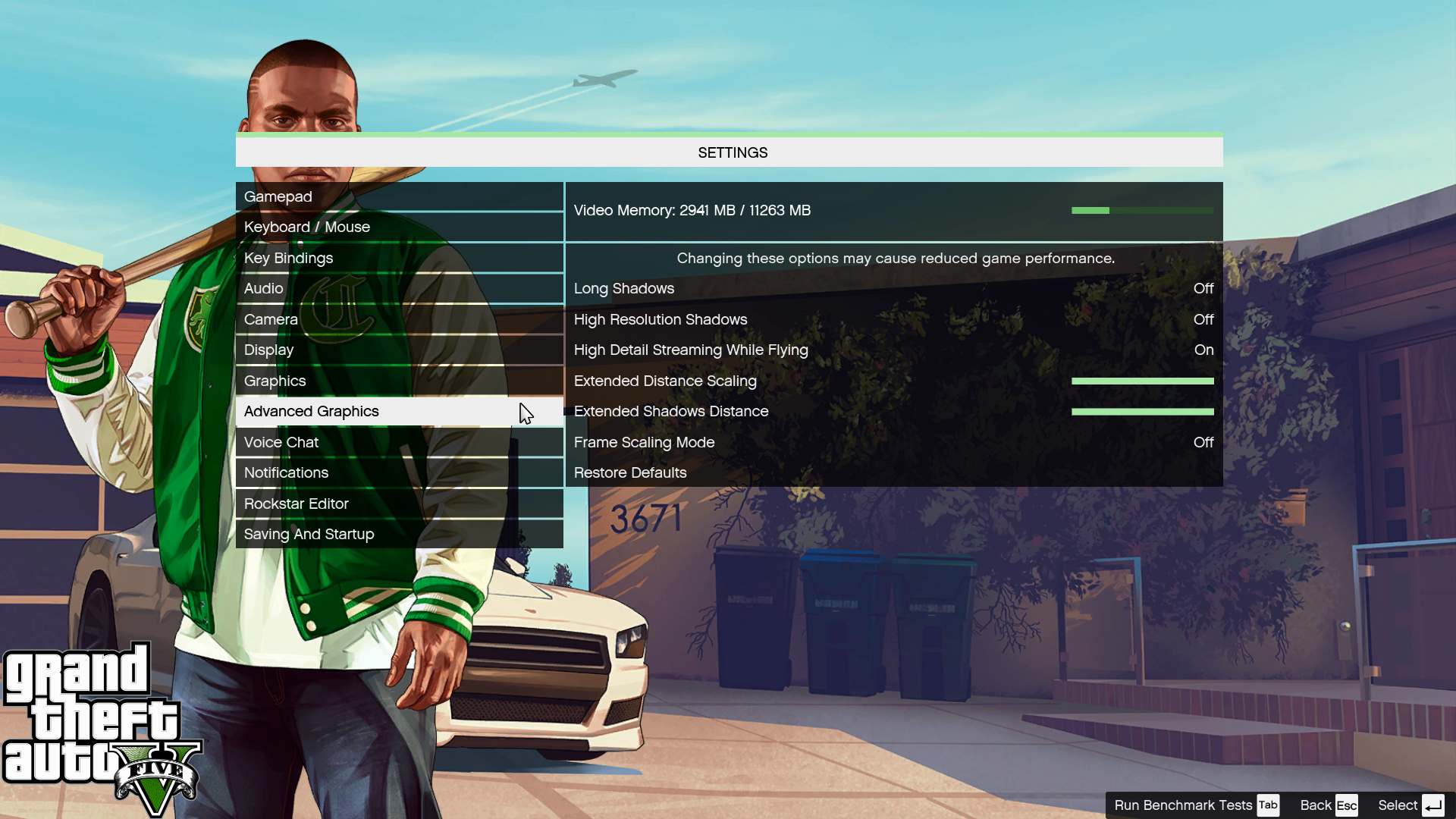

GTA V


screenshots of game settings for test

test results

I7-3770K

22-09-2018, 18:58:21GTA5.exebenchmarkcompleted, 8234 framesrenderedin 115.485sAverageframerate : 71.2FPSMinimumframerate : 45.3FPSMaximumframerate : 269.9FPS

1% lowframerate : 46.3FPS

0.1% lowframerate : 39.4FPSE5-1650V2

23-09-2018, 20:50:08GTA5.exebenchmarkcompleted, 7862 framesrenderedin 116.125sAverageframerate : 67.7FPSMinimumframerate : 48.1FPSMaximumframerate : 119.2FPS

1% lowframerate : 47.3FPS

0.1% lowframerate : 43.0FPSE5-2680v2

23-09-2018, 22:48:25GTA5.exebenchmarkcompleted, 7618 framesrenderedin 116.313sAverageframerate : 65.4FPSMinimumframerate : 47.7FPSMaximumframerate : 154.8FPS

1% lowframerate : 46.7FPS

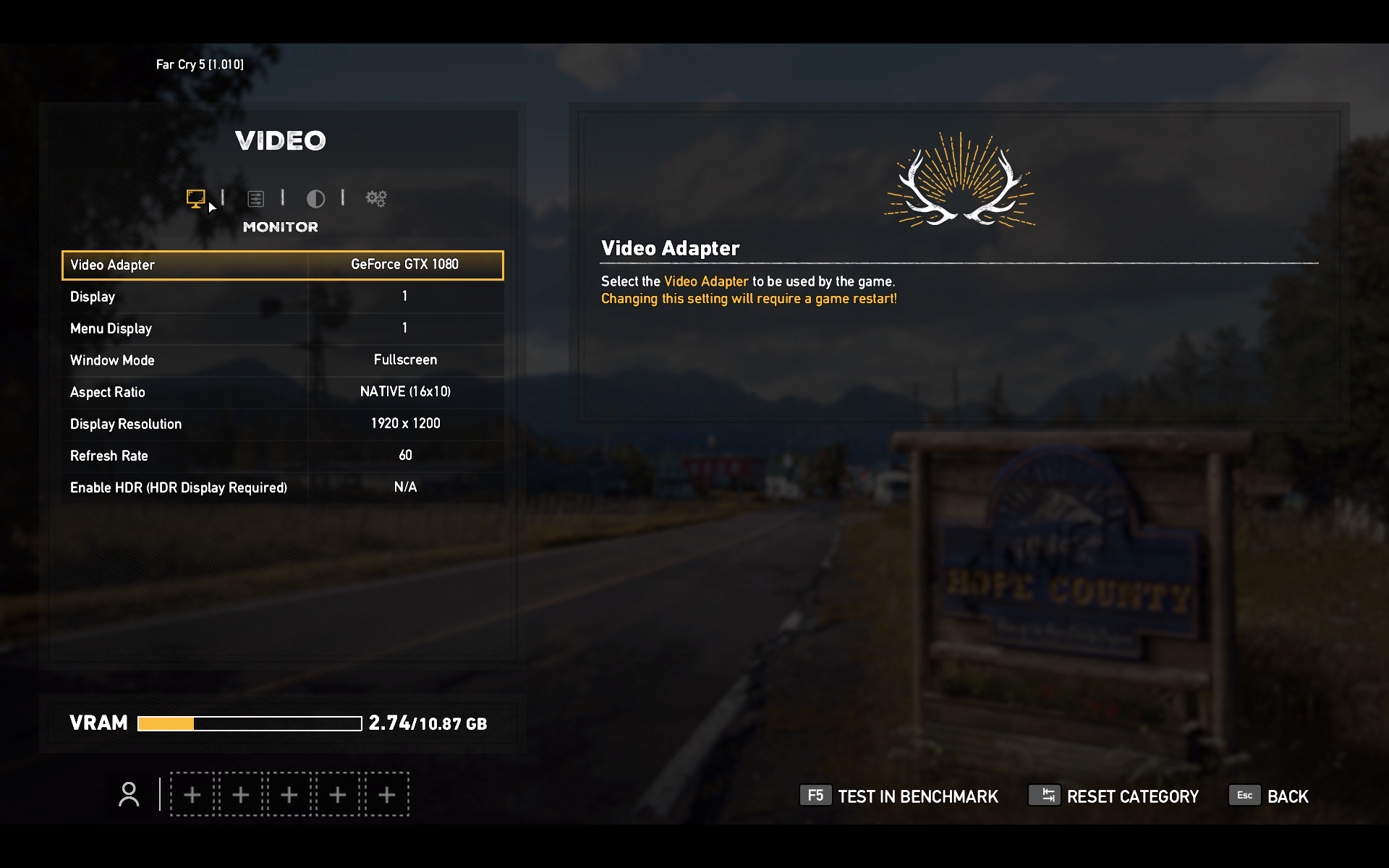

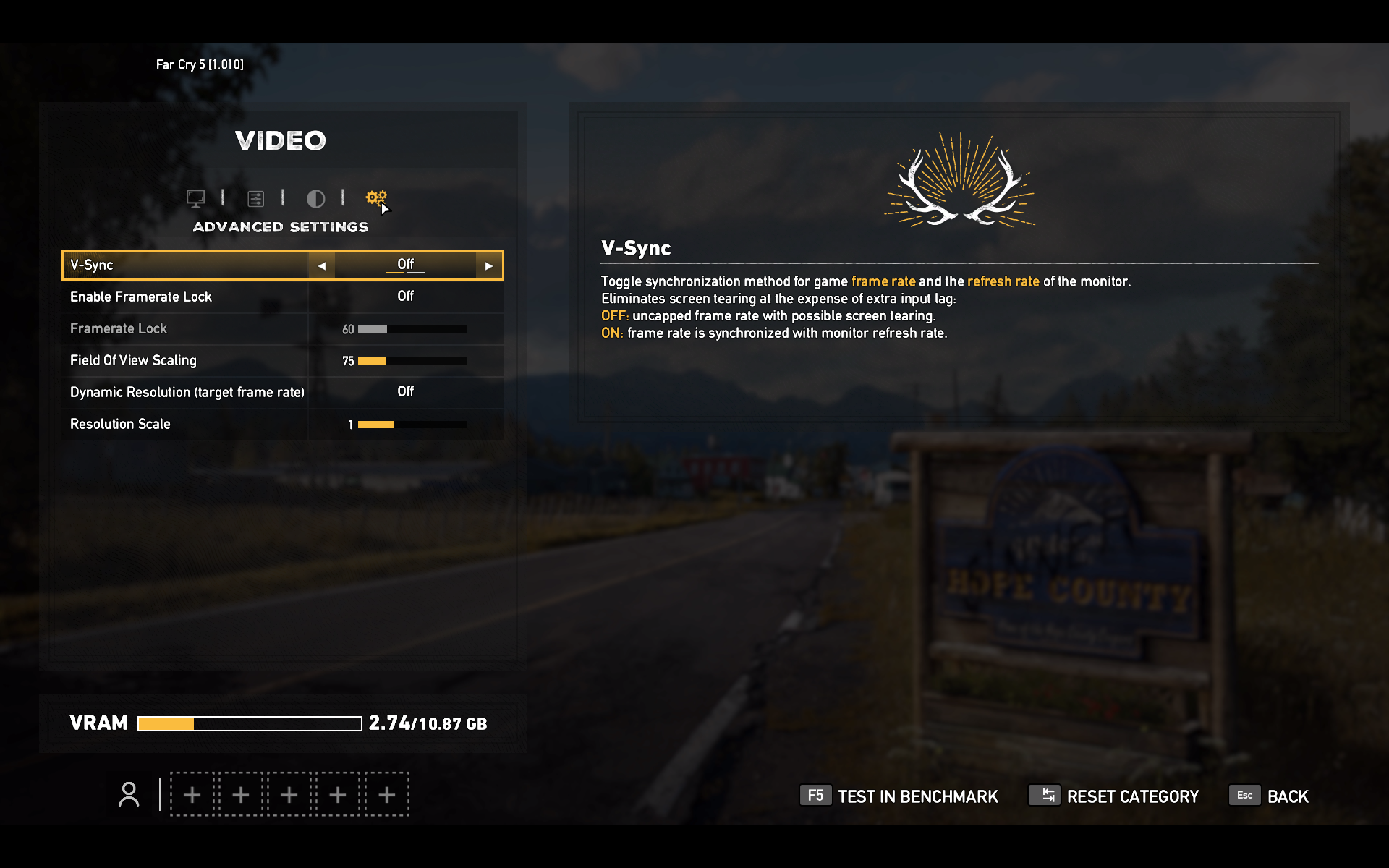

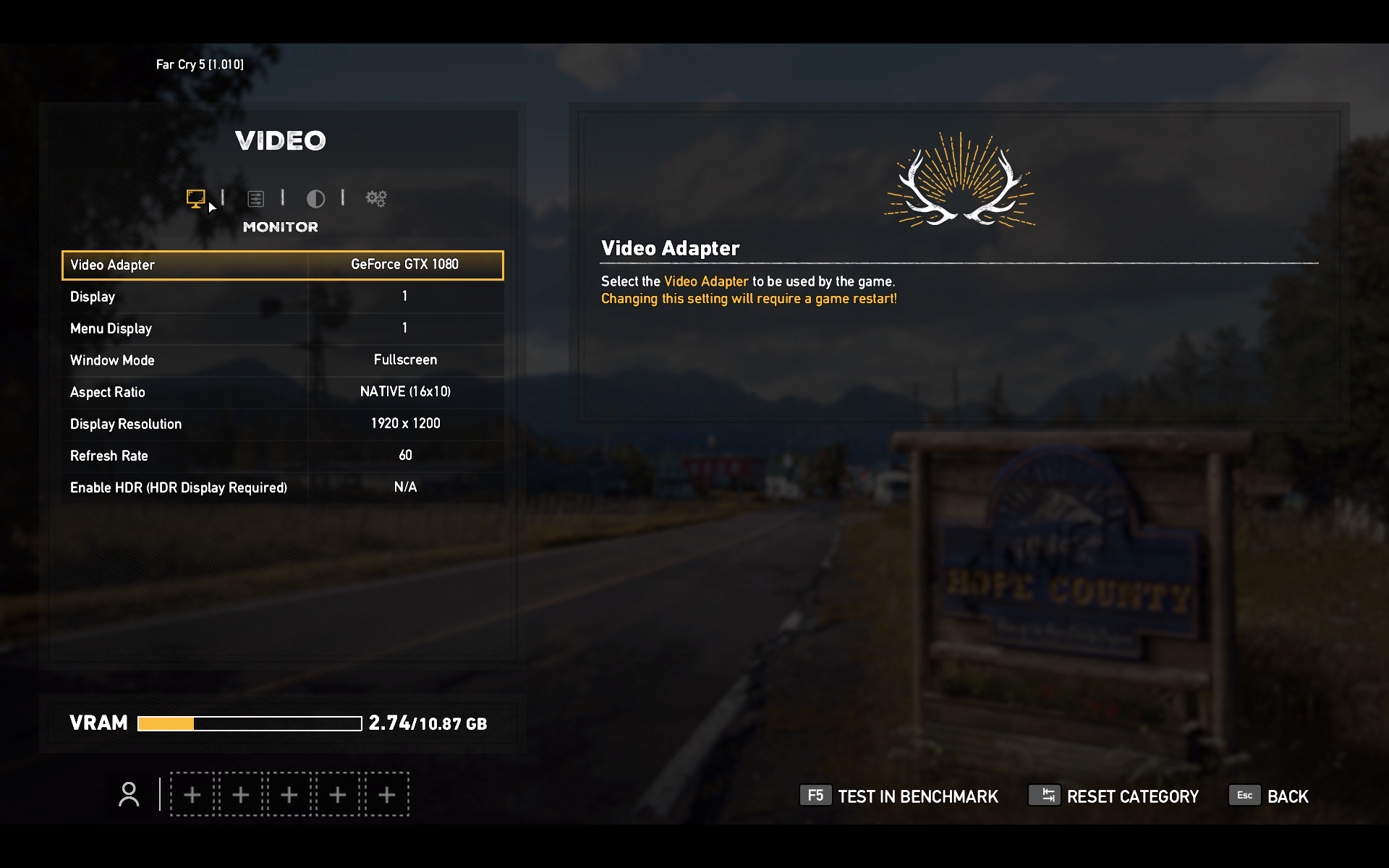

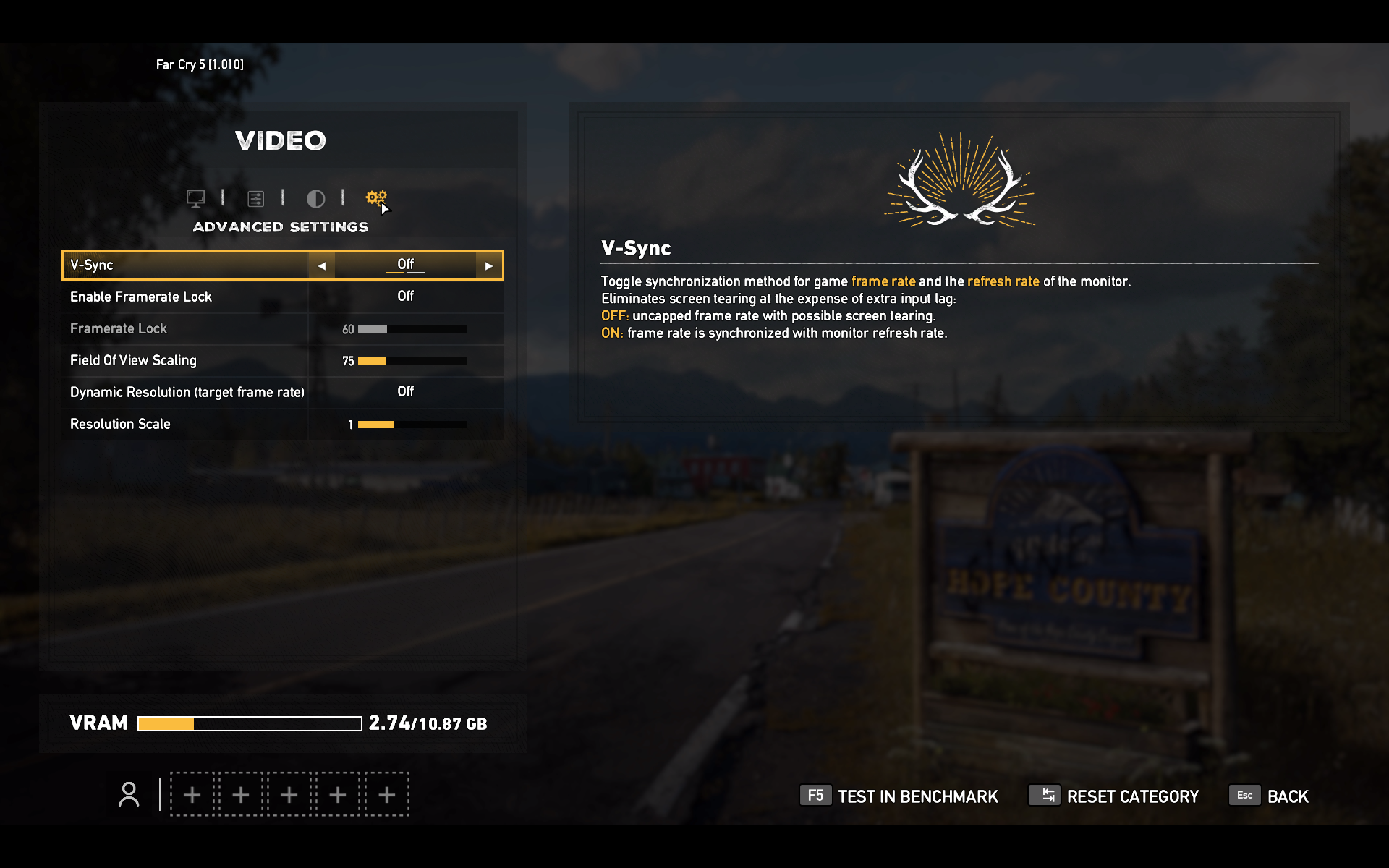

0.1% lowframerate : 40.5FPSFar cry 5

A multiplatform game released this year; on the PC it again runs under DX11. It targets the PC and the latest generation of consoles. There is multithreading, but the game likes fast cores and, for some reason, HyperThreading. At least when HT was disabled on the E5-2680v2 platform, the frame time in the CPU-bound test became very jittery, with unexplained dips at arbitrary points; freezes occurred every 5-6 seconds in the test segment. If you shift the bottleneck away from the CPU by raising the rendering resolution, the game, while losing average FPS, plays noticeably more comfortably thanks to the absence of microfreezes and jerks. The average and minimum FPS figures did not change. After the release of the latest patch with HD textures, I retested the game on two configurations and found no difference in performance at all. Yes, VRAM consumption rises from 2.

screenshots of game settings for test

screenshots of test run results

I7 3770K@4444

E5-1650v2@4000

E5-2680v2

E5-1650v2@4000

E5-2680v2

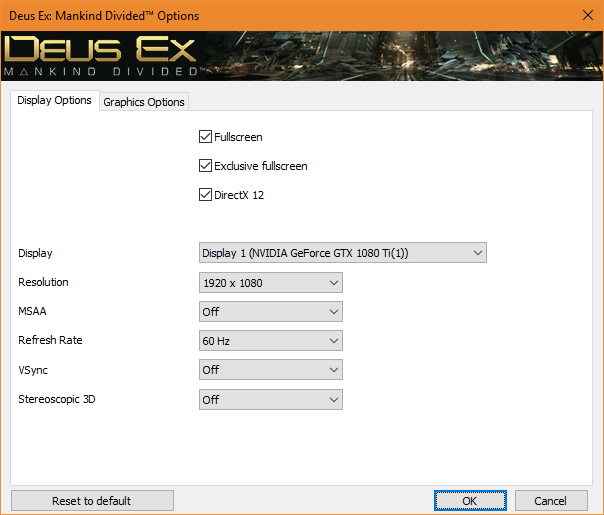

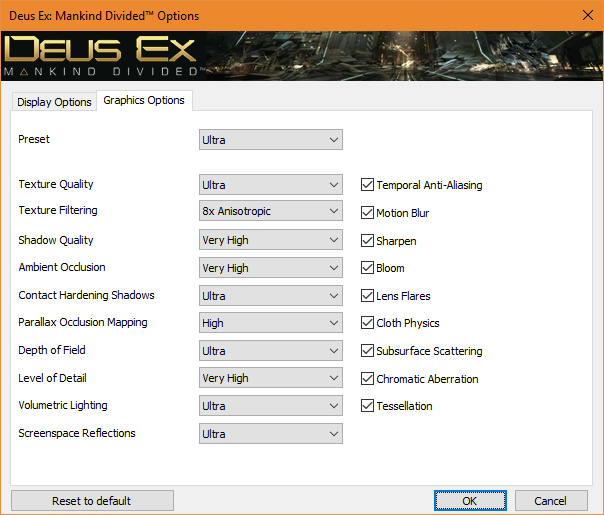

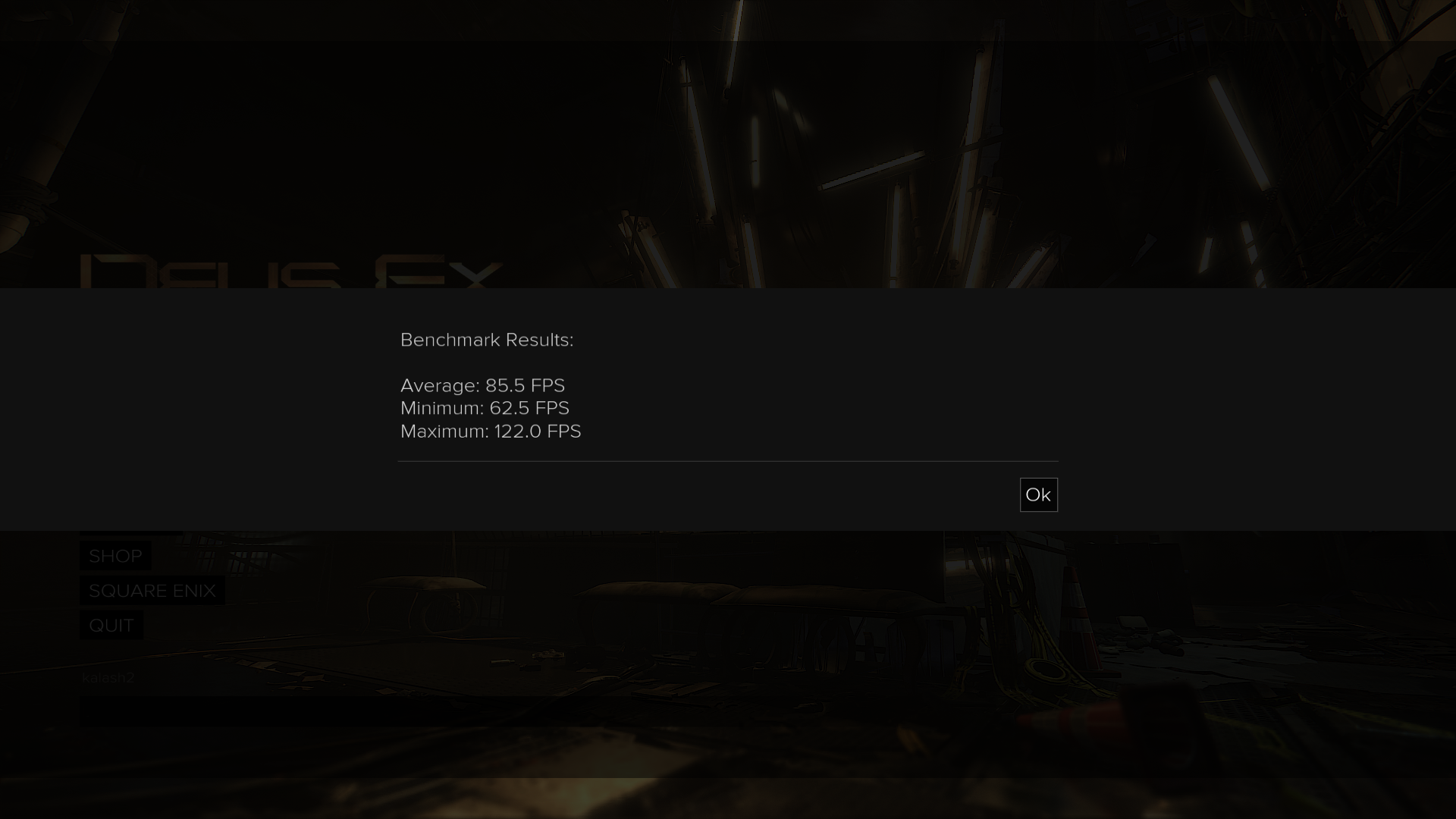

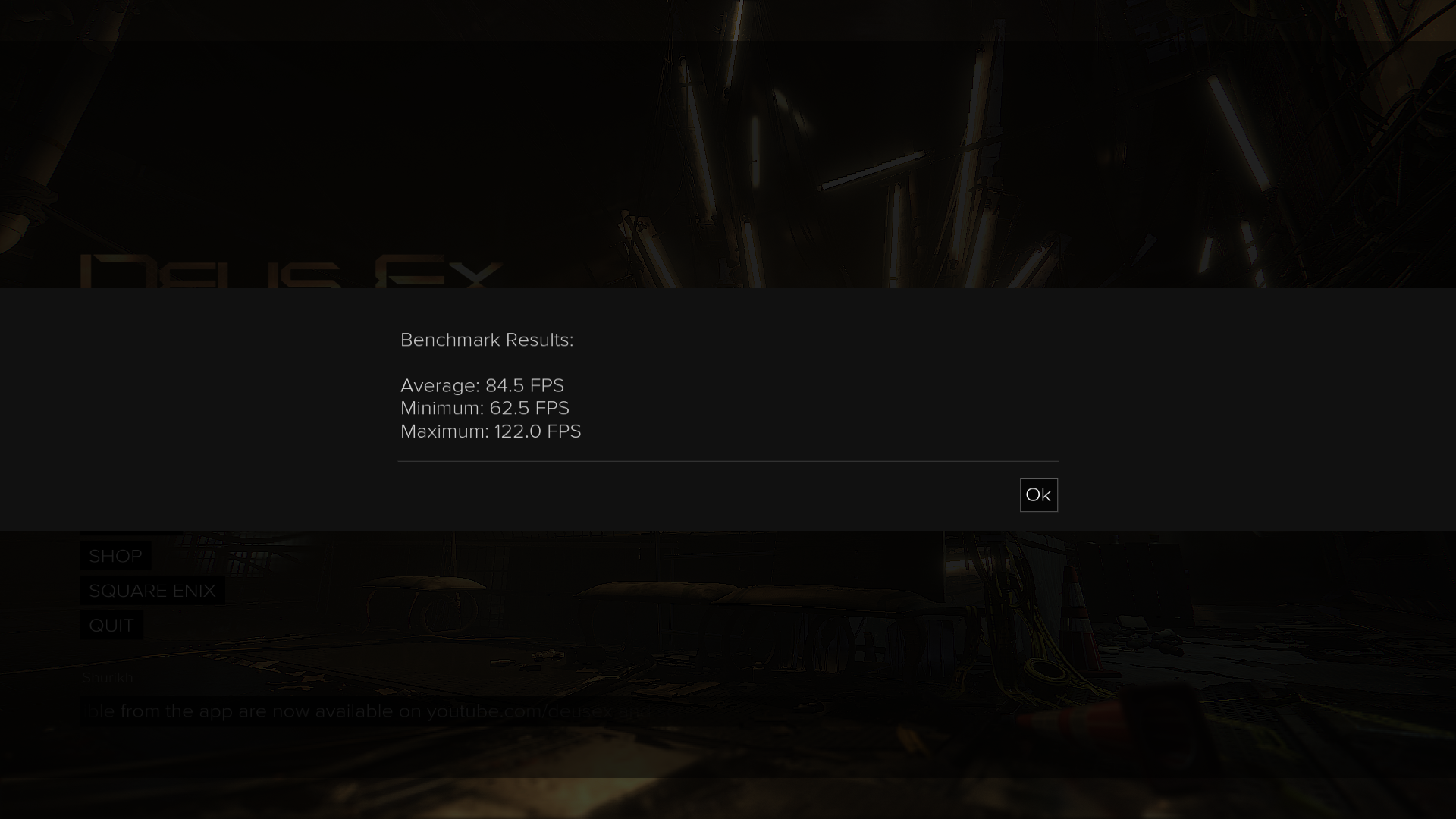

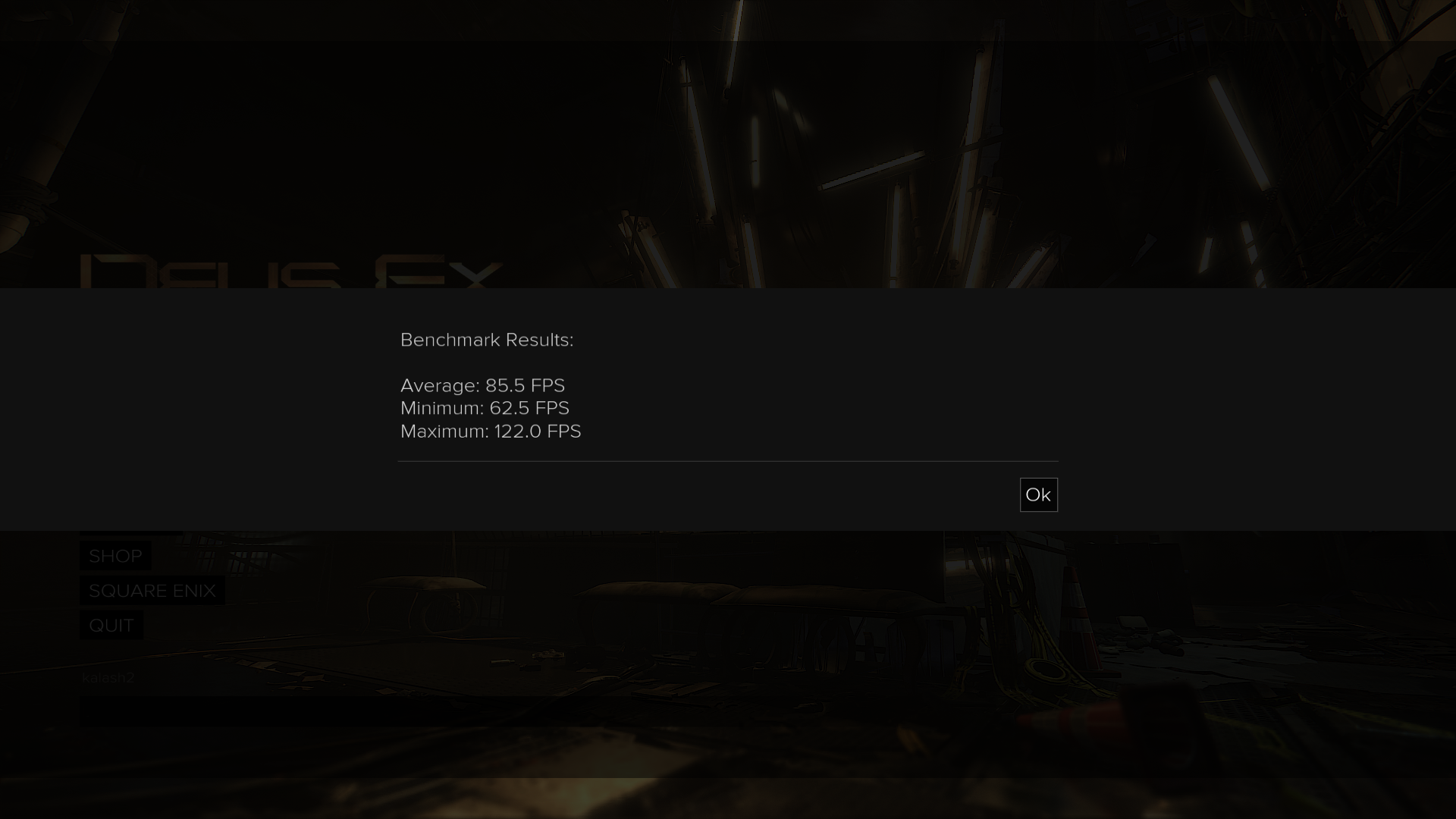

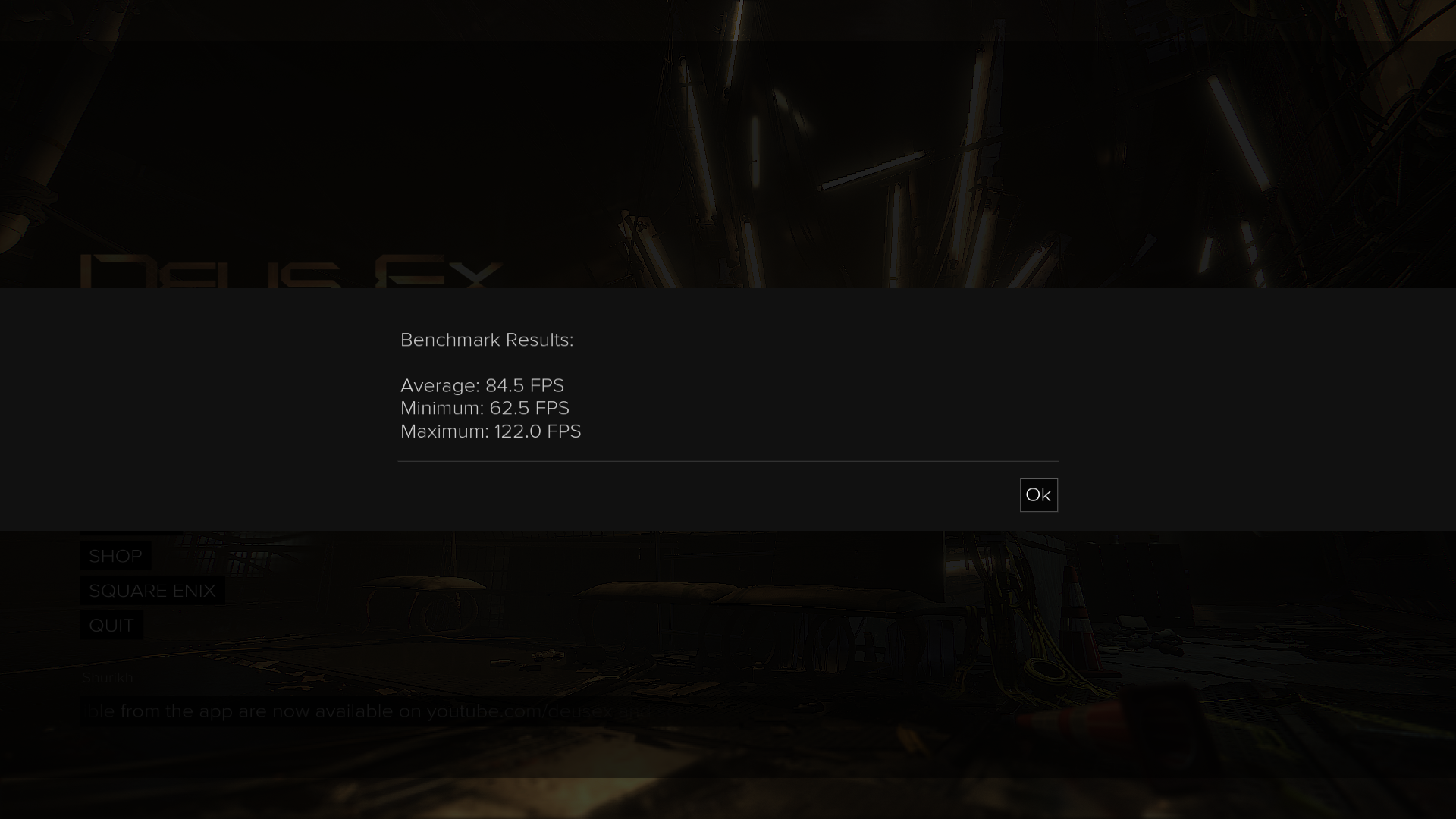

Deus Ex Mankind Divided

The only game here that formally supports DX12. It was tested in this mode, despite its absolute senselessness. In theory, DX12 should reduce processor dependency. In practice, it brings nothing except a drop of 15-20 frames per second, without changing the game's graphics one iota. The test turned out somewhat artificial, but so it goes.

screenshots of game settings for test

screenshots of test run results

I7 3770K@4444

E5-1650v2@4000

E5-2680v2

E5-1650v2@4000

E5-2680v2

The rest of the games were left out of the scoring, because the repeatability of representative gameplay segments in them is close to zero. There is still a general trend, although it cannot be expressed in exact minimum FPS figures.

Out of scoring

Games on id Tech 6: Doom, Wolfenstein New Colossus

The id Software engine was a pleasant surprise. id Tech 6, when used with the Vulkan API, makes use of every CPU core it can reach. On the E5-2680v2 the load was spread evenly across all 20 threads, which no other engine in the tests managed. In Doom the frame counter hit the built-in limit of 200 frames per second at maximum settings on all CPUs.

Wolfenstein New Colossus behaves similarly in that it can make good use of 20 threads, but it has no 200 frames per second limit and its graphics are more complex, so the results differ: FPS varies from 170 to 230 on both Xeons. On the I7 the spread is wider, from 150 to 270. The minimum FPS in scenes with a lot of geometry is lower, but where the game is limited by per-core speed, the FPS flies sky-high. Overall, all processors provided an equally comfortable game. Telling a minimum of 150 FPS from 170 by eye is hard.

Cities Skylines

A classic one-and-a-half-thread game with zero optimization for modern realities. It needs nothing but a pair of fast cores, so its performance on the E5-2680v2 was depressing. FPS drops below 25, the simulation stutters, everything is bad. On the E5-1650v2 @ 4100 things are more or less fine, with FPS around 30; on the I7-3770K @ 4444 it is around 35-40 at maximum zoom in a large city. The I5-7600K, with a stable 50-60 FPS, is out of reach.

Single-threaded game: DOSBox + Carmageddon + nGlide

A heavy single-threaded load, double emulation, everything we love. This is the only type of load that caused problems when run under the Balanced power plan on a Xeon. On the one hand, the load is purely single-threaded; on the other hand, no matter how I tweak the settings, DOSBox cannot fully load a core, with HT enabled or without it. As a result, the boost does not kick in, the frequency hovers between 1.2 and 3.5 GHz, and everything lags and stutters terribly. I could not figure out what the problem is.

This is best seen in the video:

Test segment of the classic Carmageddon

This is cured by switching to the High performance power plan, but the core load still never exceeds 70-80%. There is nothing to load the video card with, and in this mode there is no difference at all between Intel integrated graphics and the GTX 1080 Ti, yet the FPS still floats and sags.

The minimum FPS sometimes falls below 30 on all systems, but on the E5-2680v2 it sometimes falls below 20. The I7 is noticeably more comfortable than the E5-1650v2: the frame rate does not sag below 25, there are no microfreezes, and the average FPS is 5-7 frames per second higher. The E5-1650v2 is somewhere in the middle. The I5-7600K @ 4500 is again out of reach.

Summary Test Results

| Test | Unit | I7-3770K | E5-1650V2 | E5-2680V2 |
|---|---|---|---|---|
| Lightroom 5.7 | sec | 393 | 372 | 347 |
| Lightroom 7.5 | sec | 221 | 156 | 105 |
| Corel AfterShot Pro 3 | sec | 127 | 118 | 94 |
| Corona 1.3 | 1000 rays / sec | 1,848 | 2,433 | 3,339 |
| CineBench R15 | points | 817 | 1,062 | 1,386 |
| Memory Read | MB / s | 31,872 | 50,143 | 55,275 |
| Memory Write | MB / s | 33,072 | 36,202 | 47,102 |
| Memory Copy | MB / s | 29,932 | 48,249 | 50,877 |
| Memory Latency | ns | 46.90 | 75.20 | 75.30 |
| 3DMark Time Spy. Overall Score | points | 8,364 | 8,842 | 9,152 |
| 3DMark Time Spy. Graphics Score | points | 9,756 | 9,588 | 9,469 |
| 3DMark Time Spy. Graphics Test 1 | fps | 62.60 | 61.72 | 60.88 |
| 3DMark Time Spy. Graphics Test 2 | fps | 56.72 | 55.58 | 54.96 |
| 3DMark Time Spy. CPU Score | points | 4,626 | 6,137 | 7,697 |
| 3DMark Time Spy. CPU Test | fps | 15.54 | 20.62 | 25.86 |
| Far Cry 5. Minimum FPS | fps | 66 | 65 | 60 |
| Far Cry 5. Average FPS | fps | 89 | 89 | 82 |
| Far Cry 5. Maximum FPS | fps | 120 | 124 | 115 |
| Deus Ex: Mankind Divided, minimum | fps | 62.50 | 62.50 | 53.10 |
| Deus Ex: Mankind Divided, average | fps | 85.50 | 84.50 | 73.20 |
| Deus Ex: Mankind Divided, maximum | fps | 122.00 | 122.00 | 105.30 |
| GTA V Average framerate | fps | 71.60 | 67.70 | 65.40 |
| GTA V min framerate | fps | 47.10 | 48.80 | 48.10 |
| GTA V 1% low framerate | fps | 46.30 | 47.30 | 46.70 |
| GTA V 0.1% low framerate | fps | 39.40 | 43.00 | 40.50 |
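The table mixes metrics where lower is better (seconds) with metrics where higher is better (points, fps), which makes the rows hard to compare at a glance. A minimal sketch of one way to normalize them against the I7-3770K is shown below; the three rows are copied from the table, while the function name and the `higher_is_better` flag are assumptions made for illustration.

```python
# Values copied from the summary table, in column order:
# I7-3770K, E5-1650V2, E5-2680V2. The flag says whether a larger value is better.
ROWS = {
    "Lightroom 7.5 (sec)": ((221, 156, 105), False),
    "CineBench R15 (points)": ((817, 1062, 1386), True),
    "Far Cry 5 average (fps)": ((89, 89, 82), True),
}


def relative_to_baseline(values, higher_is_better):
    """Express each value as a performance ratio against the first column.

    A ratio above 1.0 always means "faster than the baseline", regardless of
    whether the raw metric is a time or a score.
    """
    baseline = values[0]
    if higher_is_better:
        return [value / baseline for value in values]
    return [baseline / value for value in values]


if __name__ == "__main__":
    for name, (values, higher_is_better) in ROWS.items():
        ratios = relative_to_baseline(values, higher_is_better)
        print(name, " ".join(f"{ratio:.2f}" for ratio in ratios))
```

With this normalization the pattern from the tests becomes visible in one column of ratios: the Xeons pull ahead in the multi-threaded workloads and stay at or slightly below the baseline in the game.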

Findings

The conclusions are, in principle, as expected. Both Xeons performed quite well, though not without caveats.

E5-2680v2. As expected, it did well in applications optimized for multi-threaded CPUs and not badly in relatively modern games, provided they are optimized for multi-core CPUs. Although it lost to the higher-clocked processors in games, it can still run games of the last two or three years at maximum settings, and in work applications it simply tears its competitors apart. Another advantage of this processor is that it runs cool. In no test did its temperature exceed 50 degrees, even though the cooler fan ran at minimum speed and never even thought of spinning up.

E5-1650v2. When overclocked, it proved to be the most versatile processor. Slightly behind ordinary desktop models in games, it quite seriously surpasses them when working with video and graphics. But it also draws considerable power, and it heats both itself and the power delivery circuits with a vengeance.

I7-3770K. Despite its impressive age, this processor, when overclocked, still significantly outperforms last year's chips at stock for the same price, and in half the cases overclocked ones too. In most cases it provides about the same or better gaming performance, and working on it is noticeably more comfortable than on the same I5-7600K. The advantage in numbers is not striking, but system responsiveness under load is much higher on the old I7 than on the modern quad-core (its closest analog now is the I3-8100).

It feels like the most versatile option for the LGA2011v2 platform should be the eight-core E5-2667v2 and E5-2687Wv2 Xeons, which have a sufficiently high boost clock, since the Xeon E5-2680v2 was clearly limited by per-thread speed in some scenarios. But that is another 4-8 thousand rubles on top of its price, which makes such a configuration very questionable. On the other hand, the same E5-2680v2 lets you build, for relatively little money, a twenty-core, forty-thread system with a large amount of RAM, but in that case you need to understand clearly what such a monster is being built for (for example, here).

PS: and yes... the Chinese motherboard lived just long enough for me to finish the tests. On the next unsuccessful attempt to overclock the E5-1650v2, it hung under OCCT and never booted again. It worked as my main system for 3 weeks, and as a workstation platform it gave no problems at all. Blaming the Chinese in this case would be strange: overclocking is a lottery that does not always pay out, and hardware sometimes suffers.