Writing a search plugin for Elasticsearch


Elasticsearch is a popular search engine and NoSQL database. One of its interesting features is support for plugins, which can extend the built-in functionality and add a bit of business logic at the search level. In this article I want to show how to write such a plugin and tests for it.

I want to make a reservation right away that the task in this article is greatly simplified so as not to clutter up the code. For example, in one of the real applications, the document stores the full schedule with exceptions, and based on them the script calculates the necessary values. But I would like to focus on the plugin itself, so the example is very simple.

It is also worth mentioning that I am not an Elasticsearch committer, the information presented is mainly obtained by trial and error, and may be incorrect in some ways.

So, suppose we have an Event document with start and stop properties that store time as a string in the format “HH: MM: SS”. The task is to sort events for a given time time so that the active events (start <= time <= stop) are at the beginning of the output. An example of such a document:

As a basis, I took an example from one of the developers of Elasticsearch . The plugin consists of one or more scripts that must be registered:

The script consists of two parts: the NativeScriptFactory factory, and the script itself, which inherits AbstractSearchScript. The factory is engaged in creating a script (and at the same time in the validation of parameters). It is worth noting that the script is created only 1 time for searching (on each shard), so initialization / processing of parameters should be done at this stage.

The client application must pass the parameters to the script:

So, the script is created and ready to work. The most important thing in the script is the run () method:

This method is called for each document, so you should pay special attention to what happens inside it and how fast. This has a direct impact on the performance of the plugin.

In general, the algorithm here is:

To access document data, you must use one of the methods source (), fields (), or doc (). Looking ahead, I will say that doc () is much faster than source () and should be used if possible.

In this example, based on the document, I create a model for further work.

(in trivial cases, of course, you can simply use the data from the document and immediately return the result, and that would be faster)

The result in our case is “1” for the events that are happening now (start <= time <= stop), and “0 "For everyone else. The result type is Integer, because sort by Boolean Elasticsearch does not know how.

After processing the script for each document, a value will be determined by which Elasticsearch will sort them. Mission accomplished!

Besides being good on their own, tests are also a great entry point for debugging. It is very convenient to set a breakpoint, and start the debug of the desired test. Without this, it would be very difficult to debug the plugin.

The plugin integration testing scheme is approximately as follows:

To start the test server, we use the base class ElasticsearchIntegrationTest. You can configure the number of nodes, shards, and replicas. Read more on GitHub .

There are perhaps two ways to create test documents. The first is to build the document directly in the test - an example can be found here . This option is quite good, and at first I used it. However, the document layout changes, and over time it may turn out that the structure built in the test no longer corresponds to reality. Therefore, the second way is to store mapping and dataseparately as resources. In addition, this method makes it possible in case of unexpected results on live servers to simply copy the problem document as a resource and see how the test falls. In general, any method is good, the choice is yours.

To request the result of calculating the script, we will use the standard Java client:

An optional part of the program is integration with the Continuous Integration Travis system . Add the .travis file :

So, the plugin is ready and tested. It's time to try it out.

You can read about installing plugins in the official documentation . The compiled plugin is located in ./target. To facilitate local installation, I wrote a small script that compiles the plugin and installs it:

The script is written for Mac / brew. For other systems, you may have to correct the path to the plugin file. On Ubuntu, it is located in / usr / share / elasticsearch / bin / plugin. After installing the plugin, do not forget to restart Elasticsearch.

A simple test document generator written in Ruby.

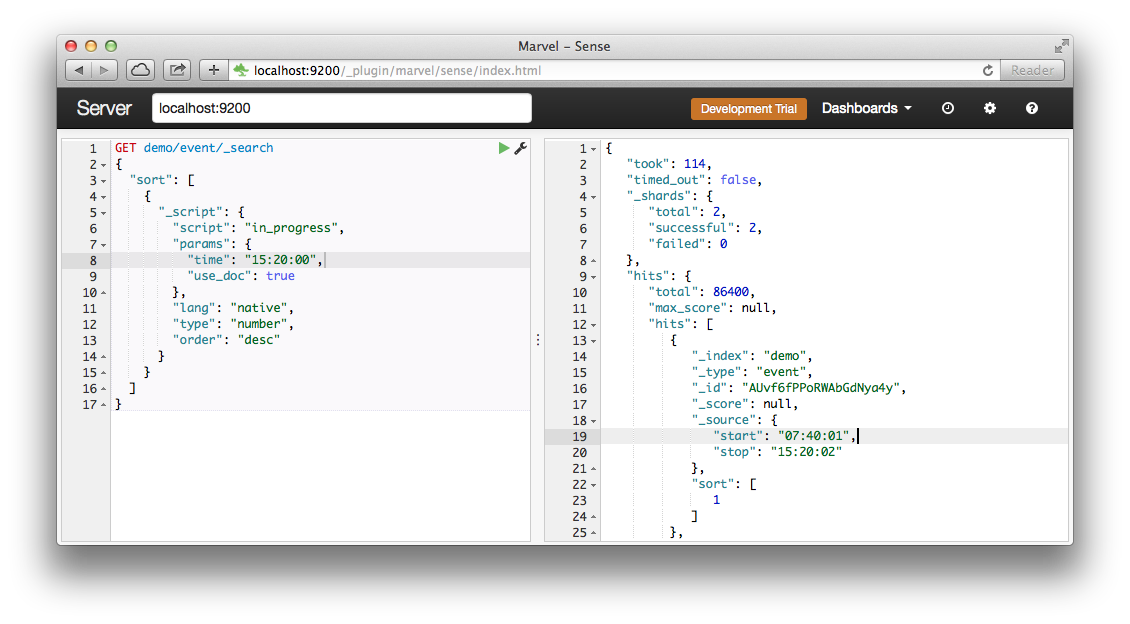

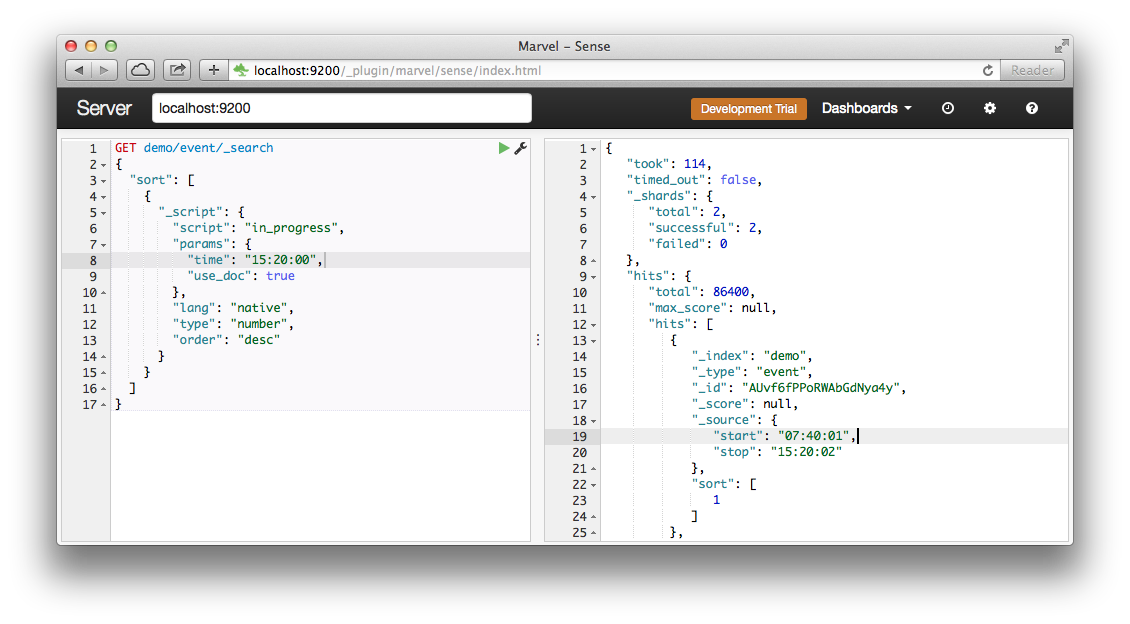

We ask Elasticsearch to sort all events by the result of the in_progress script:

Result:

does not compare in speed with simple sorting (2 ms), but still not bad for a laptop. In addition, scripts run in parallel in different shards and are thus scaled.

As I wrote at the beginning, the script has several methods for accessing the contents of the document. These are source (), fields (), and doc (). Source () is a convenient and slow way. When requested, loading of everythingdocument in HashMap. But then absolutely everything is available. Doc () is access to indexed data, it is much faster, but working with it is a bit more complicated. Firstly, the Nested type is not supported, which imposes restrictions on the structure of the document. Secondly, the indexed data may differ from what is in the document itself, first of all it concerns the lines. As an experiment of the task, you can try to remove the “index”: “not_analyzed” in mapping.json, and see how everything breaks. As for the fields () method, frankly, I have not tried it, judging by the documentation, it is slightly better than source ().

Now try using source () by changing the use_doc parameter to false.

The script from the plugin can be used not only for sorting, but in general in any places where scripts are supported. For example, you can calculate the value for found documents. In this case, by the way, productivity is no longer so important, since the calculations are performed for the filtered and limited set of documents.

That's all, thanks for reading!

GitHub source code: github.com/s12v/elaticsearch-plugin-demo

PS By the way, we really need experienced programmers and system administrators to work on a large project based on AWS / Elasticsearch / Symfony2 in Berlin. If you are suddenly interested - write!

I want to make a reservation right away that the task in this article is greatly simplified so as not to clutter up the code. For example, in one of the real applications, the document stores the full schedule with exceptions, and based on them the script calculates the necessary values. But I would like to focus on the plugin itself, so the example is very simple.

I should note right away that the task in this article is greatly simplified so as not to clutter the code. For example, in one real application the document stores a full schedule with exceptions, and the script calculates the necessary values from them. But I would like to focus on the plugin itself, so the example is very simple. It is also worth mentioning that I am not an Elasticsearch committer: the information presented here was obtained mainly by trial and error and may be incorrect in places.

So, suppose we have an Event document with start and stop properties that store time as a string in the format "HH:MM:SS". The task is to sort events for a given time so that the active events (start <= time <= stop) come first in the output. An example of such a document:

    {
      "start": "09:00:00",
      "stop": "18:30:00"
    }

Plugin

As a basis, I took an example from one of the Elasticsearch developers. The plugin consists of one or more scripts that must be registered:

    public class ExamplePlugin extends AbstractPlugin {
        public void onModule(ScriptModule module) {
            module.registerScript(EventInProgressScript.SCRIPT_NAME, EventInProgressScript.Factory.class);
        }
    }

The script consists of two parts: the NativeScriptFactory factory, and the script itself, which inherits AbstractSearchScript. The factory creates the script and, at the same time, validates its parameters. Note that the script is created only once per search (on each shard), so parameter initialization and processing should happen at this stage.

The client application must pass the parameters to the script:

- time - a string in the format "HH:MM:SS", the point in time we are interested in

- use_doc - determines which method is used to access document data (more on that later)

    public static class Factory implements NativeScriptFactory {
        @Override
        public ExecutableScript newScript(@Nullable Map<String, Object> params) {
            // params may be null, so check it before reading any value
            LocalTime time = params != null && params.containsKey(PARAM_TIME)
                    ? new LocalTime(params.get(PARAM_TIME))
                    : null;
            Boolean useDoc = params != null && params.containsKey(PARAM_USE_DOC)
                    ? (Boolean) params.get(PARAM_USE_DOC)
                    : null;
            // Fail once, at script creation, rather than on every document
            if (time == null || useDoc == null) {
                throw new ScriptException("Parameters \"time\" and \"use_doc\" are required");
            }
            return new EventInProgressScript(time, useDoc);
        }
    }
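The factory above depends on the Elasticsearch and Joda-Time APIs. As a minimal stand-alone sketch of the same one-time validation, assuming java.time.LocalTime in place of Joda-Time and a hypothetical ScriptParams class that is not part of the plugin, the check might look roughly like this:

```java
import java.time.LocalTime;
import java.time.format.DateTimeParseException;
import java.util.Map;

// Hypothetical stand-in for the factory's parameter handling; not part of the plugin.
class ScriptParams {
    static final String PARAM_TIME = "time";
    static final String PARAM_USE_DOC = "use_doc";

    final LocalTime time;
    final boolean useDoc;

    private ScriptParams(LocalTime time, boolean useDoc) {
        this.time = time;
        this.useDoc = useDoc;
    }

    // Validates the raw parameter map once, before any document is processed.
    static ScriptParams parse(Map<String, Object> params) {
        if (params == null) {
            throw new IllegalArgumentException("Parameters \"time\" and \"use_doc\" are required");
        }
        Object rawTime = params.get(PARAM_TIME);
        Object rawUseDoc = params.get(PARAM_USE_DOC);
        if (!(rawTime instanceof String) || !(rawUseDoc instanceof Boolean)) {
            throw new IllegalArgumentException("Parameters \"time\" and \"use_doc\" are required");
        }
        try {
            // LocalTime.parse accepts ISO-8601 local times such as "15:20:00".
            return new ScriptParams(LocalTime.parse((String) rawTime), (Boolean) rawUseDoc);
        } catch (DateTimeParseException e) {
            throw new IllegalArgumentException("Parameter \"time\" must look like HH:MM:SS", e);
        }
    }
}
```

Calling ScriptParams.parse with a map holding "time" and "use_doc" returns the parsed values, while a null map or a malformed time is rejected before any per-document work starts.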

So, the script is created and ready to work. The most important part of the script is the run() method:

    @Override
    public Integer run() {
        Event event = useDoc
                ? parser.getEvent(doc())
                : parser.getEvent(source());
        return event.isInProgress(time)
                ? 1
                : 0;
    }

This method is called for each document, so you should pay special attention to what happens inside it and how fast. This has a direct impact on the performance of the plugin.

In general, the algorithm here is:

- Read the document data we need

- Calculate the result

- Return it to Elasticsearch

To access document data, use one of the methods source(), fields(), or doc(). Looking ahead, I will say that doc() is much faster than source() and should be used whenever possible.

In this example, based on the document, I create a model for further work.

    public class Event {
        public static final String START = "start";
        public static final String STOP = "stop";

        private final LocalTime start;
        private final LocalTime stop;

        public Event(LocalTime start, LocalTime stop) {
            this.start = start;
            this.stop = stop;
        }

        public boolean isInProgress(LocalTime time) {
            return (time.isEqual(start) || time.isAfter(start))
                    && (time.isBefore(stop) || time.isEqual(stop));
        }
    }

(In trivial cases, of course, you can simply use the data from the document and return the result immediately, which would be faster.)
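The parser used in run() is not shown in this article. As a rough sketch of what its source()-based branch could do, assuming the source is a plain Map of field names to values and using java.time.LocalTime rather than Joda-Time, a hypothetical SourceEventParser might read the two fields like this:

```java
import java.time.LocalTime;
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: builds start/stop times from a source()-style map.
class SourceEventParser {
    static final String START = "start";
    static final String STOP = "stop";

    // Returns {start, stop}; fails fast if a field is missing or not a string.
    static LocalTime[] parse(Map<String, Object> source) {
        return new LocalTime[] { readTime(source, START), readTime(source, STOP) };
    }

    private static LocalTime readTime(Map<String, Object> source, String field) {
        Object value = source.get(field);
        if (!(value instanceof String)) {
            throw new IllegalArgumentException("Missing or non-string field: " + field);
        }
        return LocalTime.parse((String) value);
    }

    public static void main(String[] args) {
        Map<String, Object> source = new HashMap<>();
        source.put(START, "09:00:00");
        source.put(STOP, "18:30:00");
        LocalTime[] range = parse(source);
        System.out.println(range[0] + " - " + range[1]);
    }
}
```

The real parser in the plugin may be organized differently; the point is only that the model is built from two string fields on every call, which is why this step is worth keeping cheap.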

The result in our case is "1" for events that are happening now (start <= time <= stop) and "0" for all others. The result type is Integer because Elasticsearch cannot sort by a Boolean.

After processing the script for each document, a value will be determined by which Elasticsearch will sort them. Mission accomplished!
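To make the effect of the 1/0 value concrete, here is a small stand-alone simulation. It is only a sketch: Elasticsearch does the real sorting per shard, the InProgressSortDemo class is hypothetical, and java.time.LocalTime is used instead of Joda-Time.

```java
import java.time.LocalTime;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical stand-alone simulation; Elasticsearch does the real sorting per shard.
class InProgressSortDemo {
    // Same rule as Event.isInProgress: start <= time <= stop, returned as 1 or 0.
    static int score(LocalTime start, LocalTime stop, LocalTime time) {
        return (!time.isBefore(start) && !time.isAfter(stop)) ? 1 : 0;
    }

    // Sorts {start, stop} pairs by score in descending order, so active events come first.
    static List<LocalTime[]> sortByInProgress(List<LocalTime[]> events, LocalTime time) {
        List<LocalTime[]> sorted = new ArrayList<>(events);
        sorted.sort(Comparator.comparingInt((LocalTime[] e) -> score(e[0], e[1], time)).reversed());
        return sorted;
    }

    public static void main(String[] args) {
        LocalTime time = LocalTime.parse("15:20:00");
        List<LocalTime[]> events = Arrays.asList(
                new LocalTime[] { LocalTime.parse("16:00:00"), LocalTime.parse("17:00:00") },
                new LocalTime[] { LocalTime.parse("07:40:01"), LocalTime.parse("15:20:02") });
        for (LocalTime[] e : sortByInProgress(events, time)) {
            System.out.println(e[0] + " - " + e[1] + " -> " + score(e[0], e[1], time));
        }
    }
}
```

Events with the same score keep their relative order here because List.sort is stable; how Elasticsearch orders ties is a separate matter and is not modeled.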

Integration tests

Besides being good on their own, tests are also a great entry point for debugging. It is very convenient to set a breakpoint and run the desired test in the debugger. Without this, debugging the plugin would be very difficult.

The plugin integration testing scheme is approximately as follows:

- Start test cluster

- Create index and mapping

- Add document

- Ask the server to calculate the script value for the given parameters and the document

- Verify that the value is correct.

To start the test server, we use the base class ElasticsearchIntegrationTest. You can configure the number of nodes, shards, and replicas. Read more on GitHub.

There are perhaps two ways to create test documents. The first is to build the document directly in the test; an example can be found here. This option is quite good, and at first I used it. However, the document layout changes, and over time the structure built in the test may no longer correspond to reality. The second way is therefore to store the mapping and data separately as resources. It also means that when live servers return unexpected results, you can simply copy the problem document in as a resource and watch the test fail. In general, either method is fine; the choice is yours.
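For the resource-based approach, the test needs some way to read a JSON file from the classpath into a string. A minimal sketch of such a helper follows; the TestResources class and the "/mapping.json" path are assumptions for illustration, not names taken from the plugin's test code.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical test helper; class name and resource paths are assumptions.
class TestResources {
    // Reads a classpath resource such as "/mapping.json" into a UTF-8 string.
    static String load(String path) throws IOException {
        try (InputStream in = TestResources.class.getResourceAsStream(path)) {
            if (in == null) {
                throw new IOException("Resource not found: " + path);
            }
            return readAll(in);
        }
    }

    // Copies the whole stream into memory and decodes it as UTF-8.
    static String readAll(InputStream in) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buffer = new byte[4096];
        int read;
        while ((read = in.read(buffer)) != -1) {
            out.write(buffer, 0, read);
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }
}
```

The returned string can then be passed to whatever call the test uses to create the mapping or index the document, so a problem document copied from a live server only needs to be dropped into the resources directory.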

To request the result of calculating the script, we will use the standard Java client:

    SearchResponse searchResponse = client()
            .prepareSearch(TEST_INDEX).setTypes(TEST_TYPE)
            .addScriptField(scriptName, "native", scriptName, scriptParams)
            .execute()
            .actionGet();

Integration with Travis-CI

An optional extra is integration with the Travis continuous integration system. Add a .travis.yml file:

    language: java
    jdk:
      - openjdk7
      - oraclejdk7
    script:
      - mvn test

Application

So, the plugin is ready and tested. It's time to try it out.

Installation

You can read about installing plugins in the official documentation. The compiled plugin is located in ./target. To simplify local installation, I wrote a small script that compiles the plugin and installs it:

    mvn clean package
    if [ $? -eq 0 ]; then
        plugin -r plugin-example
        plugin --install plugin-example --url file://`pwd`/`ls target/*.jar | head -n 1`
        echo -e "\033[1;33mPlease restart Elasticsearch!\033[0m"
    fi

The script is written for Mac / brew. On other systems, you may have to correct the path to the plugin executable. On Ubuntu, it is located at /usr/share/elasticsearch/bin/plugin. After installing the plugin, do not forget to restart Elasticsearch.

Test data

A simple test document generator written in Ruby.

    bundle install
    ./generate.rb

Test request

We ask Elasticsearch to sort all events by the result of the in_progress script:

    curl -XGET "http://localhost:9200/demo/event/_search?pretty" -d'
    {
      "sort": [
        {
          "_script": {
            "script": "in_progress",
            "params": {
              "time": "15:20:00",
              "use_doc": true
            },
            "lang": "native",
            "type": "number",
            "order": "desc"
          }
        }
      ],
      "size": 1
    }'

Result:

    {
      "took" : 139,
      "timed_out" : false,
      "_shards" : {
        "total" : 2,
        "successful" : 2,
        "failed" : 0
      },
      "hits" : {
        "total" : 86400,
        "max_score" : null,
        "hits" : [ {
          "_index" : "demo",
          "_type" : "event",
          "_id" : "AUvf6fPPoRWAbGdNya4y",
          "_score" : null,
          "_source":{"start":"07:40:01","stop":"15:20:02"},
          "sort" : [ 1.0 ]
        } ]
      }
    }

The query took 139 ms. That does not compare in speed with simple sorting (2 ms), but it is still not bad for a laptop. In addition, scripts run in parallel on different shards, so the work scales.

source() and doc() methods

As I wrote at the beginning, a script has several methods for accessing the contents of a document: source(), fields(), and doc(). source() is the convenient but slow way: when it is called, the whole document is loaded into a HashMap, but then absolutely everything is available. doc() gives access to the indexed data. It is much faster, but working with it is a bit more complicated. First, the nested type is not supported, which restricts the document structure. Second, the indexed data may differ from what is in the document itself, which mainly affects strings. As an experiment, try removing "index": "not_analyzed" from mapping.json and see how everything breaks. As for fields(), frankly, I have not tried it; judging by the documentation, it is slightly better than source().

Now try using source () by changing the use_doc parameter to false.

Request

    curl -XGET "http://localhost:9200/demo/event/_search?pretty" -d'
    {
      "sort": [
        {
          "_script": {
            "script": "in_progress",
            "params": {
              "time": "15:20:00",
              "use_doc": false
            },
            "lang": "native",
            "type": "number",
            "order": "desc"
          }
        }
      ],
      "size": 1
    }'

Now the query took 587 milliseconds, i.e. about 4 times slower. In a real application with large documents, the difference can be hundreds of times.

Other script uses

The script from the plugin can be used not only for sorting, but anywhere scripts are supported. For example, you can calculate a value for the found documents. In this case, by the way, performance is no longer so important, since the calculations are performed only for the filtered and limited set of documents.

    curl -XGET "http://localhost:9200/demo/event/_search" -d'
    {
      "script_fields": {
        "in_progress": {
          "script": "in_progress",
          "params": {
            "time": "00:00:01",
            "use_doc": true
          },
          "lang": "native"
        }
      },
      "partial_fields": {
        "properties": {
          "include": ["*"]
        }
      },
      "size": 1
    }'

Result

    {
      "took": 2,
      "timed_out": false,
      "_shards": {
        "total": 2,
        "successful": 2,
        "failed": 0
      },
      "hits": {
        "total": 86400,
        "max_score": 1,
        "hits": [
          {
            "_index": "demo",
            "_type": "event",
            "_id": "AUvf6fO9oRWAbGdNyUJi",
            "_score": 1,
            "fields": {
              "in_progress": [
                1
              ],
              "properties": [
                {
                  "stop": "00:00:02",
                  "start": "00:00:01"
                }
              ]
            }
          }
        ]
      }
    }

That's all, thanks for reading!

GitHub source code: github.com/s12v/elaticsearch-plugin-demo

PS By the way, we are looking for experienced programmers and system administrators to work on a large project based on AWS / Elasticsearch / Symfony2 in Berlin. If you are interested, write to me!