The myth that QoS is useless without network congestion

At work I have repeatedly run into the opinion that there is no need to configure QoS in a non-congested Ethernet network for services such as IPTV and VoIP to work properly. This opinion has cost my colleagues and me many nerve cells and many hours spent diagnosing phantom problems, so I will try to explain as simply as possible why it is wrong.

My name is Vladimir, and I work as a network engineer at a small ISP in St. Petersburg.

One of the services we provide is L2VPN for transporting IPTV streams. I will use this service as the running example.

It all starts with a call to technical support from a client operator complaining about IPTV quality: the picture breaks up (“artifacts”), the sound drops out, the usual set. IPTV in our network is classified into the assured forwarding queue, so diagnosis means walking through the devices along the path and checking that there are no drops in the AF queue on egress and no physical errors on ingress. After that we cheerfully report to the client that no losses were found in our area of responsibility, recommend that they look for the problem on their own side or with the IPTV provider, and go drink tea with cookies.

But the client pushes back and keeps insisting that we are to blame and that everything is fine on their side. We check everything again, verify the classifiers and the marking of the client's packets, a dialogue develops, and at some point we ask, “How is QoS configured on your network?” The answer: “It isn't. Our interfaces are not even 50% loaded, so we don't need QoS.” A heavy sigh, and off we go.

Typically, the utilization graph that everyone looks at is averaged over 5-minute intervals. “Real-time” graphs use intervals of a few seconds, 1 second at best. Fortunately or unfortunately, modern network equipment operates on timescales not of 5 minutes, and not even of 1 second, but of picoseconds. The fact that an interface was not 100% loaded when averaged over a second does not mean it was not 100% loaded for several milliseconds within that second.
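To make the averaging effect concrete, here is a small sketch. The 50 ms burst length and the 20% background load are made-up numbers chosen purely for illustration, not measurements from any real network.

```python
def average_utilization(burst_ms, window_ms, background_load=0.0):
    """Average load (0.0-1.0) over a window that contains one line-rate burst.

    burst_ms: how long the interface runs at 100% inside the window
    window_ms: the averaging interval of the graph
    background_load: load during the rest of the window (assumed constant)
    """
    idle_ms = window_ms - burst_ms
    return (burst_ms * 1.0 + idle_ms * background_load) / window_ms


# Hypothetical numbers: a 50 ms line-rate burst once per second, 20% load otherwise.
per_second = average_utilization(burst_ms=50, window_ms=1_000, background_load=0.2)
print(f"1-second graph shows {per_second:.0%}")
```

The interface is saturated for 50 ms of every second, yet the graph stays far below 100%, and a 5-minute average of the same pattern looks exactly as calm.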

Here we arrive at the key concept: the microburst. This is a very short period of time during which the device receives more data than the outgoing interface is able to send.

The usual first reaction is: how can that be?! We live in the era of high-speed interfaces! 10 Gb/s is already commonplace, 40 and 100 Gb/s are being deployed everywhere, and we are already waiting for 1 Tb/s interfaces.

In fact, the faster the interfaces, the harsher the microbursts and their effect on the network.

The mechanism is very simple. I will illustrate it with three 1 Gb/s interfaces, where traffic from two of them leaves through the third.

This is the only condition needed for a microburst to occur: the combined speed of the incoming (ingress) interfaces exceeds the speed of the outgoing (egress) interface. Does it remind you of anything? This is the traditional aggregation layer design in an Ethernet network: many ports (ingress) funnel traffic into one uplink (egress). Practically every network is built this way, from telecom operators to data centers.

Each egress interface has a tx-ring transmit queue, which is a ring buffer. Packets are placed there to be sent onto the wire, and of course this buffer has a finite size. But the interfaces on the sending side have the same ring buffers and can send at the same line rate. What happens if they start sending traffic at the same time? Our egress interface runs out of space in its tx-ring, because it fills twice as fast as it can send packets. The remaining packets have to be stored somewhere. In general that is another buffer, which we call a queue. While there is no room in the tx-ring, a packet sits in the queue and waits for a free slot. The trouble is that the queue's memory is finite too. What happens if the ingress interfaces run at line rate long enough? The queue memory runs out as well. At that point a new packet has nowhere to be stored and is discarded. This situation is called tail drop.
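The mechanism can be sketched as a toy simulation. This is a simplified model, not how any particular device implements its buffers: time is split into abstract ticks, the tx-ring and the queue are merged into one FIFO, and the numbers (2 packets in, 1 packet out, room for 10) are assumptions picked for readability.

```python
def simulate_tail_drop(arrivals_per_tick, drain_per_tick, queue_limit):
    """Simulate a FIFO egress queue with tail drop.

    arrivals_per_tick: packets arriving in each time slot
    drain_per_tick: packets the egress interface can send per slot
    queue_limit: maximum number of packets the queue can hold
    Returns (sent, dropped, still_queued).
    """
    queued = sent = dropped = 0
    for arriving in arrivals_per_tick:
        # Enqueue whatever fits; everything else is tail-dropped.
        accepted = min(arriving, queue_limit - queued)
        dropped += arriving - accepted
        queued += accepted
        # The egress interface drains the queue at its own line rate.
        out = min(queued, drain_per_tick)
        queued -= out
        sent += out
    return sent, dropped, queued


# Two ingress ports at line rate into one egress port of the same speed.
burst = simulate_tail_drop([2] * 30, drain_per_tick=1, queue_limit=10)
# The same egress port fed at exactly its own rate.
steady = simulate_tail_drop([1] * 30, drain_per_tick=1, queue_limit=10)
print(burst, steady)
```

In the burst case the queue absorbs the first few ticks and then drops one packet per tick for as long as the burst lasts. In the steady case nothing is ever dropped.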

How long does it take for this scenario to become reality? Let's do the math.

The hardest part is finding out the capacity of the interface buffer. Vendors are not eager to publish this information. But let's take, for example, a depth of 200 ms: holding a packet in a queue any longer usually makes no sense, and even this is a lot.

For a 1 Gb/s interface we need (1,000,000,000 * 0.2) / 8 = 25 MB of memory. How long must two 1 Gb/s interfaces run at line rate to fill the buffer completely? 200 ms. That is the time it takes to transmit 25 MB at 1 Gb/s. Yes, we have two ingress interfaces, but the egress interface is not sitting idle either: it sends data at 1 Gb/s, so the queue grows at a net 1 Gb/s, hence 200 ms.

That is a relatively large number. What about a 10 Gb/s ingress interface: how long does it need to overflow the 200 ms buffer of a 1 Gb/s interface? About 22 ms, because the queue now grows at a net 9 Gb/s. That is already significantly less.

And how much memory is needed to hold 200 ms for a 10 Gb/s interface? 250 MB already. That is not much by modern standards, but this is where the wind is blowing: speeds keep increasing, maintaining the same buffer depth takes more and more memory, which turns into engineering and economic problems, and the smaller the buffer, the faster a microburst fills it.
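The arithmetic above fits into two small helpers. The function names are mine and purely illustrative. The model assumes the ingress side runs at a constant line rate while the egress side drains at its own line rate, so the queue grows at the difference between the two.

```python
GBIT = 1_000_000_000  # bits per second


def buffer_bytes(egress_bps, depth_ms):
    """Memory needed to hold depth_ms worth of traffic at egress_bps."""
    return egress_bps * depth_ms // 8_000  # /1000 for ms, /8 for bits -> bytes


def fill_time_ms(buffer_size_bytes, ingress_bps, egress_bps):
    """Time for an empty buffer to overflow while ingress exceeds egress."""
    net_bps = ingress_bps - egress_bps  # the queue grows at the net rate
    if net_bps <= 0:
        return float("inf")  # the egress keeps up, the buffer never fills
    return buffer_size_bytes * 8 * 1_000 / net_bps


buf_1g = buffer_bytes(1 * GBIT, depth_ms=200)    # 200 ms buffer on a 1 Gb/s port
buf_10g = buffer_bytes(10 * GBIT, depth_ms=200)  # 200 ms buffer on a 10 Gb/s port

two_by_1g = fill_time_ms(buf_1g, 2 * GBIT, 1 * GBIT)   # two 1 Gb/s ingress ports
one_10g = fill_time_ms(buf_1g, 10 * GBIT, 1 * GBIT)    # one 10 Gb/s ingress port

print(buf_1g, buf_10g, two_by_1g, round(one_10g, 1))
```

Plugging in other port speeds or buffer depths reproduces the "other scenarios" without redoing the math by hand.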

This leaves vendor engineers with an eternal question: how much memory should the hardware give an interface? Too much is expensive, and each additional millisecond of buffering is worth less and less. Too little, and microbursts cause heavy packet loss and customer complaints.

You can run the numbers for other scenarios yourself, but the result is always the same: the queue is completely full and tail drops occur, while the graph is nowhere near the interface's ceiling at any averaging interval, be it 5 minutes or 1 second.

In packet networks this situation is unavoidable: an interface runs at line rate for less than a second, and there are already losses. The only way to avoid it is to build the network so that ingress speed never exceeds egress speed, which is impractical and unrealistic.

The rest of the logic is easy to follow. Packets are being lost, but QoS is not configured: priority traffic is not classified, is indistinguishable from other traffic, and lands in a single shared queue, where it has the same chance of being dropped as everything else.

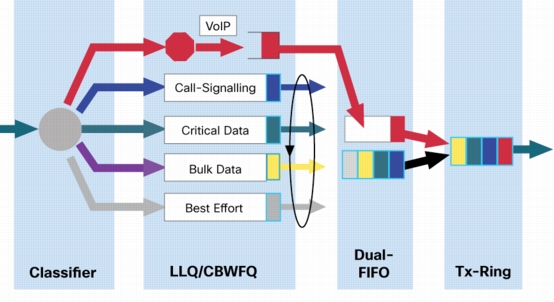

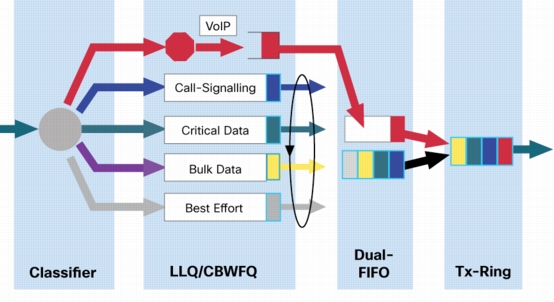

What to do? Configure QoS. Be sure to classify priority traffic and place it in a separate queue with a generous memory allocation. Configure the scheduling algorithms so that priority packets reach the tx-ring ahead of the others, so that their queue drains faster.
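The difference between one shared queue and a separate priority queue can be shown with another toy model. It is a sketch under loud assumptions: strict-priority scheduling, one packet sent per tick, the same total memory (8 packets) in both setups, and a hypothetical traffic mix in which an IPTV packet arrives every other tick on top of a constant bulk burst. Real schedulers and real traffic are more nuanced.

```python
from collections import deque


def simulate(burst, queue_limits, classify):
    """Egress port sending one packet per tick from strict-priority queues.

    burst: list of ticks, each a list of packet labels arriving in that tick
    queue_limits: queue name -> max packets, listed from highest priority down
    classify: function mapping a packet label to a queue name
    Returns a dict: packet label -> number of tail-dropped packets.
    """
    queues = {name: deque() for name in queue_limits}
    dropped = {}
    for arriving in burst:
        for packet in arriving:
            name = classify(packet)
            if len(queues[name]) < queue_limits[name]:
                queues[name].append(packet)
            else:
                dropped[packet] = dropped.get(packet, 0) + 1  # tail drop
        # Strict priority: serve the first non-empty queue in priority order.
        for queue in queues.values():
            if queue:
                queue.popleft()
                break
    return dropped


# Hypothetical 20-tick microburst: bulk every tick, IPTV on even ticks only.
burst = [["bulk", "iptv", "bulk"] if t % 2 == 0 else ["bulk", "bulk"]
         for t in range(20)]

no_qos = simulate(burst, {"all": 8}, lambda p: "all")
with_qos = simulate(burst, {"priority": 4, "general": 4},
                    lambda p: "priority" if p == "iptv" else "general")
print("no QoS:", no_qos, "| with QoS:", with_qos)
```

With the same amount of memory and the same burst, the shared queue loses IPTV packets along with the bulk traffic, while the classified setup pushes all of the loss onto the bulk traffic and delivers every IPTV packet.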

For example, in our practice we take the following approach to queues:

Assured forwarding (AF): “hold but deliver”. Traffic that requires guaranteed delivery but is not sensitive to delay is classified into the AF queue. This queue gets a large amount of memory but relatively little space in the tx-ring, and its packets get there later than others. A typical example is IPTV: it is buffered on the client (VLC or an STB), so it can be delayed, but a loss turns into a picture artifact.

Expedited forwarding (EF): “deliver immediately or discard”. This queue gets minimal memory (or none at all) but the highest priority for entering the tx-ring, so that a packet is sent as quickly as possible. A typical example is VoIP. Voice cannot be delivered late, otherwise the telephony codec cannot reassemble it correctly and the subscriber hears garbled audio. At the same time, losing individual packets does not affect overall voice quality much, since human hearing is far from perfect anyway.

There are also network control (NC) and best effort (BE), for network management traffic and everything else respectively. Traffic can also be, for example, videoconferencing, which is a hybrid of VoIP and IPTV, but that is a completely separate topic; QoS for such traffic has to be configured individually in each network, depending on the topology and other factors. All together, in general, it looks something like this (picture from the Cisco website):

I hope you will now configure QoS on your network.
