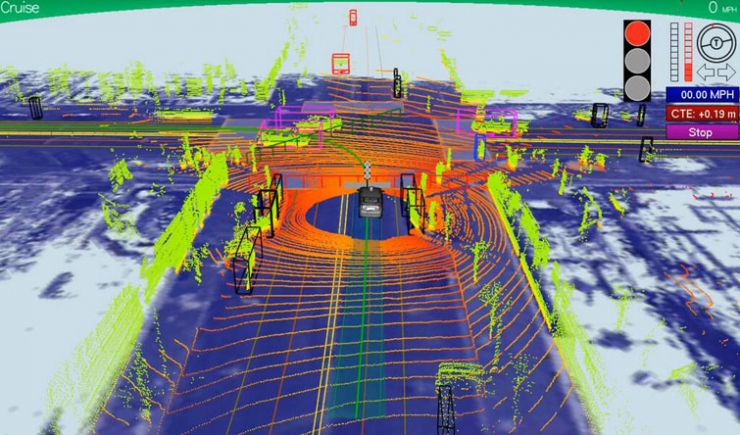

How do Google robots see the world?

I remember that "Terminator 2", that installment in particular, made a huge impression on me. I especially liked the shots where the T-800 analyzed the world around it (the scene where it takes the clothes and the motorcycle, for example). It turns out that real robots today are not so different, though of course they are not as advanced as the T-800. The other day I came across a video presenting the “vision of the world” of Google’s robotic cars.

These cars use a large number of sensors to analyze their surroundings, including lasers, radars and more. It is worth noting that the car collects about a gigabyte of data per second and analyzes this information on its own, and quickly enough to act on it. When an obstacle appears, the car reacts almost instantly, which shows how fast its control system is.

Here is the video with a demonstration of the system:

And here is a more detailed presentation:

Via Bill_Gross + Dvice