Overview of caching systems in onPHP

Hello!

In this article we'll describe how we work with caches at plus1.wapstart.ru, what problems we ran into, and how we solved some special cases.

Let's start with terminology.

In this article, a “cache” means any fast storage that can be used for caching, among other things, provided it exposes a standardized interface.

A server (or storage) is an application that stores data and provides access to it through the interface described below. Memcached is one example of such an application.

We use the onPHP framework. It has an abstract CachePeer class that every cache implementation must extend. The interface of any implementation boils down to the following methods:

```php
abstract public function get($key);

abstract public function delete($key);

abstract public function increment($key, $value);

abstract public function decrement($key, $value);

abstract protected function store(
    $action, $key, $value, $expires = Cache::EXPIRES_MEDIUM
);

abstract public function append($key, $data);
```
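To make the contract concrete, here is a minimal in-memory sketch with the same method set. It is not onPHP code: the class name ArrayPeer, the 'set'/'add'/'replace' action names (borrowed from memcached's storage commands) and the 3600-second default TTL standing in for Cache::EXPIRES_MEDIUM are all assumptions of this sketch.

```php
<?php
// Hypothetical in-memory peer with the same method set as CachePeer.
// Assumptions: action names mirror memcached's set/add/replace commands,
// and 3600 seconds stands in for Cache::EXPIRES_MEDIUM.
class ArrayPeer
{
    private $data = []; // key => [value, expiresAt]

    public function get($key)
    {
        if (!isset($this->data[$key])) {
            return null;
        }
        if ($this->data[$key][1] < time()) {
            unset($this->data[$key]); // lazily drop expired entries
            return null;
        }
        return $this->data[$key][0];
    }

    public function set($key, $value, $expires = 3600)
    {
        return $this->store('set', $key, $value, $expires);
    }

    public function add($key, $value, $expires = 3600)
    {
        return $this->store('add', $key, $value, $expires);
    }

    public function replace($key, $value, $expires = 3600)
    {
        return $this->store('replace', $key, $value, $expires);
    }

    public function delete($key)
    {
        $existed = $this->get($key) !== null;
        unset($this->data[$key]);
        return $existed;
    }

    public function increment($key, $value)
    {
        $current = $this->get($key);
        if ($current === null) {
            return false; // like memcached, only existing keys can change
        }
        // decrement() reuses this path; memcached's decr stops at zero.
        $this->data[$key][0] = max(0, $current + $value);
        return $this->data[$key][0];
    }

    public function decrement($key, $value)
    {
        return $this->increment($key, -$value);
    }

    public function append($key, $data)
    {
        $current = $this->get($key);
        if ($current === null) {
            return false; // memcached's append also needs an existing key
        }
        $this->data[$key][0] = $current . $data;
        return true;
    }

    protected function store($action, $key, $value, $expires)
    {
        $exists = $this->get($key) !== null;
        if (($action === 'add' && $exists) || ($action === 'replace' && !$exists)) {
            return false;
        }
        $this->data[$key] = [$value, time() + $expires];
        return true;
    }
}
```

The public set/add/replace wrappers funnelling into the single protected store() are our guess at how the pieces fit together; a real subclass would talk to a server instead of an array.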

The CachePeer implementations that exist in our world are shown in a diagram.

It includes both storage implementations (see the Bridge pattern) and various decorators that solve particular problems.

onPHP supports Redis; Memcached, with two separate implementations (one over raw sockets and one using the Memcache extension, http://php.net/Memcache); and SharedMemory. If none of these is available in the installation, the application's own memory is used.

Despite the variety of supported technologies, I don't know of a single onPHP project that uses anything other than Memcached.

Memcached rules this world. :)

We have two memcached implementations for the following reasons:

- When Memcached (the socket-based one) was written, the Memcache extension did not exist yet. Yes, we know about the name clash with http://php.net/Memcached; it has already been fixed in master.
- The socket-based connection is available even when you have no access to the PHP settings and cannot install the required extensions.

Almost everywhere we use PeclMemcached, which connects to the server through the Memcache extension. All else being equal, it is faster, and it also supports pconnect (persistent connections).

Things somehow didn't work out with the alternative library (Memcached). I tried writing an implementation for it, but at the time (about two years ago) the library was not very stable.

About decorators:

When more than one project shares a single caching system, you should use WatermarkedPeer. Its essence is the getActualWatermark() method. For example, the get implementation becomes:

```php
public function get($key)
{
    return $this->peer->get($this->getActualWatermark().$key);
}
```

This avoids key collisions: data from different projects, classes, etc. is stored under different keys. Otherwise, this cache is an ordinary decorator around some storage implementation.
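A minimal sketch of the same decorator idea, assuming a hypothetical array-backed MemoryStore in place of a real storage. PrefixingPeer and its constructor arguments are illustrative names, not WatermarkedPeer's actual API; only get, set and delete are shown.

```php
<?php
// Hypothetical stand-in for a real storage peer.
class MemoryStore
{
    private $data = [];
    public function get($key) { return $this->data[$key] ?? null; }
    public function set($key, $value) { $this->data[$key] = $value; return true; }
    public function delete($key) { unset($this->data[$key]); return true; }
}

// Hypothetical decorator playing the role of WatermarkedPeer.
class PrefixingPeer
{
    private $peer;
    private $watermark;

    public function __construct($peer, $watermark)
    {
        $this->peer = $peer;
        $this->watermark = $watermark;
    }

    // Same role as getActualWatermark(): this project's key namespace.
    protected function getActualWatermark()
    {
        return $this->watermark;
    }

    public function get($key)
    {
        return $this->peer->get($this->getActualWatermark() . $key);
    }

    public function set($key, $value)
    {
        return $this->peer->set($this->getActualWatermark() . $key, $value);
    }

    public function delete($key)
    {
        return $this->peer->delete($this->getActualWatermark() . $key);
    }
}

// Two projects share one storage but never see each other's keys.
$shared = new MemoryStore();
$projectA = new PrefixingPeer($shared, 'projectA:');
$projectB = new PrefixingPeer($shared, 'projectB:');
$projectA->set('user:1', 'Alice');
$projectB->set('user:1', 'Bob');
```

The watermarks end with a colon on purpose: with plain concatenation, watermarks such as 'a' and 'ab' could otherwise yield the same final key ('ab' . 'c' equals 'a' . 'bc').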

If you need to distribute data across several caching systems, you can either use the memcache clustering that ships with PHP or take one of our aggregate caches. We have several of them:

- AggregateCache picks the server for a key from the value of mt_rand(), after seeding the generator with mt_srand() based on that key. It sounds weird, but it's actually very simple.

- SimpleAggregateCache is as simple as a brick: the server is chosen by the remainder of dividing the numeric representation of the key by the number of servers.
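Both selection rules can be sketched as plain functions that only decide which server gets a key. This is an illustration, not onPHP's code: the function names, crc32() as the numeric representation of the key, and the weighted draw in pickBySeededRandom() are assumptions.

```php
<?php
// Hypothetical helpers that only decide WHICH server gets a key.
// crc32() as the "numeric representation of the key" is an assumption
// (on 64-bit PHP it returns a non-negative integer).

// SimpleAggregateCache idea: numeric key modulo the number of servers.
function pickByModulo(string $key, array $servers): string
{
    return $servers[crc32($key) % count($servers)];
}

// AggregateCache idea: seed the generator from the key, then draw a
// weighted number. Same key -> same seed -> same draw -> same server.
function pickBySeededRandom(string $key, array $weights): string
{
    mt_srand(crc32($key));
    $point = mt_rand(1, array_sum($weights));
    mt_srand(); // reseed randomly so the rest of the app is not affected

    foreach ($weights as $server => $weight) {
        $point -= $weight;
        if ($point <= 0) {
            return $server;
        }
    }
    throw new LogicException('weights must be positive integers');
}

$servers = ['mc1', 'mc2', 'mc3'];
$weights = ['mc1' => 1, 'mc2' => 3]; // mc2 gets roughly three times the keys
```

Both schemes share a weakness: once the number of servers (or the total weight) changes, most keys land on a different server. With the modulo rule, going from N to N + 1 servers keeps only about 1/(N + 1) of the keys in place, which is exactly what the ring-based approach below avoids.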

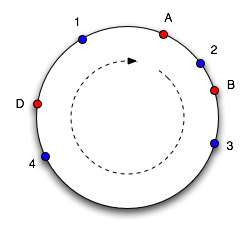

CyclicAggregateCache is an implementation whose idea we borrowed from last.fm. It is very elegant. We take a circle and place the servers' "mount points" on it.


The number of points for each server is proportional to its weight. When a request arrives, the key is also mapped to a point on the circle, and it is handled by the server whose point is closest to the key's point.

The advantage of this approach is that adding a server to the pool invalidates only part of the values, not all of them. Likewise, when a server is removed from the pool, only the values stored on it are lost, and the remaining servers take over its load more or less evenly. You can read more about the idea behind the algorithm here or here.
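A compact sketch of such a ring, under stated assumptions: the class name HashRing, crc32() as the hash, and 100 points per unit of weight are hypothetical choices. One deliberate simplification: instead of the nearest point in either direction, the key goes to the next point clockwise, which is how ketama-style rings usually work. The lookup is also a linear scan where a real implementation would use binary search.

```php
<?php
// Hypothetical consistent-hashing ring in the spirit of CyclicAggregateCache.
// Assumptions: crc32 as the hash, 100 points per unit of weight,
// "next point clockwise" as the lookup rule.
class HashRing
{
    private $points = []; // position on the circle => server name

    public function addServer(string $server, int $weight = 1): void
    {
        // More weight -> more mount points -> larger share of keys.
        for ($i = 0; $i < 100 * $weight; $i++) {
            $this->points[crc32($server . '#' . $i)] = $server;
        }
        ksort($this->points);
    }

    public function removeServer(string $server): void
    {
        // Only this server's points disappear; the others stay put.
        $this->points = array_filter(
            $this->points,
            function ($name) use ($server) { return $name !== $server; }
        );
    }

    public function serverFor(string $key): string
    {
        $hash = crc32($key);
        // First mount point at or after the key's position...
        foreach ($this->points as $position => $server) {
            if ($position >= $hash) {
                return $server;
            }
        }
        // ...or wrap around to the start of the circle.
        return reset($this->points);
    }
}

$ring = new HashRing();
$ring->addServer('mc1');
$ring->addServer('mc2', 2); // twice the weight, twice the points
$ring->addServer('mc3');
$owner = $ring->serverFor('user:42');
```

Removing a server deletes only its own points, so a key that previously mapped to mc1 or mc2 still finds the same next point afterwards; only the keys that lived on the removed server move elsewhere.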

This is where the more or less standard part ends. For most applications, this set of implementations is enough to build a decent caching system.

Next come the special cases.

- DebugCachePeer - the name is so self-explanatory that I see no point in describing it.

- ReadOnlyPeer - some caches can only be read from, not written to. For example, they may be filled from somewhere else, or even be implemented differently, like our fish. For such storages it makes sense to use ReadOnlyPeer, because it guarantees on the application side that the data is only read, never written or updated.

- CascadeCache - suppose you have a fast, lightly loaded local cache (on a unix socket, for example) and a remote cache that is also pre-populated. If your application can tolerate slightly stale data, you can use CascadeCache. It performs reads against the local cache, and if the data is not there, requests it from the remote cache. For "negative" results (null) you can choose one of two strategies: they are either stored in the local cache or ignored.

- MultiCachePeer - suppose you have a cache prefill, and it should be able to fill the "local" caches on ten or so servers. In other words, we want to write data in one place and, in general, read it from another. At the same time, every application server should have the same configuration, for ease of deployment. To do this, you can use MultiCachePeer with a config like this:

```php
MultiCachePeer::create(
    PeclMemcached::create('localhost', 11211),
    array(
        PeclMemcached::create('meinherzbrennt', 11211),
        PeclMemcached::create('links234', 11211),
        PeclMemcached::create('sonne', 11211),
        PeclMemcached::create('ichwill', 11211),
        PeclMemcached::create('feuerfrei', 11211),
        PeclMemcached::create('mutter', 11211),
        PeclMemcached::create('spieluhr', 11211)
    )
);
```

- SequentialCache - imagine you have a storage that sometimes crashes or is simply unavailable: it may occasionally be taken out of service, restarted, and so on. Yet the application always wants to get its data. To cover this situation, you can use SequentialCache with a config like this:

```php
$cache = new SequentialCache(
    PeclMemcached::create('master', 11211, 0.1), // the third constructor parameter is the timeout
    array(
        PeclMemcached::create('backup', 11211, 0.1),
    )
);
```
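The MultiCachePeer item above can be illustrated with a small in-memory sketch. FanOutPeer and MemoryStore are hypothetical names, and the split we assume (get goes to the single read peer, set and delete go to every peer in the list) is our reading of the description, not the onPHP source.

```php
<?php
// Hypothetical stand-in for one server's local memcache.
class MemoryStore
{
    private $data = [];
    public function get($key) { return $this->data[$key] ?? null; }
    public function set($key, $value) { $this->data[$key] = $value; return true; }
    public function delete($key) { unset($this->data[$key]); return true; }
}

// Hypothetical sketch of the MultiCachePeer idea: read from one peer,
// copy every write to a whole list of peers.
class FanOutPeer
{
    private $reader;
    private $writers;

    public function __construct($reader, array $writers)
    {
        $this->reader = $reader;
        $this->writers = $writers;
    }

    public function get($key)
    {
        return $this->reader->get($key);
    }

    public function set($key, $value)
    {
        $ok = true;
        foreach ($this->writers as $writer) {
            // Each server's "local" cache receives its own copy.
            $ok = $writer->set($key, $value) && $ok;
        }
        return $ok;
    }

    public function delete($key)
    {
        $ok = true;
        foreach ($this->writers as $writer) {
            $ok = $writer->delete($key) && $ok;
        }
        return $ok;
    }
}

// Three application servers, each with its own local cache.
$caches = [
    'sonne' => new MemoryStore(),
    'mutter' => new MemoryStore(),
    'spieluhr' => new MemoryStore(),
];

// The prefill job writes once...
$prefill = new FanOutPeer($caches['sonne'], $caches);
$prefill->set('banner:7', '<div>ad</div>');

// ...and the application on "mutter" reads its own local copy.
$appOnMutter = new FanOutPeer($caches['mutter'], $caches);
```

With this split every application server can share one config: the read peer is always its own localhost, and the writer list names all servers.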

Since almost all implementations use the Decorator pattern, they combine quite well.

For example, the following construction is valid:

```php
$swordfish =
    ReadOnlyPeer::create(
        new SequentialCache(
            PeclMemcached::create('localhost', 9898, 0.1),
            array(
                PeclMemcached::create('backup', 9898, 0.1),
            )
        )
    );
```

Or even this:

```php
$swordfish =
    CascadeCache::create(
        PeclMemcached::create('unix:///var/run/memcached_sock/memcached.sock', 0),
        ReadOnlyPeer::create(
            new SequentialCache(
                PeclMemcached::create('localhost', 9898, 0.1),
                array(
                    PeclMemcached::create('backup', 9898, 0.1),
                )
            )
        ),
        CascadeCache::NEGATIVE_CACHE_OFF
    );
```

Here the data is first looked up in the local memcache reachable via a unix socket. If it is not there, the memcache at localhost:9898 is queried, and if that one is unavailable, backup:9898. At the same time, the application knows that the caches on port 9898 can only be read, not written. As the NEGATIVE_CACHE_OFF flag suggests, null results from the remote caches are not stored in the local cache.
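The lookup order just described can be modelled with four small stand-in classes composed the same way as $swordfish. All names are hypothetical, not onPHP's API: MemoryStore plays a memcached instance, FirstAlivePeer plays SequentialCache (the first live peer answers), ReadOnlyDecorator plays ReadOnlyPeer (here writes throw, which may differ from the real class), and TwoLevelCache plays CascadeCache, with a string marker standing in for however negative results are really stored.

```php
<?php
// Hypothetical stand-in for a memcached instance that can "go down".
class MemoryStore
{
    public $alive = true;
    public $reads = 0;
    private $data;

    public function __construct(array $data = []) { $this->data = $data; }
    public function isAlive() { return $this->alive; }
    public function get($key) { $this->reads++; return $this->data[$key] ?? null; }
    public function set($key, $value) { $this->data[$key] = $value; return true; }
}

// SequentialCache stand-in: the first live peer's answer is final, even a miss.
class FirstAlivePeer
{
    private $peers;
    public function __construct($master, array $backups) { $this->peers = array_merge([$master], $backups); }
    public function get($key)
    {
        foreach ($this->peers as $peer) {
            if ($peer->isAlive()) {
                return $peer->get($key);
            }
        }
        return null; // nobody answered: treat it as a miss
    }
}

// ReadOnlyPeer stand-in: reads pass through, writes are refused.
class ReadOnlyDecorator
{
    private $peer;
    public function __construct($peer) { $this->peer = $peer; }
    public function get($key) { return $this->peer->get($key); }
    public function set($key, $value) { throw new LogicException('read-only cache'); }
}

// CascadeCache stand-in: local first, then remote; hits are copied locally.
class TwoLevelCache
{
    const NEGATIVE = '__negative__'; // hypothetical marker for a cached null

    private $local;
    private $remote;
    private $cacheNegatives;

    public function __construct($local, $remote, $cacheNegatives)
    {
        $this->local = $local;
        $this->remote = $remote;
        $this->cacheNegatives = $cacheNegatives;
    }

    public function get($key)
    {
        $value = $this->local->get($key);
        if ($value === self::NEGATIVE) {
            return null; // the remote side already said "no such key"
        }
        if ($value !== null) {
            return $value; // fast path: local hit
        }
        $value = $this->remote->get($key);
        if ($value !== null) {
            $this->local->set($key, $value);
        } elseif ($this->cacheNegatives) {
            $this->local->set($key, self::NEGATIVE);
        }
        return $value;
    }
}

// Same shape as the $swordfish config, with negative caching off.
$local = new MemoryStore();
$master = new MemoryStore(['fish:1' => 'tuna']);
$backup = new MemoryStore(['fish:1' => 'tuna']);
$swordfish = new TwoLevelCache(
    $local,
    new ReadOnlyDecorator(new FirstAlivePeer($master, [$backup])),
    false
);

$master->alive = false;              // e.g. the master is being restarted
$first = $swordfish->get('fish:1');  // local miss, the backup answers
$second = $swordfish->get('fish:1'); // now served from the local copy
```

With negative caching off, every miss still travels to the remote side; switching the last constructor argument to true would make repeated misses stay local after the first one.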

The capabilities of onPHP caches don't end there: you can build entirely different configurations to fit your tasks. CachePeer in onPHP is cool.

P.S. Some time ago there was talk here about a series of articles on onPHP. The series was started by this post. In future posts we will cover other topics related to the framework and how we use it at plus1.wapstart.ru.

P.P.S. I'd like to take this opportunity to mention that we are hiring:

hantim.ru/jobs/11163-veduschiy-qa-menedzher-rukovoditel-otdela-testirovaniya

hantim.ru/jobs/11111-veduschiy-php-razrabotchik-team-leader