When 2 x 3 = 2, or once again about data virtualization

Hello! Have you heard anything about the Baader-Meinhof phenomenon ? This is a funny cognitive distortion, to observe which, as it turned out, is quite interesting by example. In 2016, a review article about Delphix technology was published on Habré . Like any good theory that you consume daily in tons, to be aware of, you completely forget about 80 percent, without applying it in practice. It happened to me too - I rather quickly forgot about that post and Delphix, until about a year ago, as a matter of duty, I did not encounter the authors of the product and the product itself. Having the opportunity to study the topic not in theory, but in practice, LANIT plunged into this technology so deeply that in this article I would like to systematize the knowledge gained and analyze the experience gained.

The picture is kindly provided by Yandex search results.

The picture is kindly provided by Yandex search results.

Delphix is software running on a virtual machine with the Solaris OS, to which you need to connect the storage system to store copies of the database to complete the work. Delivered software in the form of a ready virtual machine image. The image can be deployed on VmWare, or in the AWS / Azure cloud infrastructure. For tests, in principle, it can be raised locally at the workstation.

If it is very generalized and very briefly expounded, Delphix virtualizes data and provides ready-made databases with which to work. He takes a copy of the source database (called dSource) and puts it in his stack (a local VM partition that is connected to an external storage system). Using algorithms, it reduces the volume of this copy to 60% (depending on the data types, of course). Then from the same copy in a few minutes you can deploy this database to other hosts. Such databases are called Virtual Database - VDB. Database files are mounted to target hosts via NFS, and therefore do not occupy space on them. That is, having 1 dSource for 500 GB and 5 VDB, the total occupied space for this case on the storage system will be about 350 GB (or so).

After the initialization and initial loading of the source database in Delphix, the Delphix engine maintains constant synchronization with this database based on the policy you have chosen, for example, synchronization daily or every hour, or some time after the transactions.

The speed of creating the first copy of the database directly depends on the network bandwidth between the source database and Delphix, since the backup is transmitted over the network.

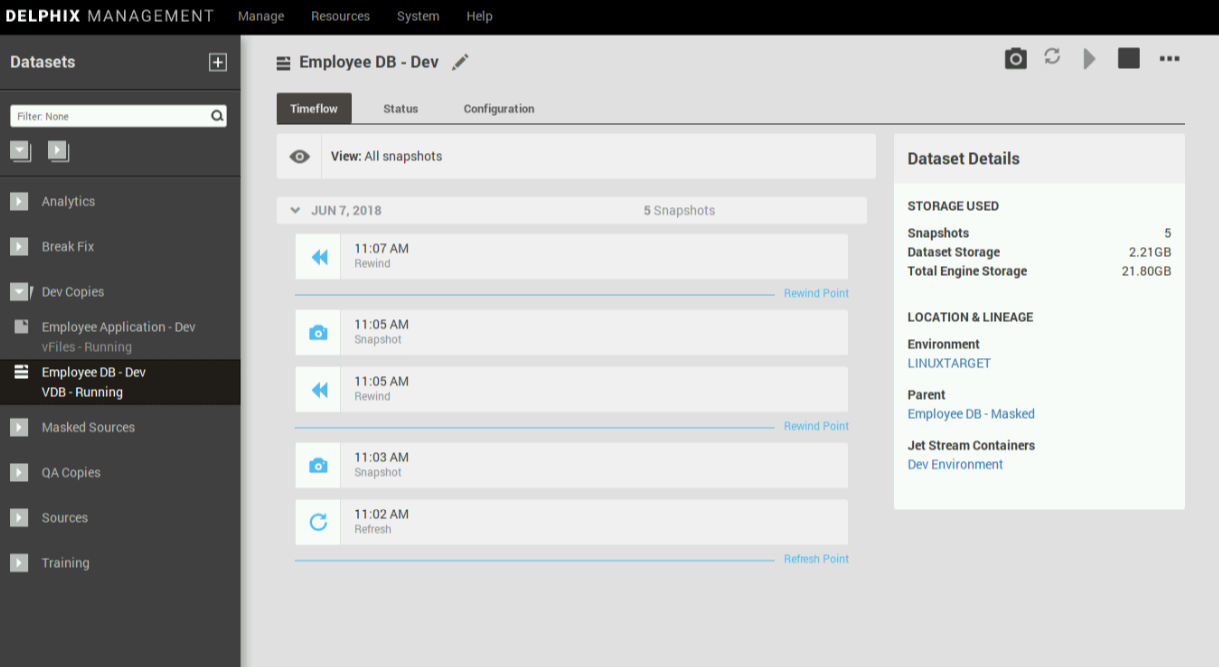

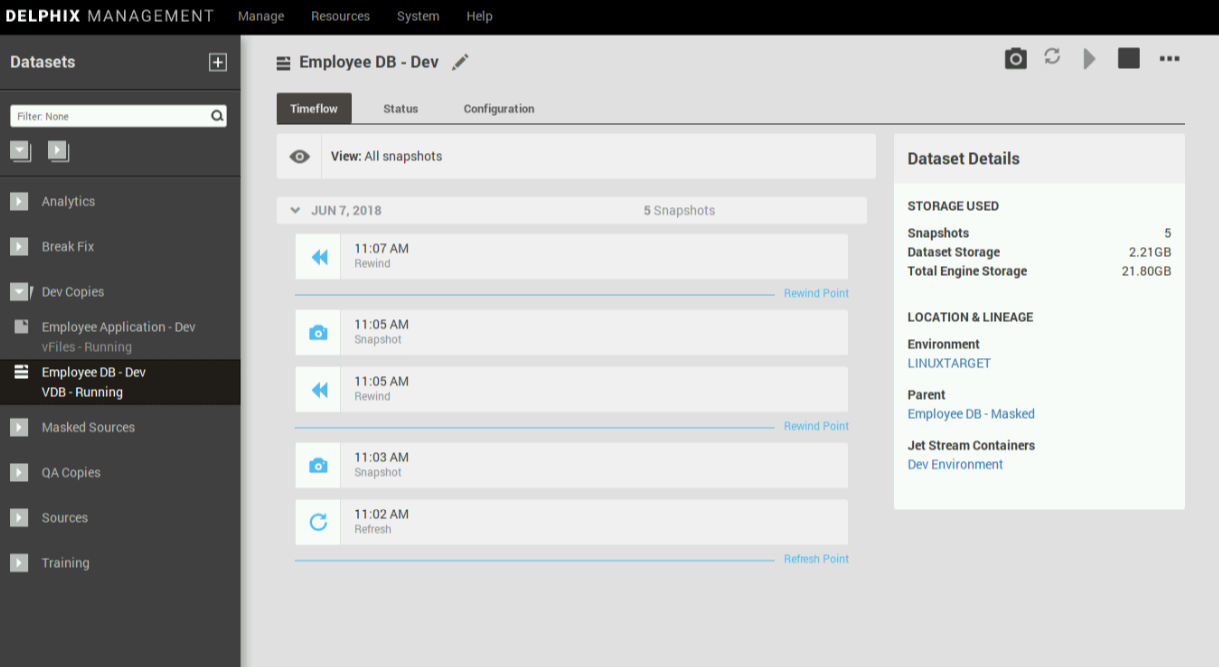

After binding to the source database, Delphix supports the so-called TimeFlow (time machine) source database - a functionality similar to version control. Any version of the database within this time interval can be connected to the target database. What for? For example, to investigate incidents.

Figure 1: Ability to deploy VDB at any time ...

Figure 1: Ability to deploy VDB at any time ...

Figure 2: ... or manually created snapshot of the

Figure 2: ... or manually created snapshot of the

VDB database are on common storage, so neither users nor admins need additional storage resources.

You can quickly connect any version of this VDB to the target database. VDBs are independent of each other and are in Read-Write mode. New changes that are made to VDB are written to new blocks in the Delphix store.

You can create a VDB based on another VDB and update it or roll back as needed.

It is possible to work with such VDB, “as with a normal DB”. The application will not notice anything. It is clear that the binary database must already be installed on these servers, since only the database files are cloned.

Such VDB are used mainly for functional testing or as sandboxes. To drive the load on VDB in most cases is not worth it, since due to the connection of the database via NFS, it will be quite problematic to interpret the results of such load testing. Although if you have a DB in prome working with the storage system through NFS, then, probably, it is possible.

Guaranteed, you can count on stable work if you use Oracle (including with support for multitenant and RAC), MS SQL, SAP ASE, IBM DB2, Oracle EBS, SAP HANA. Such configurations are supported by the vendor.

In addition, you can also virtualize regular files (consider any database, but with some restrictions on functionality). For example, as a proof-of-concept for internal tests, we virtualized PostgreSQL instances of one of our projects. I won’t say what happened once or twice, but in the end, the scheme with PostgreSQL worked, even though the vendor currently does not officially support PostgreSQL.

It goes without saying that the storage and snapshot functionality of thin-provision has been around for a long time. However, if a productive database and stands are on the storage systems of different vendors, then this solution will not work. And not all storage systems can do it.

With Delphix, you can quite successfully deploy test environments on technologically outdated and end-of-life storage systems that have been decommissioned. As a result, significantly reduce the cost of storing test data.

It is also possible to get a physical copy of the database from this snapshot, for example, to create a standby or to transfer the database to another storage system.

The important component is self-service. With the help of the Delphix JetStream product GUI, even untrained developers / testers can independently roll back / update the database versions of their booths, do not pull admins several times a week.

Figure 3. The use of JetStream in the figure indicates the lifetime of the main version VDB branch, the creation of version snapshots and a couple of rollbacks to these snapshots.

Figure 3. The use of JetStream in the figure indicates the lifetime of the main version VDB branch, the creation of version snapshots and a couple of rollbacks to these snapshots.

Delphix maintains constant VDB synchronization with a productive database using archive / transaction log files. For a similar implementation on the basis of storage, you will have to do a dozen snapshots per day.

Yes, it can, but only Oracle DBMS is supported in it. Therefore, it cannot be used for other DBMSs.

First, let's figure out who and due to what Delphix can be profitable. The profit is as follows:

Now briefly on each item.

Reduction of time spent on approval for new stands. Yes, in some companies we worked with, it took two weeks to coordinate the deployment of a new stand. And if there is no necessary amount of resources or the organization comes across very solid and highly bureaucratic, then, let's say carefully, the terms are called “from the month”.

Reduction of used space on storage for test benches.According to zhelezyachnyh vendors, the cost of maintaining 1 TB of data on storage is from 800 euros per year. The figures are approximate, but the order is. In the presence of a productive database of 2 TB in size and 3 test benches with VDB - the total volume occupied by the delfix will be about 2 TB. Such indicators are achieved due to the fact that all test databases require only one common stack, which, in general, will occupy not much more productive database. Of course, it all depends on the number of changes that are made to the test database. More changes - it will be stronger to “swell” a lot of storage due to the storage of deltas. To present this, you can submit a diagram with snapshots of virtual machines.

The total number of possible connected databases (including the source and test databases) to a single Delphix instance is about 300.

Reducing the time to deploy base on the stand. Instead of many hours of routine operations to roll out a backup of a certain version on the stand, you need to press 3 buttons. For those who are used to working in the console or use a powerful API, there is a CLI, and API, respectively.

Reducing the time to update the database on the stands. The Delphix engine maintains constant synchronization with the source database (using the archive logs / transaction logs of the database), and all changes from this database can be propagated to the connected VDB.

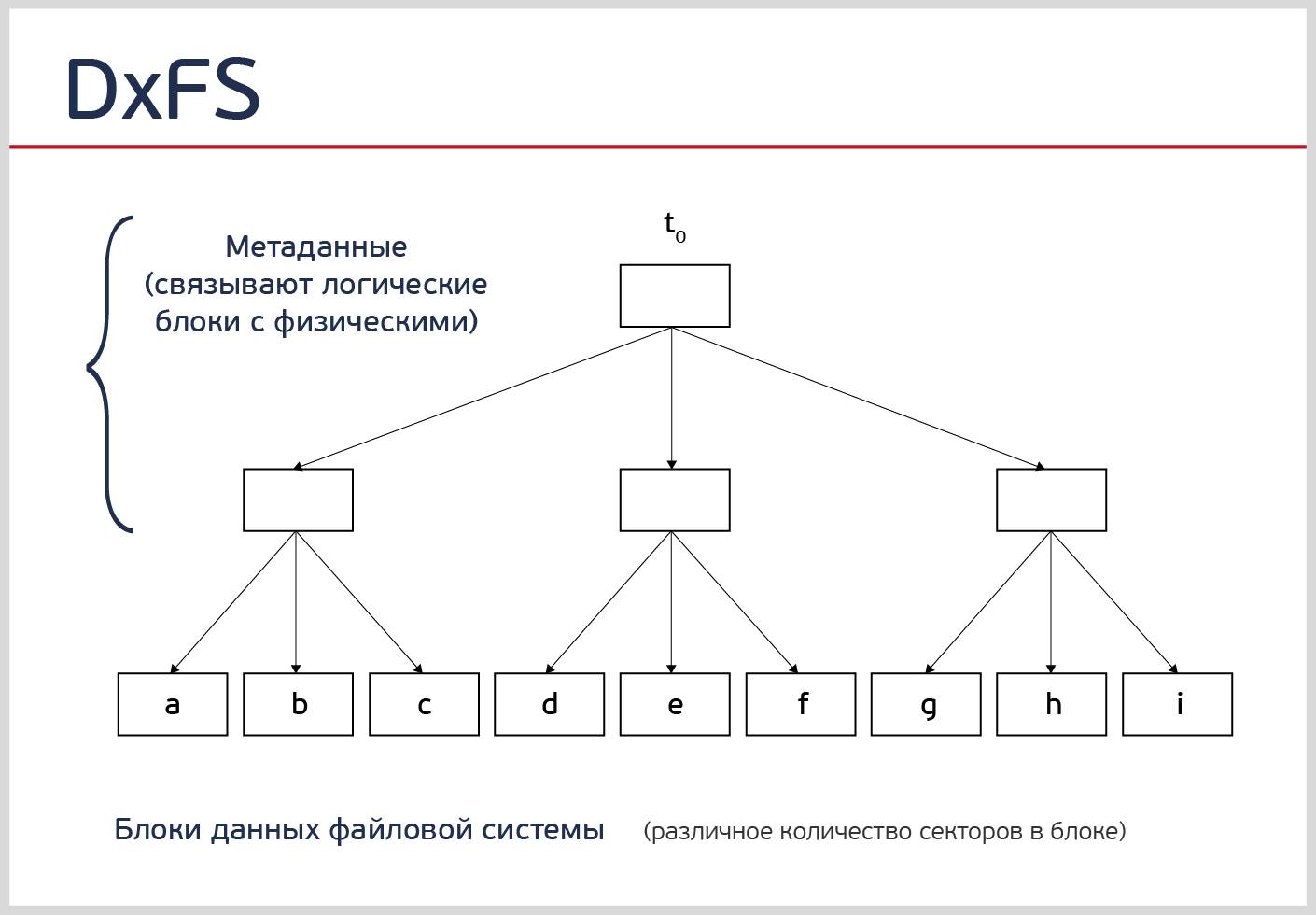

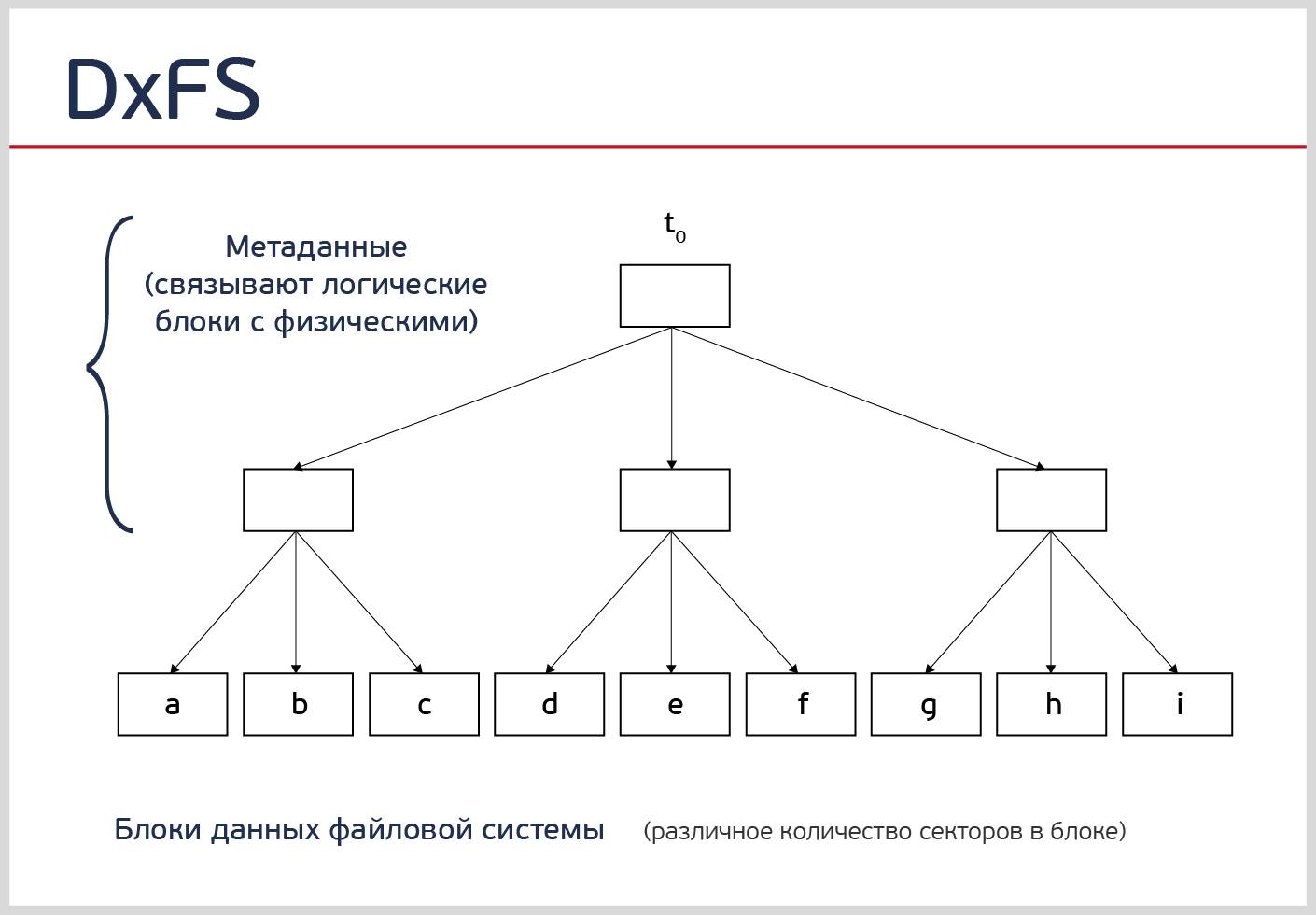

The Delphix file system contains data blocks (the lowest level in the picture). Delphix creates B-tree indexes that point to these data blocks. Moreover, the root block of the index (topmost) is decisive. This is the state of the system at time t0.

Now imagine that the modified data blocks b 'and c' arrived, and the system went into the t1 state.

Delphix does not grind old data blocks, and creates new blocks nearby. In order to access them, a new root index block is created, indicating new data blocks.

Thanks to the indexes, the system has two versions, each of which can be operated by connecting to the corresponding root block of the index t0 or t1.

For example, when connecting to the t1 index block, the system will look like this:

When Delphix removes a backup from a source database, it creates such indexes within itself. It automatically applies incremental backups to the original one, but does not overwrite the blocks, but writes them side by side and creates new root blocks of the index.

Therefore, it is always possible to take a snapshot of the database at a certain point in time and deploy VDB from it.

There is a video on YouTube , with an explanation from Jonathan Lewis (a high-class optimization / optimizer and performance issues for Oracle, the author of a good Cost-Based Oracle Fundamentals book, and many more useful books).

With enough people, time, money and patience, you can write anything with scripts. However, the cost of supporting it and many other nuances will depend directly on the complexity of such a solution. One company, with which we work and are friends, weighed the pros and cons, assessed the strength of its IT department and decided to write an analogue of Delphix independently on scripts and ZFS. Quite an option, if you need a specialized solution for a single system, you are confident in your abilities. Yes, what is really there, we ourselves love to write scripts ...

An additional data masking tool can be added to the main Delphix functionality. It will allow you to encrypt personal data, such as credit card numbers, first names, last names, etc. A very demanded function from banks and those who fulfill all the requirements of 149-FZ and 152-FZ.

Typically, developers need data from a productive database when it occurs:

It is not good to give anyone the opportunity to connect to an industrial database directly, but work somehow needs to be done and it is necessary to somehow make it possible for everyone who needs it to work with the industrial data structure and with the industrial data volume. More importantly, with an industrial “profile” of data. Considering that productive data often contains confidential information, including personal data, transfer it to a test zone or give it to developers - the task is usually impossible.

You can write a separate detailed article about this, but if briefly, the data masking option hides or modifies data that cannot be moved outside the controlled area of the industrial circuit and allows you to pass already “masked” data to the development and testing environments beyond the “perimeter”.

The Masking Engine (masking engine) deletes or changes the protected information and leaves the analog data, thereby allowing developers and testers to work with similar data. Data masking is implemented by the Delphix Masking Engine component.

Of course, there are other solutions that have similar functionality. For example, Oracle Masking, Informatica Masking, etc. Plus, as is the case with Delphix itself, you can write your own masking scripts. However, a significant common drawback is the need to purchase a large number of more expensive licenses / additional software.

In order not to overload the text, we will leave a more detailed illustration of the principles of the operation of the masking function in a separate article.

If you can provide examples of other concealment tools that you used in practice and that solve the problem, it would be great to see a few words from you about them in the comments.

It is possible to use self-written one, but it should be understood that, with a high degree of probability, running the scripts on a test database in the amount of, say, 5 TB will take a lot of time. And the writing of algorithms and the scripts themselves can last even longer.

When you need to quickly and guaranteed to solve a new problem and should work “like a clock,” masking out of the box for Delphix will work. The script will most likely have to be “doped” for a new task (data structure, database type, etc.).

Implementation begins with a pilot project. At first, we conduct interviews with the customer, and here we need only one responsible engineer (DBA or sysadmin) for full-fledged cooperation. We have a specialized questionnaire, which helps to determine the characteristics of the customer's computing environment.

Also, we will definitely need information about systems that may be candidates for virtualization (either separate databases or whole SAP / Dynamics systems). Together, we define the testing criteria, the success criteria and the timing of the pilot project in a dialogue mode. Further, while the customer is preparing the infrastructure for the pilot, we receive a test license from the vendor.

Our engineers arrive at the customer site, set up the engine and connect the source database to it. Depending on the internal rules of the customer in terms of information security, exactly which source is connected and the presence of its technical team, the initial deployment and configuration can be performed not by us, but by the customer’s own IT teams, either under our supervision or by instructions from the vendor our advice.

Further, if the customer has a desire to pump his team (and usually there is such a desire), we conduct training of infrastructure administrators and DBA. In order to learn, together with them, we deploy one or more virtual databases and run all the scenarios.

Typically, the pilot lasts from 2 to 4 weeks, if the internal processes of the customer allow you to quickly prepare the necessary infrastructure. As a rule, during this time on real systems, it is possible to test all the software functionality and assess the extent of the benefits obtained.

According to the results of testing, a report is compiled in which all pilot processes are analyzed, the “by-become” figures are given and a conclusion is issued on the advisability of longer-term relationships. If the management makes a positive decision, the process of “switching to industrial rails” is a matter of several hours, because in the process of piloting the main difficulties are usually overcome, and the “payback” and “effect from the project implementation” begin literally the next day.

Frankly, the product is not cheap. You can’t download it on torrents and, in order to use the solution, you will have to pay an annual fee to the vendor. Licensing scheme - for the amount of virtualized data.

The maximum benefit from using Delphix is achieved when you need to create a large number of copies of large volumes of different database sources (the number of source databases> = 1), for example, to create a heap of sample stands for analysts, testing services, support services for reproducing defects with production, etc., where you need copies of either a combat base with masked data, or a copy of a very large test base, and quickly and for a relatively short period of time (created a clone, solved the problem, killed the clone, made a fresh and etc.).

If this is your case, then consider the TCO (we can help to do it correctly) and decide whether it fits you or not. Otherwise, this is really a very interesting boxed (this is important!) Tool that solves problems that are quite clear from a technical point of view.

As a conclusion, we would like to conduct a short survey to understand how this tool can be useful for you.

What is Delphix?

Delphix is software running on a virtual machine with the Solaris OS, to which you need to connect the storage system to store copies of the database to complete the work. Delivered software in the form of a ready virtual machine image. The image can be deployed on VmWare, or in the AWS / Azure cloud infrastructure. For tests, in principle, it can be raised locally at the workstation.

If it is very generalized and very briefly expounded, Delphix virtualizes data and provides ready-made databases with which to work. He takes a copy of the source database (called dSource) and puts it in his stack (a local VM partition that is connected to an external storage system). Using algorithms, it reduces the volume of this copy to 60% (depending on the data types, of course). Then from the same copy in a few minutes you can deploy this database to other hosts. Such databases are called Virtual Database - VDB. Database files are mounted to target hosts via NFS, and therefore do not occupy space on them. That is, having 1 dSource for 500 GB and 5 VDB, the total occupied space for this case on the storage system will be about 350 GB (or so).

Is this process of making copies once?

After the initialization and initial loading of the source database in Delphix, the Delphix engine maintains constant synchronization with this database based on the policy you have chosen, for example, synchronization daily or every hour, or some time after the transactions.

The speed of creating the first copy of the database directly depends on the network bandwidth between the source database and Delphix, since the backup is transmitted over the network.

After binding to the source database, Delphix supports the so-called TimeFlow (time machine) source database - a functionality similar to version control. Any version of the database within this time interval can be connected to the target database. What for? For example, to investigate incidents.

VDB database are on common storage, so neither users nor admins need additional storage resources.

You can quickly connect any version of this VDB to the target database. VDBs are independent of each other and are in Read-Write mode. New changes that are made to VDB are written to new blocks in the Delphix store.

You can create a VDB based on another VDB and update it or roll back as needed.

How will the application respond?

It is possible to work with such VDB, “as with a normal DB”. The application will not notice anything. It is clear that the binary database must already be installed on these servers, since only the database files are cloned.

Such VDB are used mainly for functional testing or as sandboxes. To drive the load on VDB in most cases is not worth it, since due to the connection of the database via NFS, it will be quite problematic to interpret the results of such load testing. Although if you have a DB in prome working with the storage system through NFS, then, probably, it is possible.

Guaranteed, you can count on stable work if you use Oracle (including with support for multitenant and RAC), MS SQL, SAP ASE, IBM DB2, Oracle EBS, SAP HANA. Such configurations are supported by the vendor.

In addition, you can also virtualize regular files (consider any database, but with some restrictions on functionality). For example, as a proof-of-concept for internal tests, we virtualized PostgreSQL instances of one of our projects. I won’t say what happened once or twice, but in the end, the scheme with PostgreSQL worked, even though the vendor currently does not officially support PostgreSQL.

Why is this needed if similar functionality is built into the storage system?

It goes without saying that the storage and snapshot functionality of thin-provision has been around for a long time. However, if a productive database and stands are on the storage systems of different vendors, then this solution will not work. And not all storage systems can do it.

With Delphix, you can quite successfully deploy test environments on technologically outdated and end-of-life storage systems that have been decommissioned. As a result, significantly reduce the cost of storing test data.

It is also possible to get a physical copy of the database from this snapshot, for example, to create a standby or to transfer the database to another storage system.

The important component is self-service. With the help of the Delphix JetStream product GUI, even untrained developers / testers can independently roll back / update the database versions of their booths, do not pull admins several times a week.

Delphix maintains constant VDB synchronization with a productive database using archive / transaction log files. For a similar implementation on the basis of storage, you will have to do a dozen snapshots per day.

Like Oracle Enterprise Manager also knows how to clone databases

Yes, it can, but only Oracle DBMS is supported in it. Therefore, it cannot be used for other DBMSs.

The key question is why does he bring profit?

First, let's figure out who and due to what Delphix can be profitable. The profit is as follows:

- reduction of time spent on approval for new stands,

- reduction of used space on storage systems for test benches,

- the ability to use non-specialized storage systems from different vendors,

- reduction of time on deploy base on the stand,

- reducing the time to update the database on the stands.

Now briefly on each item.

Reduction of time spent on approval for new stands. Yes, in some companies we worked with, it took two weeks to coordinate the deployment of a new stand. And if there is no necessary amount of resources or the organization comes across very solid and highly bureaucratic, then, let's say carefully, the terms are called “from the month”.

Reduction of used space on storage for test benches.According to zhelezyachnyh vendors, the cost of maintaining 1 TB of data on storage is from 800 euros per year. The figures are approximate, but the order is. In the presence of a productive database of 2 TB in size and 3 test benches with VDB - the total volume occupied by the delfix will be about 2 TB. Such indicators are achieved due to the fact that all test databases require only one common stack, which, in general, will occupy not much more productive database. Of course, it all depends on the number of changes that are made to the test database. More changes - it will be stronger to “swell” a lot of storage due to the storage of deltas. To present this, you can submit a diagram with snapshots of virtual machines.

The total number of possible connected databases (including the source and test databases) to a single Delphix instance is about 300.

Reducing the time to deploy base on the stand. Instead of many hours of routine operations to roll out a backup of a certain version on the stand, you need to press 3 buttons. For those who are used to working in the console or use a powerful API, there is a CLI, and API, respectively.

Reducing the time to update the database on the stands. The Delphix engine maintains constant synchronization with the source database (using the archive logs / transaction logs of the database), and all changes from this database can be propagated to the connected VDB.

How is this technically implemented?

The Delphix file system contains data blocks (the lowest level in the picture). Delphix creates B-tree indexes that point to these data blocks. Moreover, the root block of the index (topmost) is decisive. This is the state of the system at time t0.

Now imagine that the modified data blocks b 'and c' arrived, and the system went into the t1 state.

Delphix does not grind old data blocks, and creates new blocks nearby. In order to access them, a new root index block is created, indicating new data blocks.

Thanks to the indexes, the system has two versions, each of which can be operated by connecting to the corresponding root block of the index t0 or t1.

For example, when connecting to the t1 index block, the system will look like this:

When Delphix removes a backup from a source database, it creates such indexes within itself. It automatically applies incremental backups to the original one, but does not overwrite the blocks, but writes them side by side and creates new root blocks of the index.

Therefore, it is always possible to take a snapshot of the database at a certain point in time and deploy VDB from it.

There is a video on YouTube , with an explanation from Jonathan Lewis (a high-class optimization / optimizer and performance issues for Oracle, the author of a good Cost-Based Oracle Fundamentals book, and many more useful books).

How much more convenient is the custom solution (scripts, for example)?

With enough people, time, money and patience, you can write anything with scripts. However, the cost of supporting it and many other nuances will depend directly on the complexity of such a solution. One company, with which we work and are friends, weighed the pros and cons, assessed the strength of its IT department and decided to write an analogue of Delphix independently on scripts and ZFS. Quite an option, if you need a specialized solution for a single system, you are confident in your abilities. Yes, what is really there, we ourselves love to write scripts ...

Somewhere above it was mentioned about masking, what is it?

An additional data masking tool can be added to the main Delphix functionality. It will allow you to encrypt personal data, such as credit card numbers, first names, last names, etc. A very demanded function from banks and those who fulfill all the requirements of 149-FZ and 152-FZ.

Typically, developers need data from a productive database when it occurs:

- developing a new application

- support or refinement of the application,

- testing functionality on test benches.

It is not good to give anyone the opportunity to connect to an industrial database directly, but work somehow needs to be done and it is necessary to somehow make it possible for everyone who needs it to work with the industrial data structure and with the industrial data volume. More importantly, with an industrial “profile” of data. Considering that productive data often contains confidential information, including personal data, transfer it to a test zone or give it to developers - the task is usually impossible.

You can write a separate detailed article about this, but if briefly, the data masking option hides or modifies data that cannot be moved outside the controlled area of the industrial circuit and allows you to pass already “masked” data to the development and testing environments beyond the “perimeter”.

The Masking Engine (masking engine) deletes or changes the protected information and leaves the analog data, thereby allowing developers and testers to work with similar data. Data masking is implemented by the Delphix Masking Engine component.

Why not use IBM Optim / Oracle Masking / counterparts as masking?

Of course, there are other solutions that have similar functionality. For example, Oracle Masking, Informatica Masking, etc. Plus, as is the case with Delphix itself, you can write your own masking scripts. However, a significant common drawback is the need to purchase a large number of more expensive licenses / additional software.

- With Oracle, it is the licensing of each server with masking (this is the license of Masking itself and the Database Gateway for a non-Oracle database).

- Informatica is PowerCenter ETL, Designer and Lifecycle Management. And if you want to tie it to SAP, then you have to pay for it.

- Scripts - with scripts it all depends on your faith in yourself and your leadership's confidence in you.

In order not to overload the text, we will leave a more detailed illustration of the principles of the operation of the masking function in a separate article.

If you can provide examples of other concealment tools that you used in practice and that solve the problem, it would be great to see a few words from you about them in the comments.

I still want to write my masking script ...

It is possible to use self-written one, but it should be understood that, with a high degree of probability, running the scripts on a test database in the amount of, say, 5 TB will take a lot of time. And the writing of algorithms and the scripts themselves can last even longer.

When you need to quickly and guaranteed to solve a new problem and should work “like a clock,” masking out of the box for Delphix will work. The script will most likely have to be “doped” for a new task (data structure, database type, etc.).

What can Delphix Masking Engine “out of the box”

- Secure Lookup - replaces the original data, for example, “Vasya” -> “Peter”. In this algorithm, the appearance of collisions is possible, when the substituted data will be the same.

- Segmented mapping – делит значение на несколько сегментов и замещает эти сегменты по отдельности. Например, номер NM831026-04 можно разделить на три части, из которых буквы NM — не маскировать, значение после трансформации — NM390572-50. Это актуально для маскирования значений колонок, используемых в качестве первичного ключа или для уникальных колонок.

- Mapping Algorithm – для его работы необходимо указать точное соответствие оригинальных и заменяемых значений. В этом случае коллизий не будет, т.к. оригинальное значение напрямую заменяется определенным. В качестве примера, имя “Алексей” всегда будет заменяться на “Никита”.

- Binary Lookup algorithm – заменяет clob/blob значение в колонках и т.д. Delphix не умеет сам заменять значение в картинках/текстах, но вместо этого можно выбрать замещающую картинку или текст.

- Tokenization Algorithm – это вид шифрования данных, при котором входные данные преобразуются в токены, имеющие похожие атрибуты (длина строки, цифровое или текстовое значение), однако они не несут в себе никакого смыслового значения. С помощью алгоритмов можно зашифровать/расшифровать эти данные. Например, можно замаскировать данные и направить вендору продукта. Он их проанализирует и пометит те данные, которые неправильные (например, неверное заполнение), а затем отправит назад.

- Min Max Algorithm – алгоритм, который усредняет все значения в колонке, чтобы скрыть максимальные и минимальные значения (допустим, зарплаты).

- Data Cleansing Algorithm – не маскирует, а стандартизирует данные. Например, можно задать правила, по которым значения Ru, Rus, R преобразуются к единому формату RU.

OK. I already understood that we need Delphix. How long to implement? What does the process look like?

Implementation begins with a pilot project. At first, we conduct interviews with the customer, and here we need only one responsible engineer (DBA or sysadmin) for full-fledged cooperation. We have a specialized questionnaire, which helps to determine the characteristics of the customer's computing environment.

Also, we will definitely need information about systems that may be candidates for virtualization (either separate databases or whole SAP / Dynamics systems). Together, we define the testing criteria, the success criteria and the timing of the pilot project in a dialogue mode. Further, while the customer is preparing the infrastructure for the pilot, we receive a test license from the vendor.

Our engineers arrive at the customer site, set up the engine and connect the source database to it. Depending on the internal rules of the customer in terms of information security, exactly which source is connected and the presence of its technical team, the initial deployment and configuration can be performed not by us, but by the customer’s own IT teams, either under our supervision or by instructions from the vendor our advice.

Further, if the customer has a desire to pump his team (and usually there is such a desire), we conduct training of infrastructure administrators and DBA. In order to learn, together with them, we deploy one or more virtual databases and run all the scenarios.

Typically, the pilot lasts from 2 to 4 weeks, if the internal processes of the customer allow you to quickly prepare the necessary infrastructure. As a rule, during this time on real systems, it is possible to test all the software functionality and assess the extent of the benefits obtained.

According to the results of testing, a report is compiled in which all pilot processes are analyzed, the “by-become” figures are given and a conclusion is issued on the advisability of longer-term relationships. If the management makes a positive decision, the process of “switching to industrial rails” is a matter of several hours, because in the process of piloting the main difficulties are usually overcome, and the “payback” and “effect from the project implementation” begin literally the next day.

Are there any negative points? What don't you like?

Frankly, the product is not cheap. You can’t download it on torrents and, in order to use the solution, you will have to pay an annual fee to the vendor. Licensing scheme - for the amount of virtualized data.

The maximum benefit from using Delphix is achieved when you need to create a large number of copies of large volumes of different database sources (the number of source databases> = 1), for example, to create a heap of sample stands for analysts, testing services, support services for reproducing defects with production, etc., where you need copies of either a combat base with masked data, or a copy of a very large test base, and quickly and for a relatively short period of time (created a clone, solved the problem, killed the clone, made a fresh and etc.).

If this is your case, then consider the TCO (we can help to do it correctly) and decide whether it fits you or not. Otherwise, this is really a very interesting boxed (this is important!) Tool that solves problems that are quite clear from a technical point of view.

We also have vacancies.

As a conclusion, we would like to conduct a short survey to understand how this tool can be useful for you.

Only registered users can participate in the survey. Sign in , please.