Web development on Node.JS: Part 2

In the previous article, I began describing my experience developing the experimental web project "What to do?" on Node.JS. The first part was an overview. In it, I tried to lay out the pros and cons of the technology and to warn about problems you may run into during development. In this article I will focus on the technical details.

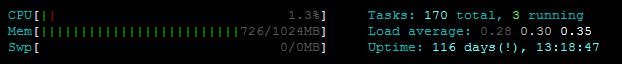

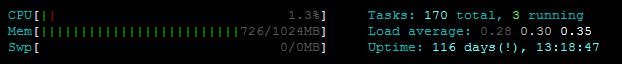

A few words about the "habraeffect"

Honestly, having periodically watched sites go down after links to them reached the Habr front page, I expected to see much more serious numbers. Both previous articles reached the front page. The first one was posted in the closed "I PR" blog and was visible only to its subscribers, while the second, in the topical "Node.JS" blog, sparked a fairly long discussion in the comments. Yet both articles brought roughly the same number of visitors to the site. Equally small.

These numbers are too small to speak of any serious load. At the very peak of traffic, htop showed roughly the following:

The load average occasionally reached 1 but then dropped back to 0.3-0.5. Pages were served quickly. When the data needed to build a page is already in memcached, the average generation time is 15-20 ms. When it is not, the time rises to 40-100 ms, but this happens very rarely. Some visitors load-tested the site with the siege and ab utilities and with the LoadImpact service. At the time, I was sure that Nginx was caching all pages and that these requests never reached Node.JS. That turned out not to be the case: later I discovered that one of the modules behaved incorrectly and prevented page caching (more on this below). So virtually all requests were handled by Node.JS, and the site stayed up.

Unfortunately, I don't know how much the "habraeffect" varies with the topic of the article and of the linked site. But if a site goes down under the same number of visitors (or even twice as many), the problem is hardly the choice of technology.

Based on the tests and traffic data, I concluded that the project is quite stable and will not go down under a sudden influx of visitors.

Architecture

Hardware and software

The site runs on a VPS with modest specs:

- 1 CPU with a guaranteed frequency of 1200 MHz;

- 1024 MB RAM;

- 25 GB HDD (disk size hardly matters for this project).

Additional software is kept to a minimum:

- Node.JS - server-side JavaScript runtime;

- MongoDB - NoSQL DBMS;

- Memcached - caching daemon;

- Nginx - frontend server.

Configuration

By default, Node.JS runs in a single thread, which is inconvenient and far from optimal, especially on multi-core processors. Modules for conveniently launching several processes (various implementations of Web Workers) appeared almost immediately, and doing this with the standard Node.JS API was not difficult either. Version 0.6.0 introduced a new module, Cluster, which greatly simplifies starting multiple Node.JS processes. Its API lets you fork node processes whose net/http servers share a common TCP port. The parent process can manage its children: stop them, start new ones, and react to unexpected exits. Child processes can exchange messages with the parent.
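A minimal sketch of the Cluster API described above, written for current Node.js, whose API differs in details from version 0.6.0 (newer versions also rename isMaster to isPrimary). The START_CLUSTER_DEMO and PORT environment variables and the default port 8000 are hypothetical, added only so the file can be loaded without forking anything.

```javascript
const cluster = require('cluster');
const http = require('http');
const os = require('os');

// Newer Node.js versions call the parent process "primary"; older ones use isMaster.
const isPrimary = cluster.isPrimary !== undefined ? cluster.isPrimary : cluster.isMaster;

function startCluster(port, workerCount) {
  if (isPrimary) {
    // Fork the workers; each one re-runs this file with isPrimary === false.
    for (let i = 0; i < workerCount; i++) {
      cluster.fork();
    }
    // Replace workers that die unexpectedly.
    cluster.on('exit', (worker, code, signal) => {
      console.log(`worker ${worker.process.pid} exited (${signal || code}), starting a new one`);
      cluster.fork();
    });
    // Messages that workers send with process.send() arrive here.
    cluster.on('message', (worker, message) => {
      console.log(`worker ${worker.id} says:`, message);
    });
  } else {
    // All workers listen on the same TCP port; the parent distributes connections.
    http
      .createServer((req, res) => {
        res.end(`handled by process ${process.pid}\n`);
      })
      .listen(port, () => {
        process.send({ listening: port });
      });
  }
}

// Hypothetical switch so the file can be required without side effects.
if (process.env.START_CLUSTER_DEMO) {
  startCluster(Number(process.env.PORT) || 8000, os.cpus().length);
}
```

Forking one worker per CPU core is a common default. A real deployment would also rate-limit restarts so that a worker crashing on startup does not cause an endless fork loop.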

Although Cluster makes it easy to start the required number of processes, I run 2 node instances on different TCP ports. I do this to avoid downtime during updates. Otherwise, while the application restarts (which, by the way, takes only a few seconds), the site would be unavailable to users. Nginx's HttpUpstreamModule distributes the load between the node instances. When one instance becomes unavailable during a restart, the other takes over all requests.
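One way to make such restarts seamless is for each instance to finish in-flight requests before exiting. The sketch below assumes that the instances are restarted one at a time with SIGTERM and receive their port on the command line (8001 and 8002 are placeholders, as is the START_INSTANCE_DEMO switch). Nginx then sends new requests to whichever instance is still accepting connections.

```javascript
const http = require('http');

function startInstance(port) {
  const server = http.createServer((req, res) => {
    res.end(`served by the instance on port ${server.address().port}\n`);
  });
  server.listen(port);

  // On SIGTERM, stop accepting new connections and exit once the existing
  // ones are closed; meanwhile nginx routes new requests to the other instance.
  process.once('SIGTERM', () => {
    server.close(() => process.exit(0));
  });

  return server;
}

// Hypothetical launch convention: `node server.js 8001` and `node server.js 8002`.
if (process.env.START_INSTANCE_DEMO) {
  startInstance(Number(process.argv[2]) || 8001);
}
```

server.close() waits for open connections to end, including keep-alive ones, so a production version would usually add a timeout after which the process exits anyway.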

Nginx is configured to cache all site pages for anonymous users for a short period: 1 minute. This takes a significant load off Node.JS while still showing fresh content fairly quickly. For logged-in users, the cache time is 3 seconds. Ordinary users will not notice this, but it protects against attackers trying to overload the site with a large number of requests that carry authorization cookies.
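Here the lifetimes are configured in Nginx itself. As an illustration only, the same policy can be written as a function on the Node.JS side and passed to Nginx through the X-Accel-Expires response header, which proxy_cache honors. The cookie name sid is an assumption. Note that this only sets the lifetime: for logged-in users, the Nginx cache key must still include the session cookie. Otherwise one user could be served another user's cached page.

```javascript
// Hypothetical session cookie name (connect lets you configure it).
const SESSION_COOKIE = 'sid';

// True if the raw Cookie header carries the session cookie.
function hasSessionCookie(cookieHeader) {
  if (!cookieHeader) return false;
  return cookieHeader
    .split(';')
    .some((part) => part.trim().startsWith(SESSION_COOKIE + '='));
}

// The policy described above: 60 s for anonymous visitors, 3 s for logged-in users.
function cacheTtlSeconds(req) {
  return hasSessionCookie(req.headers.cookie) ? 3 : 60;
}

// connect-style middleware that reports the lifetime (in seconds) to nginx
// via X-Accel-Expires; nginx honors it unless proxy_ignore_headers disables it.
function cacheTtlHeader(req, res, next) {
  res.setHeader('X-Accel-Expires', String(cacheTtlSeconds(req)));
  next();
}
```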

Modules

When developing Node.JS applications, you often have to choose a module for a particular task. For some tasks there are proven, popular modules. For others the choice is harder. When choosing a module, look at the number of watchers, the number of forks, and the dates of the latest commits (on GitHub). These indicators tell you whether the project is alive. The recently launched service The Node Toolbox makes this task much easier.

Now it is time to talk about the modules I chose for the project.

connect

github.com/senchalabs/connect

This module is an add-on to the Node.JS http server that significantly extends its capabilities. It adds routing, cookie support, sessions, request body parsing and much more. Without these, developing a web application on Node.JS would likely turn into a nightmare. Most of connect's features are implemented as plugins. There are also many equally useful plugins for connect that are not part of its standard package, and adding missing functionality by writing your own plugin is quite simple.
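Connect plugins (middleware) are functions with the signature (req, res, next): each one either answers the request or calls next() to pass it on. The tiny dispatcher below is a hypothetical re-implementation of that idea for illustration only. Connect's own dispatcher also handles mount paths and error-handling middleware.

```javascript
// Minimal connect-style dispatcher: middleware run in the order they were
// added, and each one decides whether to call next() or answer the request.
function createApp() {
  const stack = [];

  function app(req, res) {
    let index = 0;

    function next(err) {
      const layer = stack[index++];
      if (err || !layer) {
        // Chain exhausted or an error occurred: send a fallback response.
        res.statusCode = err ? 500 : 404;
        res.end(err ? 'Internal Server Error' : 'Not Found');
        return;
      }
      try {
        layer(req, res, next);
      } catch (e) {
        next(e);
      }
    }

    next();
  }

  app.use = function (fn) {
    stack.push(fn);
    return app;
  };
  return app;
}

// A custom plugin with the same (req, res, next) signature connect uses.
function poweredBy(req, res, next) {
  res.setHeader('X-Powered-By', 'example');
  next();
}

// A final handler that answers the request instead of calling next().
function hello(req, res) {
  res.end('hello');
}

const app = createApp().use(poweredBy).use(hello);
// The app is a plain request listener: require('http').createServer(app).listen(3000);
```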

Despite this module's popularity and rapid development, it was the source of the problem that kept Nginx from caching Node.JS responses. By default, the Nginx proxy_cache directive does not cache a backend response if it contains at least one of the following headers:

- Set-Cookie;

- Cache-Control containing values of "no-cache", "no-store", "private", or "max-age" with a non-numeric or zero value;

- Expires with a date in the past;

- X-Accel-Expires: 0.
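To see which of these rules a given response trips, the list above can be turned into a small checker. This is an approximation written from the four rules listed, not Nginx's own logic. Header names are lower-case, as Node.JS exposes them, and unparseable Expires values are simply ignored here (an assumption).

```javascript
// Returns false if any of the default "do not cache" rules listed above applies.
function isCacheableByNginx(headers, now = Date.now()) {
  // Rule 1: any Set-Cookie header.
  if (headers['set-cookie'] !== undefined) return false;

  // Rule 2: Cache-Control with no-cache, no-store, private, or a bad/zero max-age.
  const cacheControl = headers['cache-control'];
  if (cacheControl) {
    const directives = cacheControl.toLowerCase().split(',').map((d) => d.trim());
    for (const d of directives) {
      if (d === 'no-cache' || d === 'no-store' || d === 'private') return false;
      if (d.startsWith('max-age')) {
        const value = d.split('=')[1];
        if (!/^\d+$/.test(value || '') || Number(value) === 0) return false;
      }
    }
  }

  // Rule 3: Expires with a date in the past.
  const expires = headers['expires'];
  if (expires !== undefined) {
    const time = Date.parse(expires);
    if (!Number.isNaN(time) && time <= now) return false;
  }

  // Rule 4: X-Accel-Expires: 0.
  if (headers['x-accel-expires'] === '0') return false;
  return true;
}
```

In a test, res.getHeaders() returns the headers a connect stack has set (with lower-case names). Passing them to this function shows whether, for example, a session plugin added Set-Cookie to an anonymous page.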

connect-memcached

github.com/balor/connect-memcached

This module is a plugin for connect that adds the ability to store sessions in memcached. Without additional plugins, connect can only store sessions in the memory of a single process. That is clearly not enough for production use, so plugins for all popular storage backends have already been written.
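Connect's session middleware talks to its store through a few callback methods: get, set and destroy. The sketch below shows the shape of a memcached-backed store. The client is assumed to follow node-memcached's get(key, cb), set(key, value, lifetime, cb) and del(key, cb) signatures, and an in-memory stand-in is used so the example runs without a memcached server. It is not connect-memcached's code.

```javascript
// Sketch of a session store; real stores also inherit from the session
// middleware's Store class, which is omitted here.
class MemcachedSessionStore {
  constructor(client, { prefix = 'sess:', ttlSeconds = 86400 } = {}) {
    this.client = client;
    this.prefix = prefix;
    this.ttl = ttlSeconds;
  }

  get(sid, callback) {
    this.client.get(this.prefix + sid, (err, data) => {
      if (err) return callback(err);
      if (!data) return callback(null, null); // no such session
      let session;
      try {
        session = JSON.parse(data);
      } catch (e) {
        return callback(e);
      }
      callback(null, session);
    });
  }

  set(sid, session, callback) {
    this.client.set(this.prefix + sid, JSON.stringify(session), this.ttl, (err) => {
      if (callback) callback(err || null);
    });
  }

  destroy(sid, callback) {
    this.client.del(this.prefix + sid, (err) => {
      if (callback) callback(err || null);
    });
  }
}

// In-memory stand-in with the same callback signatures (ignores the lifetime).
function createFakeMemcached() {
  const data = new Map();
  return {
    get(key, cb) { cb(null, data.has(key) ? data.get(key) : undefined); },
    set(key, value, lifetime, cb) { data.set(key, value); cb(null); },
    del(key, cb) { data.delete(key); cb(null); },
  };
}
```

Because every process talks to the same memcached, all cluster workers and both node instances see the same sessions.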

async

github.com/caolan/async

Without this module, writing asynchronous code for Node.JS would be much harder. The library provides methods for "juggling" asynchronous calls without bloating the code with deeply nested functions. For example, launching several asynchronous calls and doing something once they all complete becomes much easier. I highly recommend getting familiar with everything this library offers so you don't reinvent the wheel.
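The core of what async.parallel does fits in a few lines: start every task at once, collect the results in their original order, and call the final callback exactly once, with either the first error or all results. The hand-rolled parallel function below is only an illustration. In the project itself you would call async.parallel([task1, task2], callback).

```javascript
// Hand-rolled equivalent of async.parallel for an array of tasks.
function parallel(tasks, callback) {
  const results = new Array(tasks.length);
  let pending = tasks.length;
  let finished = false;

  if (pending === 0) return callback(null, results);

  tasks.forEach((task, i) => {
    task((err, result) => {
      if (finished) return; // an error was already reported
      if (err) {
        finished = true;
        return callback(err);
      }
      results[i] = result; // keep the original order, not completion order
      if (--pending === 0) {
        finished = true;
        callback(null, results);
      }
    });
  });
}

// Usage: two independent lookups run concurrently; the callback fires once both finish.
parallel(
  [
    (cb) => setTimeout(() => cb(null, 'question'), 20),
    (cb) => setTimeout(() => cb(null, 'user'), 10),
  ],
  (err, results) => {
    if (err) return console.error(err);
    console.log(results); // logs [ 'question', 'user' ]
  }
);
```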

node-oauth

github.com/ciaranj/node-oauth

This module implements the OAuth and OAuth2 protocols, which makes it fairly simple to let users sign in to the site through social networks that support these protocols.
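With OAuth 2.0, sign-in through a social network starts by redirecting the user to the provider's authorization endpoint with a few standard query parameters (the authorization code grant from RFC 6749). The sketch below only builds that URL. The endpoint, client id and redirect URI are placeholders. Exchanging the returned code for an access token is the step node-oauth's OAuth2 class takes care of.

```javascript
const crypto = require('crypto');

// Builds the redirect URL that starts the OAuth 2.0 authorization code flow.
function buildAuthorizeUrl({ authorizeEndpoint, clientId, redirectUri, scope }) {
  // Random "state" value: store it in the session and compare it when the
  // provider redirects back, to protect against CSRF.
  const state = crypto.randomBytes(16).toString('hex');

  const url = new URL(authorizeEndpoint);
  url.searchParams.set('response_type', 'code');
  url.searchParams.set('client_id', clientId);
  url.searchParams.set('redirect_uri', redirectUri);
  if (scope) url.searchParams.set('scope', scope);
  url.searchParams.set('state', state);

  return { url: url.toString(), state };
}
```

The provider later redirects to redirect_uri with code and state query parameters. The server checks state against the session before exchanging the code.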

node-cron

github.com/ncb000gt/node-cron

The name of this module speaks for itself: it runs tasks on a schedule. The schedule syntax is very similar to the cron that everyone knows from Linux, but unlike cron, node-cron supports intervals measured in seconds. That is, you can configure a method to run every 10 seconds or even every second. Tasks such as promoting popular questions to the main page and posting them to Twitter are launched with this module.
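node-cron patterns have six fields with seconds first, which is what makes a 10-second interval such as */10 * * * * * possible. The matcher below is a simplified illustration of that format, not node-cron's parser: it supports only *, */n, comma lists and plain numbers, and leaves out ranges and names.

```javascript
// Lower bound of each field, needed for step values such as */2 on months.
const FIELD_MIN = [0, 0, 0, 1, 1, 0];

function fieldMatches(field, value, min) {
  return field.split(',').some((part) => {
    if (part === '*') return true;
    if (part.startsWith('*/')) {
      const step = Number(part.slice(2));
      return step > 0 && (value - min) % step === 0;
    }
    return Number(part) === value;
  });
}

// Field order: second minute hour day-of-month month day-of-week.
function cronMatches(expression, date) {
  const fields = expression.trim().split(/\s+/);
  if (fields.length !== 6) throw new Error('expected 6 fields, seconds first');
  const values = [
    date.getSeconds(),
    date.getMinutes(),
    date.getHours(),
    date.getDate(),
    date.getMonth() + 1, // cron months are 1-12, JavaScript months 0-11
    date.getDay(), // 0 = Sunday
  ];
  // Simplification: every field must match (classic cron ORs the two day fields).
  return fields.every((field, i) => fieldMatches(field, values[i], FIELD_MIN[i]));
}
```

A scheduler built on such a matcher would check the current time once per second and run the jobs whose pattern matches. The real module also takes care of timer drift and time zones.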

node-twitter

github.com/jdub/node-twitter

This module implements the application's interaction with the Twitter API. It relies on the node-oauth module described above.

node-mongodb-native

github.com/christkv/node-mongodb-native

This module is the interface to the NoSQL DBMS MongoDB. Among its competitors, it stands out for its maturity and rapid development. A pool of multiple database connections is supported out of the box, so there is no need to write your own workarounds. The rather convenient Mongoose ORM is built on top of this module.

node-memcached

github.com/3rd-Eden/node-memcached

This is, in my opinion, the best interface for accessing memcached from Node.JS. It supports multiple memcached servers with key distribution between them, as well as a connection pool.
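Spreading keys over several memcached servers is commonly done with consistent hashing, so that adding or removing a server only moves the keys that belonged to it. The class below is a minimal, self-contained sketch of that technique (MD5-based, with virtual points per server). It is not node-memcached's internal code, and the server addresses are placeholders.

```javascript
const crypto = require('crypto');

// Minimal consistent-hash ring: each server gets several virtual points on a
// 32-bit ring, and a key goes to the first point at or after its own hash.
class HashRing {
  constructor(servers, pointsPerServer = 100) {
    this.points = [];
    for (const server of servers) {
      for (let i = 0; i < pointsPerServer; i++) {
        this.points.push({ hash: HashRing.hash(`${server}#${i}`), server });
      }
    }
    this.points.sort((a, b) => a.hash - b.hash);
  }

  static hash(value) {
    // First 4 bytes of an MD5 digest as an unsigned 32-bit integer.
    return crypto.createHash('md5').update(value).digest().readUInt32BE(0);
  }

  getServer(key) {
    const h = HashRing.hash(key);
    // Binary search for the first point with hash >= h.
    let lo = 0;
    let hi = this.points.length;
    while (lo < hi) {
      const mid = (lo + hi) >>> 1;
      if (this.points[mid].hash < h) lo = mid + 1;
      else hi = mid;
    }
    // Past the last point: wrap around to the start of the ring.
    return this.points[lo === this.points.length ? 0 : lo].server;
  }
}

const ring = new HashRing(['10.0.0.1:11211', '10.0.0.2:11211', '10.0.0.3:11211']);
console.log(ring.getServer('question_42')); // always the same server for this key
```

The virtual points even out the share of keys each server receives. With a single point per server, the split would depend heavily on where those few hashes happen to land.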

http-get

github.com/SaltwaterC/http-get

This module fetches remote resources over HTTP/HTTPS. The project uses it to download the photos of users who sign in to the site through social networks.

sprintf

github.com/maritz/node-sprintf

A small but very useful module which, as its name implies, implements the sprintf and vsprintf functions in JavaScript.

daemon.node

github.com/indexzero/daemon.node

This module makes it very easy to turn a Node.JS application into a daemon. It conveniently detaches the application from the console and redirects its output to log files.

My contribution

I developed the following modules while working on the project, because at the time I could not find ready-made solutions for these tasks. The modules are published on GitHub and in the npm registry.

aop

github.com/baryshev/aop

This module does not yet claim to be a full implementation of the AOP pattern. For now, it contains a single method that wraps a function in an aspect, which can change the function's behavior when needed. This technique is very convenient for caching function results.

Suppose we have an asynchronous function:

```javascript
var someAsyncFunction = function(num, callback) {
  var result = num * 2;
  callback(undefined, result);
};
```

This function is called often, and its result needs to be cached. Usually that looks like this:

```javascript
var someAsyncFunction = function(num, callback) {
  var key = 'someModule' + '_' + 'someAsyncFunction' + '_' + num;
  cache.get(key, function(error, cachedResult) {
    if (error || !cachedResult) {
      // Cache miss: compute the result, return it and store it in the cache
      var result = num * 2;
      callback(undefined, result);
      cache.set(key, result);
    } else {
      // Cache hit
      callback(undefined, cachedResult);
    }
  });
};
```

There can be many such functions in a project. The code swells considerably, becomes less readable and fills up with copy-paste. With aop.wrap, the same can be done like this:

```javascript
var someAsyncFunction = function(num, callback) {
  var result = num * 2;
  callback(undefined, result);
};

/**
 * First parameter: the object used as `this` inside the wrapper
 * Second parameter: the function we wrap in the aspect
 * Third parameter: the aspect executed whenever the wrapped function is called
 * Remaining parameters: arbitrary values passed to the aspect (used to configure its behavior)
 */
someAsyncFunction = aop.wrap(someAsyncFunction, someAsyncFunction, aspects.cache, 'someModule', 'someAsyncFunction');
```

Separately, we create the aspects library and define in it the cache function, which will be responsible for all caching.

```javascript
module.exports.cache = function(method, params, moduleName, functionName) {
  var that = this;
  // The cache key is built this way only to keep the example simple
  var key = moduleName + '_' + functionName + '_' + params[0];
  cache.get(key, function(error, cachedResult) {
    // Get the callback (it is always passed as the last parameter)
    var callback = params[params.length - 1];
    if (error || !cachedResult) {
      // Nothing in the cache: pass control to the method, substituting the callback
      params[params.length - 1] = function(error, result) {
        callback(error, result);
        if (!error) cache.set(key, result);
      };
      method.apply(that, params);
    } else {
      callback(undefined, cachedResult);
    }
  });
};
```

The set of aspects can be extended as needed. In a large project, this approach saves a lot of code and keeps all cross-cutting functionality in one place.

In the future, I plan to extend this library with the remaining capabilities of the AOP pattern.
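To make the calling convention above concrete, here is a minimal sketch of what a wrap function with this signature might look like, together with an in-memory stand-in for cache. The names wrap, cacheAspect, store and double are hypothetical, and this is not the code of the published aop module. One deliberate difference from the aspect above: a miss is detected with === undefined, so cached falsy values such as 0 still count as hits.

```javascript
// wrap(thisArg, fn, aspect, ...aspectArgs): returns a function that hands the
// original function, the call arguments (as an array) and the extra
// configuration arguments to the aspect, with `this` set to thisArg.
function wrap(thisArg, fn, aspect, ...aspectArgs) {
  return function (...params) {
    return aspect.call(thisArg, fn, params, ...aspectArgs);
  };
}

// In-memory stand-in for the cache client (hypothetical).
const store = new Map();
const cache = {
  get(key, cb) { cb(undefined, store.get(key)); },
  set(key, value) { store.set(key, value); },
};

// Same logic as the cache aspect above.
function cacheAspect(method, params, moduleName, functionName) {
  const that = this;
  const key = moduleName + '_' + functionName + '_' + params[0];
  cache.get(key, (error, cachedResult) => {
    const callback = params[params.length - 1];
    if (error || cachedResult === undefined) {
      // Miss: call the original function with a callback that also fills the cache.
      params[params.length - 1] = (err, result) => {
        callback(err, result);
        if (!err) cache.set(key, result);
      };
      method.apply(that, params);
    } else {
      callback(undefined, cachedResult);
    }
  });
}

let calls = 0;
let double = function (num, callback) {
  calls++; // counts real computations
  callback(undefined, num * 2);
};
double = wrap(null, double, cacheAspect, 'demo', 'double');
```

Calling double(21, cb) twice invokes the original function only once. The second call is answered from the cache.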

form

github.com/baryshev/form

This module checks and filters input data. Most often that data comes from forms, but it can also be data received from external APIs, etc. The module bundles the node-validator library and gives full access to its capabilities.

The module works as follows. Each form is described by a set of fields, and each field gets filters (functions that transform the field's value) and validators (functions that check whether the value meets a condition). Incoming data is passed to the form's process method. The callback receives either an error description (if some data did not meet the form's criteria) or an object containing the filtered set of fields, ready for further use. A small usage example:

```javascript
var fields = {
  text: [
    form.filter(form.Filter.trim),
    form.validator(form.Validator.notEmpty, 'Empty text'),
    form.validator(form.Validator.len, 'Bad text length', 30, 1000)
  ],
  name: [
    form.filter(form.Filter.trim),
    form.validator(form.Validator.notEmpty, 'Empty name')
  ]
};

var textForm = form.create(fields);

textForm.process({'text' : 'some short text', 'name': 'tester'}, function(error, data) {
  console.log(error);
  console.log(data);
});
```

In this case, we get the 'Bad text length' error for the text field, because the submitted text is shorter than 30 characters.

Filters and validators run in sequence. So even if you append lots of spaces to the end of the string, you still get the error: the trim filter strips them before the length check.

You can learn how to write your own filters and validators on the node-validator page or from the source code. Future plans include porting this module to the browser and documenting its capabilities properly.
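The sequential behavior can be illustrated with a small stand-alone sketch. It does not use the form module or node-validator: filter, validator and processForm are hypothetical helpers that only mimic the idea. Steps run in order, filters replace the value, and the first failing validator ends processing with its message.

```javascript
// Step constructors: a filter transforms the value, a validator checks it.
function filter(fn) {
  return { type: 'filter', fn };
}
function validator(fn, message) {
  return { type: 'validator', fn, message };
}

// Runs each field's steps in order; stops at the first failing validator.
function processForm(fields, data, callback) {
  const result = {};
  for (const name of Object.keys(fields)) {
    let value = data[name] === undefined ? '' : String(data[name]);
    for (const step of fields[name]) {
      if (step.type === 'filter') {
        value = step.fn(value);
      } else if (!step.fn(value)) {
        return callback({ field: name, message: step.message });
      }
    }
    result[name] = value;
  }
  callback(null, result);
}

// Hypothetical field definitions mirroring the example above.
const fields = {
  text: [
    filter((s) => s.trim()),
    validator((s) => s.length > 0, 'Empty text'),
    validator((s) => s.length >= 30 && s.length <= 1000, 'Bad text length'),
  ],
  name: [filter((s) => s.trim()), validator((s) => s.length > 0, 'Empty name')],
};
```

Padding 'some short text' with 40 trailing spaces still yields 'Bad text length', because trim runs before the length validator.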

configjs

github.com/baryshev/configjs

This module makes application configuration convenient. Configurations are stored in regular JS files, which means JavaScript can be used inside the configuration and no extra file parsing is needed. You can create several additional configurations for different environments (development, production, testing, etc.) that extend and/or override the main configuration.
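A minimal sketch of the layering idea, assuming the environment is selected by NODE_ENV: an environment configuration overrides only the keys it mentions, and nested objects are merged rather than replaced. The names and values are hypothetical, and this is not configjs's actual implementation.

```javascript
function isPlainObject(value) {
  return value !== null && typeof value === 'object' && !Array.isArray(value);
}

// Recursively merges `override` into a copy of `base`: nested objects are
// merged key by key, everything else (including arrays) is replaced.
function mergeConfig(base, override) {
  const result = { ...base };
  for (const [key, value] of Object.entries(override)) {
    result[key] = isPlainObject(value) && isPlainObject(base[key])
      ? mergeConfig(base[key], value)
      : value;
  }
  return result;
}

// In a real project these would be separate files, e.g. config/default.js and
// config/production.js, each doing `module.exports = { ... }`, and could use
// any JavaScript expression (for instance, reading process.env).
const defaults = {
  app: { host: '127.0.0.1', port: 8001, profiler: true },
  memcached: ['127.0.0.1:11211'],
};
const production = {
  app: { profiler: false },
};

const env = process.env.NODE_ENV || 'development';
// The rest of the application would read this merged object.
const config = env === 'production' ? mergeConfig(defaults, production) : defaults;
```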

localejs

github.com/baryshev/localejs

This module is very similar to configjs but is designed to store strings for different locales to support multiple languages. It is hardly suitable for applications with a lot of text; for those, GetText-like solutions are more convenient. Besides loading the required locale, the module contains a function for choosing the correct word form after a number (plurals), which supports Russian and English.
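Russian needs three plural forms (1 вопрос, 2 вопроса, 5 вопросов, all meaning "question"), while English needs two. The function below implements the standard rule for both languages as an illustration. It is not localejs's code, and it handles integers only.

```javascript
// Returns the index of the plural form to use for the number n.
function pluralIndex(lang, n) {
  n = Math.abs(n);
  if (lang === 'ru') {
    const mod10 = n % 10;
    const mod100 = n % 100;
    if (mod10 === 1 && mod100 !== 11) return 0; // 1, 21, 101
    if (mod10 >= 2 && mod10 <= 4 && (mod100 < 12 || mod100 > 14)) return 1; // 2-4, 22-24
    return 2; // 0, 5-20, 25-30
  }
  return n === 1 ? 0 : 1; // English: "1 question", "2 questions"
}

// Formats "<n> <word>" with the correct form taken from `forms`.
function plural(lang, n, forms) {
  return n + ' ' + forms[pluralIndex(lang, n)];
}
```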

hub

github.com/baryshev/hub/blob/master/lib/index.js

This may well be the smallest module for Node.JS. It consists of a single line: module.exports = {}; Yet without it, development would be much harder. The module is a container for objects that live while the application runs. It relies on a Node.JS feature: a module is initialized only once, when it is first required. All calls to require('moduleName'), however many there are in the application, return a reference to the same module instance, initialized on first use. In effect, it replaces the global scope for sharing resources between parts of the application. This need arises quite often: examples are a DBMS connection pool, a cache connection pool, a reference to the loaded configuration, and the locale. These resources are needed in many parts of the application, and they must be easy to access. Once a resource is initialized, it is assigned to a property of the hub object, and from then on any other module can access it simply by requiring the hub.
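The behavior the hub relies on is easy to check. The script below writes a throwaway hub.js with the same single line into a temporary directory, requires it twice, and shows that both calls return the same object. Node caches modules by their resolved file name, so this holds for every require that resolves to the same file.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Create a temporary hub.js containing the module's one line.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'hub-demo-'));
const hubPath = path.join(dir, 'hub.js');
fs.writeFileSync(hubPath, 'module.exports = {};\n');

// "server.js": initialize a shared resource once and store it in the hub.
const hubA = require(hubPath);
hubA.config = { app: { host: '127.0.0.1' } };

// "Some controller": a later require of the same file returns the cached object.
const hubB = require(hubPath);
console.log(hubA === hubB); // true
console.log(hubB.config.app.host); // 127.0.0.1

// Clean up the temporary file; the module stays in require's cache.
fs.unlinkSync(hubPath);
fs.rmdirSync(dir);
```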

connect-response

This plugin for connect makes working with cookies easy and also includes a template engine for generating responses. I developed my own template engine, and it turned out pretty well. It started from the EJS template engine but ended up as a completely different product with its own functionality, though with a similar syntax. That, however, is a big topic for a separate article.

Unfortunately, this module has not been published yet: it is not properly polished, and not all bugs have been fixed. I plan to finish and publish it in the near future, as soon as I have some free time.

Application structure

Since the application does not use a framework, its structure is not bound by any rules other than the general conventions for writing Node.JS applications and common sense. The application follows the MVC pattern.

The entry point, server.js, initializes the main application resources. It starts the http server, sets up connect, loads the configuration and the locale, establishes connections to MongoDB and Memcached, wires up the controllers, and stores references to resources that must be shared between modules in the hub. This is also where the required number of processes is forked: the master process runs node-cron for scheduled tasks, and the child processes run the http servers.

In each controller, connect maps URLs to handler methods. Each request passes through a chain of methods that is built when connect is initialized. Example:

```javascript
var server = connect();
server.listen(port, hub.config.app.host);

if (hub.config.app.profiler) server.use(connect.profiler());
server.use(connect.cookieParser());
server.use(connect.bodyParser());
server.use(connect.session({
  store: new connectMemcached(hub.config.app.session.memcached),
  secret: hub.config.app.session.secret,
  key: hub.config.app.session.cookie_name,
  cookie: hub.config.app.session.cookie
}));
server.use(connect.query());
// The router is stored in the hub so that controllers can register their routes
server.use(connect.router(function(router) {
  hub.router = router;
}));
```

This convenient mechanism makes it very easy to add new behavior to request processing and response generation.

When needed, the controller calls model methods, which fetch data from MongoDB or Memcached. Once all the data for the response is ready, the controller tells the template engine to render the page and sends the resulting HTML to the user.

Conclusion

The topic of developing web applications on Node.JS is large and interesting, and it is impossible to cover it fully in two articles. Nor is that probably necessary. I tried to describe the basic development principles and point out possible problems. This should be enough to get into the topic; after that, Google and GitHub will help. The GitHub pages of all the modules I linked to in this article contain detailed installation instructions and usage examples.

Thanks to everyone who read this far. I look forward to your feedback and questions in the comments.