Linux containers: isolation as a technological breakthrough

Imagine that you are developing an application on your laptop, where the working environment has a particular configuration. The application relies on this configuration and depends on specific files on your computer. Other developers may have slightly different configurations. In addition, your organization has test and production environments with their own configurations and file sets. You would like to emulate these environments as accurately as possible, but you absolutely do not want to run complex, heavyweight servers on your machine. How do you make the application work in all environments, pass quality control, and reach production without running into a string of problems along the way that force you to keep reworking the code?

Answer: use containers. Together with your application, a container holds all the necessary configuration (and files), so it can easily be moved from the development environment to the test environment, and then to production, with far less risk of side effects. Crisis averted, and everybody wins.

This, of course, is a simplified example, but Linux containers really do help solve many problems related to the portability, configurability and isolation of applications. Whether you run on-premises IT infrastructure, a cloud, or a hybrid of the two, containers can do their job efficiently. Of course, besides the containers themselves, choosing the right container platform matters too.

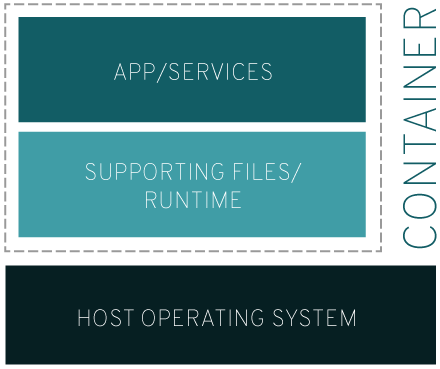

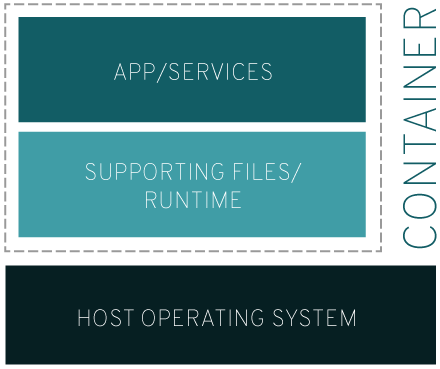

What are Linux containers

A Linux container is a set of processes that are isolated from the rest of the operating system and run from a separate image containing all the files they need. The image includes all of the application's dependencies and can therefore be moved easily from the development environment to the test environment, and then to production.

Isn't that just virtualization?

Yes and no. One way to think about the difference:

- Virtualization lets several operating systems run simultaneously on one computer.

- Containers share the same operating system kernel and isolate application processes from the rest of the system.

What does this mean? First of all, running several operating systems simultaneously on one hypervisor (the program that implements virtualization) requires more system resources than a comparable container-based configuration. Resources are, as a rule, limited, so the less your applications "weigh", the more densely they can be packed onto servers. All Linux containers running on a computer share the same operating system kernel, so your applications and services stay lightweight and run in parallel without interfering with each other.
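The density argument can be made concrete with a back-of-the-envelope sketch in Python. All the figures below are hypothetical and chosen only for illustration (a 64 GiB host, 512 MiB per application instance, 1 GiB of overhead for each guest operating system, 16 MiB of overhead per container); real overheads vary widely with the workload and the platform.

```python
def instances_per_host(host_mem_mib: int, app_mem_mib: int,
                       per_instance_overhead_mib: int) -> int:
    """Return how many application instances fit into the host's memory.

    per_instance_overhead_mib is the memory that must be duplicated for
    every instance: a whole guest OS for a virtual machine, very little
    for a container that shares the host kernel.
    """
    return host_mem_mib // (app_mem_mib + per_instance_overhead_mib)


# Hypothetical figures, for illustration only.
HOST_MEM_MIB = 64 * 1024
APP_MEM_MIB = 512

vm_count = instances_per_host(HOST_MEM_MIB, APP_MEM_MIB,
                              per_instance_overhead_mib=1024)
container_count = instances_per_host(HOST_MEM_MIB, APP_MEM_MIB,
                                     per_instance_overhead_mib=16)

print(f"virtual machines: {vm_count}, containers: {container_count}")
```

The only thing that changes between the two calls is the per-instance overhead, which is exactly the cost a shared kernel avoids.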

A brief history of containers

The forerunner of containers can be considered the FreeBSD Jail technology, which appeared in 2000. It lets you create several independent systems, the so-called jails, inside a single FreeBSD operating system, all running on that system's kernel. Jails were conceived as isolated environments that an administrator can safely hand over to internal users or external clients. Because a jail builds on the chroot call and is a virtual environment with its own files, network and users, processes cannot reach beyond the jail and damage the host OS. However, owing to design limitations, the Jail mechanism does not isolate processes completely, and over time ways to "escape" from a jail appeared.

But the idea itself was promising, and as early as 2001 the VServer project appeared on Linux, created, according to its founder Jacques Gélinas, in order to run “several standard Linux-servers on one machine with a high degree of independence and security.” Linux thus gained a foundation for running parallel user-space environments, and what we call containers today gradually began to take shape.

On the way to practical use

A major and quick step towards isolation was the integration of existing technologies: in particular, combining the cgroups mechanism, which works at the Linux kernel level and limits the system resources used by a process or group of processes, with the systemd init system, which is responsible for setting up user space and starting processes. The combination of these mechanisms, originally created to improve overall manageability in Linux, made it possible to control isolated processes much better and laid the foundation for a successful separation of environments.
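On a Linux system you can see which control groups a process belongs to by reading `/proc/<pid>/cgroup`, where each line has the form `hierarchy-ID:controller-list:cgroup-path`. The following is a minimal Python sketch that parses this format; the function name `parse_cgroup_lines` is chosen here for illustration, and the file is only read if it exists.

```python
from pathlib import Path


def parse_cgroup_lines(text: str) -> dict[str, str]:
    """Map each controller name to the cgroup path the process belongs to.

    Each line has the form "hierarchy-ID:controller-list:cgroup-path".
    On the unified (v2) hierarchy the controller list is empty, so that
    entry is stored under the empty string.
    """
    membership = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        _hierarchy_id, controllers, path = line.split(":", 2)
        for controller in controllers.split(","):
            membership[controller] = path
    return membership


if __name__ == "__main__":
    proc_file = Path("/proc/self/cgroup")  # present only on Linux
    if proc_file.exists():
        for controller, path in parse_cgroup_lines(proc_file.read_text()).items():
            print(f"{controller or '(unified)'}: {path}")
```

Inside a container the reported paths typically differ from those of ordinary host processes, because the container runtime places the container's processes in their own group.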

The next milestone in container history was the development of user namespaces, "allowing the user and group identifiers assigned to a process to be separated inside and outside the namespace. In the context of containers, this means that users and groups can have privileges to perform certain operations inside the container, but not outside." This is similar to the Jail concept, but safer thanks to the additional process isolation.
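The separation of identifiers is described by mapping files such as `/proc/<pid>/uid_map`, where each line holds three numbers: the first ID inside the namespace, the first ID outside it, and the length of the mapped range. Here is a minimal Python sketch of that translation; the helper names and the example mapping `0 100000 65536` are assumptions made for illustration.

```python
from typing import Optional


def parse_id_map(text: str) -> list[tuple[int, int, int]]:
    """Parse uid_map/gid_map content into (inside_start, outside_start, length)."""
    entries = []
    for line in text.splitlines():
        if line.strip():
            inside_start, outside_start, length = (int(f) for f in line.split())
            entries.append((inside_start, outside_start, length))
    return entries


def to_outside_id(inside_id: int,
                  id_map: list[tuple[int, int, int]]) -> Optional[int]:
    """Translate an ID seen inside the namespace to the ID outside it.

    Returns None when the ID is not covered by any mapped range.
    """
    for inside_start, outside_start, length in id_map:
        if inside_start <= inside_id < inside_start + length:
            return outside_start + (inside_id - inside_start)
    return None


# Hypothetical mapping: IDs 0..65535 inside map to 100000..165535 outside.
example_map = parse_id_map("0 100000 65536\n")
print(to_outside_id(0, example_map))     # root inside the container
print(to_outside_id(1000, example_map))  # an ordinary user inside
```

With such a mapping, a process that is root (ID 0) inside the container is just an unprivileged user with a high-numbered ID on the host.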

Then came the Linux Containers project (LXC), which offered a number of much-needed tools, templates, libraries and language bindings, greatly simplifying the practical use of containers.

The arrival of Docker

In 2008, Docker (then called dotCloud) came on the scene with the technology of the same name, combining the achievements of LXC with advanced tools for developers and making containers even easier to use. Today, the open source Docker technology is the best-known and most popular tool for deploying and managing Linux containers.

Along with many other companies, Red Hat and Docker are members of the Open Container Initiative (OCI), which aims to unify the standards for managing container technology.

Standardization and the Open Container Initiative

The Open Container Initiative project is sponsored by the Linux Foundation. It was established in 2015 "to create open industry standards for container formats and runtimes." Currently, its main task is to develop specifications for container images and runtimes.

The runtime specification defines a set of open standards that describe the composition and structure of a container's filesystem bundle and how the runtime should unpack it. Essentially, this specification exists so that a container works as intended, with all the necessary resources available and located in the right places.

The container image specification defines standards for "image manifest, file system serialization, and image configuration."

Together, these two specifications define what is inside a container image, along with its dependencies, environment, arguments, and other parameters needed for the container to run correctly.
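To give a feel for what an image manifest looks like, here is a minimal Python sketch that assembles one. The field names and media types follow the OCI image specification, but the blobs are placeholders invented for illustration: a real image configuration carries more fields, and a real layer is a tar archive of files.

```python
import hashlib
import json


def descriptor(media_type: str, blob: bytes) -> dict:
    """Build a content descriptor: media type, sha256 digest and size of a blob."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


# Placeholder content, for illustration only.
config_blob = json.dumps({"architecture": "amd64", "os": "linux"}).encode()
layer_blob = b"placeholder for a layer tarball"

manifest = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.manifest.v1+json",
    "config": descriptor("application/vnd.oci.image.config.v1+json", config_blob),
    "layers": [descriptor("application/vnd.oci.image.layer.v1.tar", layer_blob)],
}

print(json.dumps(manifest, indent=2))
```

Because every blob is referenced by the digest of its content, any tool that follows the specification can verify that it received exactly the configuration and layers the manifest describes.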

Containers as an abstraction

Linux containers are another evolutionary step in how applications are developed, deployed and maintained. By providing portability and version control, a container image helps ensure that if the application runs on the developer's computer, it will also run in production.

While demanding fewer system resources than a virtual machine, a Linux container comes close to it in isolation capabilities and greatly simplifies the maintenance of composite multi-tier applications.

The point of Linux containers is to speed up development and help you respond quickly to business requirements as they arise, rather than to provide any particular software for solving emerging problems. Moreover, you can package in a container not only an entire application but also individual parts of an application and individual services, and then use technologies like Kubernetes to automate and orchestrate such containerized applications. In other words, you can create monolithic solutions where all the application logic, runtime components and dependencies live in the same container, or you can build distributed applications from many containers that work as microservices.

Containers in production

Containers are a great way to speed up the delivery of software and applications to the customers who use them in production environments. But this naturally increases responsibility and risk. Josh Bressers, Security Strategist at Red Hat, explains how to keep containers secure.

“I have been dealing with security issues for a very long time, and they are almost never given due attention until a technology or idea becomes mainstream,” Bressers laments. “Everyone agrees that this is a problem, but that is how the world works. Containers are taking over the world today, and their place in the overall security picture is starting to become clear. I must say that containers are nothing special; they are just another tool. But since they are in the spotlight today, it is time to talk about their security.

“At least once a week, someone assures me that running workloads in containers is secure, so there is no need to worry about what is inside them. In fact, that is completely wrong, and such an attitude is very dangerous. Security inside a container is just as important as security anywhere else in the IT infrastructure. Containers are already here; they are actively used and are spreading with amazing speed. However, there is nothing magical about them in terms of security. A container is only as secure as its contents. So if your container holds a bunch of vulnerabilities, the result will be exactly the same as on bare metal with the same bunch of vulnerabilities.”

What's wrong with container security

Container technology is changing the way we look at the computing environment. The essence of the new approach is that you have an image that contains only what you need and that you launch only when it is needed. You no longer have extraneous software that was installed for reasons nobody remembers and that can cause big trouble. From a security point of view, this is a matter of the “attack surface.” The fewer things you have running in a container, the smaller this surface and the higher the security. However, even if only a few programs run inside the container, you still need to make sure that its contents are not outdated and riddled with vulnerabilities. A small attack surface is of little help if something installed inside has serious security vulnerabilities.

Containers are not omnipotent

Banyan released a report titled “Over 30% of official images in the Docker Hub have high criticality security vulnerabilities.” 30% is a lot. Since Docker Hub is a public registry, it contains a ton of containers created by a wide variety of people. And since anyone can publish containers in such a registry, no one can guarantee that a newly published container does not contain old, vulnerable software. Docker Hub is both a blessing and a curse. On the one hand, it saves a lot of time and effort when working with containers; on the other hand, it gives no guarantee that a downloaded container is free of known security vulnerabilities.

Most of these vulnerable images are not malicious; nobody put vulnerable software into them with ill intent. Someone simply packaged the software in a container at some point and published it on Docker Hub. As time passed, a vulnerability was discovered in that software. And until someone keeps track of this and updates the image, Docker Hub will remain a breeding ground for vulnerable images.

When containers are deployed, base images are usually “pulled” from a registry. If it is a public registry, you cannot always tell what you are dealing with, and in some cases you may get an image with very serious vulnerabilities. The contents of a container really matter. That is why a number of organizations have started building scanners that look inside container images and report the vulnerabilities they find. But scanners are only half the solution: once a vulnerability is found, you still need to find and install a security update for it.
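The core idea of such a scanner can be sketched in a few lines of Python: compare the package versions found in an image with the versions in which known vulnerabilities were fixed. Everything in this sketch is hypothetical, including the package names, the version numbers and the advisory data; real scanners read the image's package database, use vulnerability feeds, and apply distribution-specific version comparison rather than the simple dotted-number comparison used here.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted version such as "1.0.2" into (1, 0, 2) for comparison."""
    return tuple(int(part) for part in version.split("."))


def scan(installed: dict[str, str], fixed_in: dict[str, str]) -> list[str]:
    """Report packages whose installed version is older than the fixed version."""
    findings = []
    for name, version in sorted(installed.items()):
        fix = fixed_in.get(name)
        if fix is not None and parse_version(version) < parse_version(fix):
            findings.append(f"{name} {version} is vulnerable, update to {fix} or later")
    return findings


# Hypothetical package inventory of an image and hypothetical advisory data.
image_packages = {"examplelib": "1.0.1", "exampletool": "2.4.0"}
fixed_versions = {"examplelib": "1.0.2", "exampletool": "2.3.9"}

for finding in scan(image_packages, fixed_versions):
    print(finding)
```

The report is only the first half of the job: each finding still has to be followed by rebuilding the image with the updated package and redeploying the containers that run from it.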

Of course, you can give up third-party containers entirely and build and manage everything yourself, but that is a very hard path, and it can seriously distract you from your main tasks and goals. It is therefore much better to find a partner who understands container security and can solve the corresponding problems, so that you can focus on what you really need.

Red Hat Container Solutions

Red Hat offers a fully integrated platform for deploying Linux containers, suitable both for small pilot projects and for complex systems built from orchestrated multi-container applications. It spans everything from the operating system for the host where the containers run, through verified container images for building your own applications, to orchestration platforms and management tools for production container environments.

Infrastructure

- Host

Red Hat Enterprise Linux (RHEL) is a Linux distribution that has earned a strong worldwide reputation for trust and certification. If you only need to support containerized applications, you can use the specialized Red Hat Enterprise Linux Atomic Host distribution. It supports building container solutions and distributed systems and clusters, but does not include the general-purpose operating system functionality found in RHEL.

- Inside a container

Using Red Hat Enterprise Linux inside containers ensures that regular, non-containerized applications deployed on the RHEL platform will work just as well inside containers. If the organization develops its own applications, RHEL inside containers lets it keep the familiar level of technical support and updates for containerized applications. It also provides portability: applications will run without problems wherever there is RHEL, from the developer's machine to the cloud.

- Storage

Containers may require a lot of storage space. In addition, they have one design weakness: when a container crashes, a stateful application inside it loses all its data. Integrated with the Red Hat OpenShift platform, Red Hat Gluster Storage provides flexible, managed storage for containerized applications, eliminating the need to deploy a separate storage cluster or to spend money on costly expansion of traditional monolithic storage systems.

- Infrastructure-as-a-Service (IaaS)

Red Hat OpenStack Platform combines physical servers, virtual machines and containers into a single unified platform. As a result, container technologies and containerized applications are fully integrated with the IT infrastructure, paving the way for full automation, self-service and resource quotas across the whole technology stack.

Platform

- Container Application Platform

The Red Hat OpenShift platform integrates key container technologies such as docker and Kubernetes with the enterprise-class Red Hat Enterprise Linux operating system. The solution can be deployed in a private cloud or in public cloud environments, including with support provided by Red Hat. In addition, it supports both stateful and stateless applications, so existing applications can be moved to containers without re-architecting them.

- All-in-One Solution

Sometimes it is better to get everything at once. That is what the Red Hat Cloud Suite package is for: it includes a container application development platform, infrastructure components for building a private cloud, tools for integrating with public cloud environments, and a common management system for all components. Red Hat Cloud Suite lets you modernize your corporate IT infrastructure so that developers can quickly create and deliver services to employees and customers, while IT professionals keep centralized control over all components of the IT system.

Management

- Hybrid Cloud Management

Success depends on flexibility and choice. There are no universal solutions, so when it comes to corporate IT infrastructure it is always worth having more than one option. By complementing public cloud platforms, private clouds and traditional data centers, containers widen the choice. Red Hat CloudForms lets you manage hybrid clouds and containers in an easily scalable and understandable way by integrating container management systems such as Kubernetes and Red Hat OpenShift with Red Hat Virtualization and VMware virtual environments.

- Container automation

Creating and managing containers is often monotonous and time-consuming work. Ansible Tower by Red Hat lets you automate it and eliminates the need to write shell scripts and perform operations manually. Ansible playbooks make it possible to automate the entire container life cycle, including build, deployment and management. You are freed from routine work and gain time for more important things.

The containers and most of the technologies for deploying and managing them are released by Red Hat as open source software.

Linux containers are another evolutionary step in the way we develop, deploy, and manage applications. They provide portability and version control, helping to ensure that what works on a developer's laptop will also work in production.

Do you use containers in your work and how do you assess their prospects? Share your pain, hopes and successes.