iOS Safari 11 Now Supports WebRTC

A couple of weeks ago, the new iPhones and iOS 11 were released, which was hard to miss. The release brought another event that matters to developers: the long-awaited WebRTC support appeared in the Safari browser.

Just imagine: millions of iPhones and iPads around the world can now handle real-time audio and video in a browser. iOS and Mac users get full-featured in-browser video chats, live streaming with low (sub-second) latency, calls, conferences, and much more. It was a long time coming, and it has finally happened.

How it was

Earlier, we wrote about a way to play video with minimal delay in iOS Safari, and this method is still relevant for iOS 9 and iOS 10, which have no WebRTC support. We suggested an approach codenamed “WSPlayer”: a live video stream is delivered from the server over the WebSocket protocol, decoded in JavaScript, and drawn into an HTML5 Canvas element using WebGL. The received audio stream is played through the browser's Web Audio API. This is how it looked:
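The pipeline described above can be sketched roughly as follows. This is not the actual WSPlayer code: `decodeFrame`, `drawFrame`, and `playAudio` are hypothetical callbacks standing in for the JavaScript decoder, the WebGL/Canvas renderer, and the Web Audio output. The packet framing (one media packet per WebSocket message, with a leading type byte) is likewise an assumption made only for illustration.

```javascript
// Minimal sketch of a WSPlayer-style pipeline (not the real WSPlayer code).
// Assumption: every binary WebSocket message holds one media packet whose
// first byte is a type tag (0 = video, 1 = audio). This framing is made up
// for the example.
const PACKET_VIDEO = 0;
const PACKET_AUDIO = 1;

function attachPlayer(socket, { decodeFrame, drawFrame, playAudio }) {
  socket.binaryType = 'arraybuffer';
  socket.onmessage = (event) => {
    const bytes = new Uint8Array(event.data);
    if (bytes.length < 2) return; // tag only, nothing to decode
    const payload = bytes.subarray(1);
    if (bytes[0] === PACKET_VIDEO) {
      // Decoding runs in JavaScript, which is what loads the CPU.
      const picture = decodeFrame(payload);
      if (picture) drawFrame(picture); // e.g. upload to a WebGL texture
    } else if (bytes[0] === PACKET_AUDIO) {
      playAudio(payload); // e.g. schedule a buffer via the Web Audio API
    }
  };
  return socket;
}
```

In a browser, `socket` would be a `WebSocket` connected to the streaming server, and the three callbacks would wrap a real decoder, a canvas, and an `AudioContext`.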

This approach made it possible, and still does, to play a stream on a page in iOS Safari with a delay of about 3 seconds, but it has the following disadvantages:

1. Performance.

The video stream is decoded in JavaScript. This puts a fairly high load on the CPU of the mobile device, rules out high resolutions, and drains the battery.

2. TCP.

The transport protocol used for video and audio is WebSocket over TCP. For this reason, the delay cannot be targeted, and it can grow depending on network fluctuations.

Until iOS 11 came out, WSPlayer could play video with a relatively low latency (about 3 seconds) compared to HLS (about 20 seconds). Now everything has changed for the better: the JavaScript player is being replaced by native WebRTC, where the browser itself does all the work, with no decoding in JavaScript and no Canvas.

How it is now

With the advent of WebRTC, the low-latency video playback scheme in iOS Safari 11 has become identical to that of other browsers that already support WebRTC, namely Chrome, Firefox, and Edge.

Microphone and camera

Above, we covered only real-time video playback. However, a video chat cannot work without a camera and microphone, and this was the main obstacle and headache for developers who planned to support iOS Safari in their video chat or other live video web project. Countless man-hours were wasted searching for a solution in iOS Safari 9 and 10, where no solution existed: Safari simply could not capture the camera and microphone. This “not a bug, but a feature” was fixed only recently, in iOS 11.

We launch Safari on iOS 11 and request access to the camera and microphone. This simple thing is what we had been waiting for, and as you can see, the wait is over:

The browser asks for access to the camera and microphone, and can then either broadcast a live stream or play audio and video.
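For reference, the permission prompt is triggered by the standard `getUserMedia` call. The sketch below is a minimal illustration, not the code of the demo: the function name `startLocalPreview` is hypothetical, and `mediaDevices` is passed as a parameter only so that the function can be exercised outside a browser.

```javascript
// Sketch: ask for the camera and microphone and show a local preview.
// `mediaDevices` defaults to the browser's navigator.mediaDevices.
async function startLocalPreview(videoElement, mediaDevices = navigator.mediaDevices) {
  if (!mediaDevices || typeof mediaDevices.getUserMedia !== 'function') {
    throw new Error('getUserMedia is not available in this browser');
  }
  // This call makes the browser show the allow/deny dialog.
  const stream = await mediaDevices.getUserMedia({ audio: true, video: true });
  videoElement.srcObject = stream;
  videoElement.muted = true;       // avoid echo from our own microphone
  videoElement.playsInline = true; // assumption: the preview should stay inline on iPhone
  return stream;
}
```

If the user denies access, the returned promise rejects, so callers should be ready to handle that case.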

You can also open the Safari settings and turn the microphone on or off there:

Camera display and video streaming

It is not without its “features”, though. The main peculiarity of video playback in a video element is that the user has to click (tap) on the page before playback starts.

For developers, this is an obvious limitation and a hindrance. If the customer demands “I want the video to start playing automatically after the page is loaded”, this will not work in iOS Safari, and the developer will have to explain that Safari and Apple's security policy are to blame.

For users, this can be a blessing: sites cannot play a stream without the user's knowledge, because by clicking a UI element on the page the user formally confirms the wish to play this video stream.
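In practice, the restriction means that playback has to be started from inside a click (tap) handler. A minimal sketch with the standard `HTMLMediaElement.play()` method is shown below; the helper name `enableTapToPlay` and the `onBlocked` callback are hypothetical.

```javascript
// Sketch: start playback only from a user gesture, as iOS Safari requires.
function enableTapToPlay(button, videoElement, onBlocked = console.warn) {
  button.addEventListener('click', () => {
    // In modern browsers play() returns a promise that rejects when the
    // browser refuses to start playback.
    const result = videoElement.play();
    if (result && typeof result.catch === 'function') {
      result.catch(onBlocked);
    }
  });
}
```

Calling `videoElement.play()` directly on page load, outside such a handler, is exactly the case that iOS Safari blocks.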

What about Mac OS?

There is good news for MacBook and Mac OS users too. After the upgrade, Safari 11 for Mac also supports WebRTC. Before that, Safari on Mac relied on the good old Flash Player, which does the same job as WebRTC: it compresses and plays audio and video over the network using the RTMP and RTMFP protocols. With the advent of WebRTC, Flash Player is no longer needed for video chat. Therefore, for Safari 11+ we use WebRTC, and for Safari 10 and earlier we keep using Flash Player or WebRTC plug-ins as a fallback.

Summing up the status

As you can see, WebRTC support was added in Safari 11, while for Safari 9 and 10 the fallbacks remain: Flash Player and WebRTC plug-ins on Mac OS, and WSPlayer on iOS.

| Mac, Safari 10 | iOS 9, 10, Safari | Mac, Safari 11 | iOS 11, Safari |
| --- | --- | --- | --- |
| Flash Player, WebRTC plugins, WSPlayer | WSPlayer | WebRTC | WebRTC |
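The same support matrix can be expressed as a small lookup function. This is only a sketch: the function name `providersFor`, the platform keys, and the provider strings are illustrative, and Safari versions older than those in the table are simply treated like Safari 9 and 10.

```javascript
// Sketch of the support matrix as a lookup.
// Returns the usable media technologies in order of preference.
function providersFor(platform, safariMajorVersion) {
  if (platform !== 'mac' && platform !== 'ios') {
    throw new Error(`Unknown platform: ${platform}`);
  }
  if (safariMajorVersion >= 11) {
    return ['WebRTC'];
  }
  // Safari 10 and earlier: fallbacks only.
  return platform === 'mac'
    ? ['Flash Player', 'WebRTC plugin', 'WSPlayer']
    : ['WSPlayer'];
}
```

For example, `providersFor('ios', 10)` yields only WSPlayer, while any Safari 11 combination yields WebRTC.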

Testing broadcast from browser to browser

Now let's check the main cases in practice, starting with the player. First of all, we install the iOS 11.0.2 update with the new Safari.

In the first test, Chrome for Windows broadcasts a video stream to the server, and a viewer in iOS Safari plays it via WebRTC.

We open the Two Way Streaming example in Chrome and send a WebRTC video stream named 1ad5 to the server. Chrome captures video from the camera, encodes it with the H.264 codec in this case, and sends the live stream to the server for further distribution. The broadcast looks like this:

To play it, we specify the stream name, and the player in iOS Safari starts playing the stream that Chrome sent to the server. Playing the stream on an iPhone in Safari looks like this:

The delay is imperceptible (less than a second). The video plays smoothly, with no visible artifacts. The playback quality is normal, as the screenshots show.

And this is what playback looks like in the Play block of the same Two Way Streaming example. One stream can thus be broadcast while another is played on the same browser page. If users know each other's stream names, we get a simple video chat.

Testing webcam and microphone broadcasts using iOS Safari

As we wrote above, the main feature of WebRTC is the ability to capture the camera and microphone in the browser and send the media over the network with a targeted low latency. Let's check how this works in iOS Safari 11.

In Safari, we open the same streamer demo that we opened in Chrome and request access to the camera and microphone. Safari displays a dialog asking to allow or deny their use.

After access is allowed, a red camera icon appears in the upper left corner of the browser. This is how Safari shows that the camera is active and in use. At this point, the video stream is being sent to the server.

We pick up this stream in another browser, for example Chrome. On playback, we see the stream from Safari in the much-cursed vertical video format, simply because the device was not rotated.

After we change the orientation of the iPhone, the played stream looks normal:

Capturing and broadcasting video is always technologically more interesting than playback, because this is where the important RTCP feedback comes into play, which is used to target the delay and quality of the video.

At the time of writing, we did not find any suitable WebRTC monitoring tools in the browser for iOS Safari, similar to webrtc-internals for Chrome. Let's see how the server sees the video stream captured from Safari. To do this, turn on monitoring and check the main graphs that describe traffic coming from Safari.

The first group of graphs shows metrics such as NACK and PLI, which indicate UDP packet loss. The number of NACKs shown in the graphs, about 15, is insignificant for a normal network, so we consider the readings to be within normal limits.

The FPS of the video stream fluctuates between 29 and 31 and does not sag to low values (10-15). This means that the iPhone's hardware accelerator is powerful enough to encode video with the H.264 codec, and the CPU has enough capacity to stream this video to the network. For this test, we used an iPhone 6, 16 GB.

The following graphs show how the video resolution and bitrate change. The video bitrate varies in the range of 1.2 - 1.6 Mbps, and the resolution stays at 640x480. This suggests that there is enough bandwidth and that Safari encodes the video at the maximum bitrate. If desired, the bitrate can be clamped to the desired limits.

Next, we check the bitrate of the audio component of the stream and the audio loss statistics. The graph shows that no audio is lost: the loss counter is at zero. The audio bitrate is 30-34 kbps. This is the Opus codec, which Safari uses to compress the audio stream captured from the microphone.

The last chart shows timecodes. We use it to evaluate how well audio and video are synchronized. Without synchronization, the mismatch becomes visible: the voice lags behind the lips, or the other way round. In this case, the stream from Safari arrives perfectly in sync and monotonically, without the slightest deviation.

The graphs show a picture typical of WebRTC, and behavior very similar to that of Google Chrome: NACK and PLI feedback arrives, the FPS changes slightly, and the bitrate floats. In other words, we get the WebRTC we have been waiting for.
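The graphs above come from server-side monitoring. For comparison, similar counters can in principle be read in the browser through the standard `RTCPeerConnection.getStats()` API. The sketch below is an illustration under assumptions: the helper names are hypothetical, the field names follow the W3C WebRTC statistics specification, and whether a particular Safari version fills in every field has not been verified here.

```javascript
// Sketch: pull a few outbound video metrics from an RTCStatsReport
// (a Map-like object returned by RTCPeerConnection.getStats()).
function summarizeOutboundVideo(report) {
  let summary = null;
  report.forEach((stat) => {
    const isVideo = stat.kind === 'video' || stat.mediaType === 'video';
    if (stat.type === 'outbound-rtp' && isVideo) {
      summary = {
        timestamp: stat.timestamp, // milliseconds
        bytesSent: stat.bytesSent,
        nackCount: stat.nackCount,
        pliCount: stat.pliCount,
        framesPerSecond: stat.framesPerSecond,
        width: stat.frameWidth,
        height: stat.frameHeight,
      };
    }
  });
  return summary;
}

// Average bitrate in kbps between two summaries taken at different times.
// Bits divided by milliseconds gives kilobits per second.
function bitrateKbps(previous, current) {
  const elapsedMs = current.timestamp - previous.timestamp;
  if (elapsedMs <= 0) return 0;
  return ((current.bytesSent - previous.bytesSent) * 8) / elapsedMs;
}
```

Sampling `getStats()` once a second and feeding consecutive summaries to `bitrateKbps` would produce a bitrate curve comparable to the one described above, provided the application has access to the underlying peer connection.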

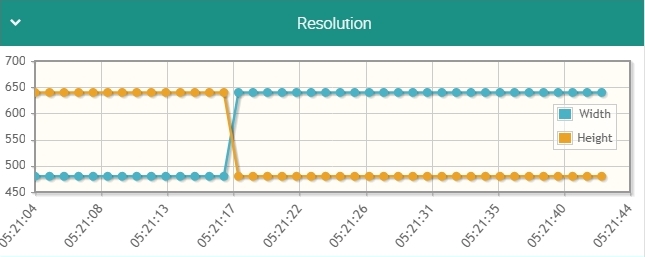

Pay attention to the changes in height and width. For example, if you rotate the device to a horizontal position, the resolution of the stream is swapped, for example from 640x480 to 480x640, as in the graph below.

The orange line in the graph shows the width of the image, and the blue one shows the height. At 05:21:17 we rotate the streaming iPhone to a horizontal position, and the resolution of the stream is swapped: 480 in width and 640 in height.

Testing video playback from an IP camera in WebRTC for iOS Safari

An IP camera is most often a compact Linux server that streams over RTSP. In this test, we take video from an IP camera with H.264 support and play it in iOS Safari via WebRTC. To do this, in the player shown above we enter the camera's RTSP address instead of a stream name.
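In code, this amounts to passing the RTSP address where a stream name would normally go. The sketch below assumes an already established Web SDK `session` object with the `createStream(...).play()` call used in this article's code section. The helper names `isRtspUrl` and `playSource` are hypothetical, and the address in the usage note is a placeholder.

```javascript
// Sketch: play either a named stream or an RTSP source through the same call.
function isRtspUrl(source) {
  return /^rtsps?:\/\//i.test(source);
}

function playSource(session, source, display) {
  const name = String(source).trim();
  if (name === '') {
    throw new Error('A stream name or an RTSP URL is required');
  }
  // The RTSP address simply takes the place of the stream name.
  const stream = session.createStream({ name, display });
  stream.play();
  return { stream, kind: isRtspUrl(name) ? 'rtsp' : 'named' };
}
```

A call could look like `playSource(session, 'rtsp://192.0.2.10/live', document.getElementById('myVideo'))`. On iOS Safari it should be made from a click handler because of the tap-to-play requirement.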

Playing a stream from an IP camera in Safari via WebRTC looks like this:

In this case, the video plays back smoothly, without any problems with the picture. But much depends on the source of the stream, that is, on how the video from the camera reaches the server.

As a result, we successfully tested the following 3 cases:

- Broadcast from Chrome to Safari

- Capture camera and microphone with streaming from Safari to Chrome

- Play video from an IP camera in iOS Safari

A bit about the code

For broadcasting video streams, we use the universal API (Web SDK), which for broadcasting looks like this:

    session.createStream({name:'stream22',display:document.getElementById('myVideo')}).publish();

Here we set the unique stream name stream22 and use the div element with the id myVideo to display the captured camera on a web page.

Playing the same video stream in a browser works like this:

    session.createStream({name:'stream22',display:document.getElementById('myVideo')}).play();

That is, we again specify the stream name and the div element in which the video should be placed for playback, and then call the play() method.
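Combining the two calls gives the simple video chat mentioned earlier: each side publishes its own stream and plays the other's. The sketch below assumes an already established `session` and that the participants agreed on stream names in advance. The wrapper function `startSimpleChat` and its option names are hypothetical.

```javascript
// Sketch: a two-way "chat" built from one publish and one play.
function startSimpleChat(session, { myStreamName, peerStreamName, localDisplay, remoteDisplay }) {
  if (myStreamName === peerStreamName) {
    throw new Error('Each participant needs a unique stream name');
  }
  // Send our camera and microphone to the server.
  const outgoing = session.createStream({ name: myStreamName, display: localDisplay });
  outgoing.publish();
  // Receive the other participant's stream from the server.
  const incoming = session.createStream({ name: peerStreamName, display: remoteDisplay });
  incoming.play();
  return { outgoing, incoming };
}
```

On iOS Safari this function would need to be called from a click (tap) handler so that playback is allowed to start.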

iOS Safari is currently the only browser in which the user has to click on a page element for the video to play.

Therefore, we added a small piece of code specifically for iOS Safari, which “activates” the video element before playing the stream:

    if (Flashphoner.getMediaProviders()[0] === "WSPlayer") {
        Flashphoner.playFirstSound();
    } else if ((Browser.isSafariWebRTC() && Flashphoner.getMediaProviders()[0] === "WebRTC") || Flashphoner.getMediaProviders()[0] === "MSE") {
        Flashphoner.playFirstVideo(remoteVideo);
    }

In the standard player, this code is called when the Play button is clicked, so we meet Apple's requirement and start playback correctly.

In conclusion

iOS 11 Safari has finally got WebRTC support, and it is unlikely to be dropped in upcoming updates. So we boldly take this opportunity and build real-time video streaming and calls in this browser. We keep installing iOS 11.x updates and wait for new fixes and new bugs posing as features. Happy streaming!

References

WCS - the server with which we tested live streams on iOS 11 Safari

Two Way Streaming - an example of the streamer

Source Two Way Streaming - the sources of the streamer

Player - an example of the player

Source Player - the sources of the player

WSPlayer - playback of streams with low latency in iOS 9, 10 Safari