The State of Network Security in 2016: a detailed report by Qrator Labs and Wallarm

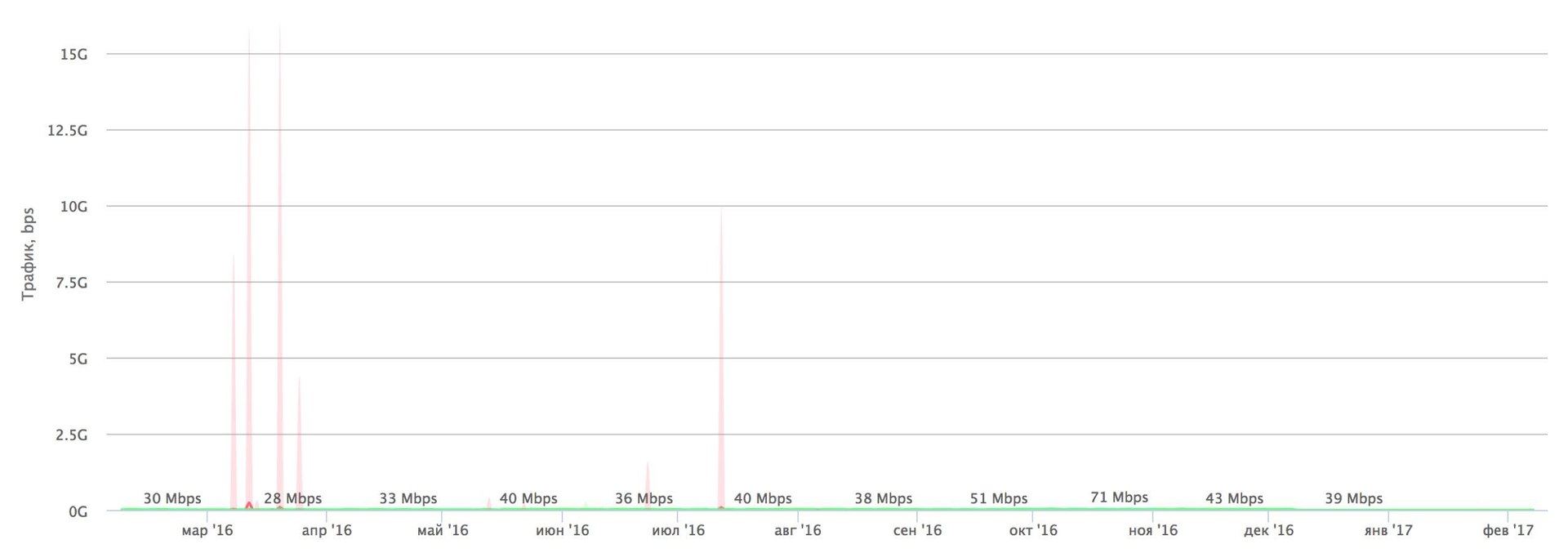

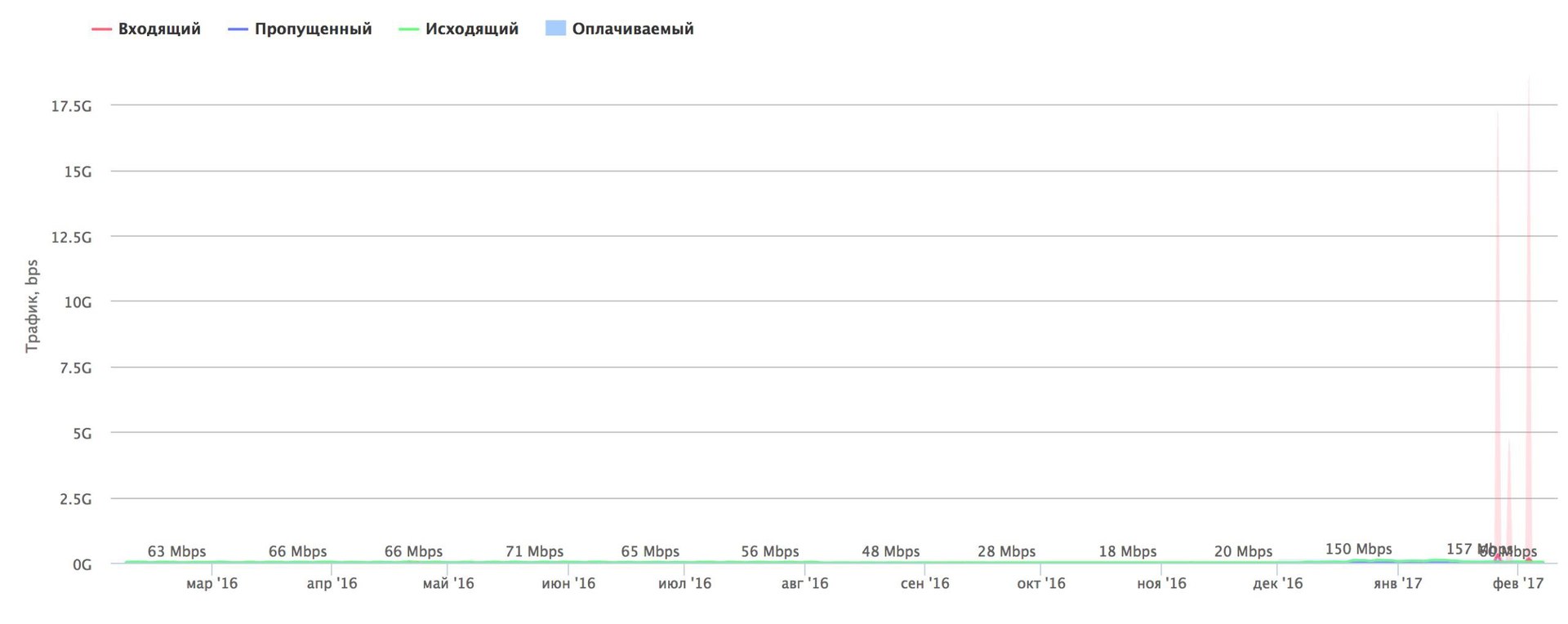

"Habraeffect" on the contrary - attacks on Habrahabr for a year (above) and on Giktayms (below). In February 2017, the attack at 17.5 Gbit / s was neutralized on the Giktayms.

As a company whose core business is DDoS mitigation, we observed several changes in the industry last year.

Denial-of-service incidents are in the news again, but now well-executed attacks threaten the availability of entire regions. The problem demands attention once more, as if we had gone back 5–7 years.

Until last year, it might have seemed that the DDoS problem was already well solved.

But last year the power and complexity of attacks increased radically. Previously, even powerful attacks of 100-300 Gbit/s did not cause much of a headache, and complex attacks on application-layer protocols were rare.

Then in 2016 the world saw its first 1 Tbps attacks, and application-layer (L7) attacks became much more common.

Attack Simplification

There are several reasons for these changes.

All these years, information technology has evolved along a kind of "path of least resistance." Companies raced against time and competitors, and the winner was whoever cut costs successfully and in time. Building a competitive product often meant neglecting security.

The whole modern Internet was formed in the same way: the creation of its protocols and specifications gave rise to similar problems.

And last year, these problems reached a critical level. In fact, we have witnessed unprecedented changes in the overall security situation of the Web.

Changes in attack activity by industry, 2015-2016

A good illustration is the Mirai threat of fall 2016: a botnet of unprecedented power built on IoT devices, from home routers and IP cameras to ridiculous “exotica” such as Wi-Fi dummies. The danger of Mirai turned out to be quite real: a very tangible 620 Gbit/s volumetric attack hit researcher Brian Krebs's blog, and the French hosting provider OVH withstood 990 Gbit/s.

We also encountered Mirai ourselves last year, in the form of a 120 Gbit/s attack.

The DNS provider Dyn, used by many Fortune 500 companies, suffered the most from Mirai. A water torture attack on its DNS servers, with TCP and UDP traffic on port 53 reaching 1.2 Tbps from one hundred thousand nodes, took the world's largest websites offline for several hours. Protecting DNS is especially difficult. Typically, junk traffic comes from a dozen or so ports (53, 123, and so on), which can be filtered. For a DNS server, however, closing port 53 means stopping the normal operation of the service.
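Why port-based filtering does not carry over to DNS can be shown with a small sketch. It is a toy model, not a real packet filter: the `Packet` type, the `passes_port_filter` function and the sample traffic are hypothetical, and only ports 53 and 123 come from the text.

```python
from dataclasses import dataclass

# Ports named in the text as typical for junk traffic; a real list would be longer.
JUNK_PORTS = frozenset({53, 123})


@dataclass(frozen=True)
class Packet:
    src_port: int
    dst_port: int
    legitimate: bool  # ground truth, known only inside this toy model


def passes_port_filter(packet: Packet, blocked_ports: frozenset) -> bool:
    """Naive filter: drop a packet if either of its ports is blocked."""
    return (packet.src_port not in blocked_ports
            and packet.dst_port not in blocked_ports)


def legit_pass_rate(packets, blocked_ports) -> float:
    """Share of legitimate packets that survive the filter."""
    legit = [p for p in packets if p.legitimate]
    passed = [p for p in legit if passes_port_filter(p, blocked_ports)]
    return len(passed) / len(legit)


# A web server: clients talk to port 443, junk arrives from ports 53 and 123.
web_traffic = [
    Packet(src_port=50123, dst_port=443, legitimate=True),
    Packet(src_port=50124, dst_port=443, legitimate=True),
    Packet(src_port=53, dst_port=40000, legitimate=False),
    Packet(src_port=123, dst_port=40001, legitimate=False),
]

# A DNS server: real queries and junk queries both arrive on port 53.
dns_traffic = [
    Packet(src_port=50123, dst_port=53, legitimate=True),
    Packet(src_port=50124, dst_port=53, legitimate=True),
    Packet(src_port=50125, dst_port=53, legitimate=False),
]

print(legit_pass_rate(web_traffic, JUNK_PORTS))  # web clients are unaffected
print(legit_pass_rate(dns_traffic, JUNK_PORTS))  # DNS clients are cut off as well
```

In this model the filter removes all junk aimed at the web server without touching its clients, while the same rule applied to the DNS server blocks every legitimate query along with the attack.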

The Mirai botnet itself consisted of Internet-connected devices with default login and password pairs and fairly simple vulnerabilities. We believe it is only the first of a whole generation of Internet of Things botnets. Even solving the problem of Mirai alone will not help.

At first, attackers simply brute-forced passwords. Now they look for vulnerabilities and backdoors, going as far as studying the code of a device's latest firmware for possible “holes” and exploiting them within a few hours.

The startup boom and the resulting growth in the number of connected devices open a rich new field in which more than one even larger and more dangerous botnet can be built. In 2016, the seemingly unattainable terabit per second suddenly became reality.

What attacks will we have to face in, say, 2019?

At the same time, the experience and knowledge required to organize a DDoS attack have fallen markedly. Today, a successful attack, even on large sites and applications, takes no more than video instructions on YouTube or a little cryptocurrency to pay for a booter service. So in 2017 the most dangerous person in cybersecurity may well be an ordinary teenager with a couple of bitcoins in their wallet.

Amplification

To increase the power of an attack, attackers amplify it. By exploiting vulnerabilities in third-party services, an attacker multiplies the amount of “junk” traffic sent and also masks the addresses of the real botnet. A typical example of an amplified attack is DNS response traffic directed at the victim's IP address.
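The arithmetic behind amplification is simple enough to sketch. The request and response sizes and the attacker's bandwidth below are hypothetical round numbers chosen for illustration, not measurements from the report, and the function names are ours.

```python
def amplification_factor(request_bytes: int, response_bytes: int) -> float:
    """How many bytes reach the victim for each byte the attacker sends."""
    return response_bytes / request_bytes


def reflected_bandwidth(attacker_bps: float, factor: float) -> float:
    """Traffic arriving at the victim, assuming every request is answered."""
    return attacker_bps * factor


# Hypothetical sizes: a 64-byte request that triggers a 3200-byte response.
factor = amplification_factor(request_bytes=64, response_bytes=3200)

# Hypothetical attacker sending 1 Gbit/s of spoofed requests.
victim_bps = reflected_bandwidth(attacker_bps=1e9, factor=factor)

print(f"amplification factor: {factor:.0f}x")
print(f"traffic at the victim: {victim_bps / 1e9:.0f} Gbit/s")
```

Under these assumptions the third-party service multiplies the attacker's traffic fifty-fold, and the victim only ever sees the addresses of the responding servers.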

Another vector is WordPress, a ubiquitous and feature-rich blogging engine. Among its other features, this CMS has Pingback, which lets standalone blogs exchange information about comments and mentions. A vulnerability in Pingback allows a specially crafted XML request to force a vulnerable server to request any web page on the Internet. The resulting malicious traffic is called WordPress Pingback DDoS.

An attack over HTTPS is no harder to launch than one over HTTP: the attacker just specifies a different protocol. Neutralizing it takes a link of 20 Gbit/s, the ability to process application-layer traffic at full link bandwidth, and real-time decryption of all TLS connections. These are significant technical requirements that not everyone can meet. Add to this the huge number of vulnerable WordPress servers: hundreds of thousands can take part in a single attack. Each server has a good connection and decent performance, and its participation in the attack is invisible to ordinary users.

We first saw this vector used in 2015, and it still works. We expect attacks of this type to grow in frequency and power. Amplification via WordPress Pingback or DNS is already well established. In the future we will probably see younger protocols exploited, primarily gaming ones.
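On the receiving side, one possible way to recognize Pingback traffic is by its User-Agent header. The sketch below assumes that pingback verification requests carry a User-Agent of the form `WordPress/<version>; <site URL>; verifying pingback from <IP>`. This is an assumption about WordPress behaviour that should be checked against real traffic, and the function name and sample headers are hypothetical.

```python
import re
from typing import Optional

# Assumed header format:
#   WordPress/<version>; <site URL>; verifying pingback from <IP>
PINGBACK_UA = re.compile(
    r"^WordPress/(?P<version>[\d.]+); (?P<site>\S+); "
    r"verifying pingback from (?P<initiator>\S+)$"
)


def pingback_initiator(user_agent: str) -> Optional[str]:
    """Return the address that triggered the pingback, or None for other traffic."""
    match = PINGBACK_UA.match(user_agent)
    return match.group("initiator") if match else None


# Hypothetical sample headers (documentation-range address and example domain).
pingback_ua = "WordPress/4.7.2; http://blog.example; verifying pingback from 203.0.113.7"
browser_ua = "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36"

print(pingback_initiator(pingback_ua))
print(pingback_initiator(browser_ua))
```

If the assumption holds, such a check separates reflected requests from ordinary visitors. It does nothing about the bandwidth and TLS decryption requirements described above.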

BGP and leaks

The founding fathers of the Internet could hardly have predicted that it would grow to its current size. The network they created was built on trust, and that trust was lost as the Internet boomed. BGP was created when the total number of autonomous systems (AS) was counted in the dozens. Now there are more than 50 thousand.

The BGP routing protocol appeared in the late 1980s as a napkin sketch by three engineers. Not surprisingly, it answers the questions of a bygone era. Its logic says that packets should take the best of the available paths. The financial relations between organizations and the politics of huge structures had no place in it.

But in the real world, money comes first. Money sends traffic from Russia out to Europe and then back home again, because that is cheaper than using a link inside the country. Politics keeps two quarreling providers from exchanging traffic directly; it is easier for them to negotiate with a third party.

Another problem with the protocol is the lack of built-in mechanisms for validating routing data. This is the root of vulnerabilities such as BGP hijacking, route leaks, and the use of reserved AS numbers. Not all anomalies are malicious: often engineers simply do not fully understand how the protocol works. No one issues a “driver's license” for BGP and there are no fines, yet there is plenty of room for destruction.

A typical example of a route leak: a provider uses a list of customer prefixes as its only filter for outbound announcements. As a result, customer prefixes are always announced in all available directions, regardless of where the announcements were learned. As long as the customers announce the prefixes to the provider directly, the problem is hard to detect. Then at some point the provider's network degrades, and customers try to move their announcements away and tear down their BGP sessions with the troubled provider. But the provider keeps announcing the customer prefixes in all directions, creating route leaks and pulling a significant share of customer traffic into its troubled network. Man-in-the-middle attacks can, of course, be organized the same way, and some make use of this.
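The scenario above can be modelled in a few lines. This is a toy sketch rather than a router configuration: the `Route` type, the preference ordering and both export policies are hypothetical, and the prefix is a documentation address.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str
    learned_from: str  # "customer", "peer" or "upstream"


# The provider's list of customer prefixes (documentation prefix).
CUSTOMER_PREFIXES = {"192.0.2.0/24"}

# Assumed preference: customer routes first, then peers, then upstreams.
PREFERENCE = {"customer": 0, "peer": 1, "upstream": 2}


def best_route(routes):
    """Pick the most preferred route among those currently available."""
    return min(routes, key=lambda r: PREFERENCE[r.learned_from])


def export_by_prefix_only(route: Route) -> bool:
    """Flawed policy: the prefix list is the only outbound filter."""
    return route.prefix in CUSTOMER_PREFIXES


def export_by_prefix_and_source(route: Route) -> bool:
    """Stricter policy: the route must also have been learned from a customer."""
    return route.prefix in CUSTOMER_PREFIXES and route.learned_from == "customer"


direct = Route("192.0.2.0/24", learned_from="customer")
via_upstream = Route("192.0.2.0/24", learned_from="upstream")

# While the customer session is up, both policies behave identically.
before = best_route([direct, via_upstream])
print(export_by_prefix_only(before), export_by_prefix_and_source(before))

# The customer tears down its session; only the upstream copy remains.
after = best_route([via_upstream])
print(export_by_prefix_only(after), export_by_prefix_and_source(after))
```

While the direct session exists, the two policies are indistinguishable, which is why the problem is hard to detect. Once the customer leaves, the prefix-only policy re-announces a route learned from an upstream, which is exactly the leak described above.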

To deal with leaks in anycast networks, we developed a number of amendments and submitted them to the Internet Engineering Task Force (IETF). Initially, we wanted to understand when our prefixes were caught up in such anomalies, and through whose fault. Since most leaks were caused by misconfiguration, we realized that the only way to solve the problem was to eliminate the conditions under which engineers' mistakes can affect other telecom operators.

The IETF develops voluntary Internet standards and helps disseminate them. The IETF is not a legal entity but a community. This way of organizing has many advantages: the IETF does not depend on the legal issues and requirements of any country, and it cannot be sued, hacked or attacked. But the IETF does not pay salaries; all participation is voluntary, so this work rarely gets a priority higher than “non-profit”. As a result, new standards are developed slowly.

Anyone can discuss or propose draft standards: the IETF has no membership requirements. The main work happens in working groups. Once there is agreement on the general topic, discussion and refinement of the draft begin together with the authors of the proposal. The result goes to the area director, whose job is to double-check the document. The document is then sent to IANA, since that organization manages protocol parameter assignments.

If our draft of the new BGP extension makes it through all the circles of hell, the stream of accidental route leaks will dry up. Malicious leaks will not go away, and there is only one way to deal with them: constant monitoring.

The year 2017

We expect vulnerabilities in enterprises to be discovered ever faster. According to statistics Wallarm collected from the honeypots it deployed, in 2016 an average of 3 hours passed between the publication of an exploit and its mass exploitation. In 2013 this interval was a week. Attackers are becoming better prepared and more professional. The acceleration will continue; we expect the interval to shrink to 2 hours in the near future. Here again, only proactive monitoring can prevent this threat and insure against disastrous consequences.

Hacking and network scanning have already reached an unprecedented scale. This year, more and more cybercriminals will acquire pre-scanned ranges of IP addresses, segmented by the technologies and products in use, for example “all WordPress servers”. The number of attacks on new technology stacks will grow: microcontainers, private and public clouds (AWS, Azure, OpenStack).

In the next year or two, we expect to see a “nuclear” type of attack on providers and other infrastructure, in which connected autonomous systems or entire regions suffer. The last few years of the battle between sword and shield have produced more advanced mitigation methods. But the industry often forgot about legacy systems, and technical debt has made attacks unprecedentedly simple. From now on, only competently built, geo-distributed cloud systems will be able to withstand record-breaking attacks.

The data above are just excerpts from our network security status report, which describes these and other threats in detail.