Will formal verification of microkernel code allow creating highly reliable OS?

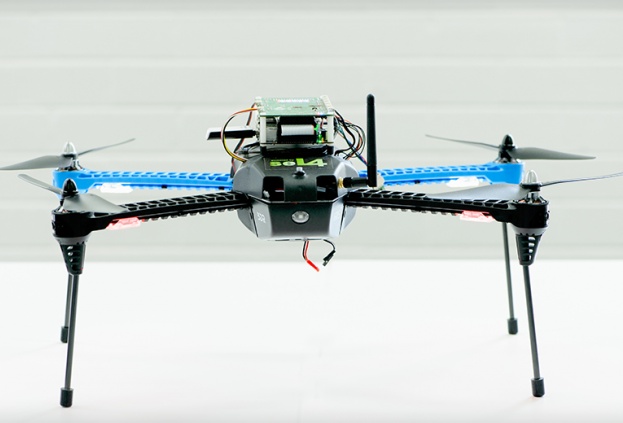

In 2015, the American company Rockwell Collins, together with Boeing and 3D-Robotics, tested the tamper-resistant Iris quadcopter and the Little Bird unmanned helicopter with an “highly reliable” operating system.

The development of burglar-proof drones is commissioned by the US Department of Defense Advanced Defense Projects Agency (DARPA), which is interested in protecting promising unmanned and optionally manned aircraft from possible vulnerabilities.

There are at least three methods of hacking unmanned aerial vehicles: the first is to gain access to control by hacking a communication channel or spoofing authorization data, the second is using software vulnerabilities, the third is using the original software interfaces and data channels to download malicious code.

The operating system developed by Rockwell Collins based on the seL4 microkernel is resistant to all three types of hacking. The seL4-based operating system was initially focused primarily on security. In English, this is called the beautiful phrase “hackproof” (burglary resistant).

Its feature is a kernel that is resistant to code spoofing, which uses mathematical algorithms and checksums to check running services, and also provides separate execution of processes.

The system allows you to run a virtual machine in which the guest program does not gain access to the main processes or hardware. When the program starts, the checksums are reported to the kernel, on the basis of which errors are fixed or unauthorized processes are closed.

The tests were successful: cybersecurity experts were not able to run malicious code on unmanned vehicles with a secure operating system, as well as seize control of the drone or cause a system failure.

The seL4 microkernel is used not only in aviation, but also in medicine, the financial sector, energy and other areas where a guarantee of the absence of failures is needed.

An important feature of the seL4 architecture is the removal of parts for managing kernel resources into the user space and using the same access control methods for these resources as for user resources. The microkernel does not provide ready-made high-level abstractions for managing files, processes, network connections, and so on.

Instead, it provides only minimal mechanisms for controlling access to the physical address space, interrupts, and processor resources.

High-level abstractions and drivers for interacting with equipment are implemented separately - on top of the microkernel in the form of tasks performed at the user level. The access of such tasks to the resources available in the microkernel is organized through the definition of rules.

For programmers, there is a component-oriented application development platform CAmkES , which allows you to simulate and create systems based on seL4 in the form of a collection of interacting components.

The L4.verified project , a formally verified core on the L4 architecture, is genetically linked to seL4 .

L4 is a second-generation microkernel developed by Jochen Lidtke in 1993.The architecture of the L4 microkernel has been successful. Many implementations of the ABI and L4 microkernel APIs have been created. All implementations began to be called the "L4 micronucleus family." So there was seL4. And the Lidtke implementation was unofficially called "L4 / x86."

The main idea of L4.verified, like seL4, is to mathematically prove the correctness of the kernel implementation. The fact is that microkernels, unlike monolithic-modular monsters like GNU / Linux, are very tiny in size. And if so, then they can be formally verified. Each line of code is associated with a mathematical statement that needs to be proved.

The L4.verified microkernel proves the conformity of the model implementation, the absence of perpetual cycles, and some other things. The cost of this study was about $ 6 million, and the project lasted 7 years. The volume of work reached 25 person-years.

In addition to its main application (safety, as with seL4), L4 is used in other areas. So, Qualcomm launched the implementation of the L4 microkernel, developed by NICTA , on a chipset called the “Mobile Station Modem” ( MSM ). This was announced in November 2005 by representatives of NICTA, and at the end of 2006, MSM chipsets went on sale. So the implementation of the L4 microkernel appeared in cell phones.

The issue of creating highly reliable software became especially relevant when the vast majority of applications began to work on the network and operate with a lot of data. And this data is often confidential.

There is not much material on the network about the role of formal verification in creating highly reliable operating systems. Even fewer in-depth discussions. Therefore, below are the main thoughts and arguments of one of the conversations about the article on this topic:

- Distribution of verification tools and their competent implementation will allow you to get rid of a whole class of errors in any software. For example, an error such as “buffer overflow” can be prevented by simply checking the necessary parameters.

There are still bugs there, one of the commentators concludes, referring to this material .

- It is claimed that seL4 code has some degree of reliability. If the creation of an OS completely protected from hacking is utopia, then why do cool teams like DARPA continue to do this?

- What happens if hackers slip verified code there? Or is it impossible? Why do all OSs have bugs that hackers can definitely use?

- Why does code verification have to be connected with hacking protection? What is impossible? Code verification and protection against hacking are two different tasks that only sometimes intersect.

Trojans, cyber-extortion, phishing, clickjacking are types of attacks that work regardless of the presence of errors in the application program code or the operating system as a whole. And if users choose weak passwords? Is this also the fault of software developers?

- The experiment is at odds with reality, since no one has ever opened access for them to the researchers. In such systems, you just need to carefully monitor to prevent anyone except the administrator from inserting the code.

- Wow! Well-written and tested applications are very hard to crack. Who would have thought ... Actually, the program code already consists of mathematically verified constructions.

- Developing verified code is a luxury few can afford. And its accompaniment and constant introduction of changes is even greater luxury.

- From a management point of view, programmers often behave bipolar. When you ask them to break up a large development task into sub-tasks, they easily agree. But when you offer them to think in advance about possible mistakes and systematically prevent them, the reaction is completely different: “it’s impractical”, “you don’t foresee everything,” “how about that?”, “How is it?” ... Not everyone wants to think about quality of code in advance.

- But what if the verification tools themselves contain errors? Who will verify the verifiers?

- In pure mathematics there are no “bugs”. If there is a rule that it is impossible to divide by zero, then the cases when the denominator is zero are simply not considered. In real life, this technique does not work. If we are talking about software development, then we have to work even with those values that the program “does not like”. The result of dividing by zero is infinity. And you need to do something with it, and not say that such a result cannot be.

PS Of course, you can discuss endlessly. But if large organizations such as DARPA take concrete steps to implement these projects, this story should at least be followed up and at the very least involved.

The source code for seL4 is still available on GitHub .