Complex data visualization algorithm

- Tutorial

Over the three years of its existence, the Data Laboratory has released about thirty interactive visualizations, in the format of custom, own projects and free tips. In the laboratory, we visualize financial and scientific data, urban transport network data, race results, marketing campaigns, and much more. In the spring, we received a bronze medal at the prestigious Malofiej 24 award for visualizing the results of the Moscow marathon.

For the past six months, I have been working on a data visualization algorithm that systematizes this experience. My goal is to give a recipe that will allow you to sort out any data and solve data visualization tasks as clearly and consistently as mathematical problems. In mathematics, it does not matter whether to stack apples or rubles, to distribute rabbits in boxes or budgets for advertising campaigns - there are standard operations of addition, subtraction, division, etc. I want to create a universal algorithm that will help visualize any data, while taking into account their meaning and uniqueness.

I want to share the results of my research with readers of Habr.

The purpose of the algorithm: to visualize a specific data set with maximum benefit for the viewer. The initial data collection remains behind the scenes; we always have data at the input. If there is no data, then there is no task to visualize the data.

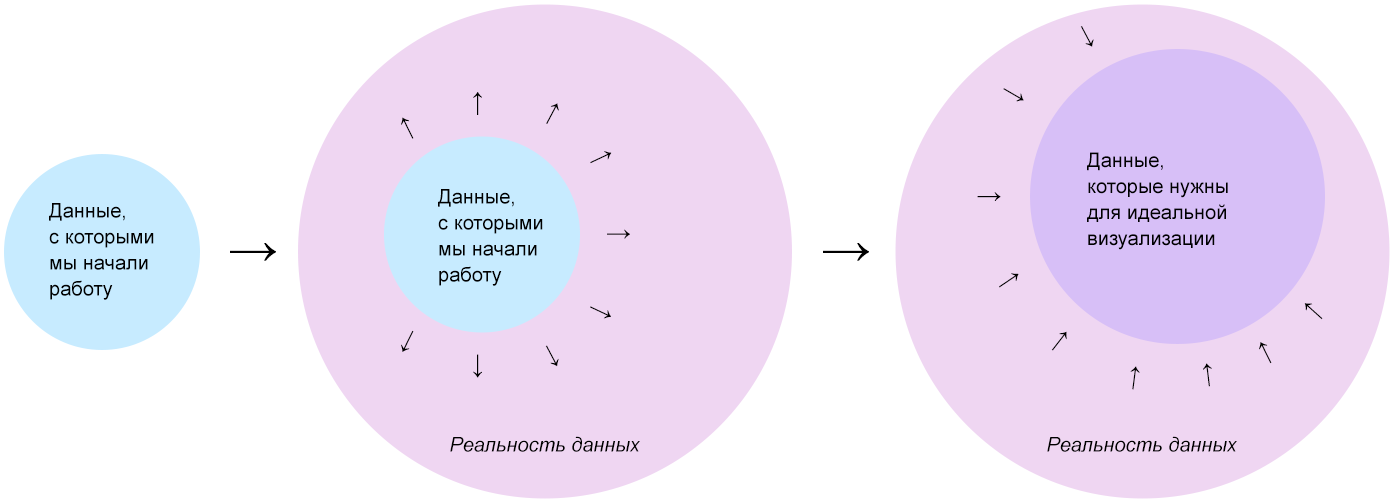

Typically, data is stored in tables and databases that combine multiple tables. All tables look the same, like pie / bar charts based on them. All data is unique, they are endowed with meaning, subordinate to the internal hierarchy, riddled with connections, contain patterns and anomalies. The tables show the slices and layers of the complete holistic picture that is behind the data - I call it the reality of the data.

Data reality is a collection of processes and objects that generate data. My recipe for high-quality visualization: to transfer the reality of data to an interactive web page with minimal losses (unavoidable due to media limitations), build upon visualization from the full picture, and not from a set of slices and layers. Therefore, the first step of the algorithm is to imagine and describe the reality of the data.

Description example:

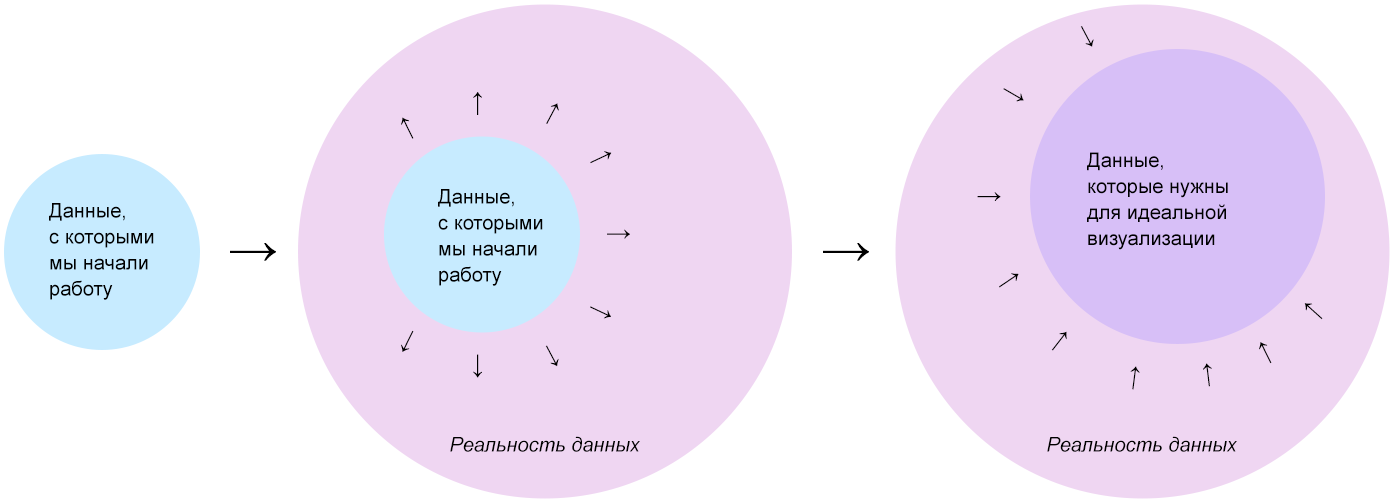

The data we start with is just a starting point. After meeting them, we present the reality that generated them, where there is much more data. In the reality of data, without regard to the initial set, we select data from which we could make the most complete and useful visualization for the viewer.

Part of the data of the ideal set will be unavailable, therefore we “extract” - we search in open sources or rely on those that can be “extracted” and work with them.

My biggest discovery and the central idea of the Δλ algorithm in dividing visualization into a mass of data and a wireframe. The frame is rigid, it consists of axes, guides, areas. The framework organizes the space of a blank screen, it transfers the data structure and does not depend on specific values. A mass of data is a concentrate of information; it consists of elementary particles of data. Due to this, it is plastic and “sticks” to any given frame. A mass of data without a framework is a shapeless pile, a framework without a mass of data is a bare skeleton.

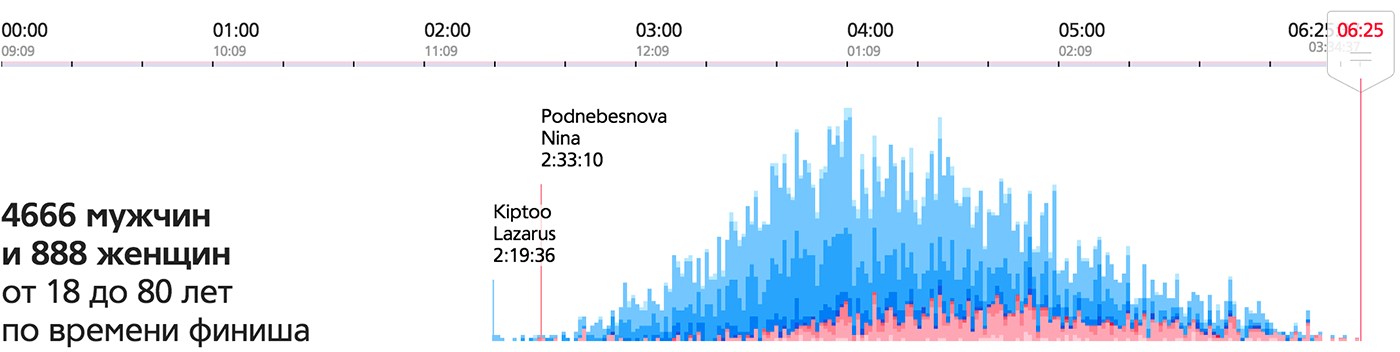

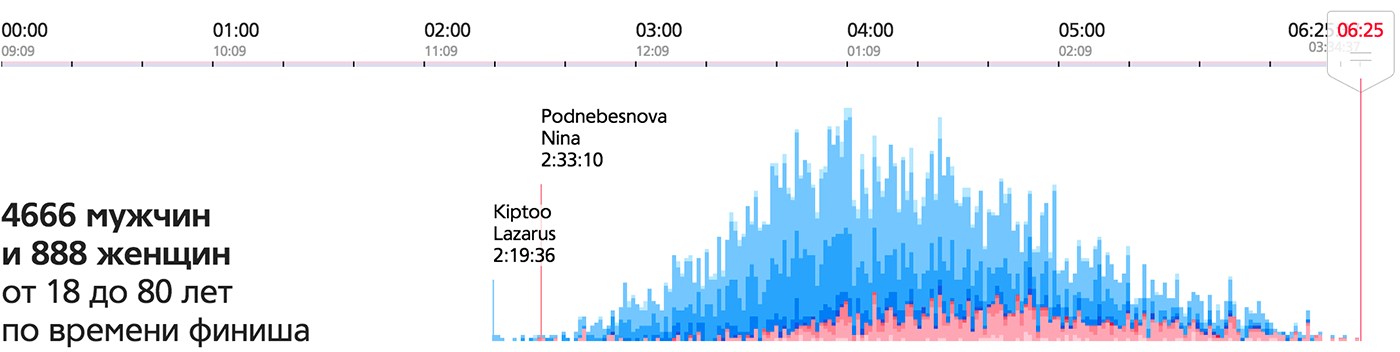

In the example of the Moscow marathon , the elementary particle of data is a runner, the mass is a crowd of runners. The framework of the main visualization is a map with a race route and a temporary slider.

The same mass on the frame formed by the time axis gives a diagram of the finishes:

This is an important feature of visualization, as it serves as a starting point for finding ideas. A data mass consists of data particles that are easy to see and highlight in the reality of the data.

An elementary data particle is an entity large enough to possess the characteristic properties of the data, and at the same time small enough so that all data can be disassembled into particles and reassembled, in the same or another order.

Search for an elementary particle of data on the example of the city budget:

After answering the question of what is an elementary piece of data, think about how to best show it. An elementary particle of data is an atom, and its visual embodiment must be atomic. The main visual atoms: pixel, dot, circle, dash, square, cell, object, rectangle, line, line and mini-graph, as well as cartographic atoms - points, objects, regions and routes. The better the visual conveys the properties of the data particle, the more visual the resulting visualization will be.

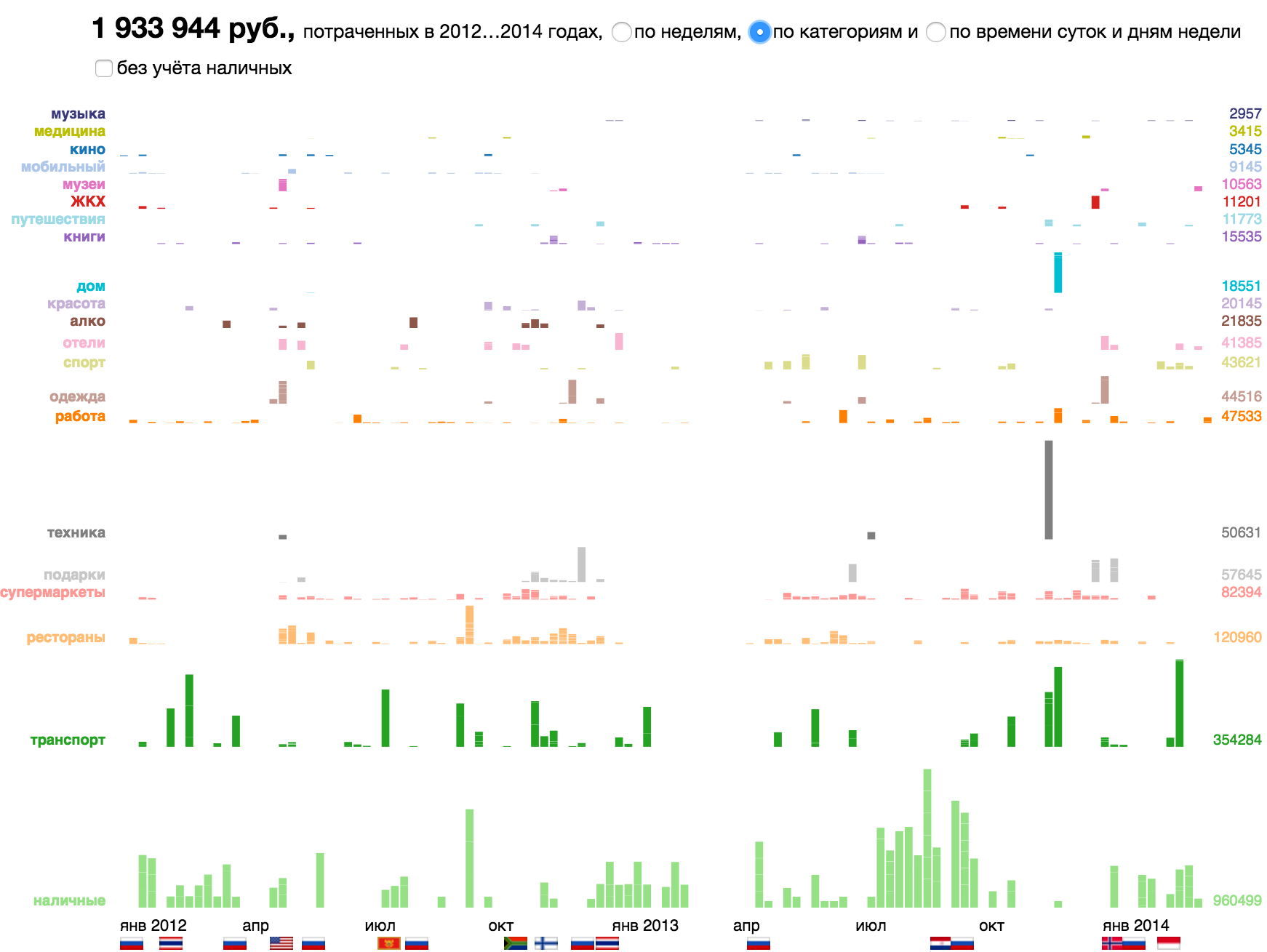

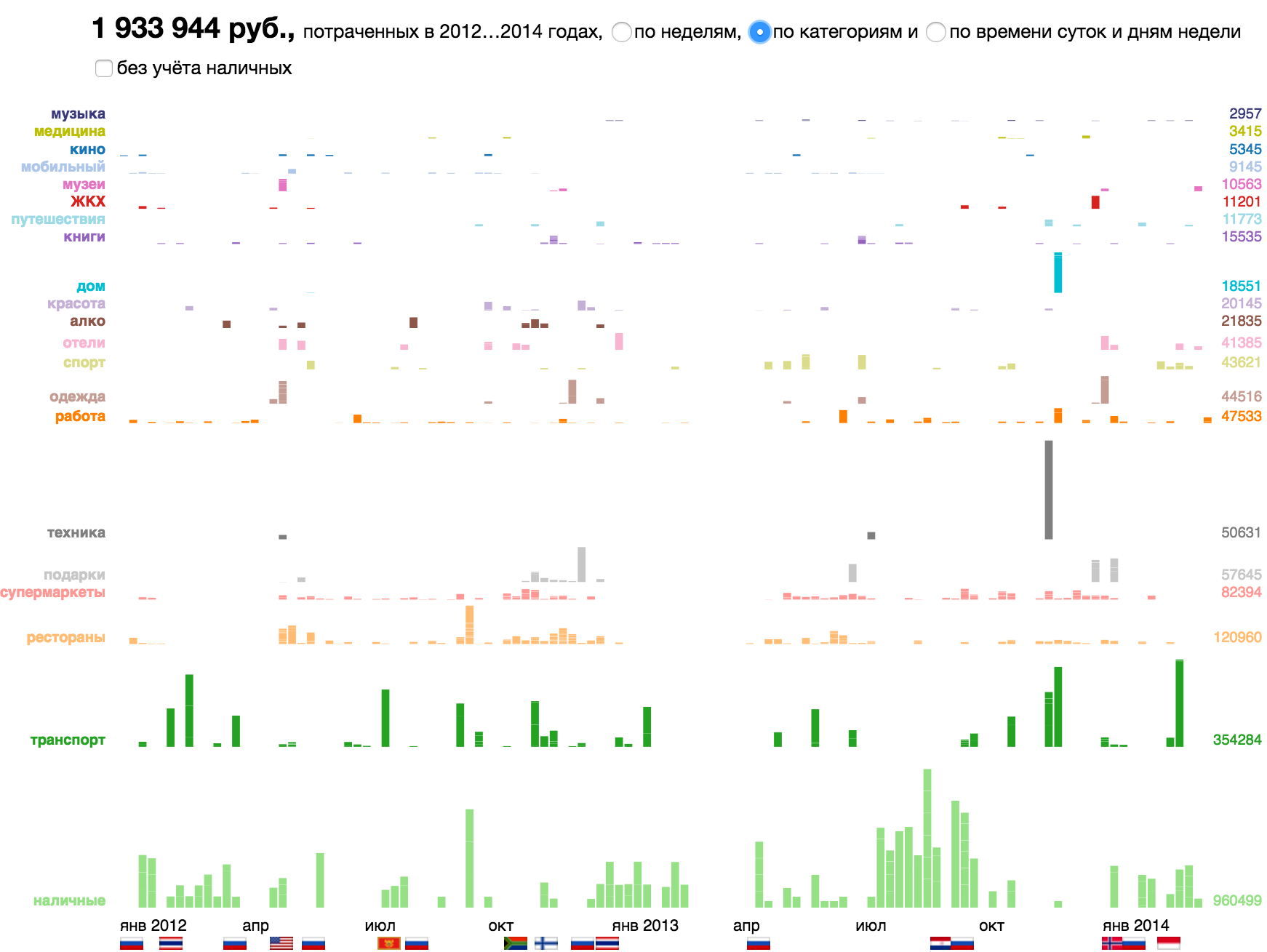

In the example of the city budget, the deduction has two key parameters - the amount (quantitative) and the purpose - qualitative. A rectangular atom with a unit width is well suited for these parameters: the height of the column encodes the amount, and its color represents the purpose of the payment. The result is a picture similar to oursvisualization of the personal budget :

One and the same particle on different wireframes: along the time axis, by categories or in the axes the time of day / day of the week.

Here are other examples of data particles and their corresponding visual atoms.

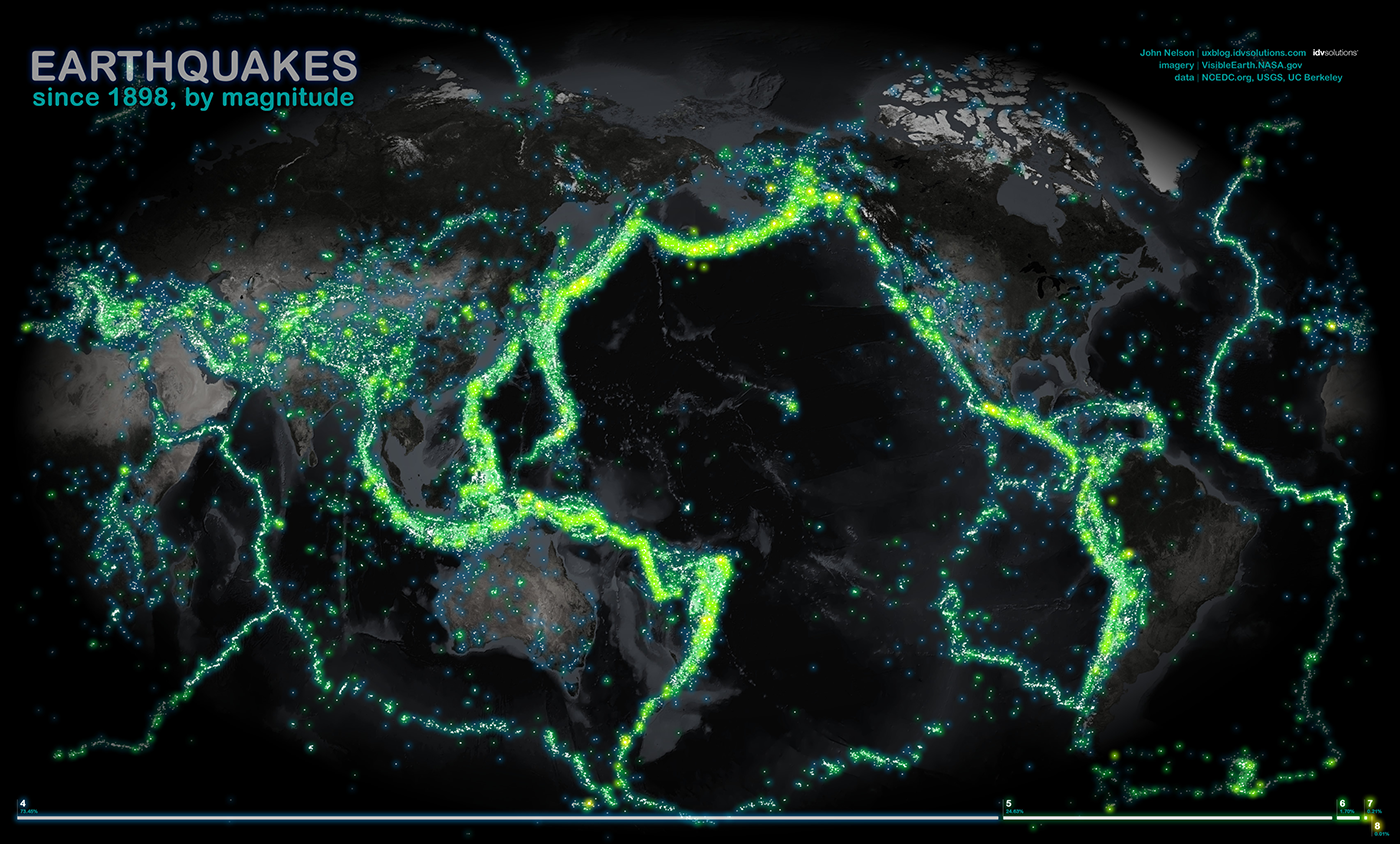

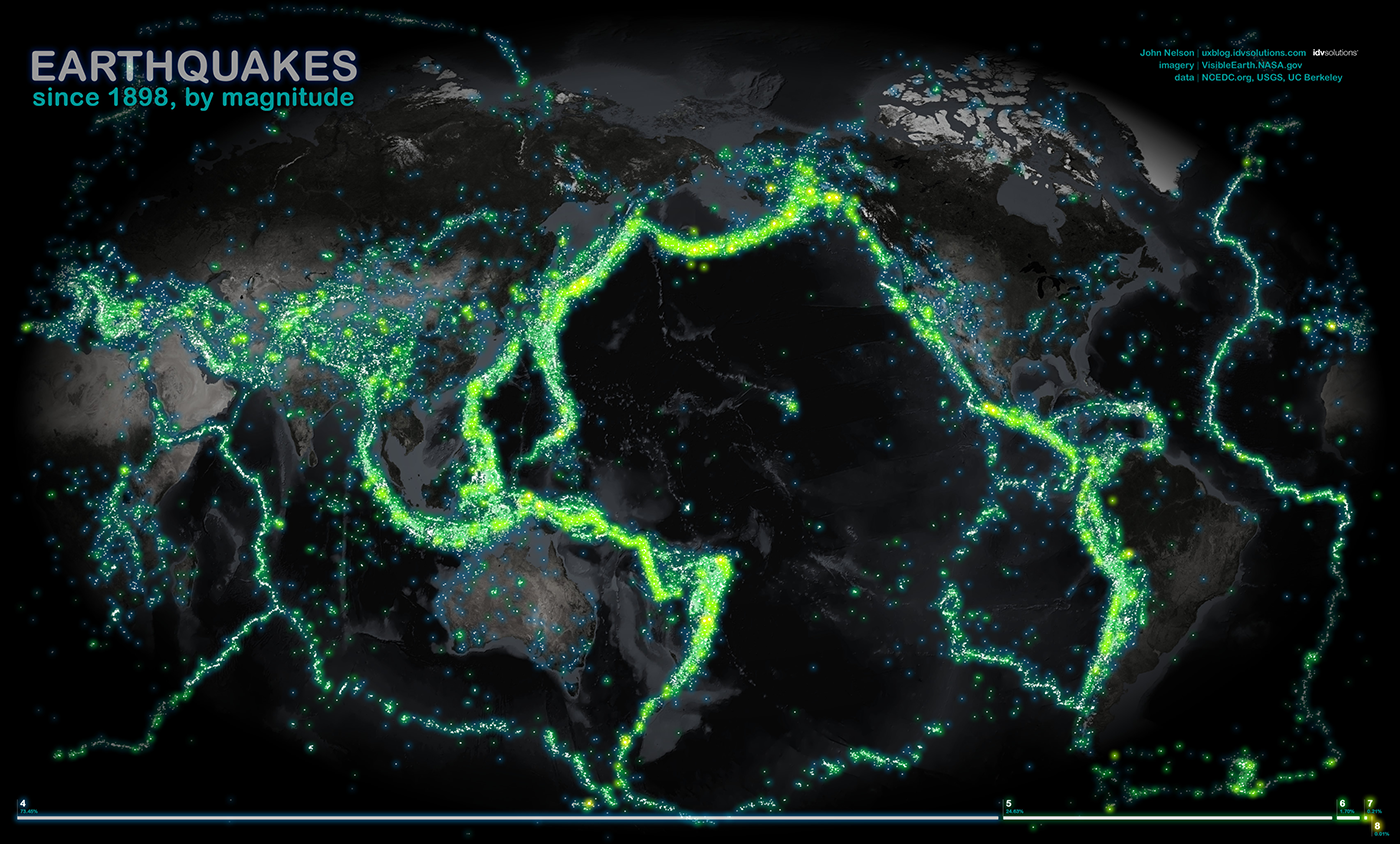

Earthquake in the history of earthquakes , visual atom - map point:

Dollar and its degrees on a logarithmic maniogram , atom - pixel:

Soldier and civilian in loss visualization "Fallen.io" , atom - object - image of a man with a gun and without:

Flag on the visualization devoted to flags , atom - object - flag image:

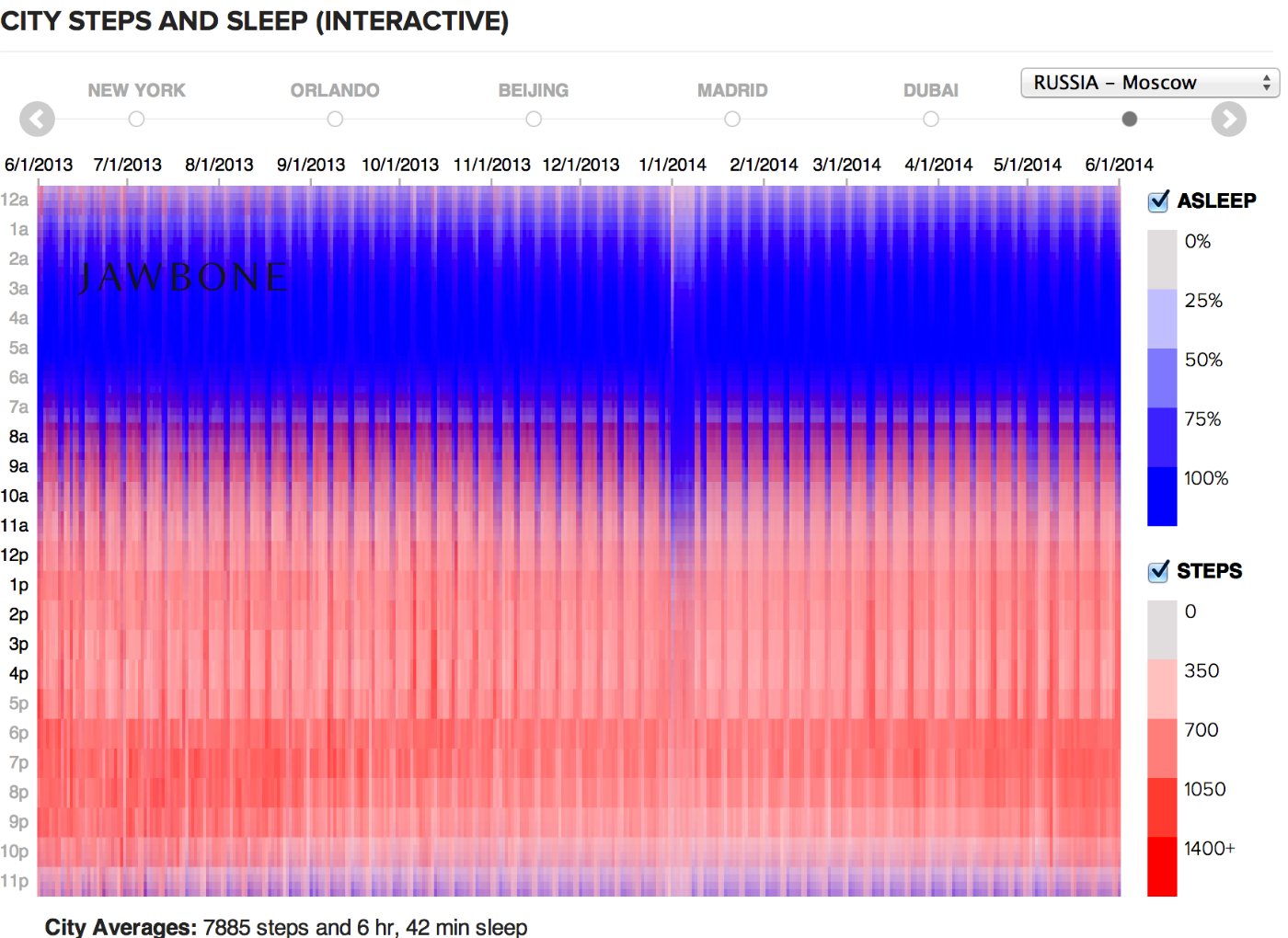

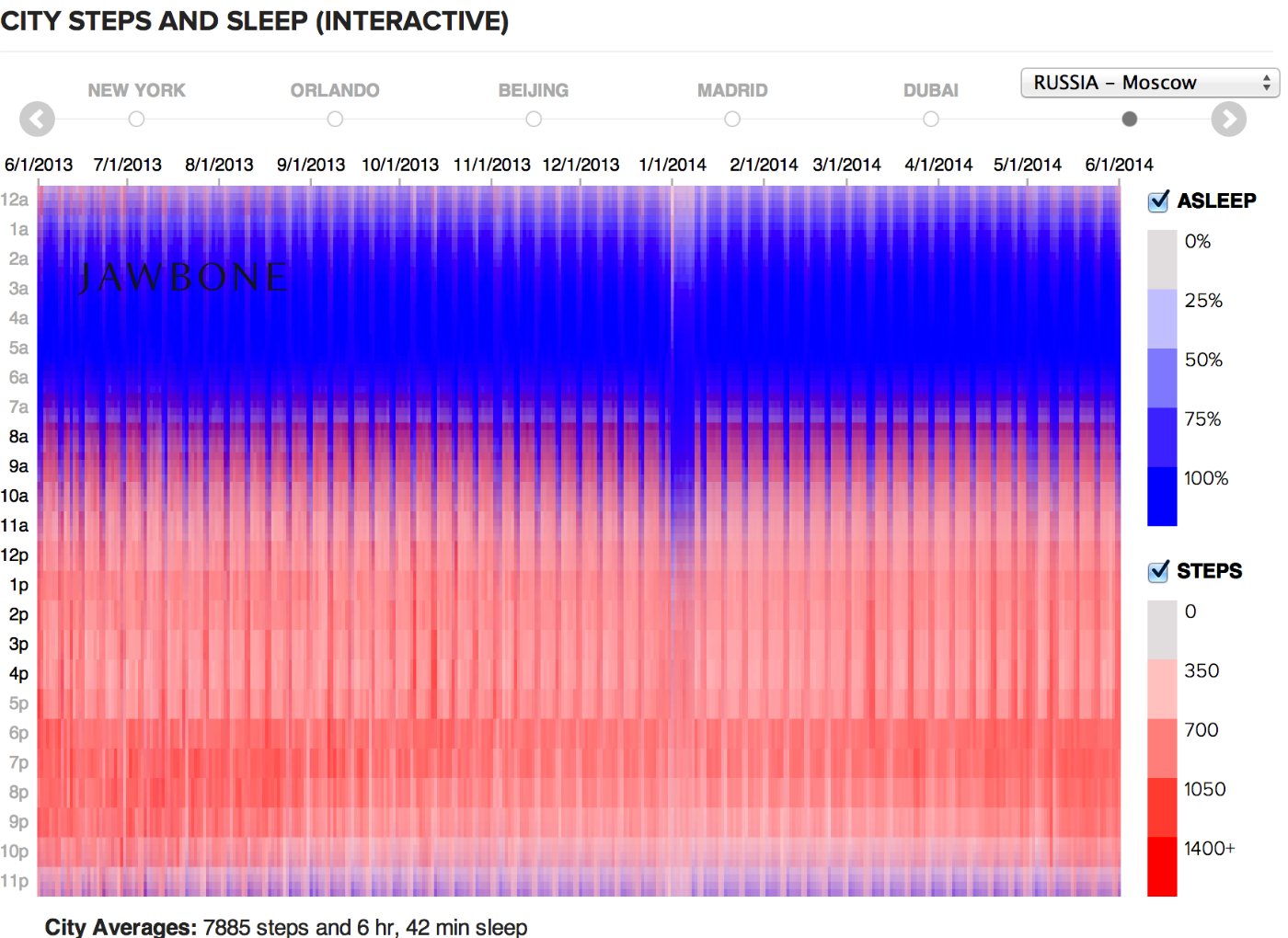

Hour of the day (activity or sleep) on the diagram about the rhythm of cities, atom - a cell with two-color coding:

Attempting to answer a question in the statistics of the traffic simulator , visual atom - a cell with a traffic light gradient:

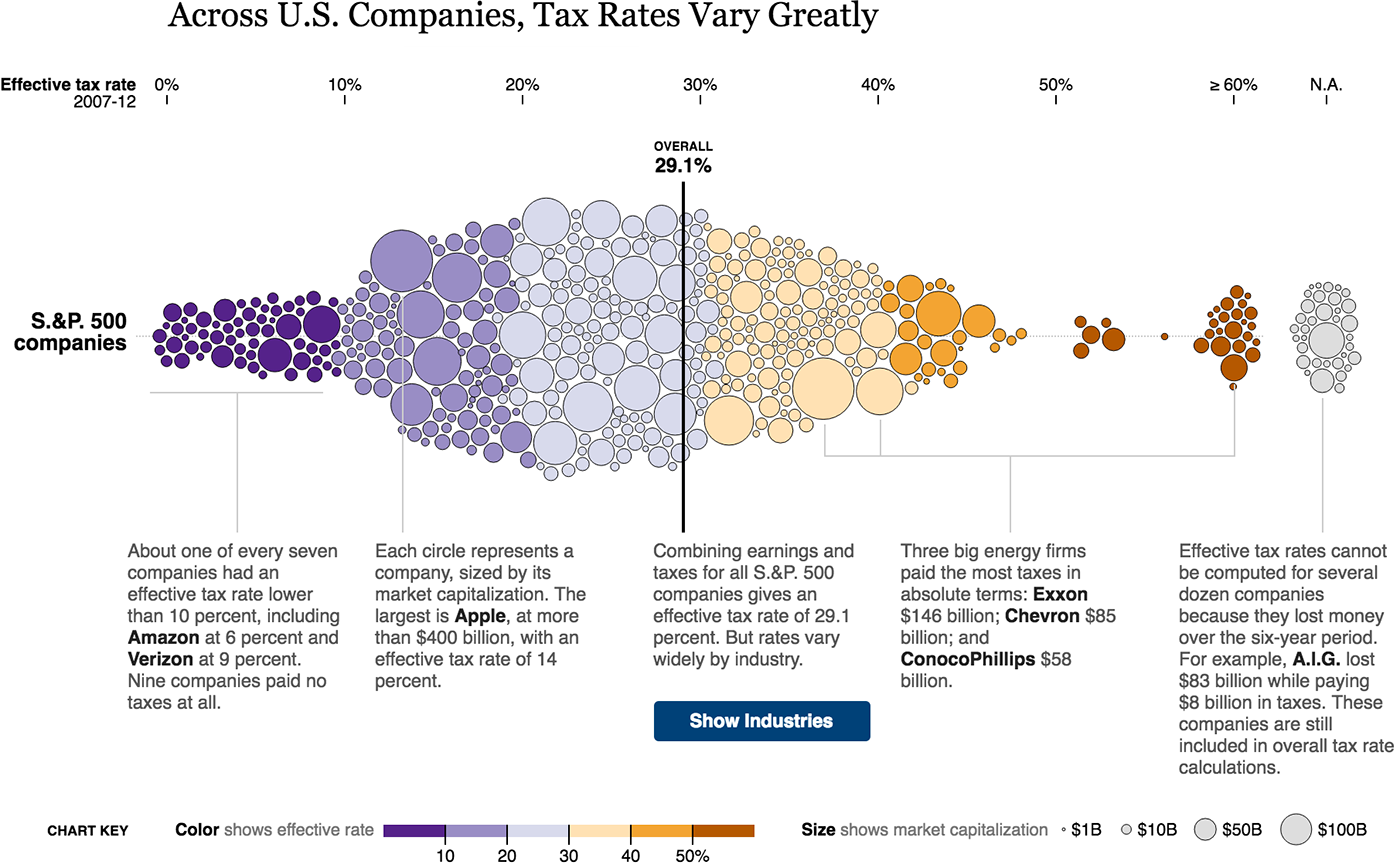

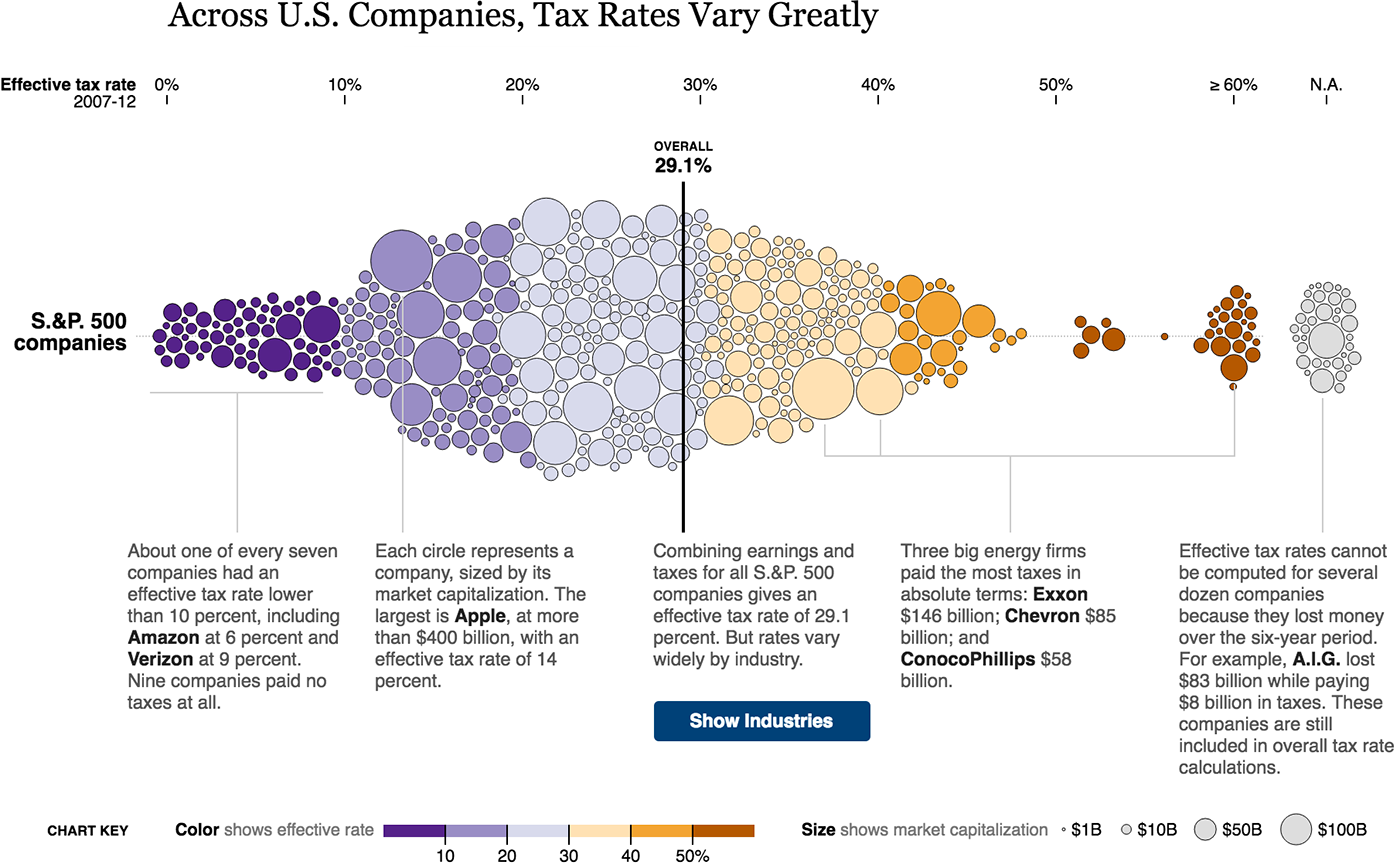

Company on the diagram of the scatter of tax rates , atom - circle:

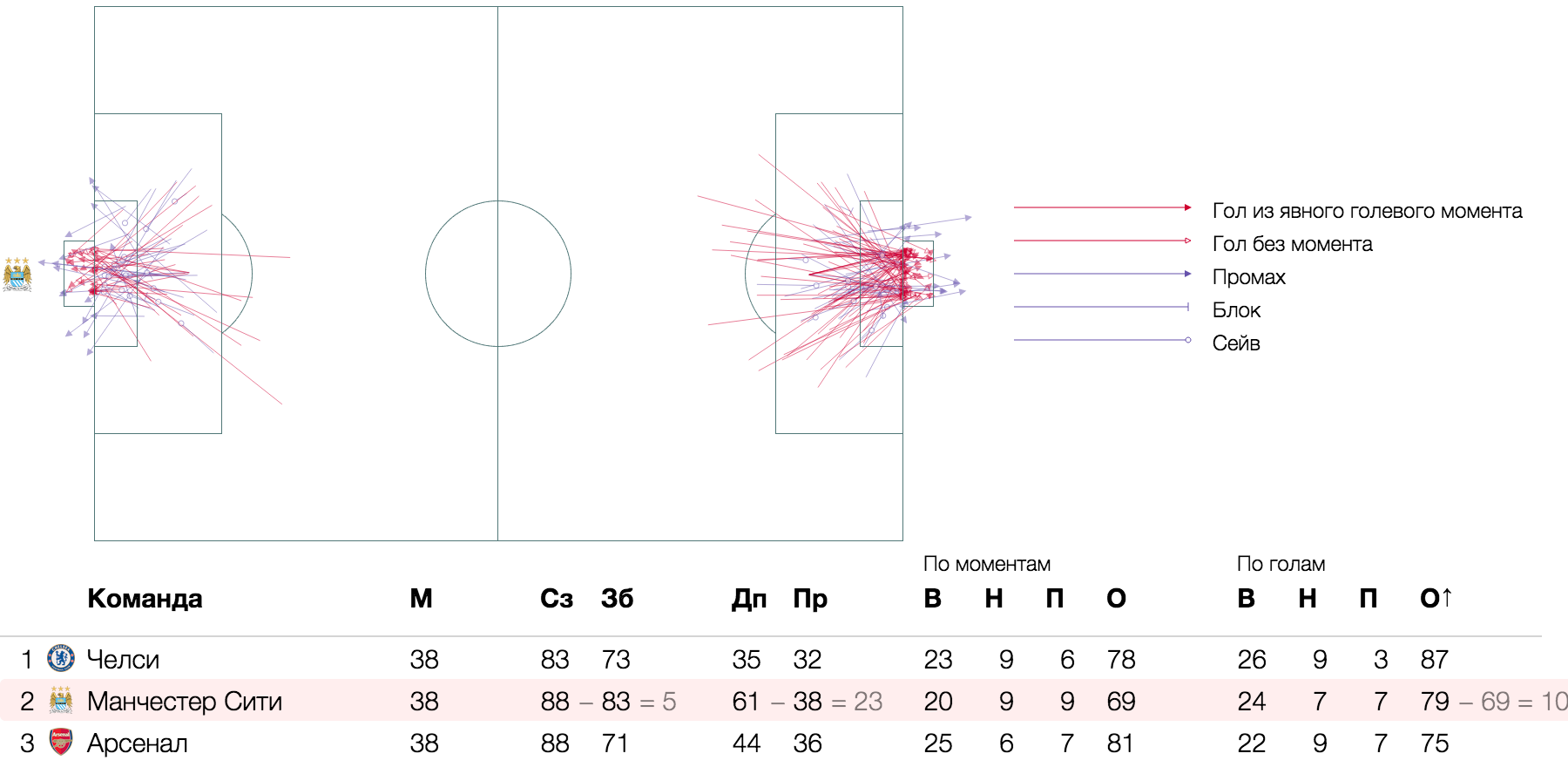

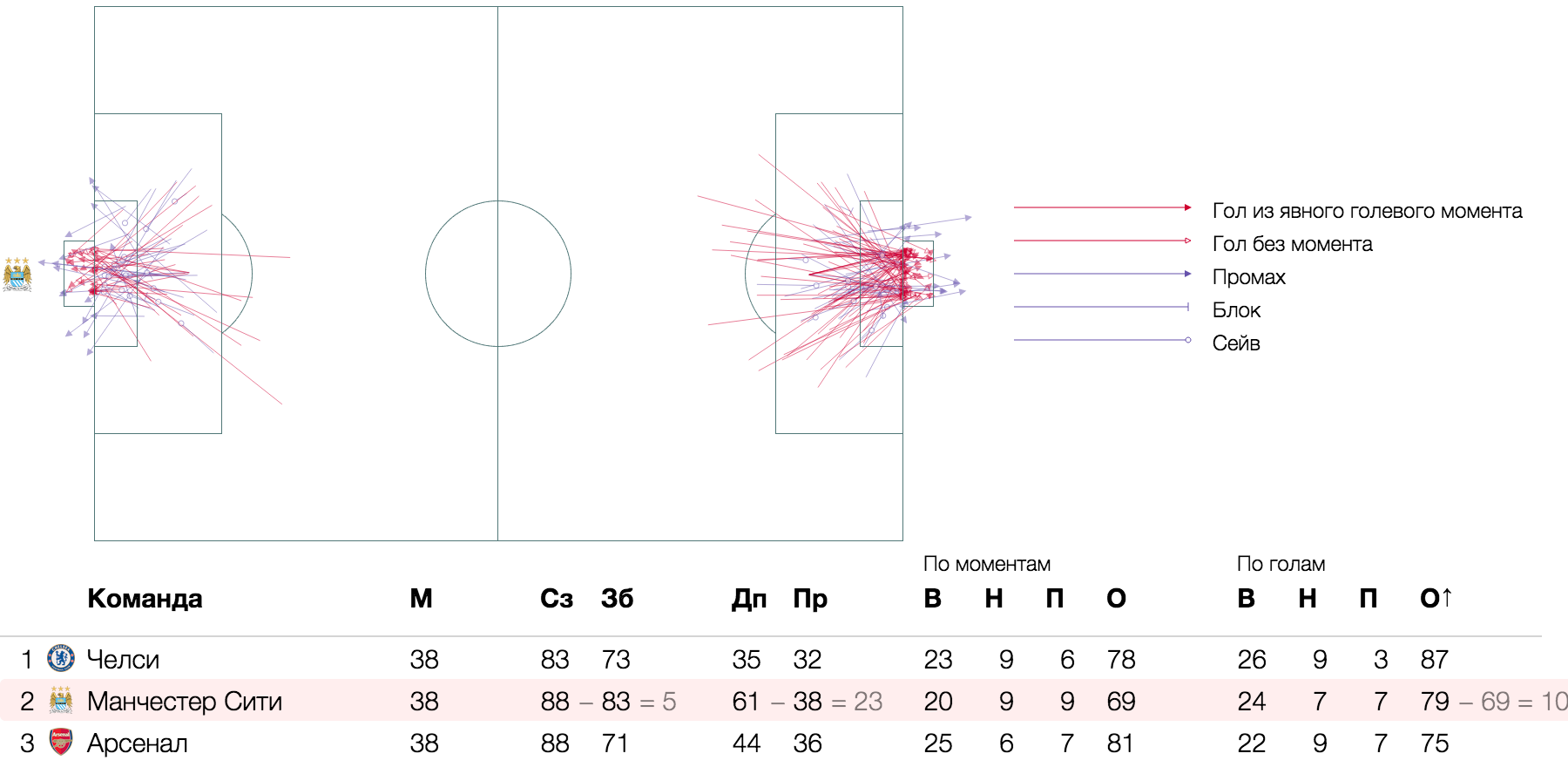

Goal and goal moment in football analytics , atom - segment - trajectory of impact on the football field:

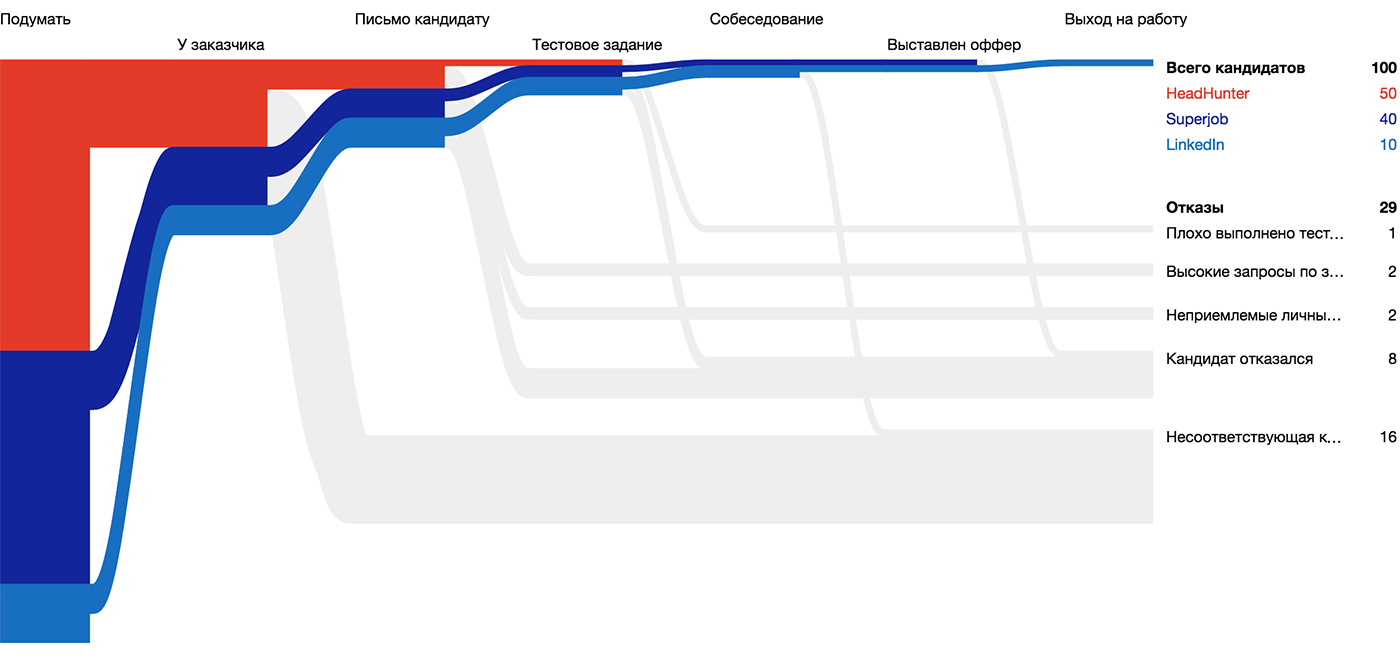

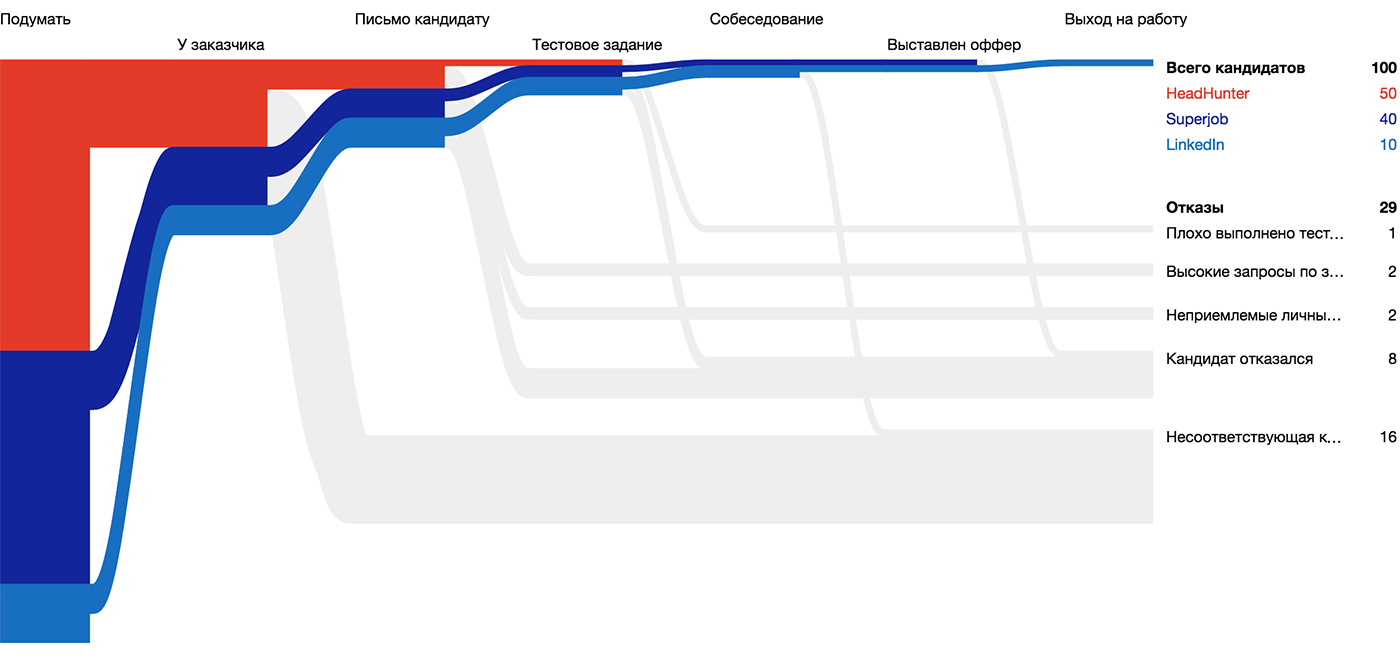

Candidate for visualization of the Huntflow hiring process , atom - line of unit thickness - path of the candidate for the funnel:

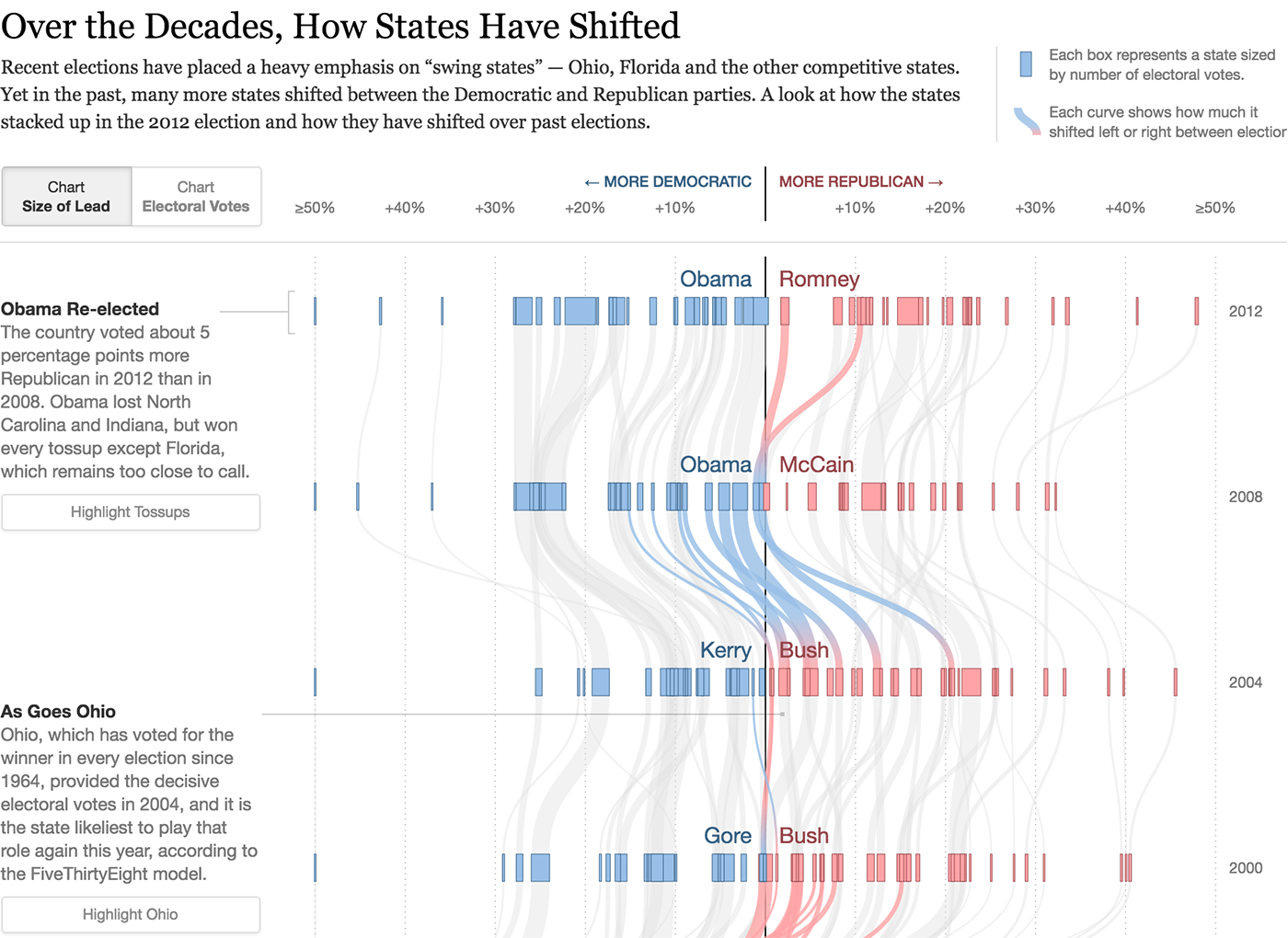

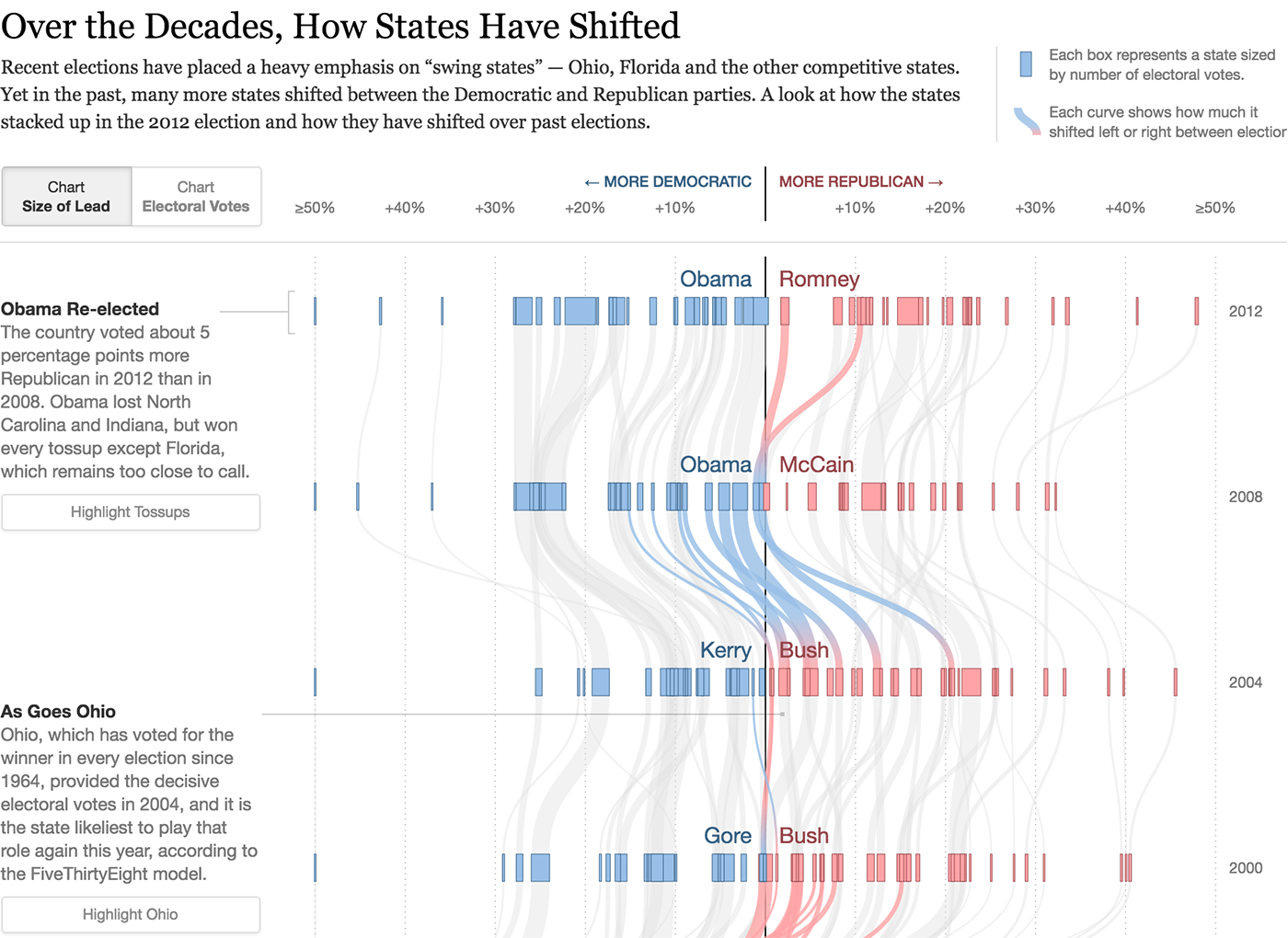

State that changes its political mood , atom - line with thickness: Line

segments between stations on Swiss routes their trains, atom - cartographic line with a thickness:

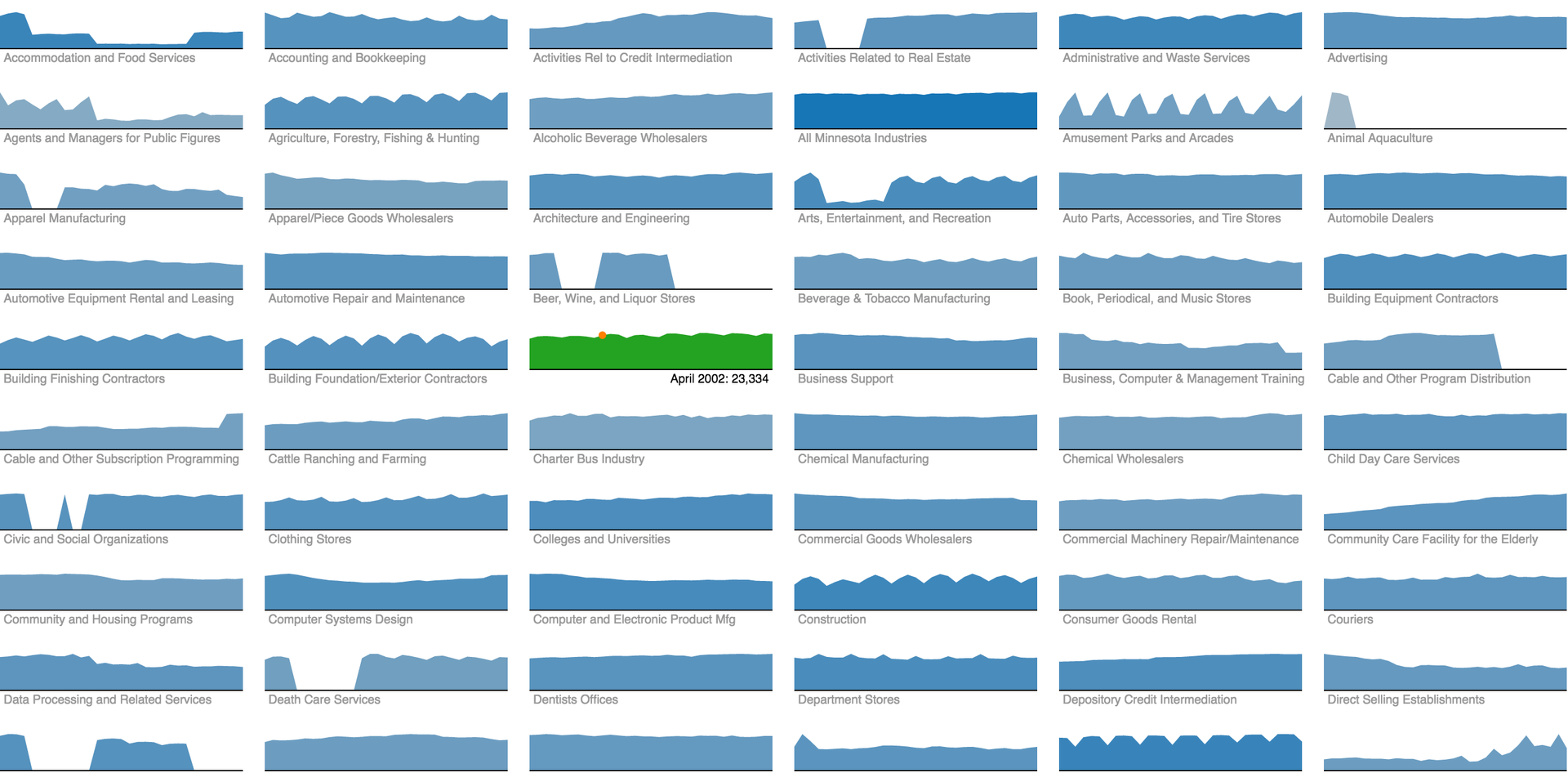

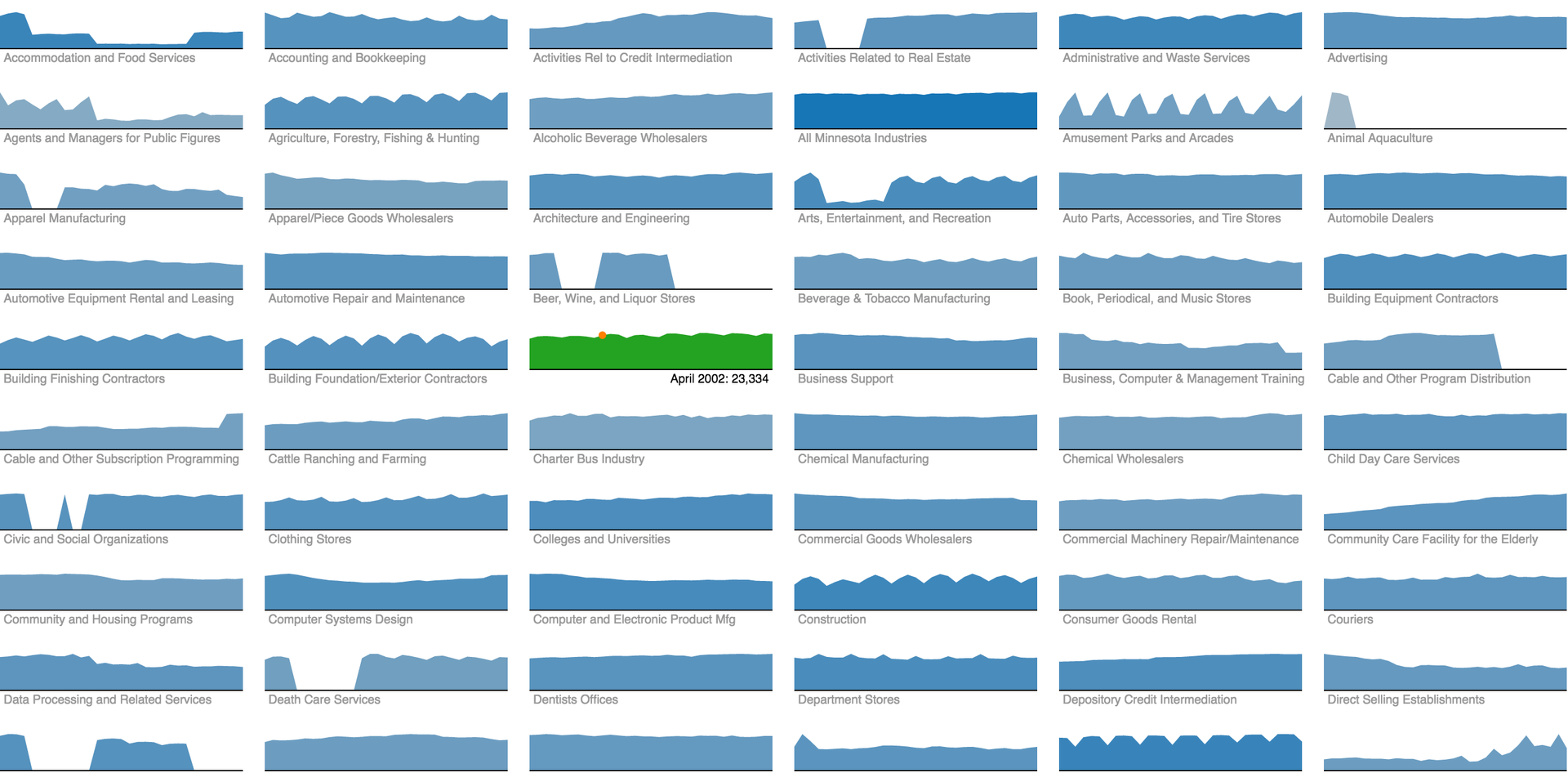

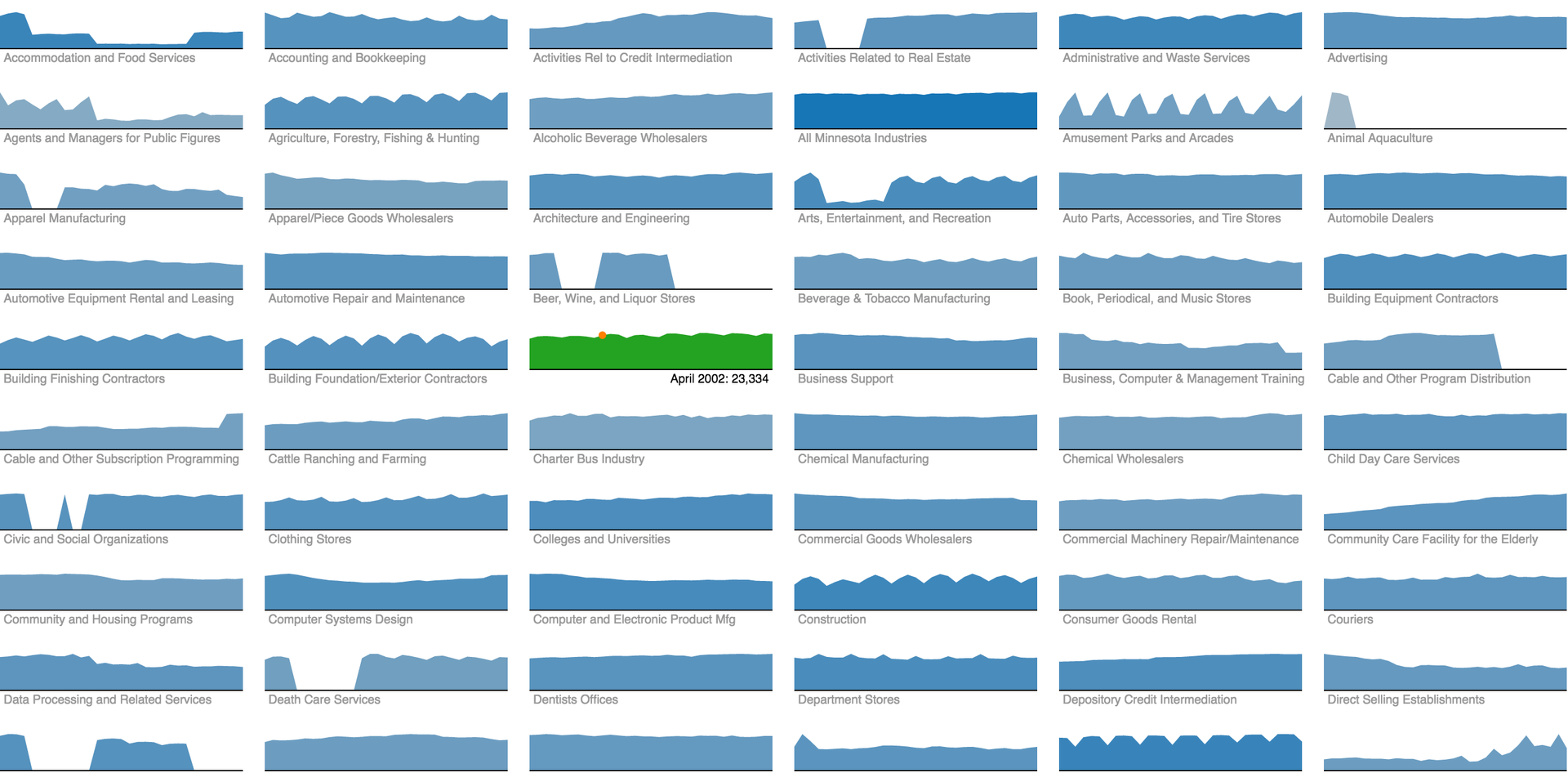

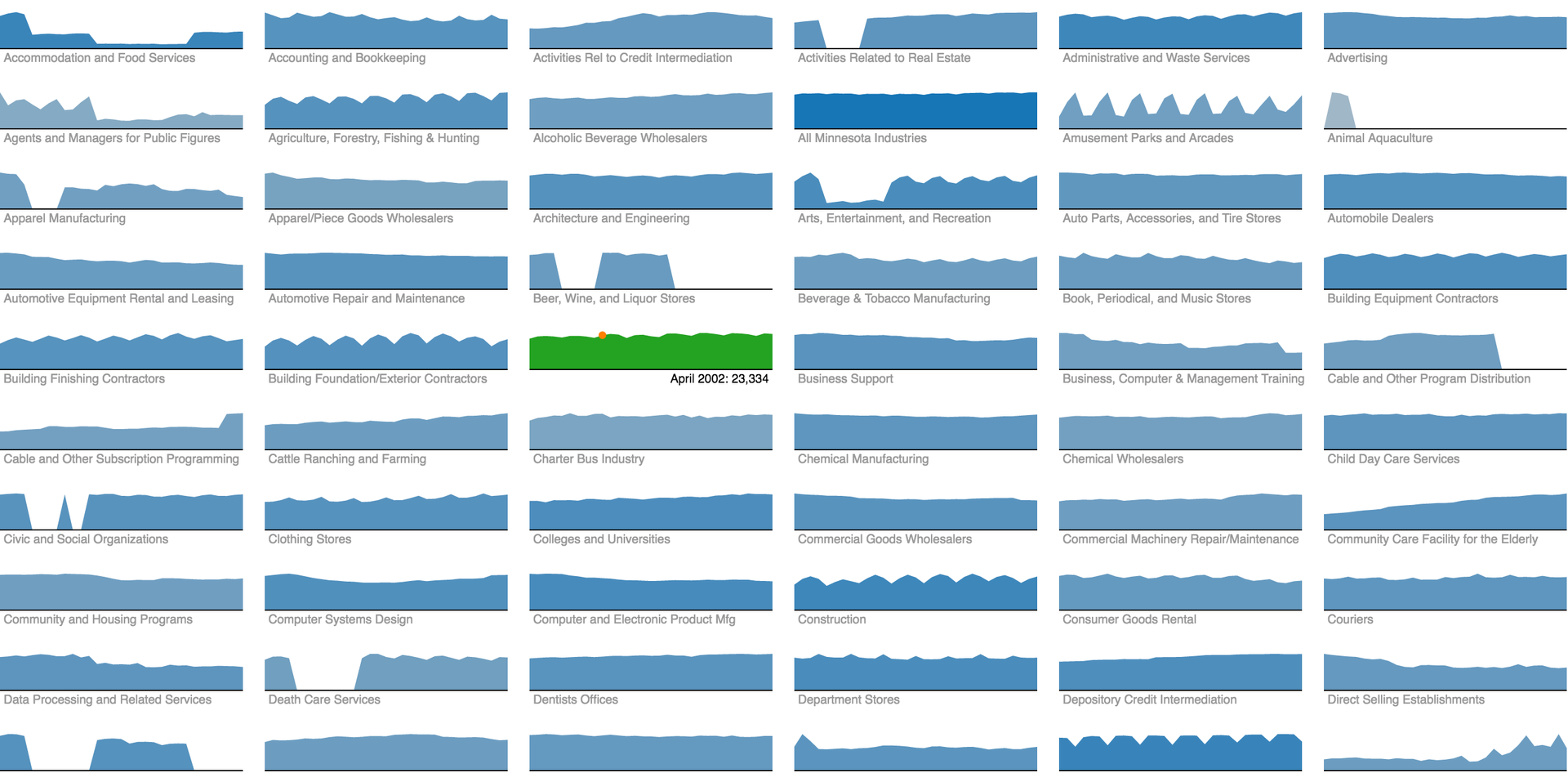

Employment dynamicsin various industries of Minnesota residents, the atom is a mini-graph:

Read more about visual atoms and their properties here .

Interactive visualization lives in two dimensions of the screen plane. It is these two dimensions that give the mass of data "rigidity", systematize visual atoms and serve as a visualization framework. How these two dimensions are used depends on how interesting, informative and useful the visualization will turn out.

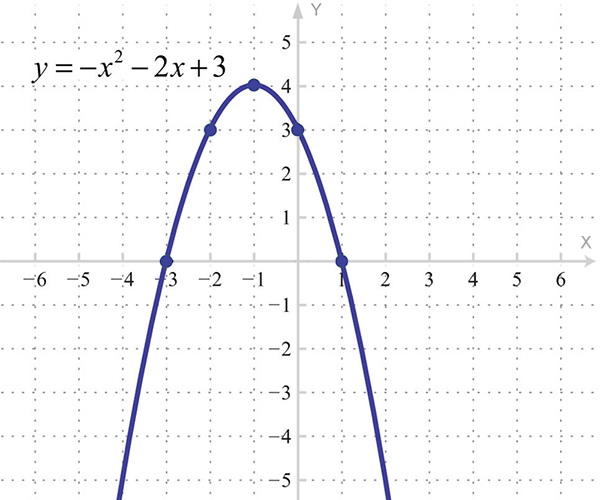

On a good visualization, each dimension corresponds to an axis that expresses a significant data parameter. I divide axes into continuous (including the axis of space and time), interval, layered and degenerate. We learn about

continuous axes in school when we build parabolas:

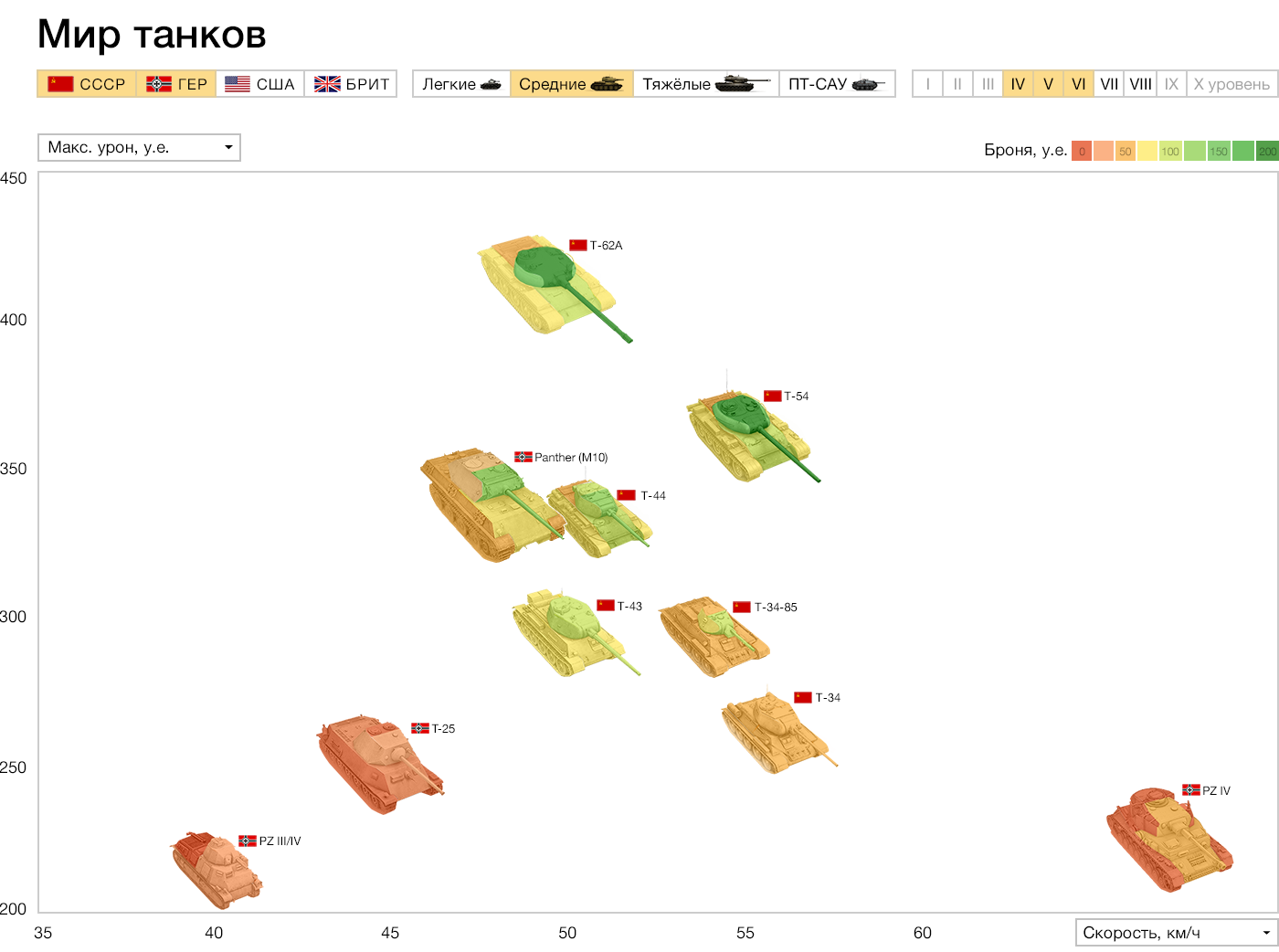

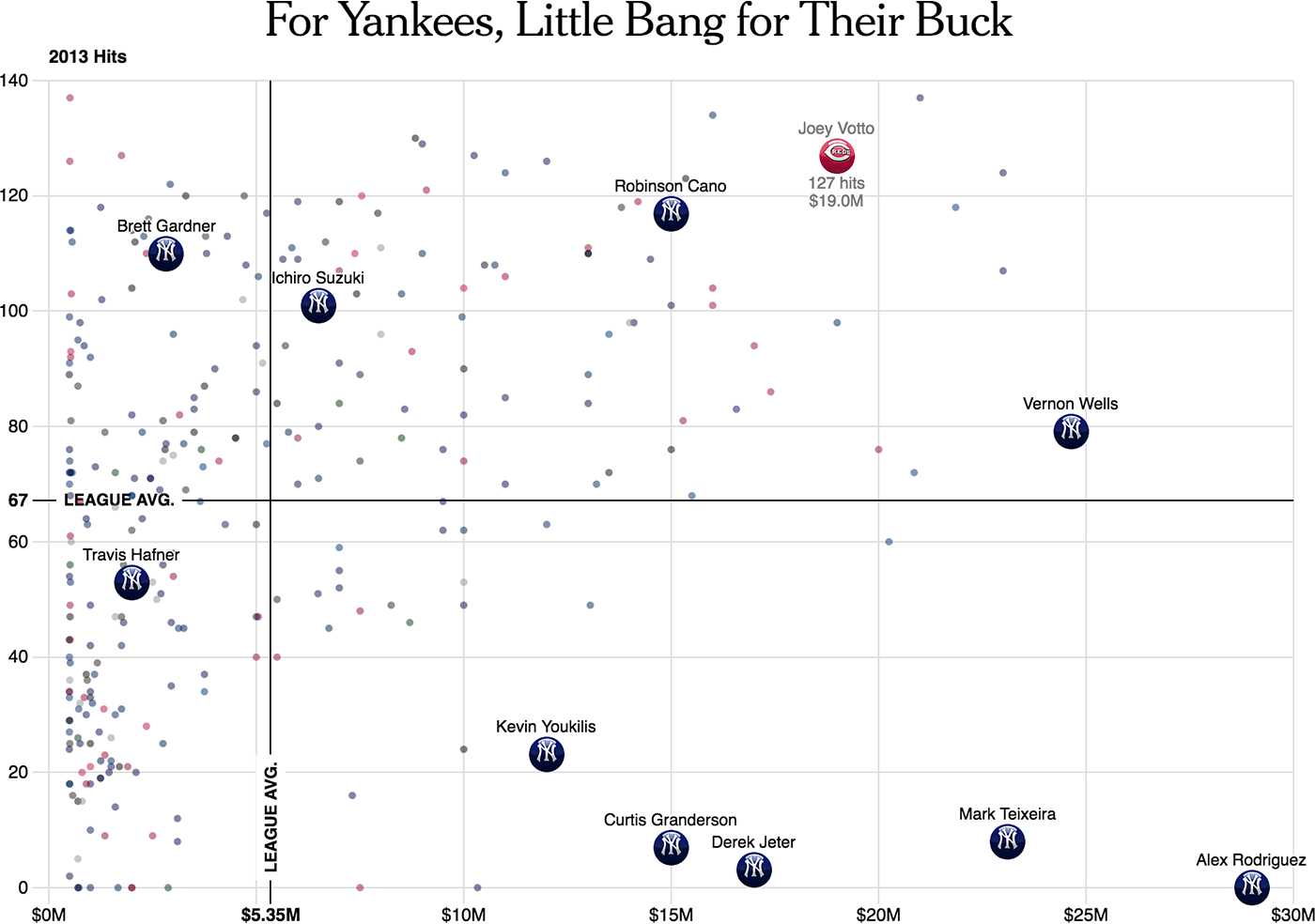

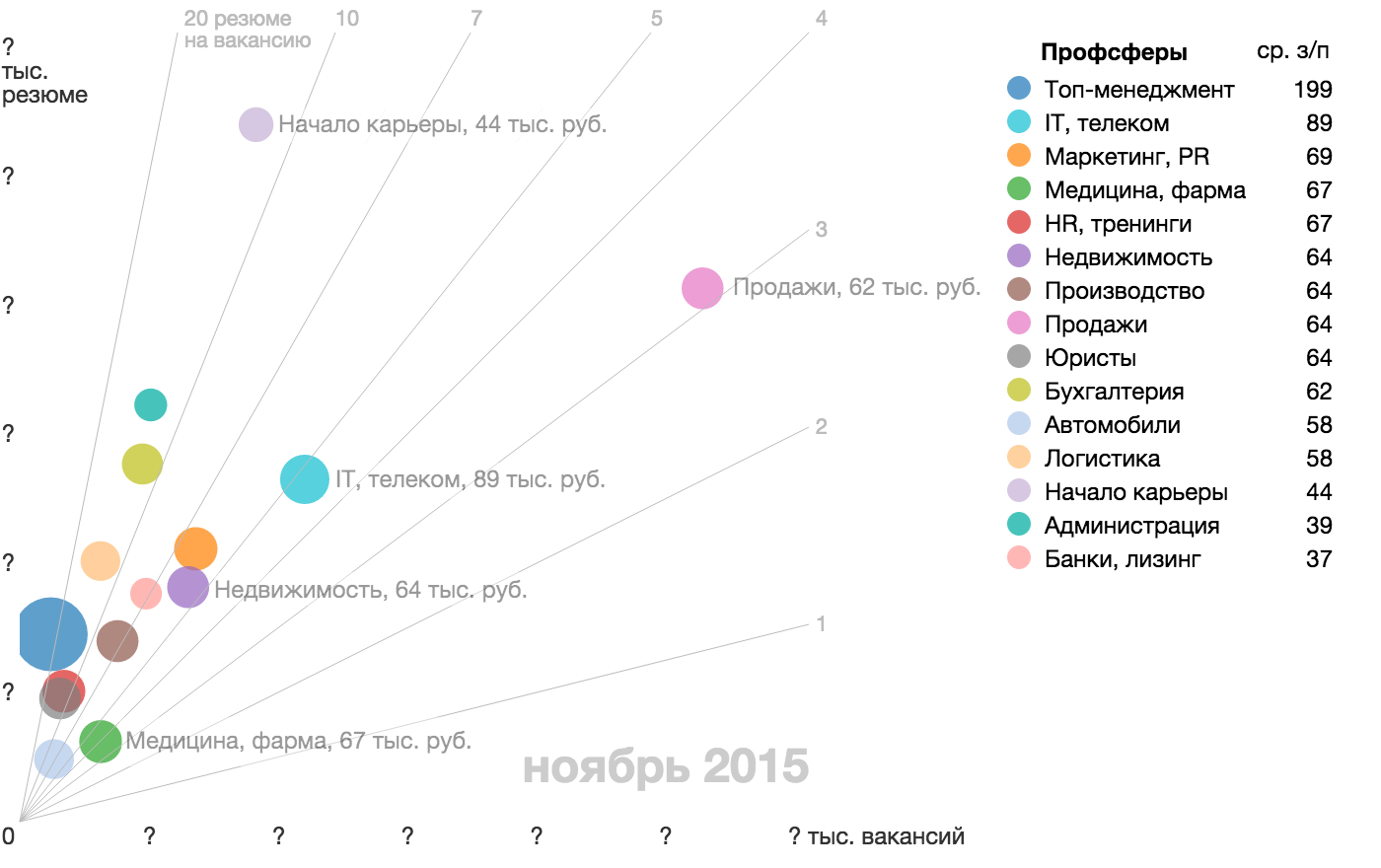

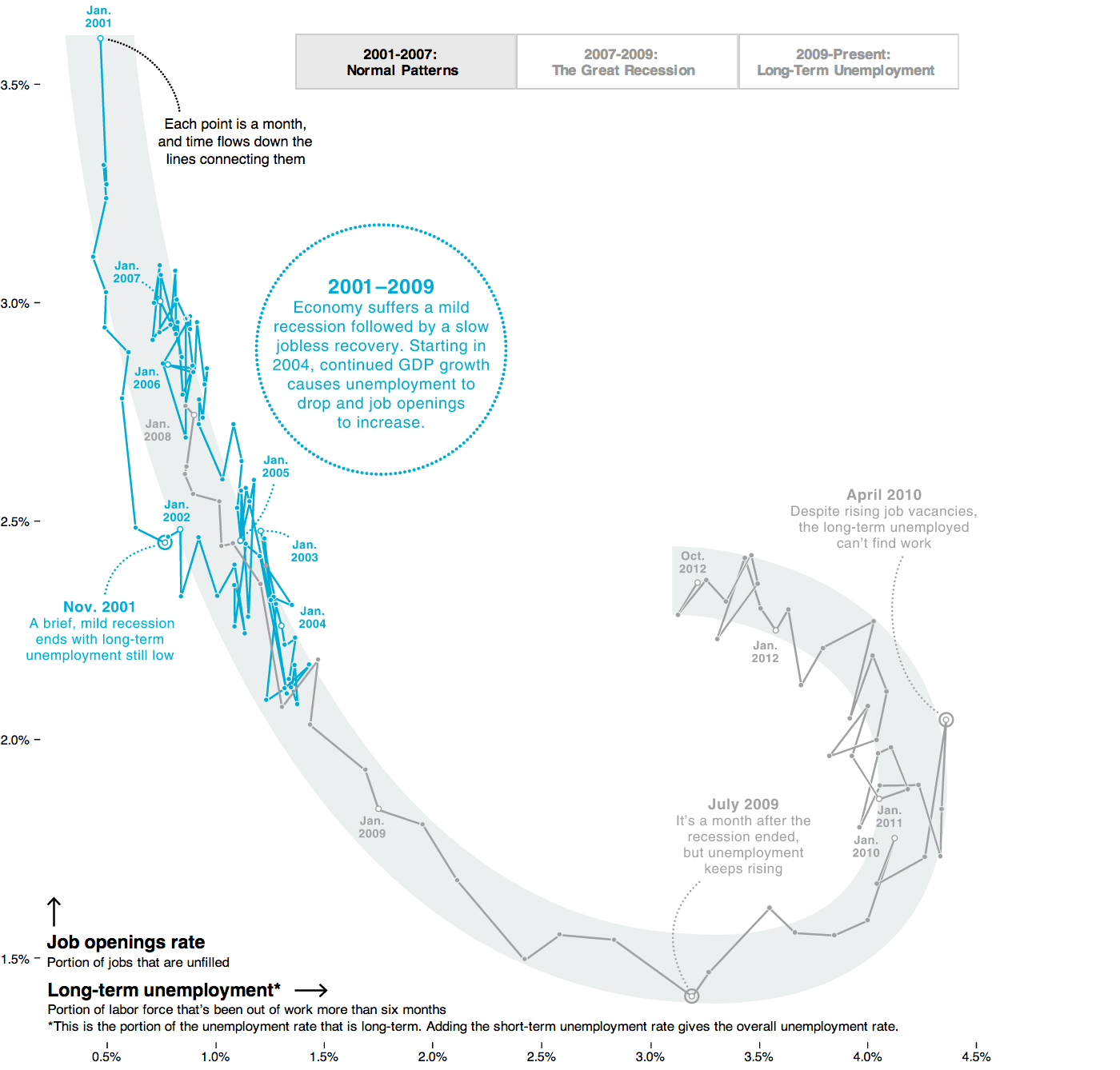

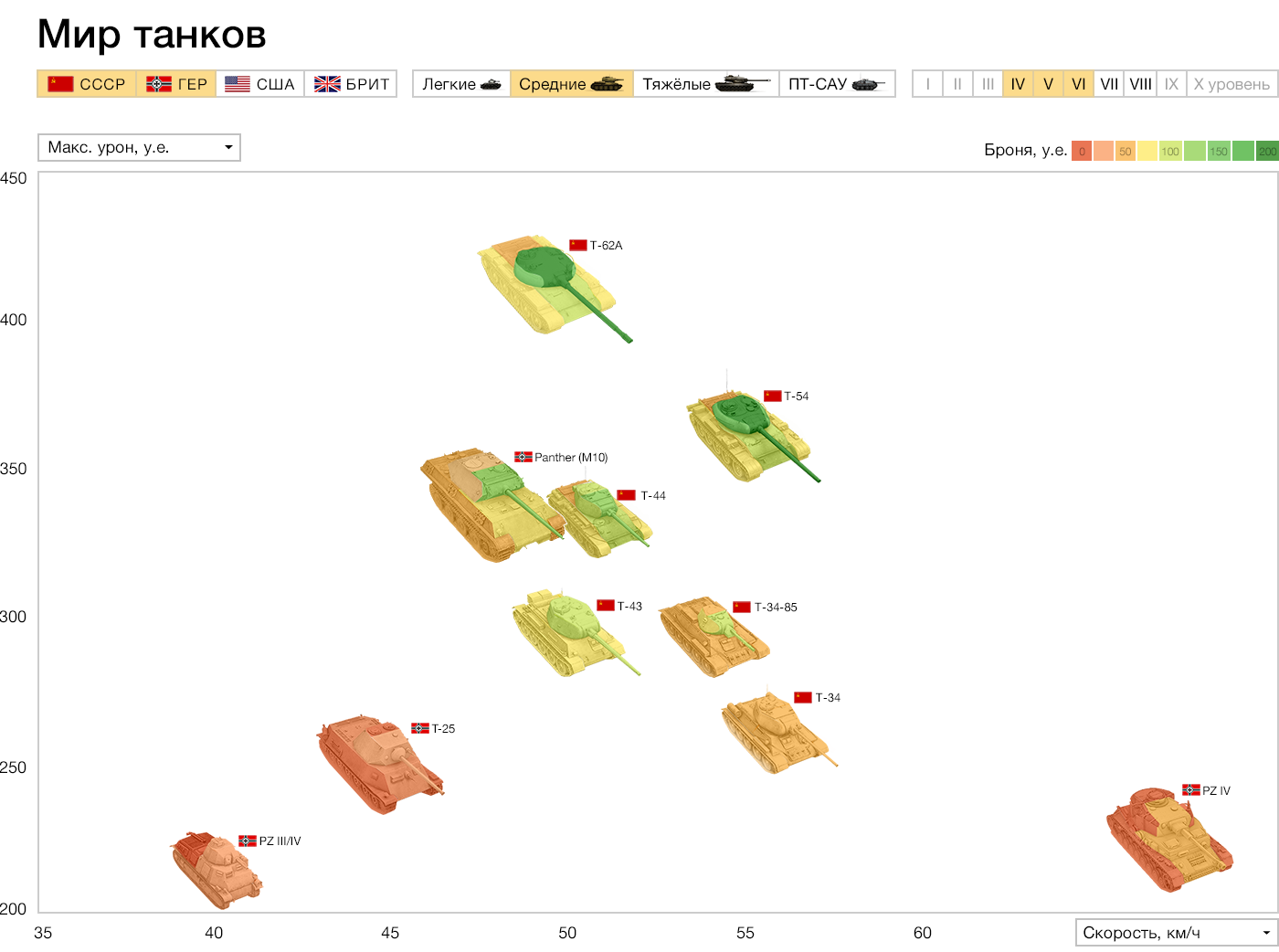

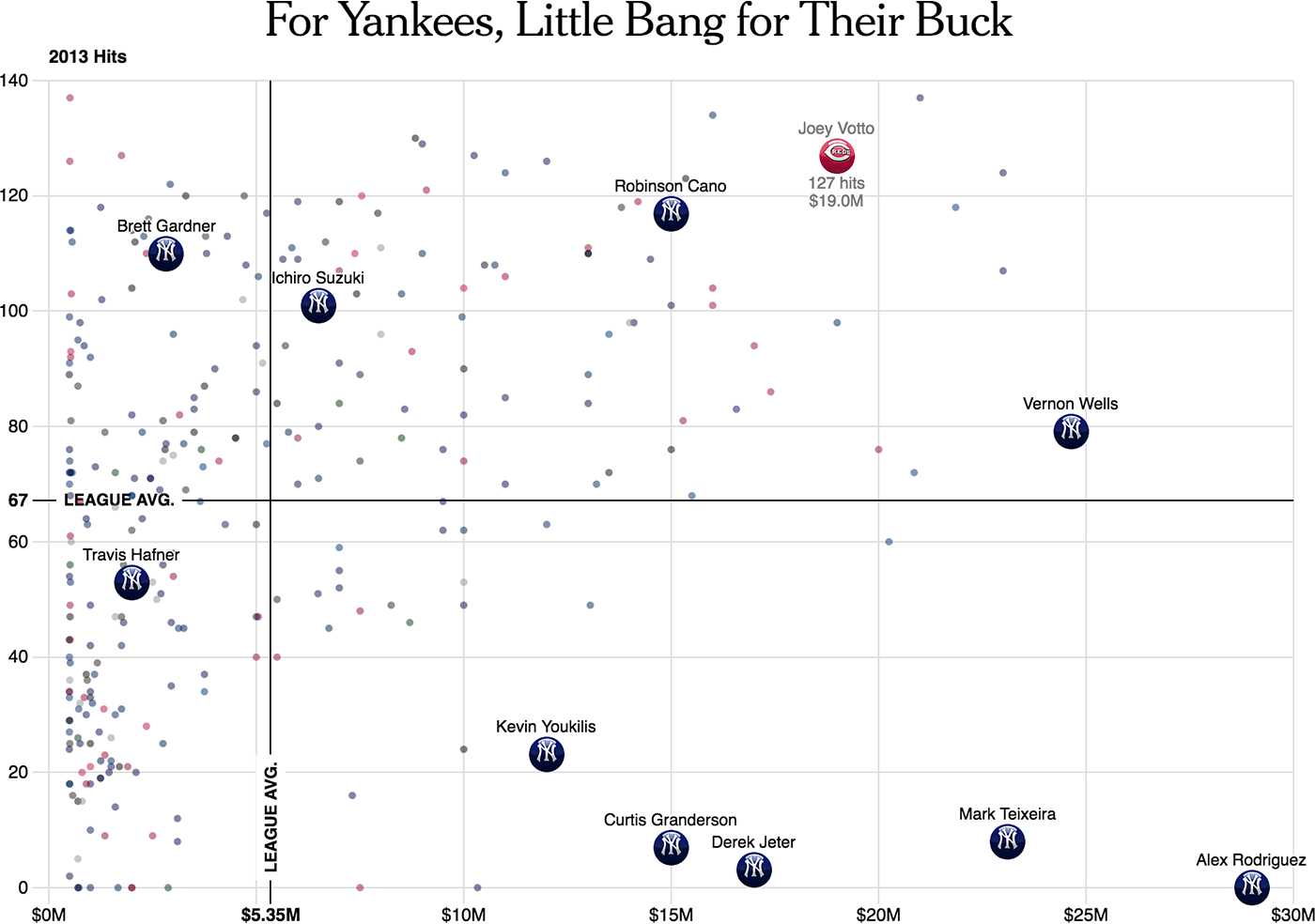

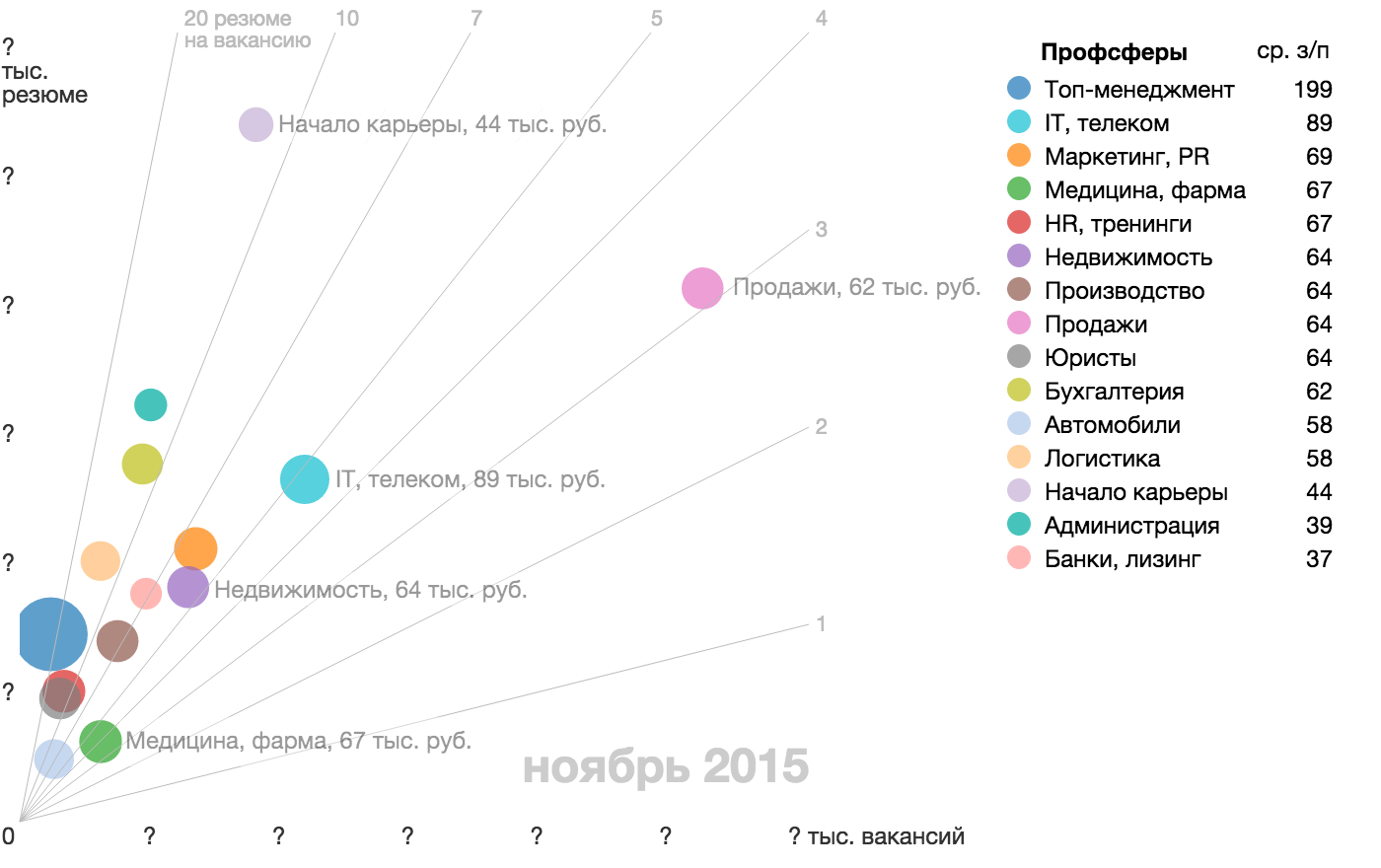

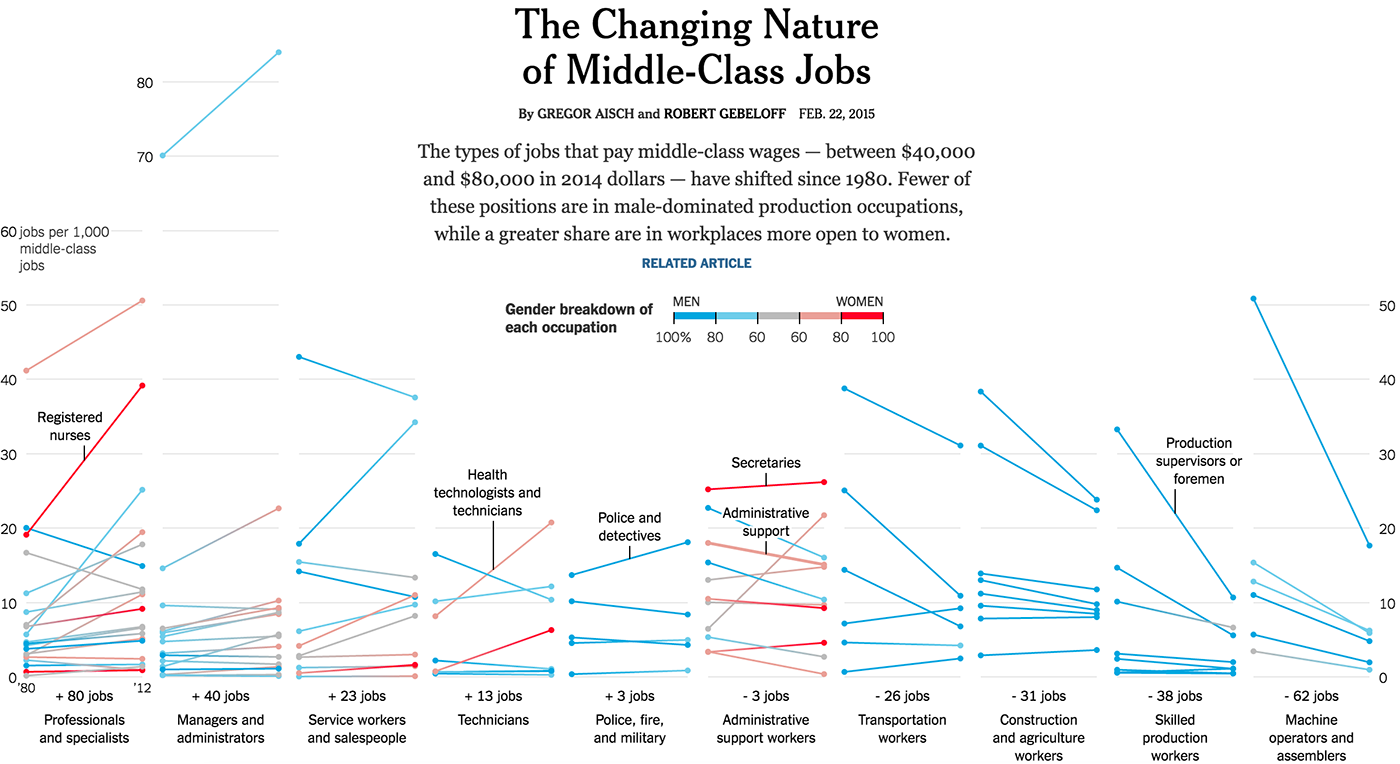

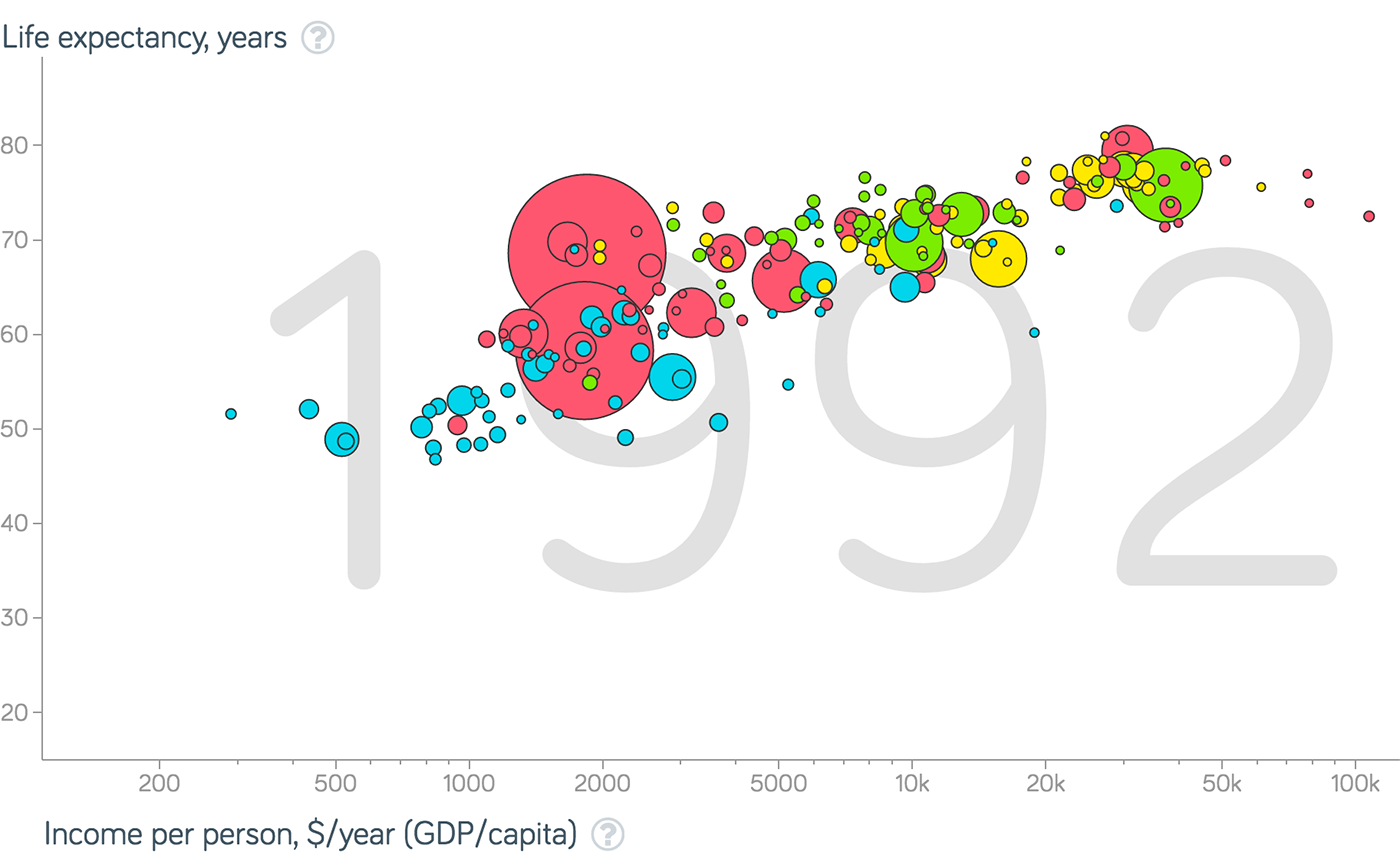

In general, a graph usually means just such a frame - of two continuous axes. Often, a graph shows the dependence of one quantity on another, in this case, according to tradition, the independent quantity is laid out horizontally, and the supposedly dependent one is laid out vertically: Graph of two continuous axes with point objects: Sometimes average values are marked on the axes, and the graph is divided into meaningful quadrants ( "expensive player score", "cheap productive", etc...) also, the chart can be performed rays they show the relationship between the parameters laid on axes, which itself may be considerable th parameter (in this case, the competition in the industry): For visual display with a large spread parameter values used axis with logarithmic scale:

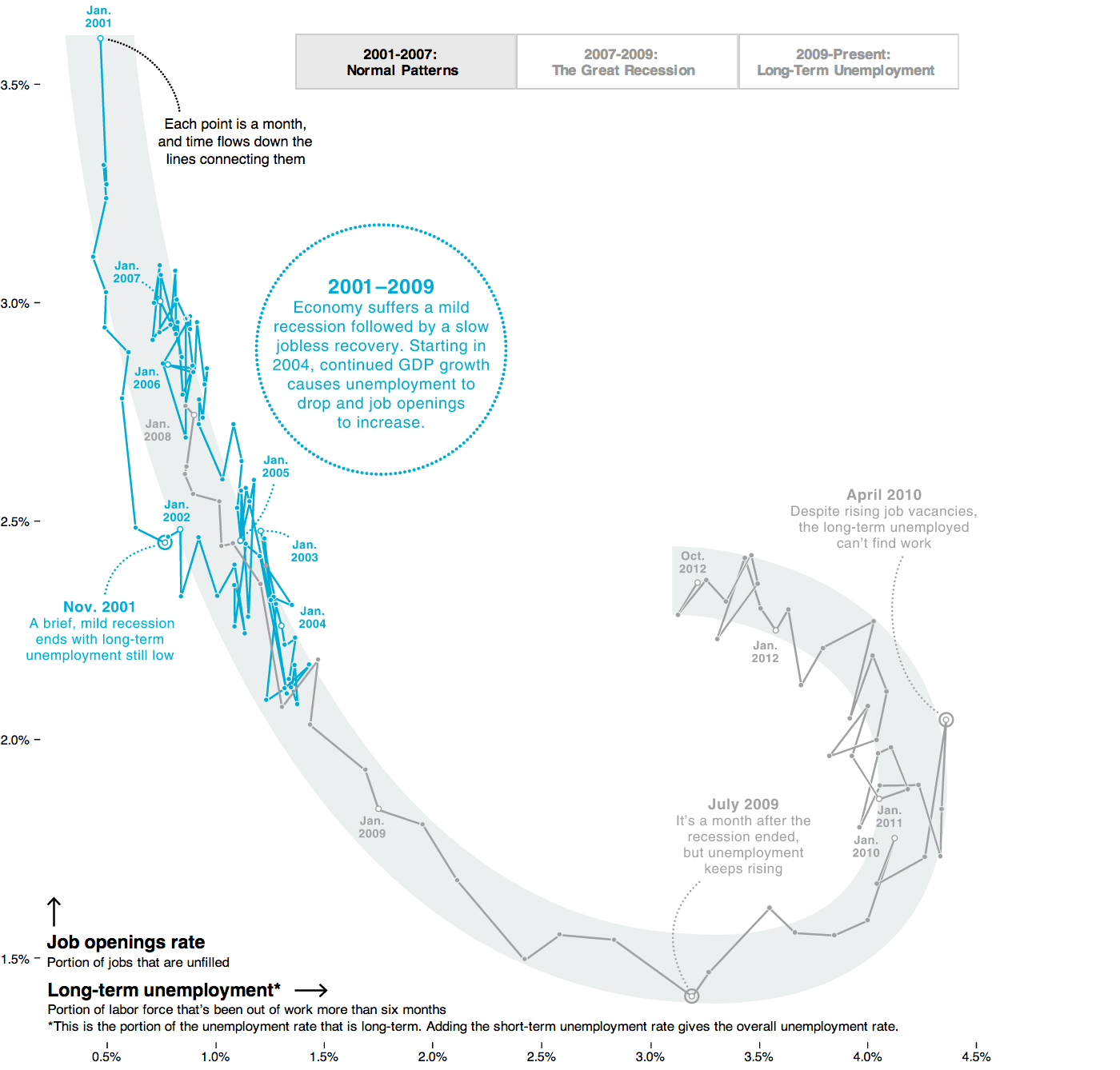

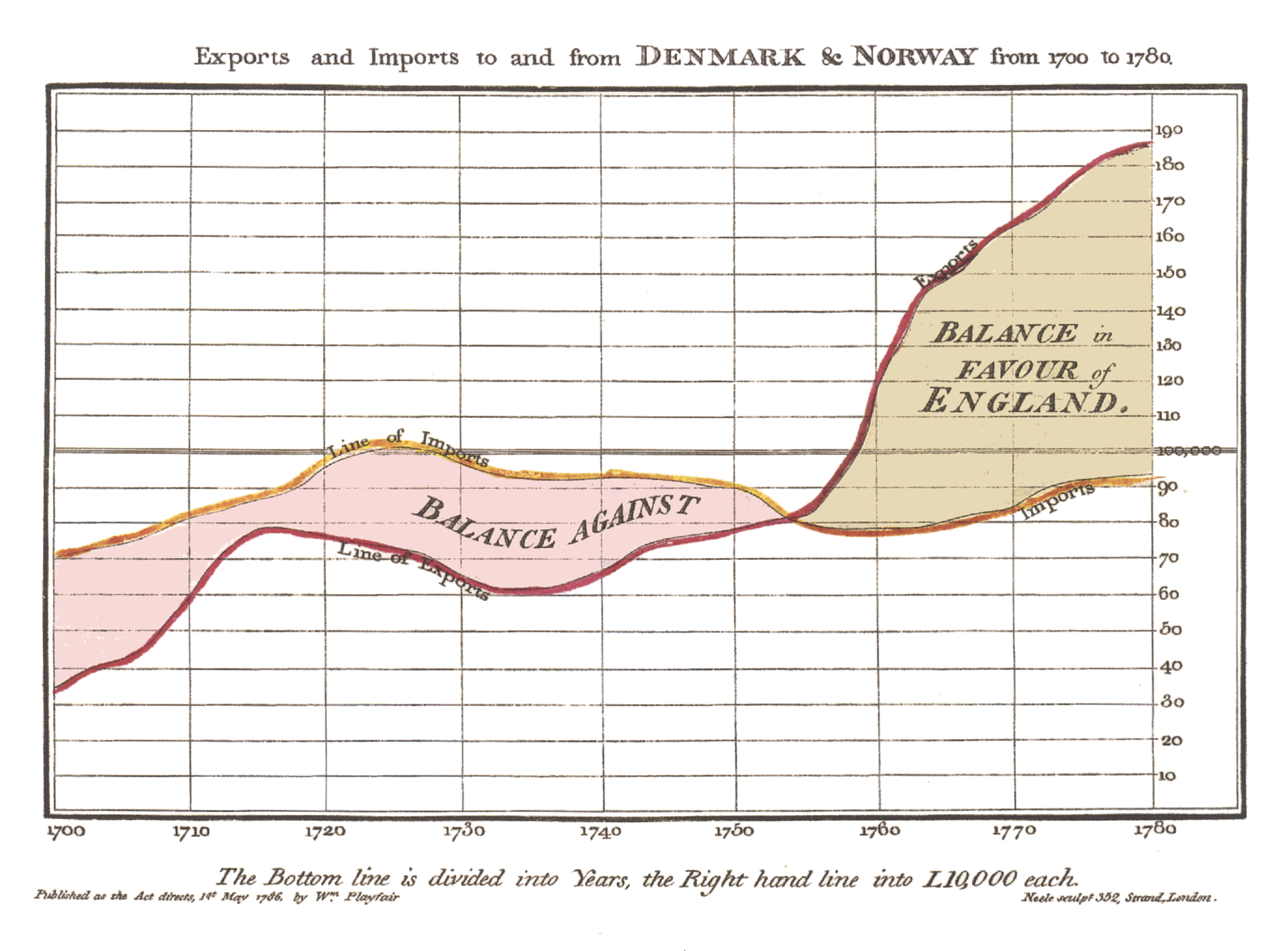

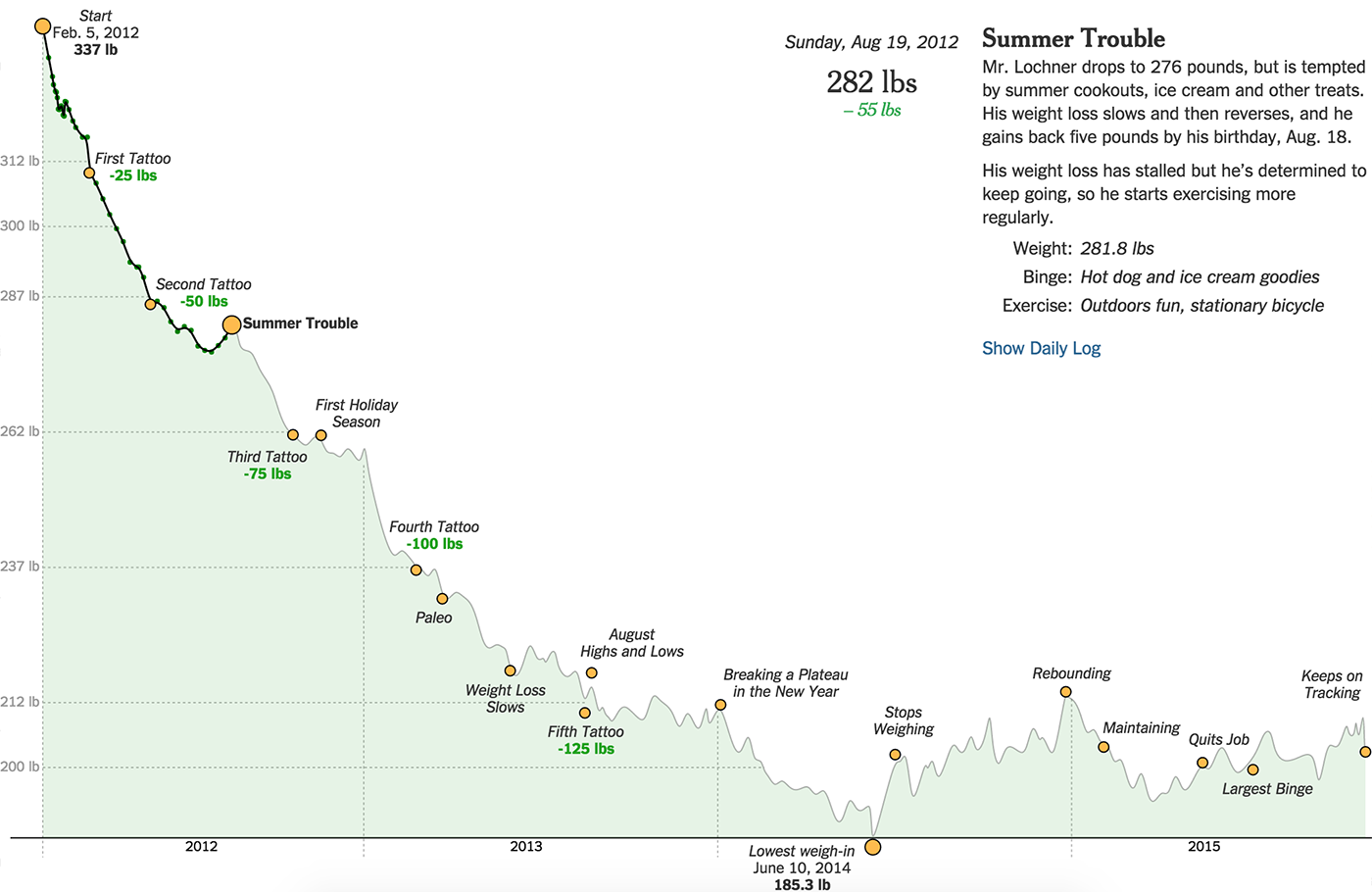

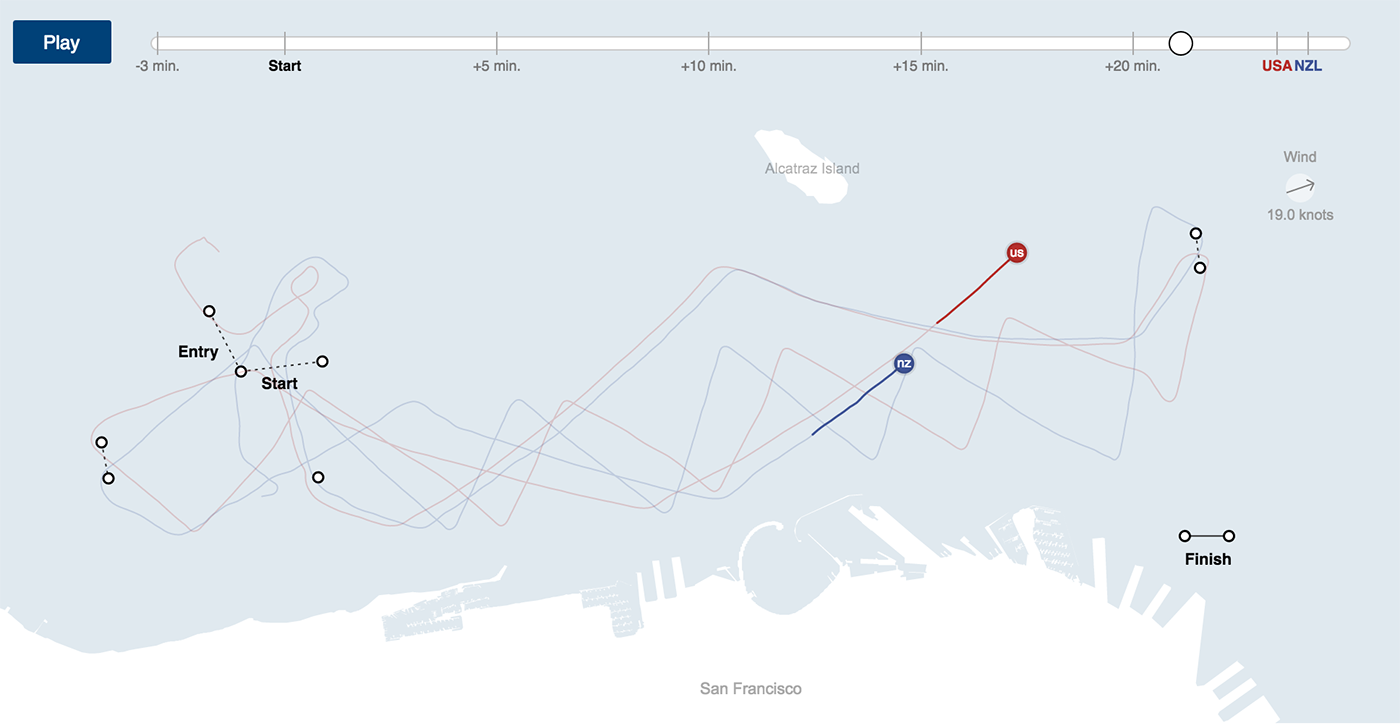

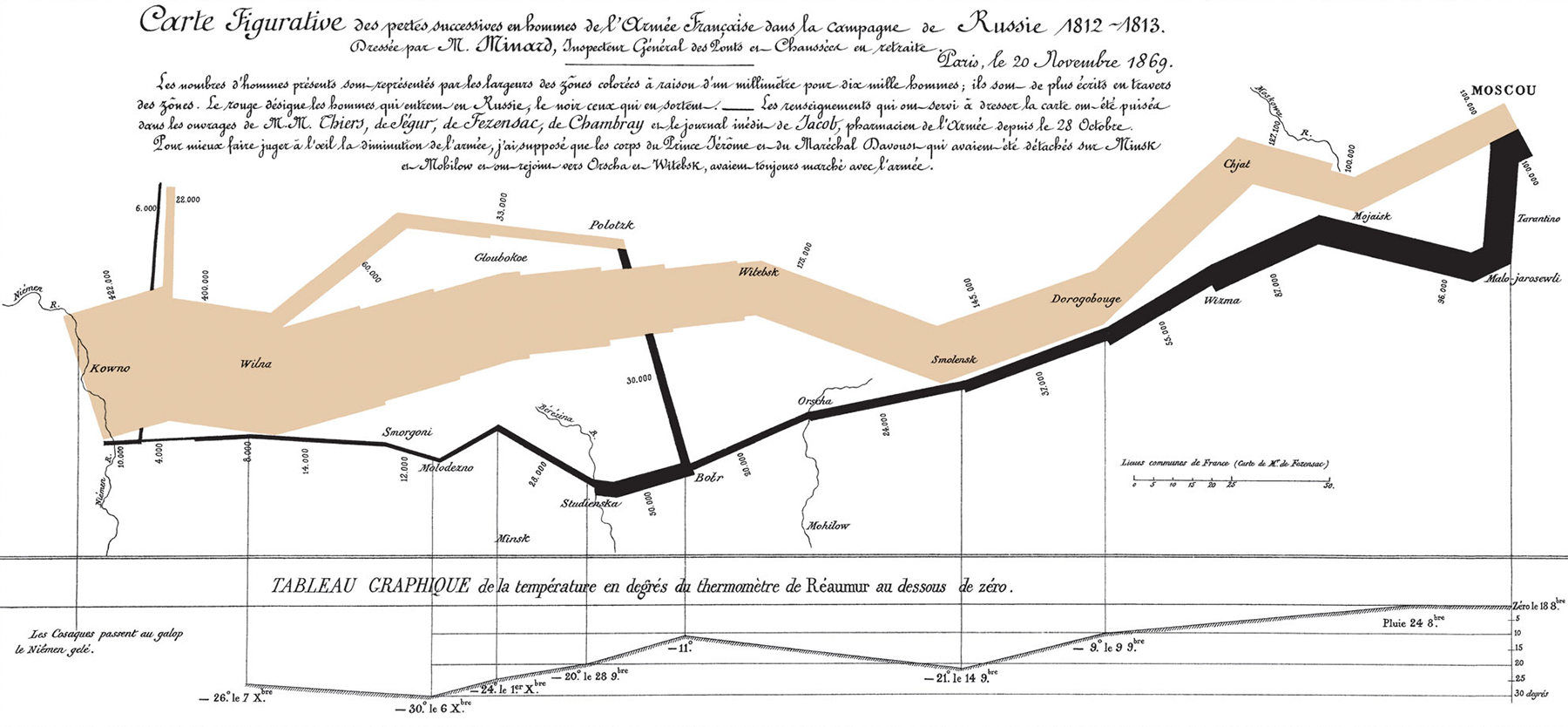

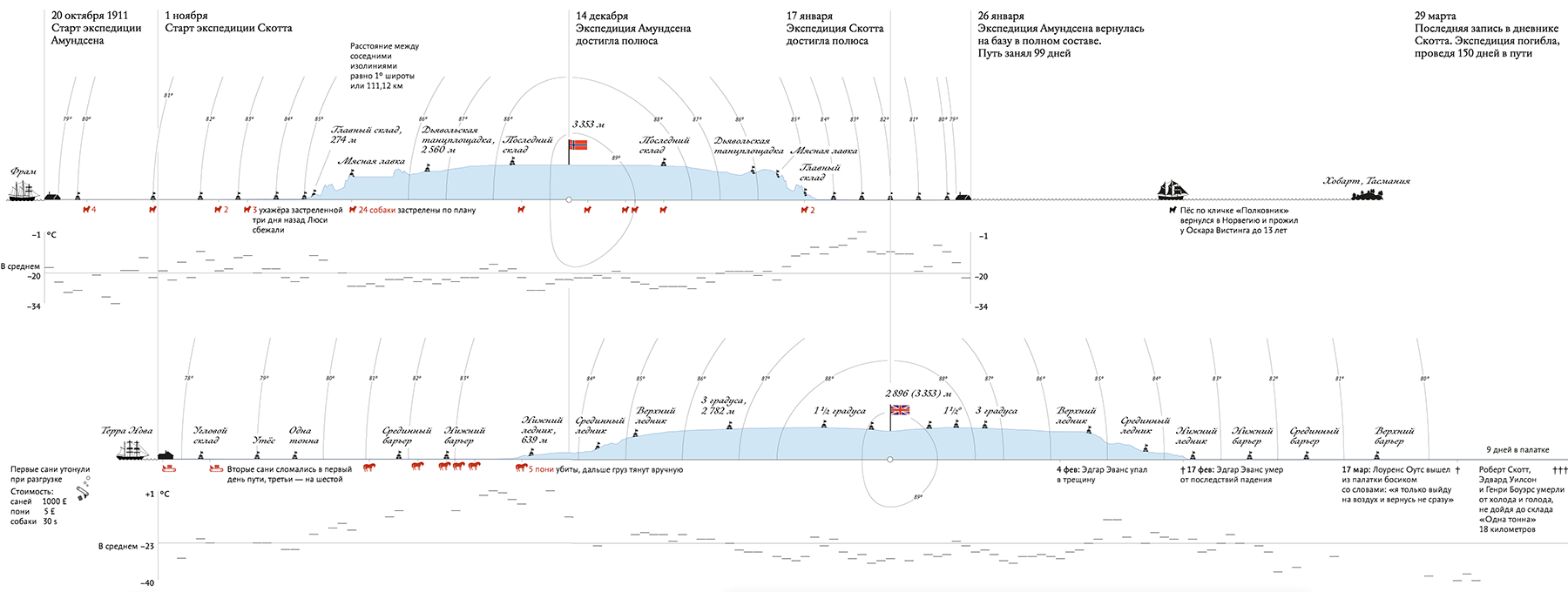

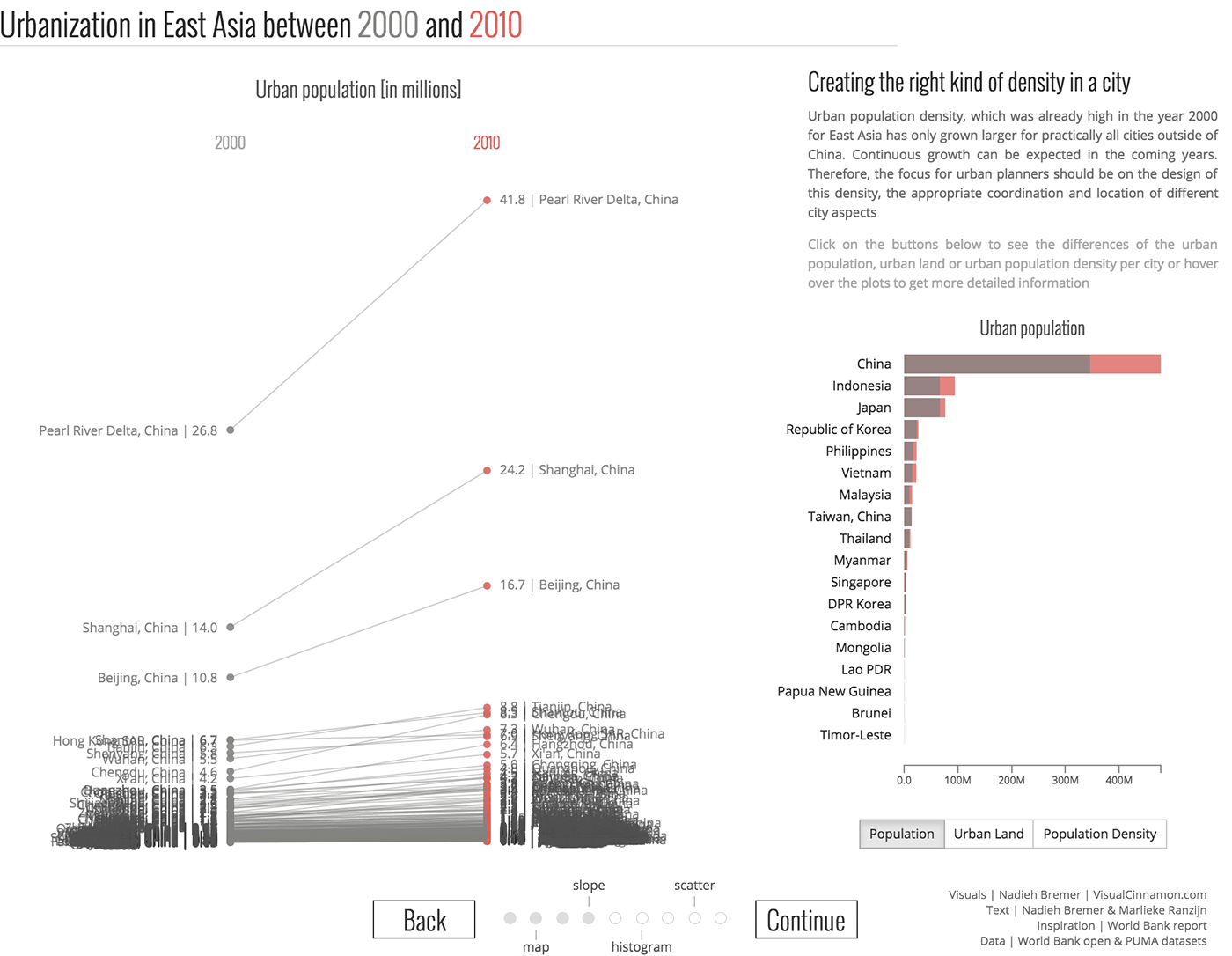

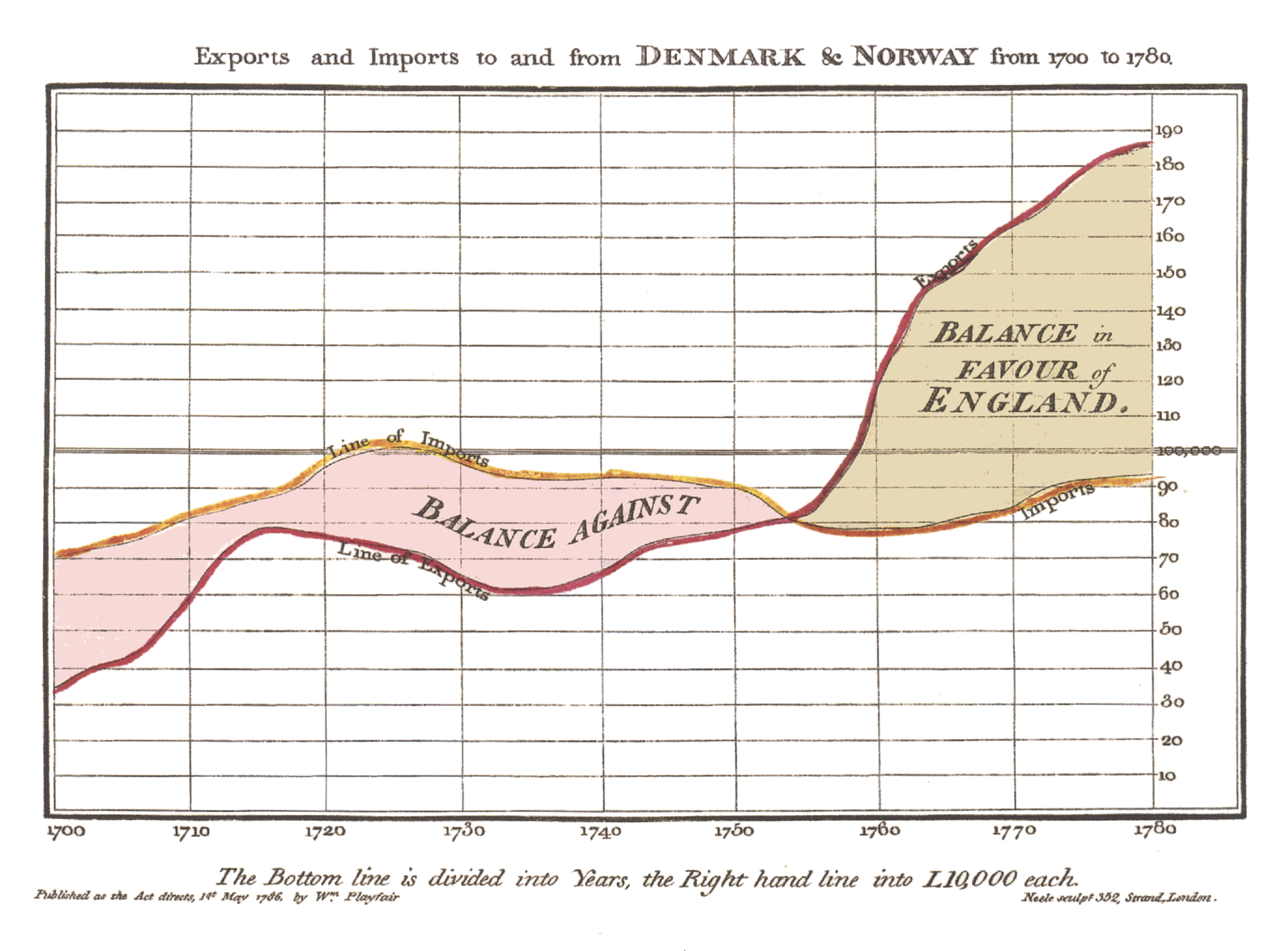

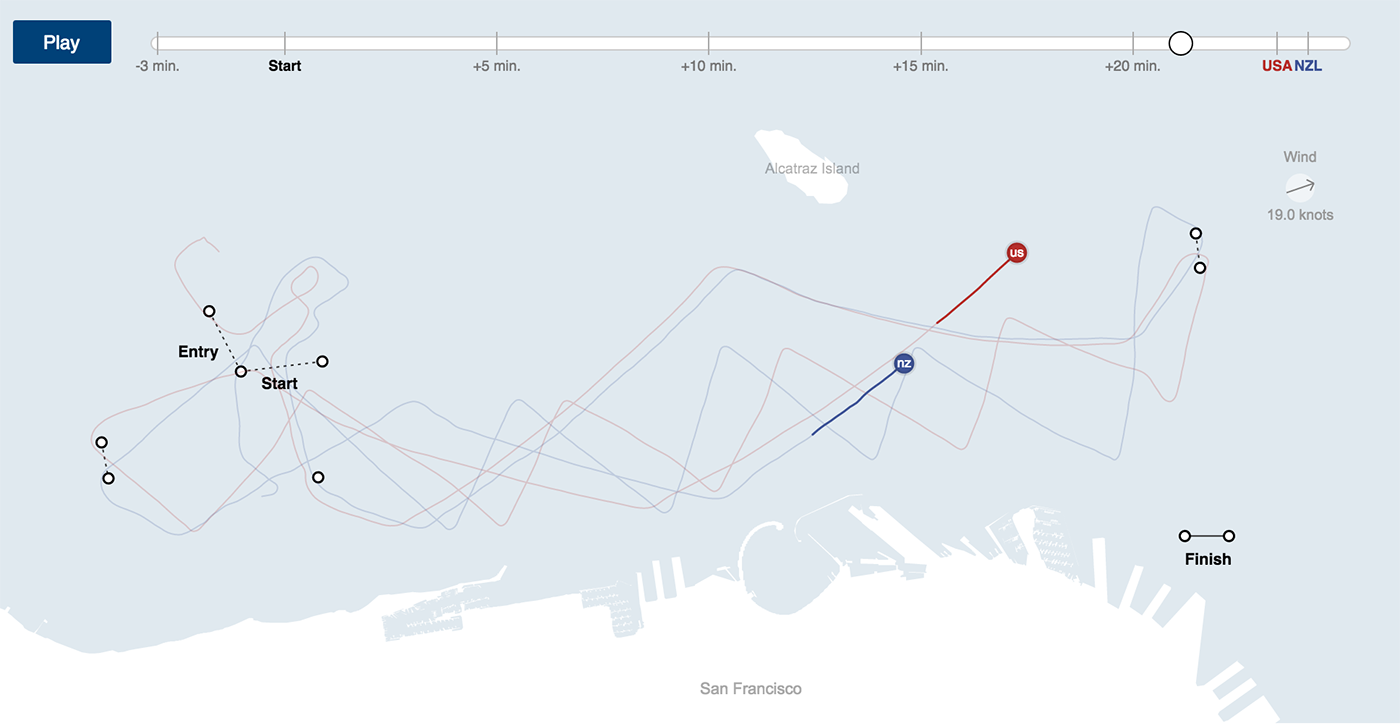

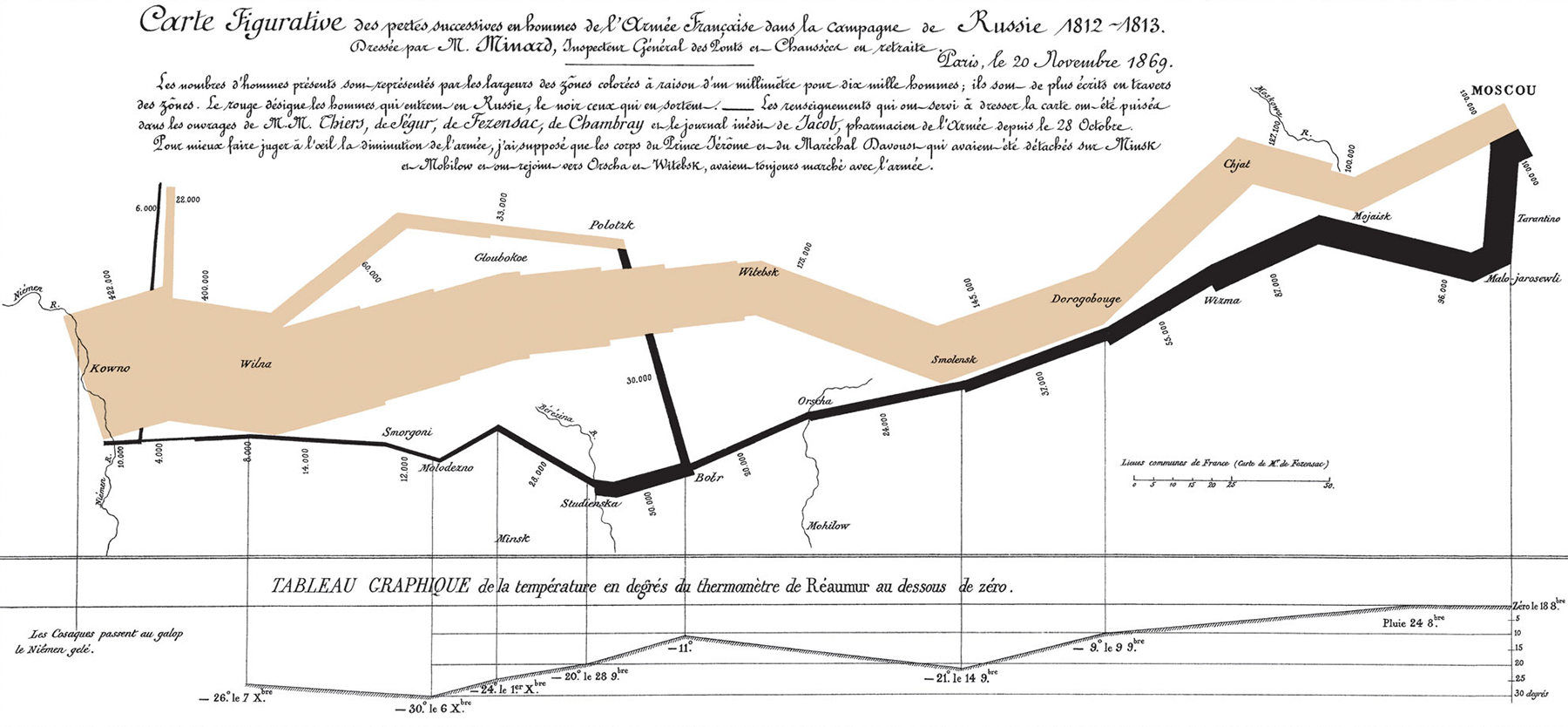

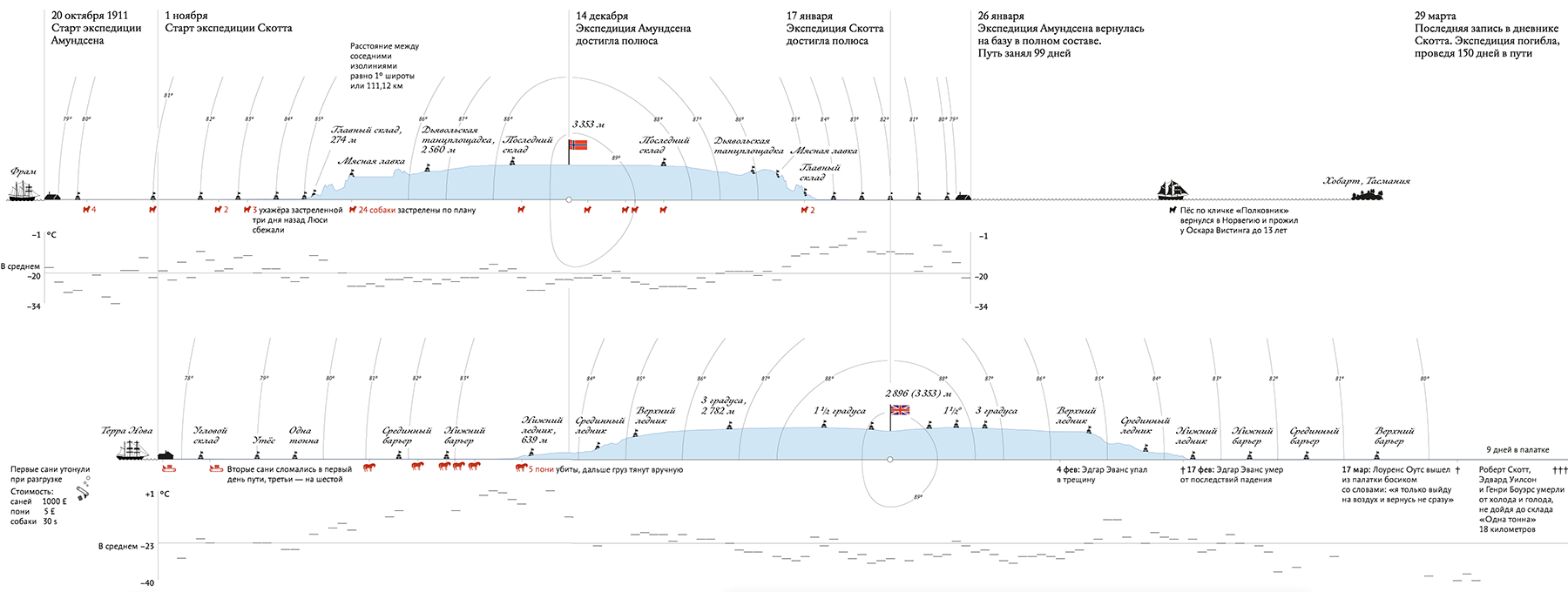

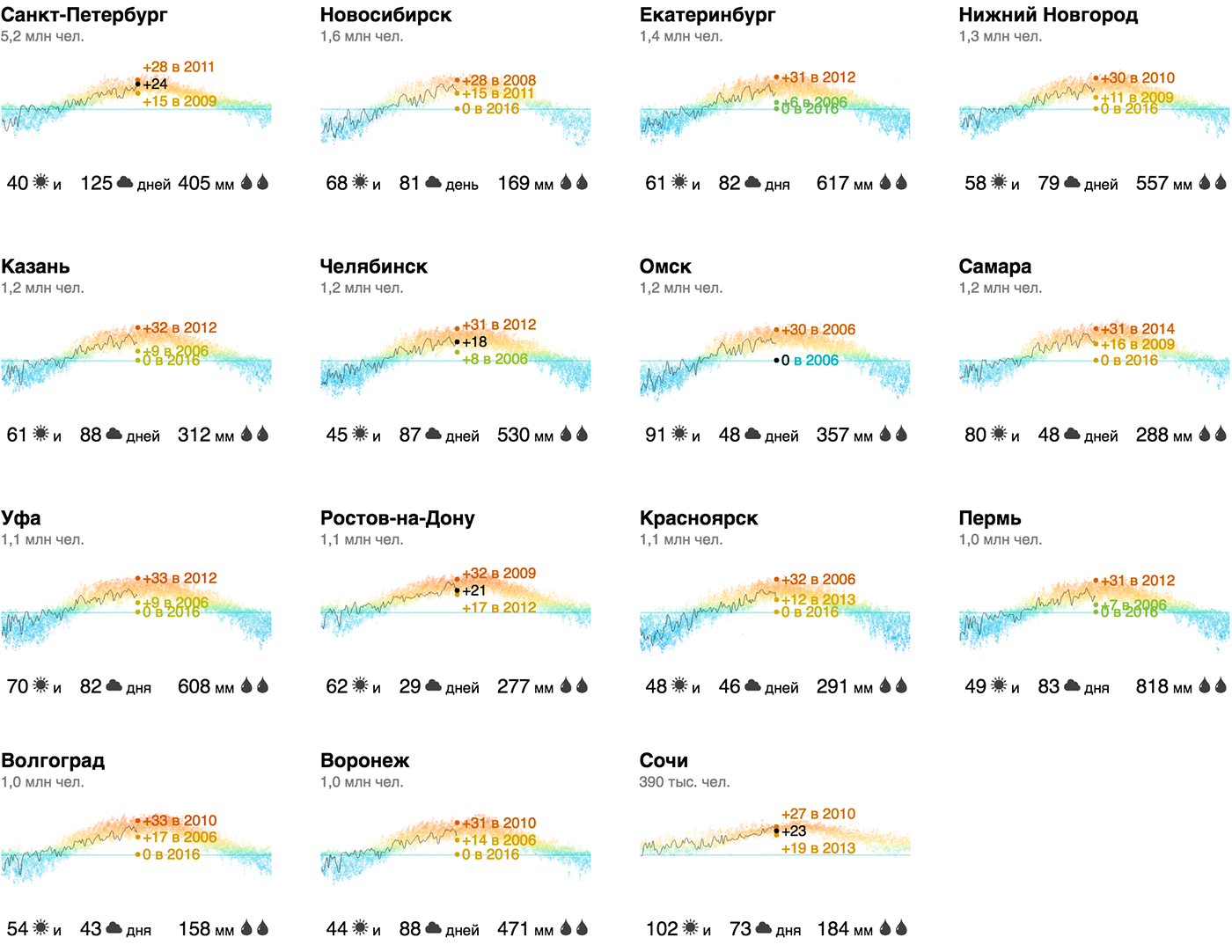

An important special case of continuous axes is the axis of space and time , for example, a geographical coordinate or a time line. A map, a view of a soccer field or basketball court, a production chart are examples of a combination of two spatial axes. Graphs with a time axis are the first abstract graphs that laid the foundation for data visualization: And they are still successfully applied: Another way to show a temporary dimension is to add a spatial picture to the slider: In exceptional cases, space and time can be combined on a flat map or along one spatial axis: spacing axis

It is divided into segments (equal or unequal), which sets the parameter value according to certain rules. The interval axis is suitable for both qualitative and quantitative parameters.

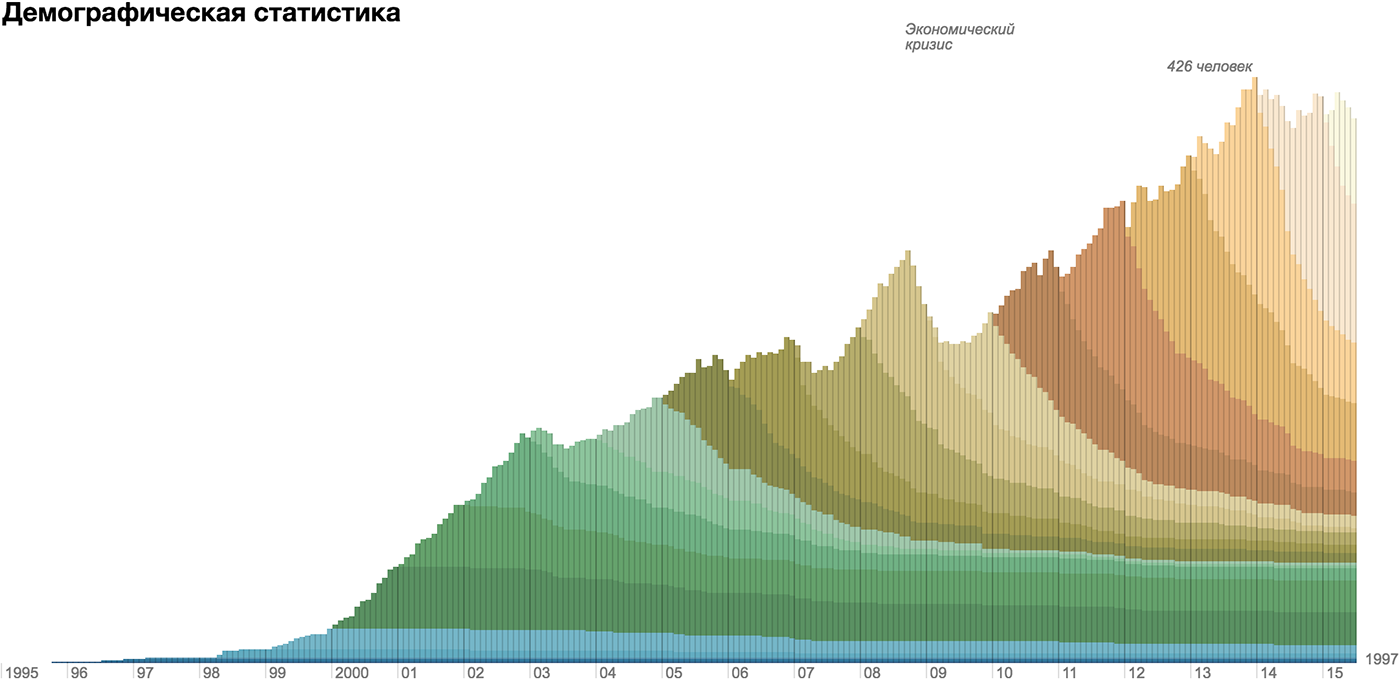

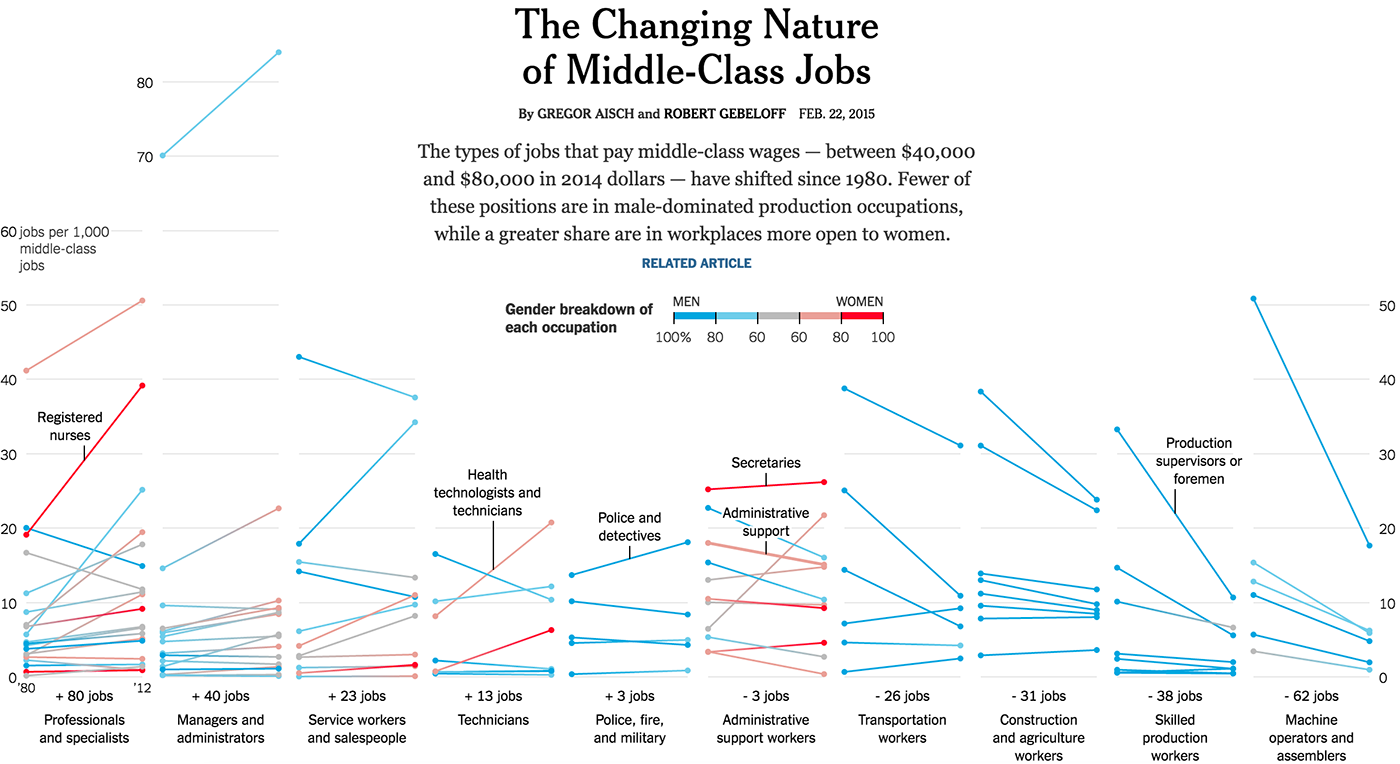

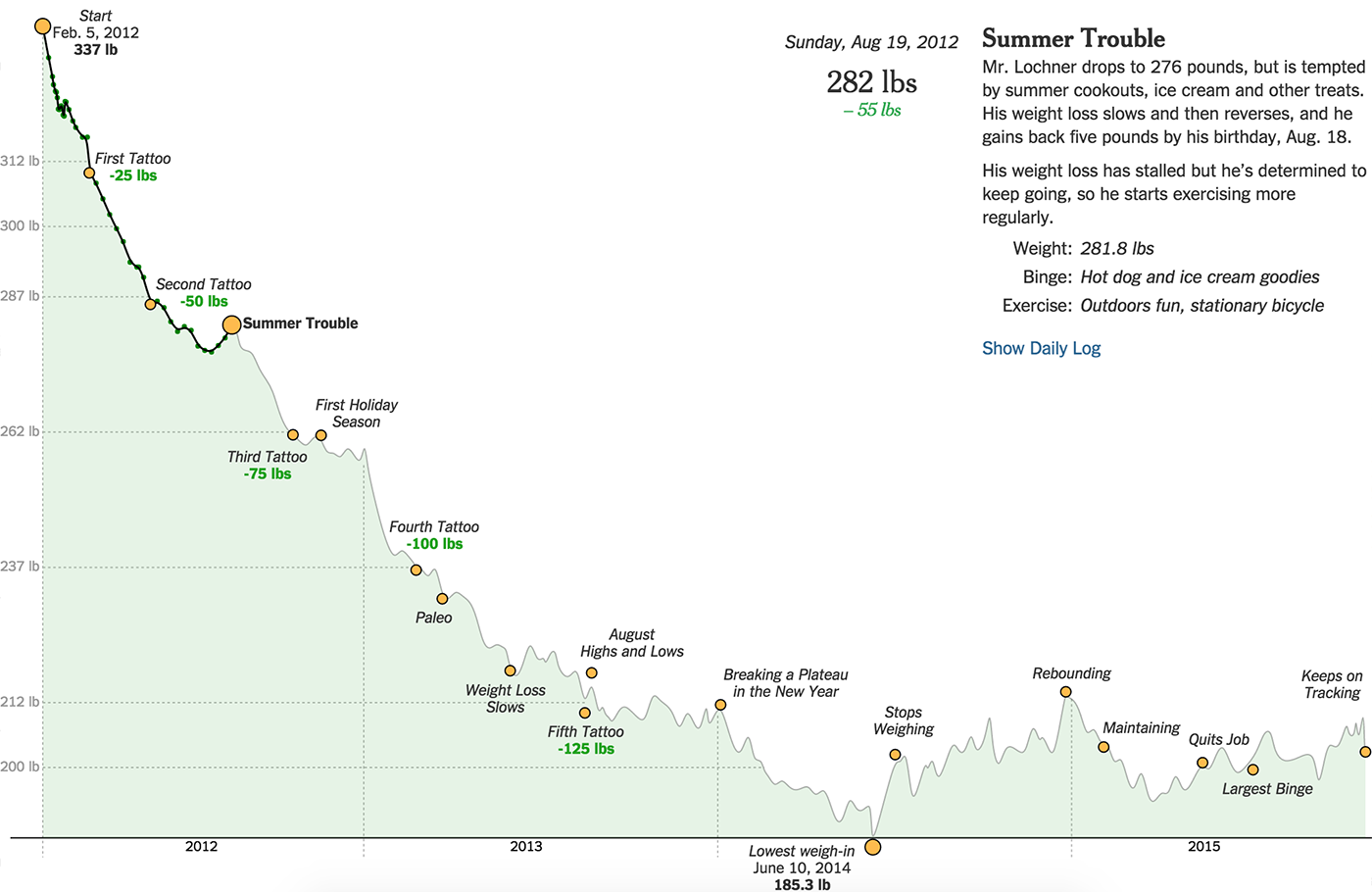

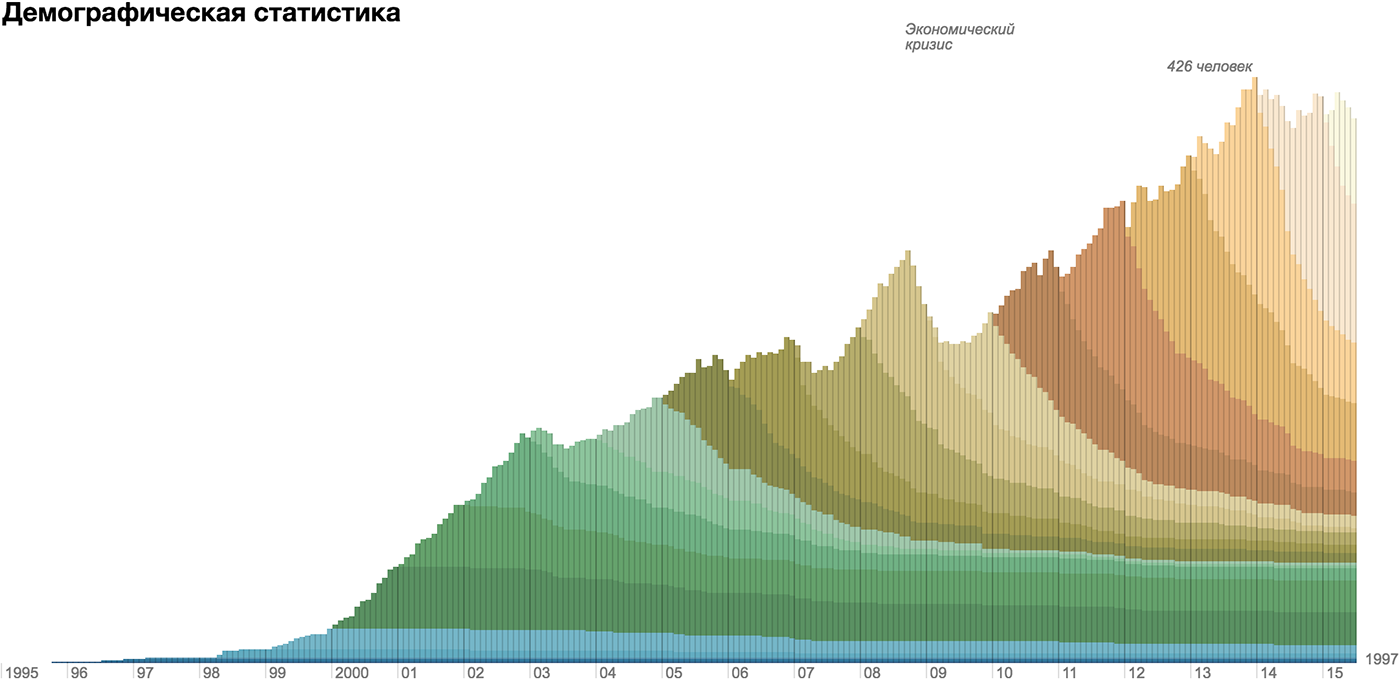

A hitmep is a classic example of a combination of two spacing axes. For example, the number of cases by state and year shown on the corresponding frame: The bar graph is an example of a combination of the interval (time interval) and continuous (value) axis: Two interval axes do not have to turn into a hit chart: A layered axis lays several parallel axes (continuous and interval) in one dimension. Most often, this technique works with timelans, when layers with data, texts and graphics are superimposed on one time axis:

Sometimes visualization requires a degenerate axis, to which a specific parameter is not attached, or on which only two values are shown. Most often this happens when the visualization illustrates the connection - to show the connection, you need space between objects.

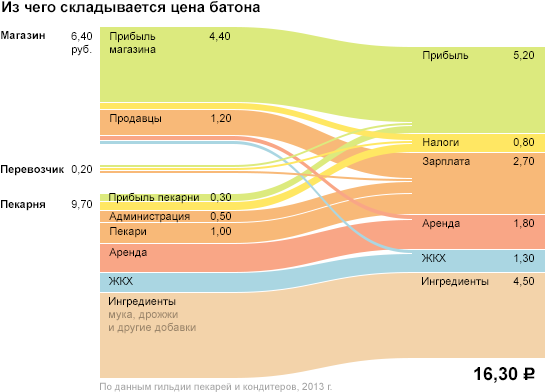

The “was-it-become” format data most often requires a degenerate axis: But it does not necessarily “eat up” the screen dimension: A degenerate axis is permissible if it exhibits important data features and thus “pays for” the loss of the whole screen dimension. But you should use it only as a last resort. Unfortunately, in spectacular popular infographic formats, one or even two screen axes are often degenerate. Another way to use screen space is to fill it with consecutive blocks.

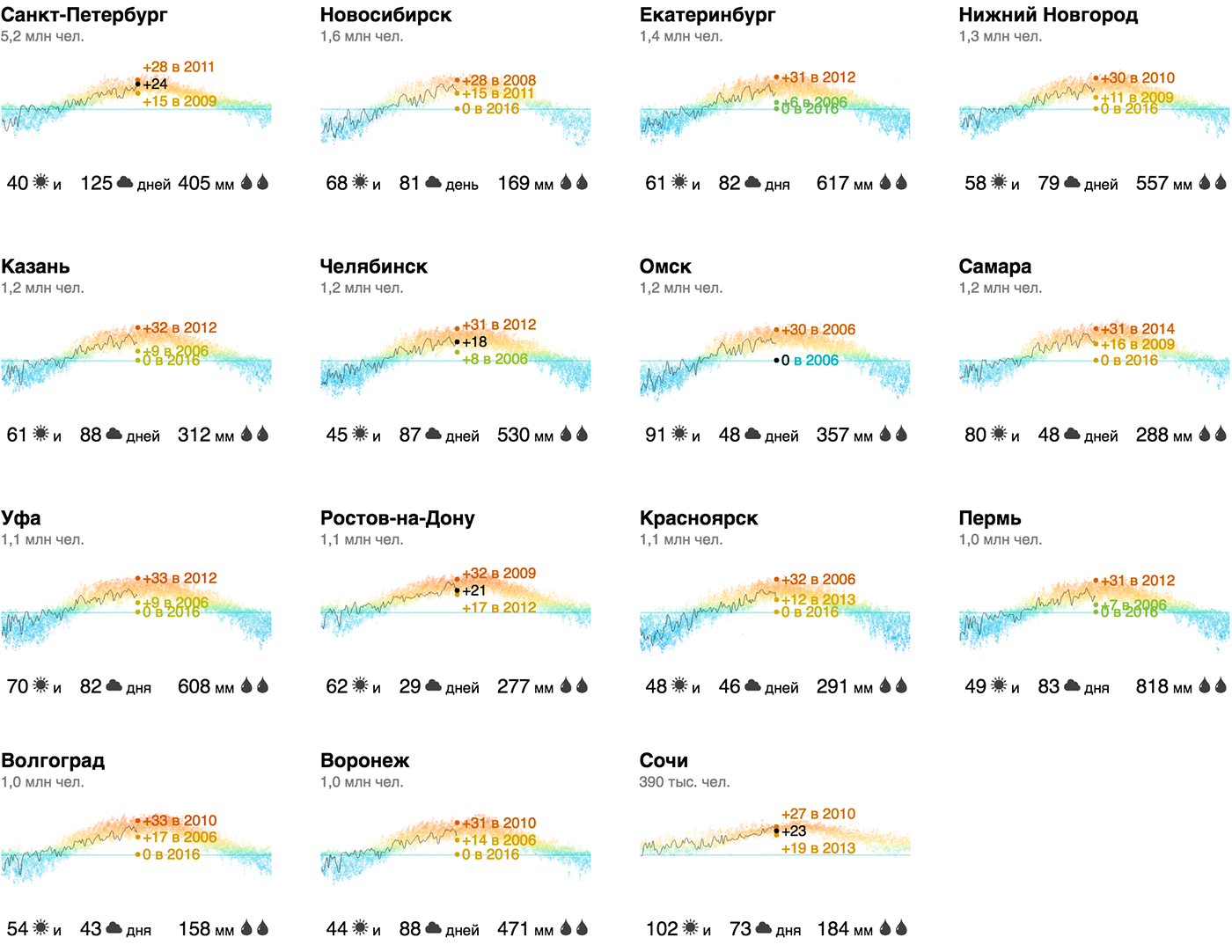

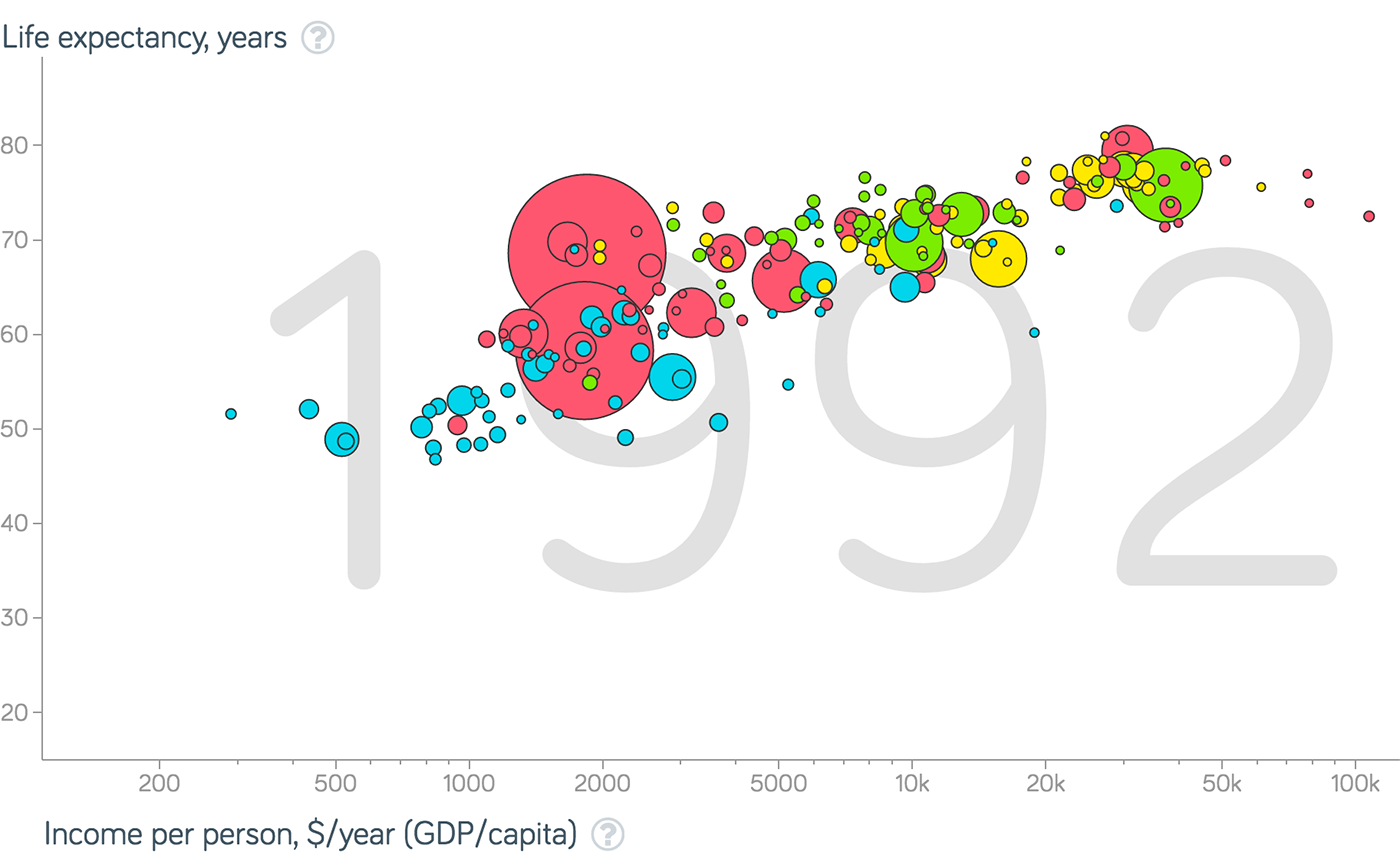

uniform mesh . The objects inside the grid are ordered linearly, for example, alphabetically: Or according to the size of the city: The grid adjusts to the size of the screen and does not have pronounced horizontal and vertical guides. In most cases, the visualization framework consists of the axes listed above. A rare exception is three-dimensional visualizations, even more rare are their successful examples: In those cases when the choice of the frame is not obvious, I combine the axes with important parameters in a more or less random way, formulate what question this or that combination of axes answers, I choose the most successful combinations. Interesting pictures are obtained at the junction of supposedly dependent data parameters: The behavior of countries on the Gepminder analytical charts

And from the distribution of data particles along different axes:

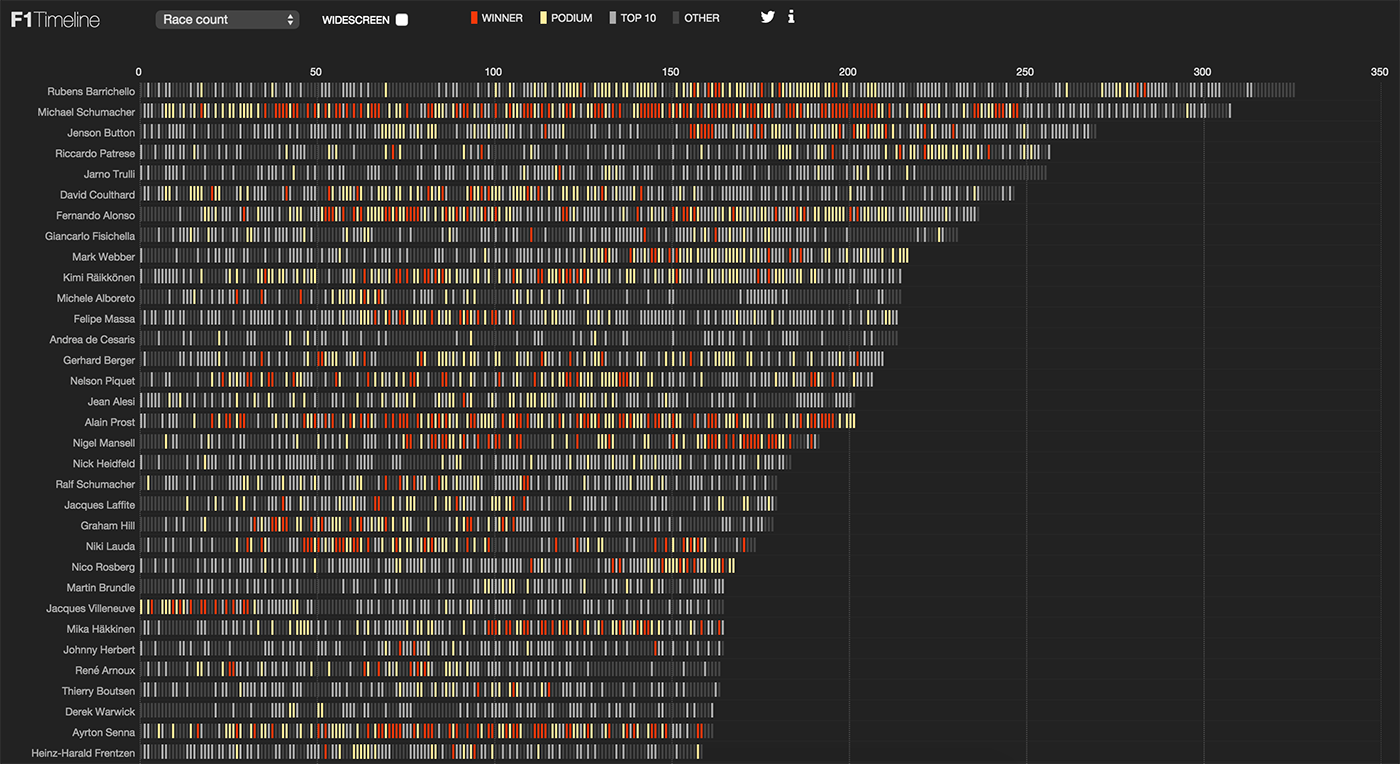

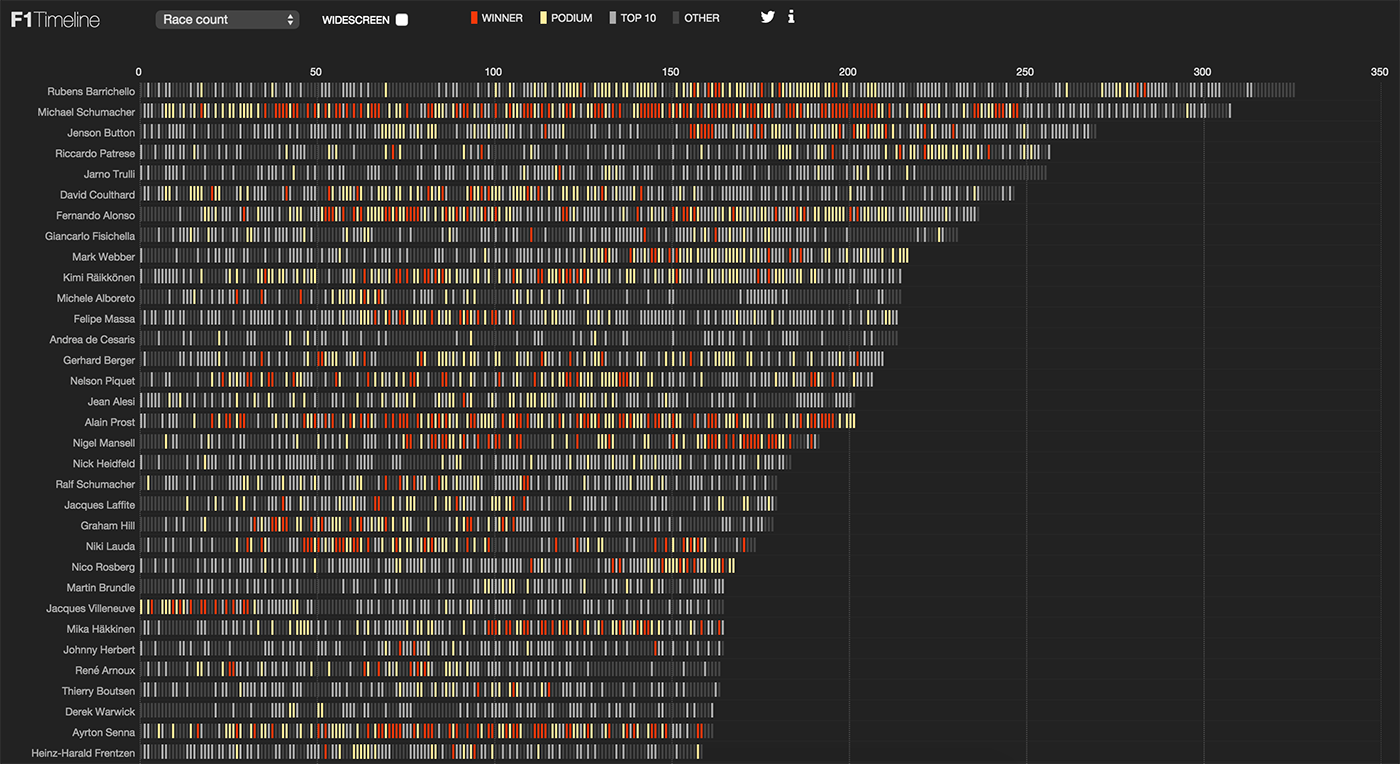

The results of Formula 1 pilots by time, number of races and age of pilots

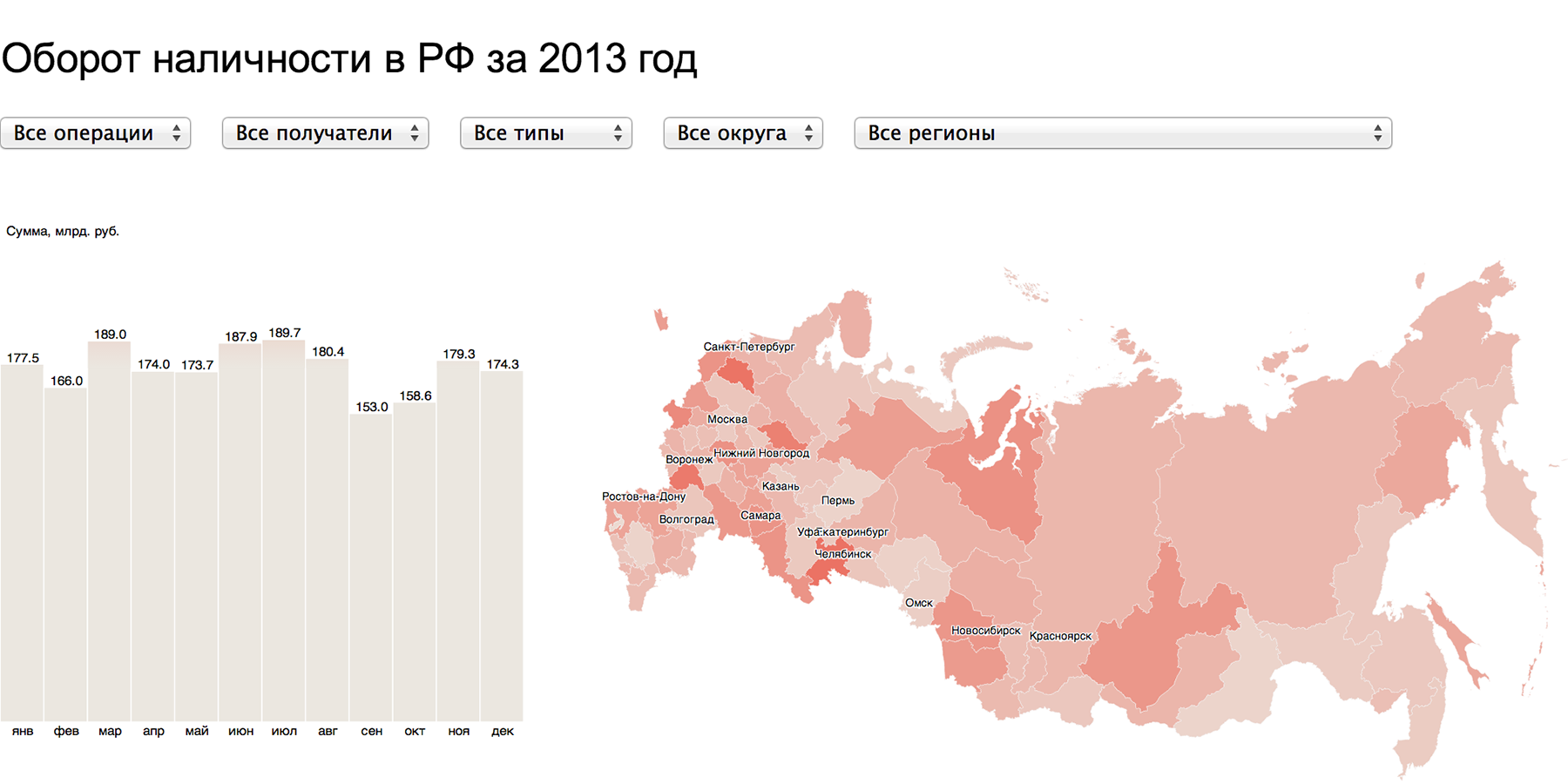

In interactive combinations of simple frames, truly powerful visualizations are born. Timeline + map:

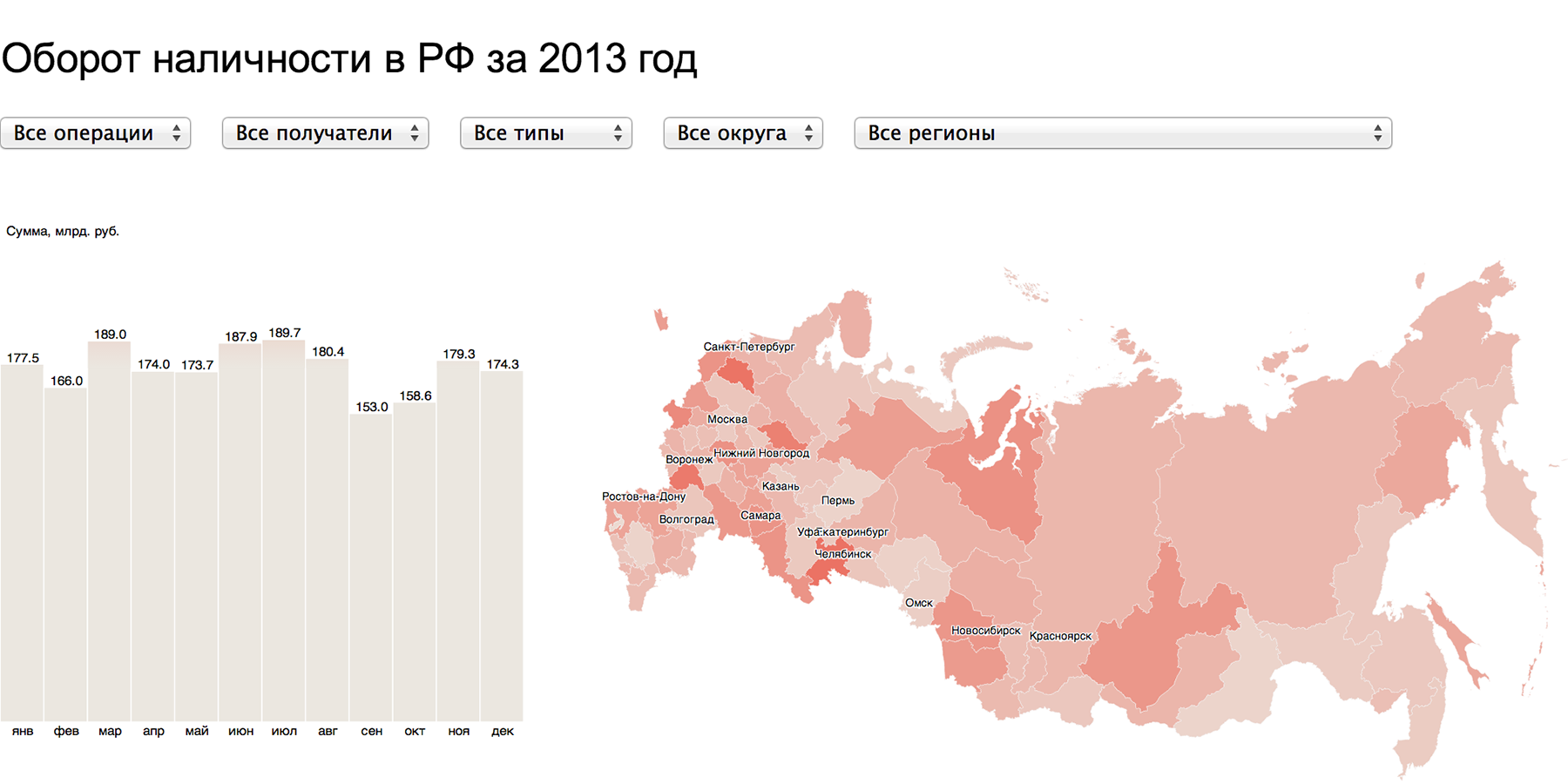

Cash turnover in the Russian Federation

Map + hitmap:

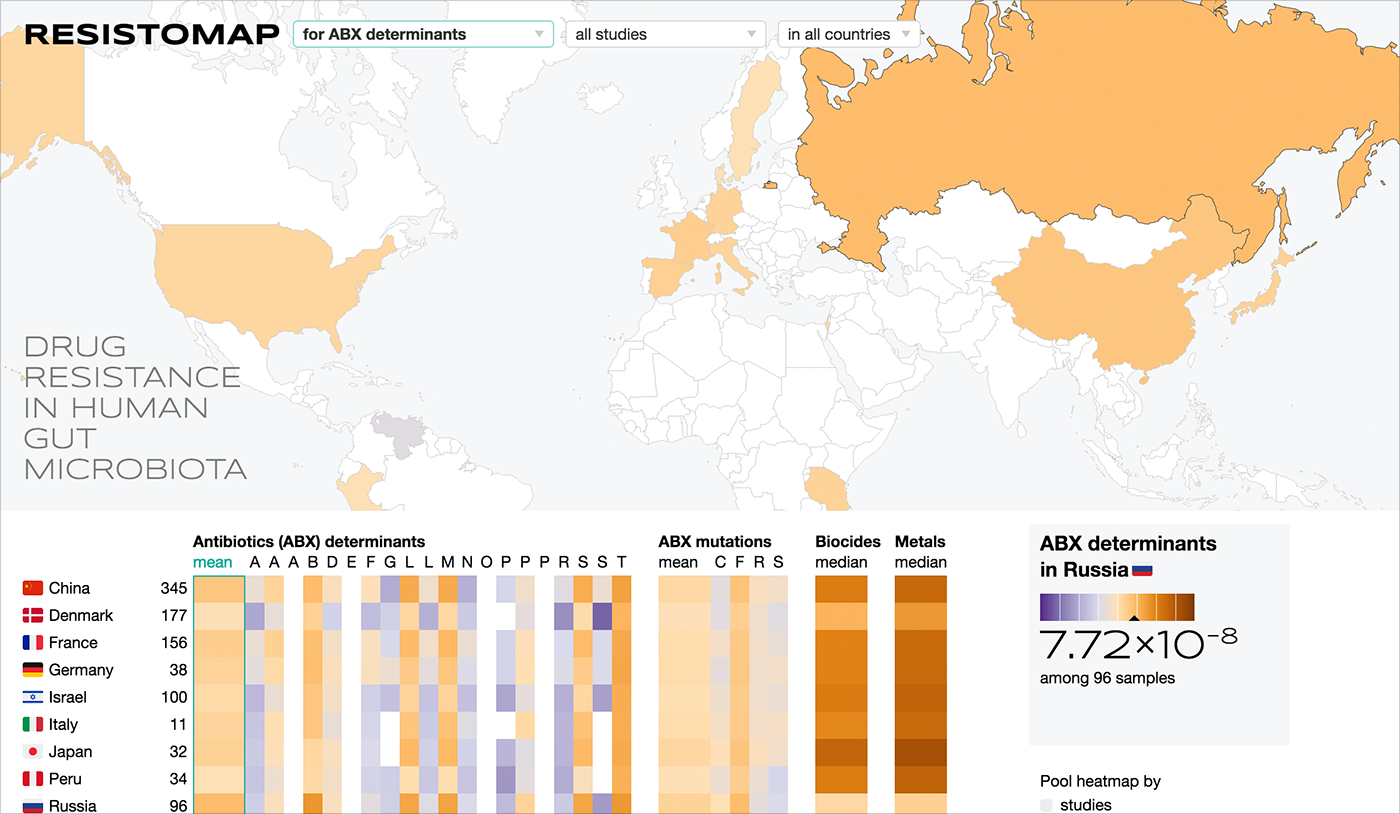

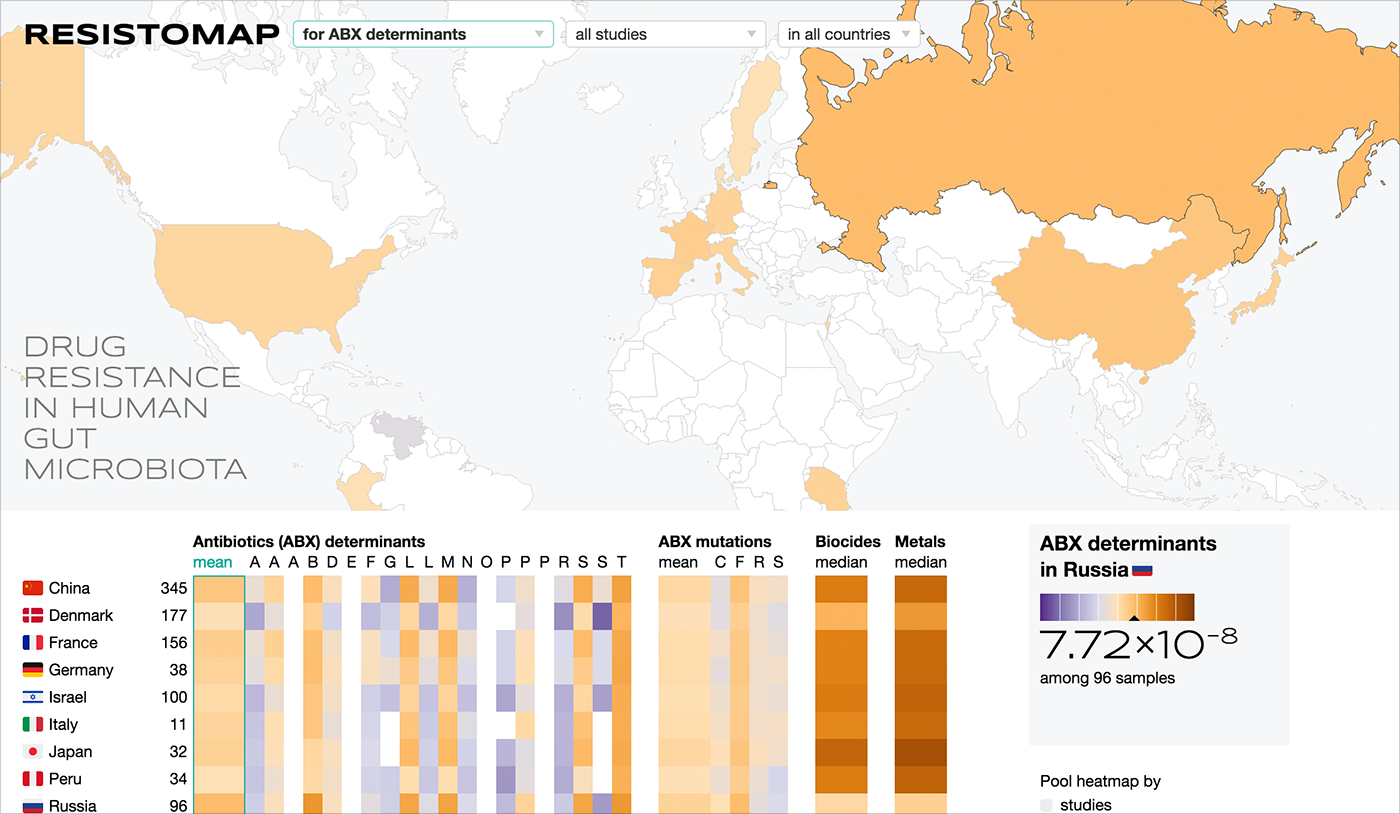

Resistance map for the Research Institute of FHM

Several similar schedules:

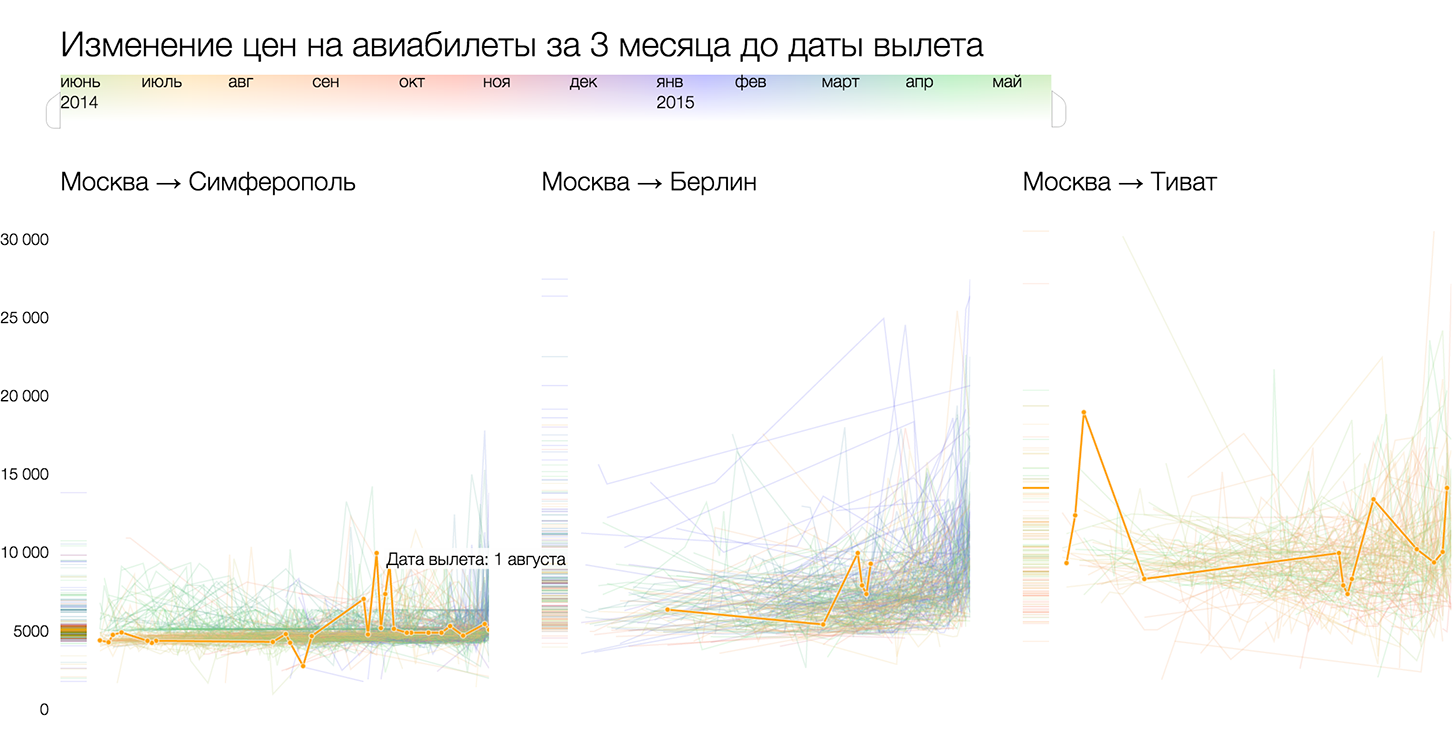

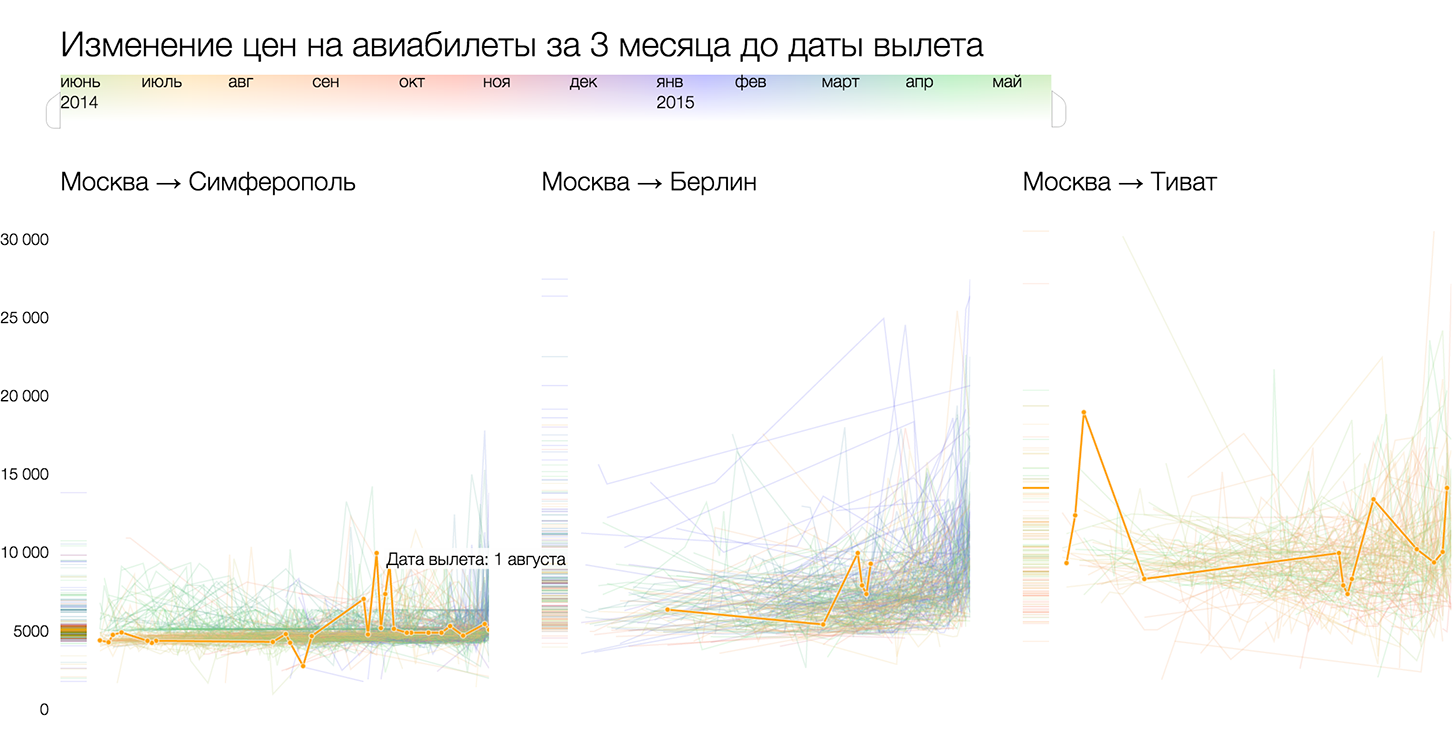

Analysis of airfare for Tutu.ru

So, this is how I see the process of creating visualizations from start to finish.

1. Go from tables and slices to data reality.

2. Find the particle or particles of data from which the mass of data is built.

3. Select visual atoms for the embodiment of data particles. Visual atoms are selected in such a way as to fully and clearly reveal the properties of a data particle. The closer the visual incarnation is to the physical meaning of the attribute, the better.

4. On the screen, the mass of data is expressed by the visual mass. It happens that in the visual mass individual atoms are distinguishable, in other cases they are averaged and added.

5. In addition to the mass of data, in the reality of data there is a set of dimensions in which the data lives.

6.These measurements on the screen collapse into a flat frame. The framework systematize visual atoms, gives the mass of data "rigidity" and reveals it from a certain side.

7. Visualization is supplemented by an interface for managing a mass of data (for example, sampling and searching) and a wireframe (for example, setting axes).

The Δλ algorithm is still in the process of being constantly updated, supplemented and improved. I tried to present it succinctly and consistently, but the scope of one habrapost for this is cramped, and much remained behind the scenes. I will be glad to comment, I will be happy to explain incomprehensible moments and answer questions.

To get acquainted with the first-hand algorithm and learn how to use it, come to a course on data visualizationwhich I will spend in Moscow on October 8 and 9. On the course, in addition to the algorithm, participants get acquainted with D3.js - a powerful tool for implementing non-standard data visualization solutions.

For the past six months, I have been working on a data visualization algorithm that systematizes this experience. My goal is to give a recipe that will allow you to sort out any data and solve data visualization tasks as clearly and consistently as mathematical problems. In mathematics, it does not matter whether to stack apples or rubles, to distribute rabbits in boxes or budgets for advertising campaigns - there are standard operations of addition, subtraction, division, etc. I want to create a universal algorithm that will help visualize any data, while taking into account their meaning and uniqueness.

I want to share the results of my research with readers of Habr.

Formulation of the problem

The purpose of the algorithm: to visualize a specific data set with maximum benefit for the viewer. The initial data collection remains behind the scenes; we always have data at the input. If there is no data, then there is no task to visualize the data.

Data reality

Typically, data is stored in tables and databases that combine multiple tables. All tables look the same, like pie / bar charts based on them. All data is unique, they are endowed with meaning, subordinate to the internal hierarchy, riddled with connections, contain patterns and anomalies. The tables show the slices and layers of the complete holistic picture that is behind the data - I call it the reality of the data.

Data reality is a collection of processes and objects that generate data. My recipe for high-quality visualization: to transfer the reality of data to an interactive web page with minimal losses (unavoidable due to media limitations), build upon visualization from the full picture, and not from a set of slices and layers. Therefore, the first step of the algorithm is to imagine and describe the reality of the data.

Description example:

Buses carry passengers on public transport routes. The route consists of stops; several flights are performed on the route per day. The route schedule for each flight is set by the time of arrival at the stop. At each moment, the coordinates, speed and number of passengers on board, as well as which flight on which route it performs, and which driver is driving, are known about each “car”.

The data we start with is just a starting point. After meeting them, we present the reality that generated them, where there is much more data. In the reality of data, without regard to the initial set, we select data from which we could make the most complete and useful visualization for the viewer.

Part of the data of the ideal set will be unavailable, therefore we “extract” - we search in open sources or rely on those that can be “extracted” and work with them.

Data Mass and Wireframe

My biggest discovery and the central idea of the Δλ algorithm in dividing visualization into a mass of data and a wireframe. The frame is rigid, it consists of axes, guides, areas. The framework organizes the space of a blank screen, it transfers the data structure and does not depend on specific values. A mass of data is a concentrate of information; it consists of elementary particles of data. Due to this, it is plastic and “sticks” to any given frame. A mass of data without a framework is a shapeless pile, a framework without a mass of data is a bare skeleton.

In the example of the Moscow marathon , the elementary particle of data is a runner, the mass is a crowd of runners. The framework of the main visualization is a map with a race route and a temporary slider.

The same mass on the frame formed by the time axis gives a diagram of the finishes:

This is an important feature of visualization, as it serves as a starting point for finding ideas. A data mass consists of data particles that are easy to see and highlight in the reality of the data.

Data Particles and Visual Atoms

An elementary data particle is an entity large enough to possess the characteristic properties of the data, and at the same time small enough so that all data can be disassembled into particles and reassembled, in the same or another order.

Search for an elementary particle of data on the example of the city budget:

Поиск элементарной частицы начинайте снизу вверх: ищите разные потенциальные частицы и примеряйте их к данным. «Деньги?» — хорошее начало, единица измерения бюджета, рубль, но слишком универсальная. Подойдёт, если не найдём чего-то более характерного для городского бюджет. «Мероприятия» не подходят, потому что не все бюджетные траты связаны с мероприятиями, есть и другие расходы, а элементарная частица должна описать всю массу данных. «Учреждения?» — с одной стороны, да, все бюджетные деньги можно разбить на отчисления тому или иному бюджетному учреждению. С другой стороны, это уже слишком крупная единица, ведь внутри учреждения может быть несколько транзакций, в том числе периодических. Если мы возьмём учреждение в качестве элементарной частицы, то будем оперировать только общим бюджетом этого учреждение и потеряем временной срез, а также возможный срез по целевому назначению средств.

In my reasoning, an elementary particle has already flashed several times - deduction, a one-time transfer of budget funds in a certain amount (the same rubles) to a certain organization for certain purposes (for example, events), tied to time. The deductions are periodic and irregular, the goal may consist of several levels of hierarchy: for an event → for organizing a concert → performer’s fee. The deductions consist of the entire expenditure item of the city budget, while the deductions can be added together, compared, and track the dynamics. If you need to visualize the budget income, use the twin particle - income. From the proceeds, you can get a picture of the formation of the city budget in the same way as from the deductions - a picture of its use.

Start from the bottom (with units of measure), try on the role of a data particle ever larger entities and argue why this or that entity is suitable or not suitable. In reasoning, new entities and allusions to a particle of data will certainly appear. For the found particle, be sure to select the appropriate word or term, it is easier to think about it in the future and solve the problem.

After answering the question of what is an elementary piece of data, think about how to best show it. An elementary particle of data is an atom, and its visual embodiment must be atomic. The main visual atoms: pixel, dot, circle, dash, square, cell, object, rectangle, line, line and mini-graph, as well as cartographic atoms - points, objects, regions and routes. The better the visual conveys the properties of the data particle, the more visual the resulting visualization will be.

In the example of the city budget, the deduction has two key parameters - the amount (quantitative) and the purpose - qualitative. A rectangular atom with a unit width is well suited for these parameters: the height of the column encodes the amount, and its color represents the purpose of the payment. The result is a picture similar to oursvisualization of the personal budget :

One and the same particle on different wireframes: along the time axis, by categories or in the axes the time of day / day of the week.

Here are other examples of data particles and their corresponding visual atoms.

Earthquake in the history of earthquakes , visual atom - map point:

Dollar and its degrees on a logarithmic maniogram , atom - pixel:

Soldier and civilian in loss visualization "Fallen.io" , atom - object - image of a man with a gun and without:

Flag on the visualization devoted to flags , atom - object - flag image:

Hour of the day (activity or sleep) on the diagram about the rhythm of cities, atom - a cell with two-color coding:

Attempting to answer a question in the statistics of the traffic simulator , visual atom - a cell with a traffic light gradient:

Company on the diagram of the scatter of tax rates , atom - circle:

Goal and goal moment in football analytics , atom - segment - trajectory of impact on the football field:

Candidate for visualization of the Huntflow hiring process , atom - line of unit thickness - path of the candidate for the funnel:

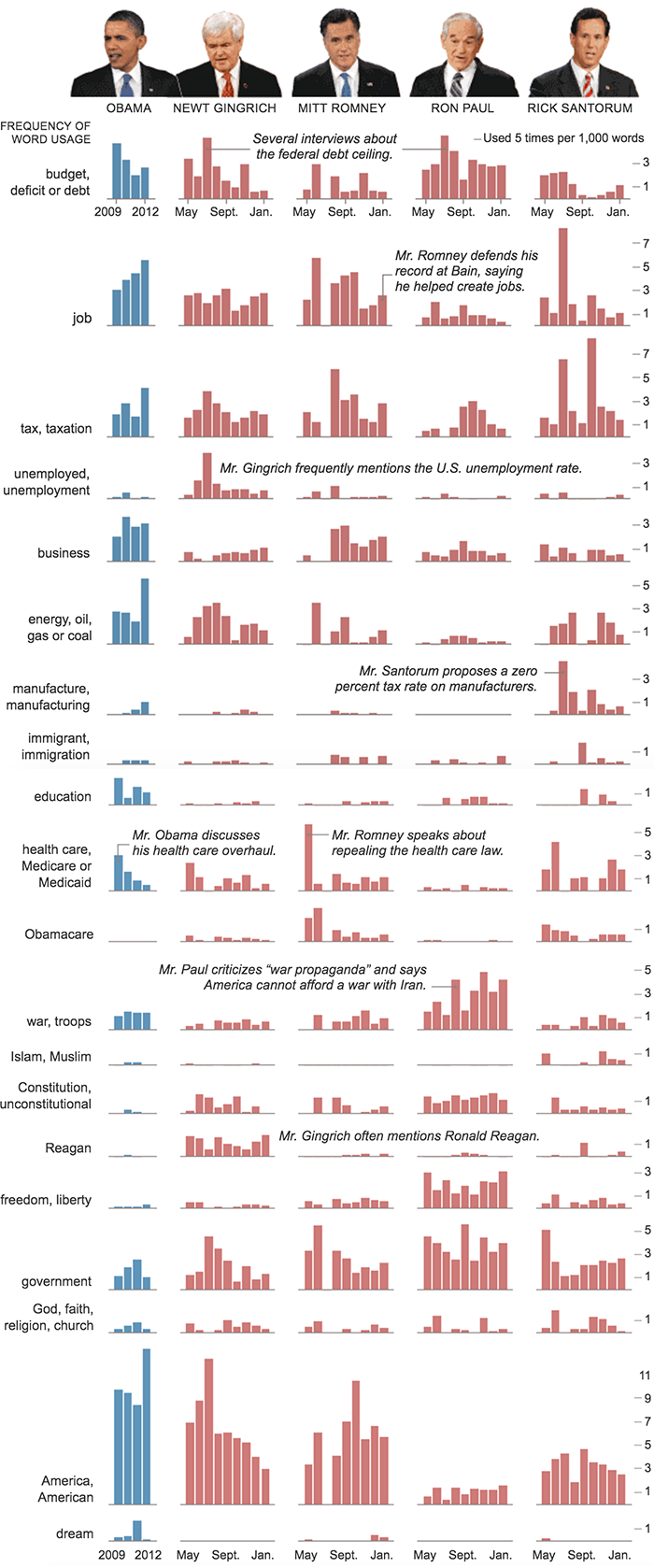

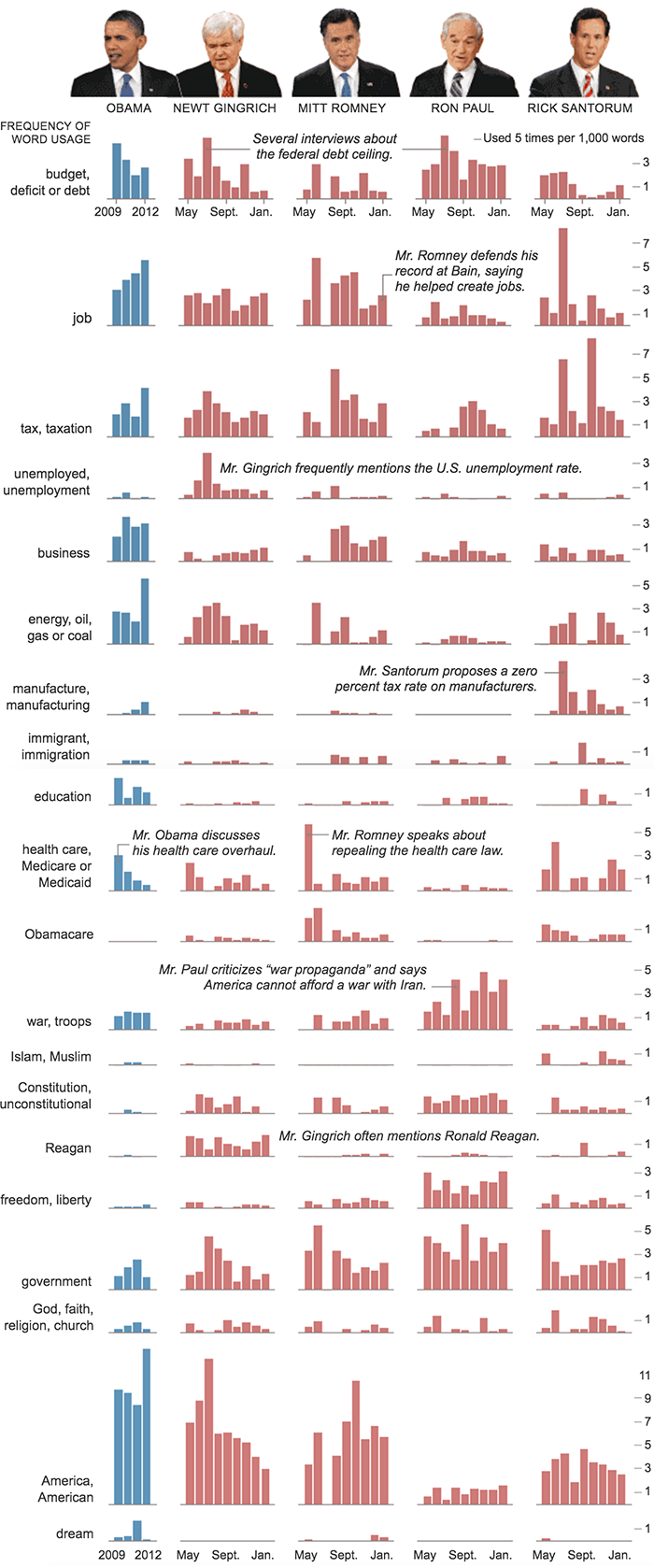

State that changes its political mood , atom - line with thickness: Line

segments between stations on Swiss routes their trains, atom - cartographic line with a thickness:

Employment dynamicsin various industries of Minnesota residents, the atom is a mini-graph:

Read more about visual atoms and their properties here .

Frame and axis

Interactive visualization lives in two dimensions of the screen plane. It is these two dimensions that give the mass of data "rigidity", systematize visual atoms and serve as a visualization framework. How these two dimensions are used depends on how interesting, informative and useful the visualization will turn out.

On a good visualization, each dimension corresponds to an axis that expresses a significant data parameter. I divide axes into continuous (including the axis of space and time), interval, layered and degenerate. We learn about

continuous axes in school when we build parabolas:

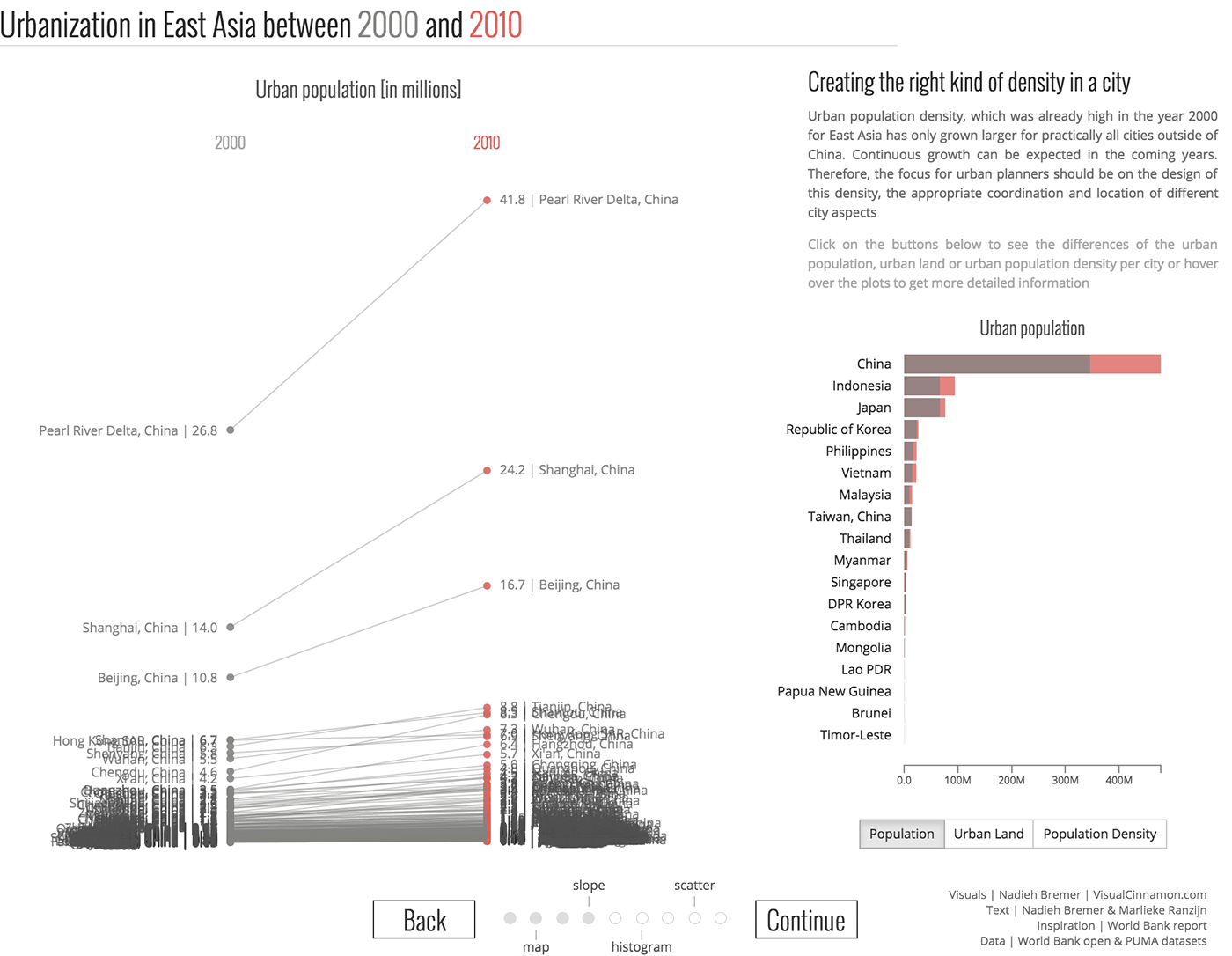

In general, a graph usually means just such a frame - of two continuous axes. Often, a graph shows the dependence of one quantity on another, in this case, according to tradition, the independent quantity is laid out horizontally, and the supposedly dependent one is laid out vertically: Graph of two continuous axes with point objects: Sometimes average values are marked on the axes, and the graph is divided into meaningful quadrants ( "expensive player score", "cheap productive", etc...) also, the chart can be performed rays they show the relationship between the parameters laid on axes, which itself may be considerable th parameter (in this case, the competition in the industry): For visual display with a large spread parameter values used axis with logarithmic scale:

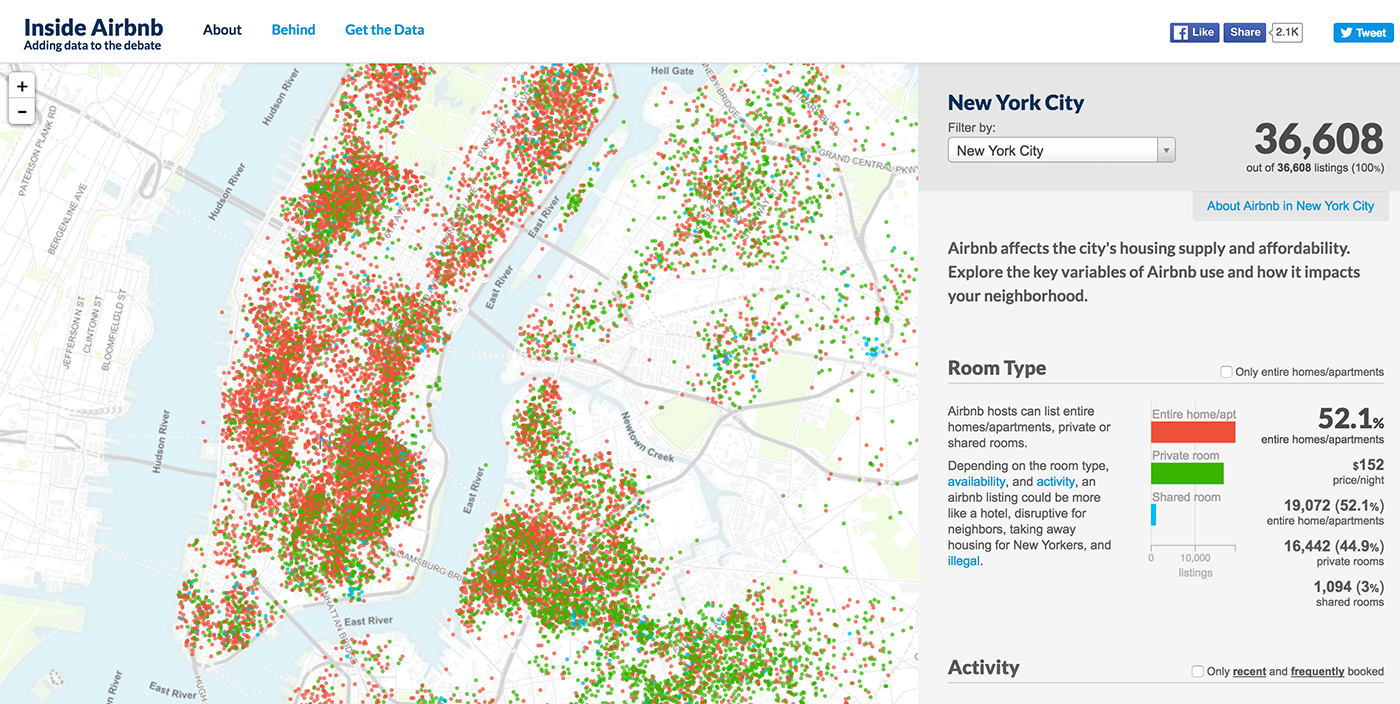

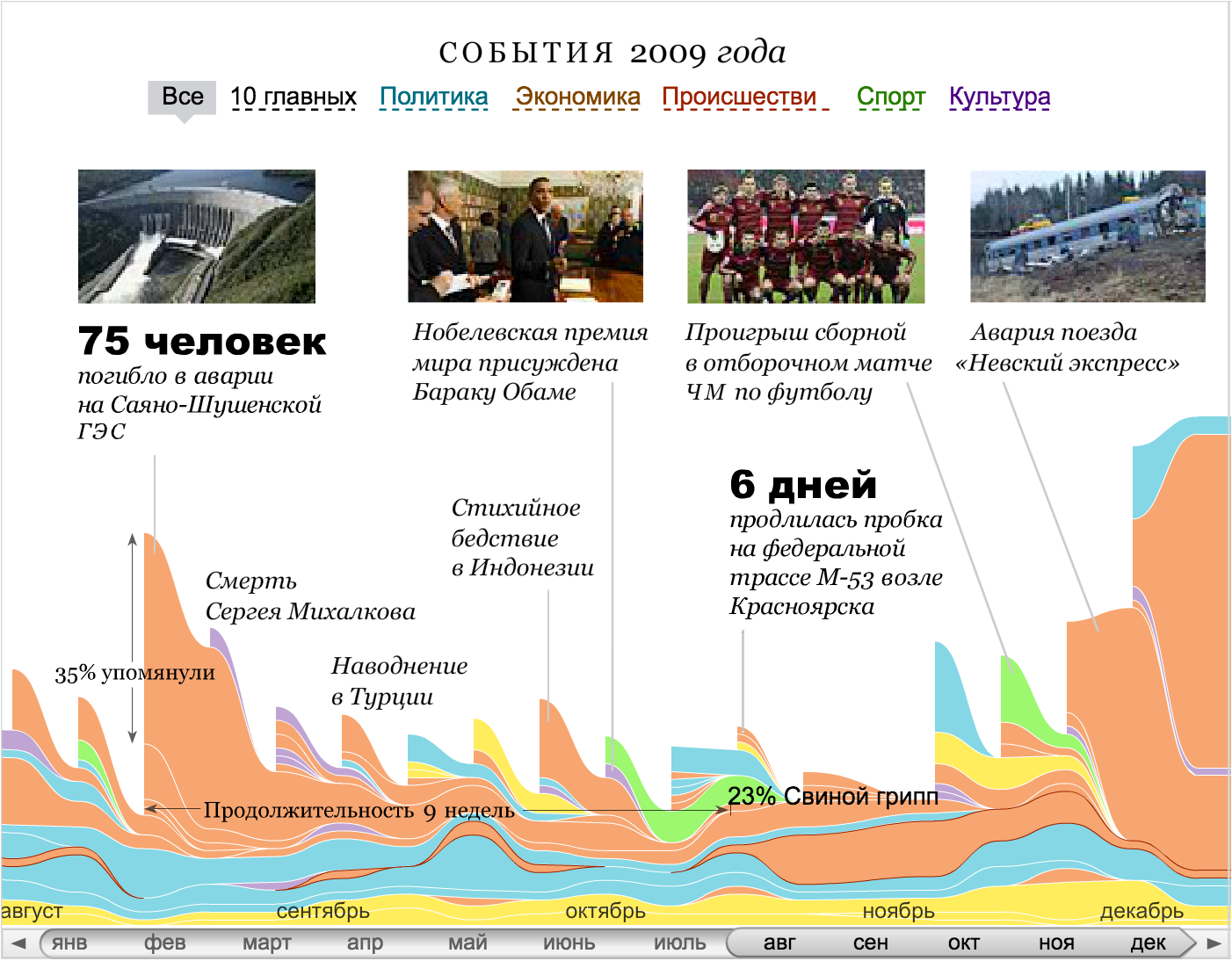

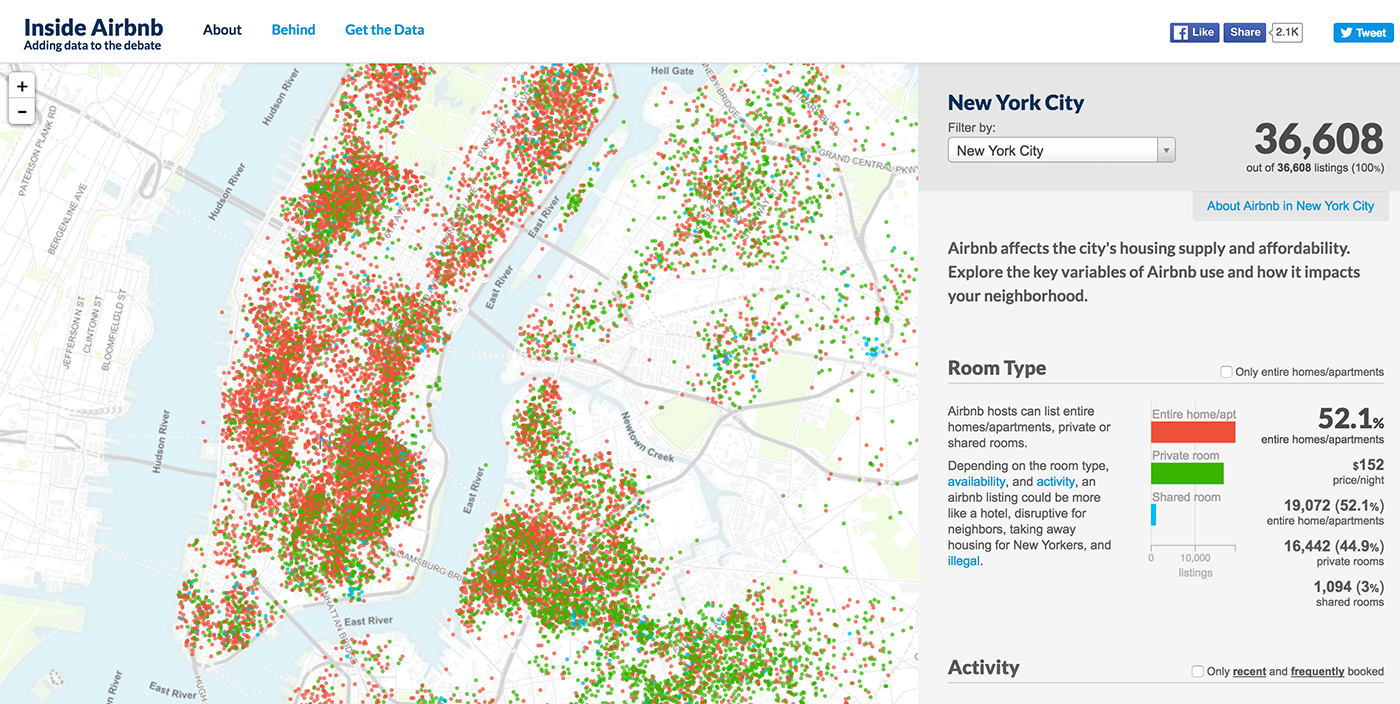

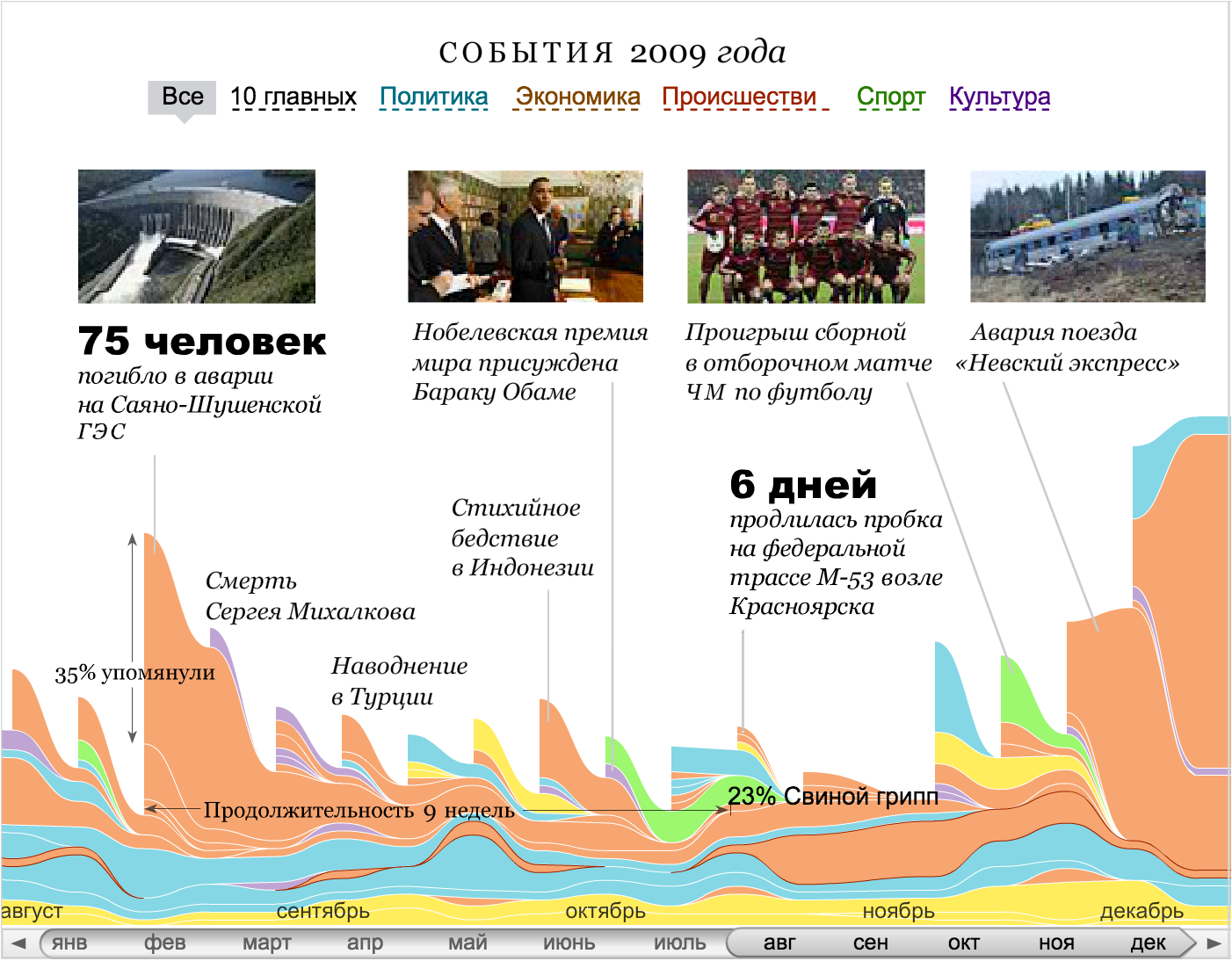

An important special case of continuous axes is the axis of space and time , for example, a geographical coordinate or a time line. A map, a view of a soccer field or basketball court, a production chart are examples of a combination of two spatial axes. Graphs with a time axis are the first abstract graphs that laid the foundation for data visualization: And they are still successfully applied: Another way to show a temporary dimension is to add a spatial picture to the slider: In exceptional cases, space and time can be combined on a flat map or along one spatial axis: spacing axis

It is divided into segments (equal or unequal), which sets the parameter value according to certain rules. The interval axis is suitable for both qualitative and quantitative parameters.

A hitmep is a classic example of a combination of two spacing axes. For example, the number of cases by state and year shown on the corresponding frame: The bar graph is an example of a combination of the interval (time interval) and continuous (value) axis: Two interval axes do not have to turn into a hit chart: A layered axis lays several parallel axes (continuous and interval) in one dimension. Most often, this technique works with timelans, when layers with data, texts and graphics are superimposed on one time axis:

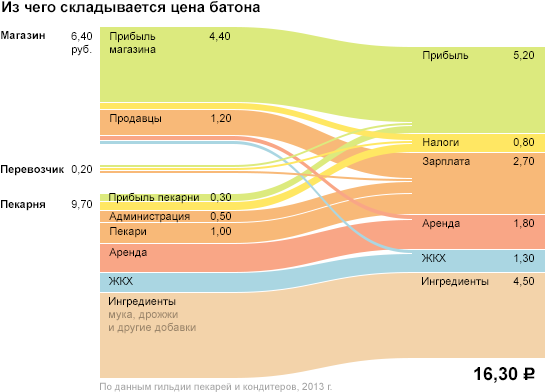

Sometimes visualization requires a degenerate axis, to which a specific parameter is not attached, or on which only two values are shown. Most often this happens when the visualization illustrates the connection - to show the connection, you need space between objects.

The “was-it-become” format data most often requires a degenerate axis: But it does not necessarily “eat up” the screen dimension: A degenerate axis is permissible if it exhibits important data features and thus “pays for” the loss of the whole screen dimension. But you should use it only as a last resort. Unfortunately, in spectacular popular infographic formats, one or even two screen axes are often degenerate. Another way to use screen space is to fill it with consecutive blocks.

uniform mesh . The objects inside the grid are ordered linearly, for example, alphabetically: Or according to the size of the city: The grid adjusts to the size of the screen and does not have pronounced horizontal and vertical guides. In most cases, the visualization framework consists of the axes listed above. A rare exception is three-dimensional visualizations, even more rare are their successful examples: In those cases when the choice of the frame is not obvious, I combine the axes with important parameters in a more or less random way, formulate what question this or that combination of axes answers, I choose the most successful combinations. Interesting pictures are obtained at the junction of supposedly dependent data parameters: The behavior of countries on the Gepminder analytical charts

And from the distribution of data particles along different axes:

The results of Formula 1 pilots by time, number of races and age of pilots

In interactive combinations of simple frames, truly powerful visualizations are born. Timeline + map:

Cash turnover in the Russian Federation

Map + hitmap:

Resistance map for the Research Institute of FHM

Several similar schedules:

Analysis of airfare for Tutu.ru

Summary

So, this is how I see the process of creating visualizations from start to finish.

1. Go from tables and slices to data reality.

2. Find the particle or particles of data from which the mass of data is built.

3. Select visual atoms for the embodiment of data particles. Visual atoms are selected in such a way as to fully and clearly reveal the properties of a data particle. The closer the visual incarnation is to the physical meaning of the attribute, the better.

4. On the screen, the mass of data is expressed by the visual mass. It happens that in the visual mass individual atoms are distinguishable, in other cases they are averaged and added.

5. In addition to the mass of data, in the reality of data there is a set of dimensions in which the data lives.

6.These measurements on the screen collapse into a flat frame. The framework systematize visual atoms, gives the mass of data "rigidity" and reveals it from a certain side.

7. Visualization is supplemented by an interface for managing a mass of data (for example, sampling and searching) and a wireframe (for example, setting axes).

The Δλ algorithm is still in the process of being constantly updated, supplemented and improved. I tried to present it succinctly and consistently, but the scope of one habrapost for this is cramped, and much remained behind the scenes. I will be glad to comment, I will be happy to explain incomprehensible moments and answer questions.

To get acquainted with the first-hand algorithm and learn how to use it, come to a course on data visualizationwhich I will spend in Moscow on October 8 and 9. On the course, in addition to the algorithm, participants get acquainted with D3.js - a powerful tool for implementing non-standard data visualization solutions.