On relative luminance, or how tenacious legacy can be

I am sure that many programmers are familiar with this formula:

Y = 0.299 R + 0.587 G + 0.114 B

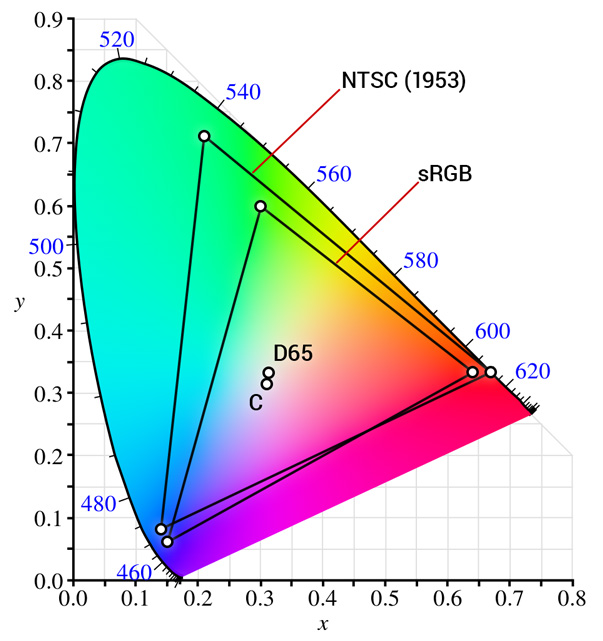

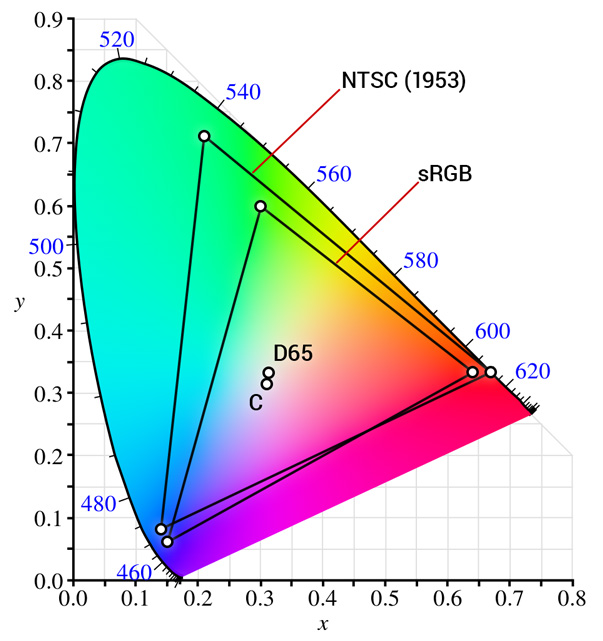

Anyone who has worked closely with graphics knows these numbers by heart, the way office IT support staff once knew the Windows serial key. Sometimes the coefficients are rounded to two decimal places, sometimes refined to four, but this is the canonical form.

It computes the relative luminance of a color (or luma, in some contexts; not to be confused with lightness or brightness) and is widely used to convert RGB color images to grayscale and for related tasks.

The formula is replicated and cited in thousands of articles, forum discussions and Stack Overflow answers. But its only rightful place is the dustbin of history. It should not be used. And yet people use it.

Why not, exactly? And where did these coefficients come from?

A brief digression into history

There is an international organization that develops recommendations (de facto standards) for television and radio communications: the ITU.

The parameters we are interested in are specified in Recommendation ITU-R BT.601, adopted in 1982 (the link leads to the updated edition). One may already be slightly surprised at this point: 1982 is a long way from where we are now. But this is only the beginning.

The numbers migrated there from Recommendation ITU-R BT.470 of 1970 (again, the link points to the updated version).

Those, in turn, are inherited from the YIQ color model, developed for the North American NTSC broadcasting system in 1953! It has absolutely nothing to do with today's computers and gadgets.

Does this remind anyone of the tale linking spacecraft dimensions to the width of an ancient Roman horse's backside?

Modern colorimetric parameters began to take shape in 1970 with the modernization of the PAL/SECAM systems. Around the same time, the Americans came up with their SMPTE-C specification for similar phosphors, but NTSC switched to it only in 1987. I don't know for sure, but I suspect this delay is the very reason the notorious Rec. 601 was born the way it was: by and large, it was already obsolete by the time it appeared.

Then, in 1990, came the new Recommendation ITU-R BT.709, and in 1996 the sRGB standard was created; it conquered the world and still reigns in the consumer sector. Alternatives exist, but they are in demand only in highly specialized areas. Twenty years have passed, no more, no less: isn't it time to get rid of these atavisms for good?

So what exactly is the problem?

One might think that these coefficients reflect some fundamental property of human vision and therefore never expire. That is not quite so: among other things, the coefficients are tied to a particular color reproduction technology.

Any RGB space (and YIQ is a transform built on top of an RGB model) is defined by three basic parameters:

1. The chromaticity coordinates of the three primary colors (the primaries);

2. The chromaticity coordinates of the white point (reference white);

3. Gamma correction.

Chromaticity coordinates are usually given in the CIE xyY system. Letter case matters here: lowercase x and y are coordinates on the chromaticity diagram (the well-known “horseshoe”), while capital Y is the luminance component of the CIE XYZ vector.

Now let's look at the Y component of the NTSC primaries (highlighted in pink):

* The original table, covering many other spaces, is on Bruce Lindbloom's website.

Familiar numbers, right? That answers the question of where they came from.
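The link between primaries, white point and these weights can be checked numerically. Below is a minimal sketch in standard-library Python. It derives the Y row of the RGB-to-XYZ matrix from the xy chromaticities of the primaries and the white point, with white normalized to Y = 1. The function names are hypothetical. The chromaticities are the standard values for NTSC 1953 (illuminant C) and sRGB (D65). Under these assumptions, the NTSC weights should come out close to 0.299/0.587/0.114 and the sRGB weights close to 0.2126/0.7152/0.0722. The last digits depend on the exact white-point coordinates used, so they may differ slightly from the table.

```python
# Sketch: derive the luminance (Y) weights of an RGB space from the
# xy chromaticities of its primaries and its white point.


def xy_to_xyz(x, y):
    """Turn a chromaticity (x, y) into an XYZ triple with Y = 1."""
    return (x / y, 1.0, (1.0 - x - y) / y)


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def luminance_weights(red, green, blue, white):
    """Return (wR, wG, wB), i.e. the Y row of the RGB -> XYZ matrix.

    Columns of P are the primaries' XYZ (each with Y = 1). We solve
    P @ S = W so that R = G = B = 1 lands on the white point; since every
    column has Y = 1, the Y weights are exactly the scale factors S.
    """
    cols = [xy_to_xyz(*red), xy_to_xyz(*green), xy_to_xyz(*blue)]
    p = [[cols[j][i] for j in range(3)] for i in range(3)]  # rows: X, Y, Z
    w = xy_to_xyz(*white)
    d = det3(p)
    weights = []
    for k in range(3):  # Cramer's rule: swap column k for W
        m = [row[:] for row in p]
        for i in range(3):
            m[i][k] = w[i]
        weights.append(det3(m) / d)
    return tuple(weights)


# NTSC 1953 primaries with CIE illuminant C
NTSC = luminance_weights((0.67, 0.33), (0.21, 0.71), (0.14, 0.08), (0.3101, 0.3162))
# sRGB (same primaries as BT.709) with D65
SRGB = luminance_weights((0.64, 0.33), (0.30, 0.60), (0.15, 0.06), (0.3127, 0.3290))

print("NTSC:", [round(v, 4) for v in NTSC])
print("sRGB:", [round(v, 4) for v in SRGB])
```

The three weights always sum to 1 here, because the Y row of P is all ones and the white point has Y = 1.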

The problem is that the sRGB space used today differs significantly from that 60-year-old system. It is not even a matter of which is better or worse; they are simply different.

The sRGB triangle is narrower and shifted to the side. The white point is different, too. By the way, illuminant C has long been deprecated in favor of the D-series illuminants in general and the most popular of them, D65, in particular. Since the color gamut is different, luminance computed with the old coefficients does not match reality.
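To see how large the mismatch is, here is a small Python sketch. It applies both weight sets to a few pure colors. The constant and function names are illustrative only. Component values are used as-is, without gamma decoding, as in most copy-pasted snippets.

```python
# Sketch: compare the legacy and sRGB weights on a few pure colors.
# Components are 0..1 values used as-is (no gamma decoding).

LEGACY_601 = (0.299, 0.587, 0.114)   # NTSC-era weights (Rec. 601)
SRGB_709 = (0.2126, 0.7152, 0.0722)  # sRGB / Rec. 709 weights


def weighted_sum(rgb, weights):
    """Dot product of an (R, G, B) triple with a weight triple."""
    return sum(c * w for c, w in zip(rgb, weights))


SAMPLES = {
    "red": (1.0, 0.0, 0.0),
    "green": (0.0, 1.0, 0.0),
    "blue": (0.0, 0.0, 1.0),
    "yellow": (1.0, 1.0, 0.0),
}

for name, rgb in SAMPLES.items():
    old = weighted_sum(rgb, LEGACY_601)
    new = weighted_sum(rgb, SRGB_709)
    print(f"{name:6} legacy={old:.4f} sRGB={new:.4f} diff={old - new:+.4f}")
```

For pure blue, the legacy weights give 0.114 versus 0.0722, so blue is rated more than one and a half times as bright as sRGB says. Pure green, on the other hand, is underestimated (0.587 versus 0.7152).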

You may ask: why is the ancient NTSC gamut (almost the same as Adobe RGB (1998)!) so much larger than modern sRGB? I don't know. The picture tubes of that time obviously could not cover it. Perhaps they wanted to lay a foundation for the future?

How to do it right?

The relative luminance values of the sRGB primaries are shown in the table above (highlighted in green), and those are the ones to use. In practice they are usually rounded to four decimal places:

Y = 0.2126 R + 0.7152 G + 0.0722 B

An attentive reader will notice that the R coefficient is not rounded by the usual rules (it is rounded down), but this is not a mistake. The three coefficients must sum to exactly one, and “correct” rounding would break that. Pedants can take all six decimal places and not worry.
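The rounding argument is easy to verify with exact decimal arithmetic. This sketch assumes the six-decimal sRGB weights 0.212656/0.715158/0.072186, the values commonly quoted for sRGB; treat them as an assumption here. It compares ordinary half-up rounding to four places with the published form.

```python
from decimal import Decimal, ROUND_HALF_UP

# Six-decimal sRGB weights (treated here as given; they sum to exactly 1)
full = [Decimal("0.212656"), Decimal("0.715158"), Decimal("0.072186")]

# Conventional half-up rounding to four decimal places
naive = [w.quantize(Decimal("0.0001"), rounding=ROUND_HALF_UP) for w in full]

# The commonly published form: R rounded down instead of up
published = [Decimal("0.2126"), Decimal("0.7152"), Decimal("0.0722")]

print("six places:", sum(full))                                # 1.000000
print("naive:", [str(w) for w in naive], "sum =", sum(naive))  # sum = 1.0001
print("published sum:", sum(published))                        # 1.0000
```

Half-up rounding turns 0.212656 into 0.2127, and the sum overshoots to 1.0001. Rounding R down instead keeps pure white at exactly Y = 1.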

This formula is sufficient for 99% of typical cases. The W3C uses it in its specifications (for example, in SVG matrix filters).

If you need more accuracy, you will have to compute L*, but that is a big separate topic. A good Stack Overflow answer provides starting points for further reading.
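As a pointer only, here is a minimal Python sketch of the CIE 1976 definition of lightness L* computed from relative luminance. It assumes Y is already linear (gamma-decoded) and normalized so that white is 1. The function name is hypothetical.

```python
def lightness_from_luminance(y):
    """CIE 1976 L* (0..100) from relative luminance Y (0..1, white = 1).

    Y must already be linear, i.e. gamma-decoded.
    """
    delta = 6 / 29
    if y > delta ** 3:            # cube-root segment
        f = y ** (1 / 3)
    else:                         # linear segment near black
        f = y / (3 * delta ** 2) + 4 / 29
    return 116 * f - 16


for y in (0.0, 0.18, 0.5, 1.0):
    print(f"Y={y:.2f} -> L*={lightness_from_luminance(y):.1f}")
```

The mapping is strongly nonlinear. A luminance of 0.18 already gives an L* of about 49.5 (perceptual mid-gray), and Y = 0.5 gives roughly 76.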

If your image is in a different color space (Adobe RGB, ProPhoto RGB, etc.), the coefficients will be different; you can find them in the Bruce Lindbloom table mentioned above.

Why does it bother me?

As mentioned above, over the years the formula has been replicated on a myriad of sites, and they rank at the top of every search engine (for example). Serious sources often cite both formulas but fail to distinguish between them properly, presenting them as equal alternatives. A typical example on Stack Overflow is “Formula to determine brightness of RGB color”: the answers are quite detailed, but it is hard for someone new to the subject to make an informed choice.

In fairness, serious projects rarely suffer from such errors: their authors take the trouble to check the standards, and user feedback works (although not without exceptions). But an ordinary programmer who needs to solve a problem quickly types something like “rgb to grayscale” into a search engine and gets handed you-know-what. The formula keeps being found and copy-pasted to this day! Phenomenal survivability.

I spent about 20 minutes searching for these examples:

- Microsoft documentation

- Matlab documentation

- Go standard library

- trendy color pickers for React (> 2k stars)

- Chart.js (> 23k stars!)

- CImg library for image processing

- some modules in OpenCV (apparently minor ones)

- a collection of WebGL demos

- something inside GIMP and the Inkscape utility

- someone's small projects in Swift, Go, Three.js, JS

Note that alongside old projects, the list includes plenty of the newest technologies, which means the code was written (or copied) recently.

What prompted this note was a slide from Vasilika Klimova's talk at HolyJS 2016 showing the same prehistoric formula. Of course, the formula had no bearing on the main point of the talk, but it clearly demonstrated how likely you were to stumble upon it while googling in 2016.

To sum up: if you see the sequence 299/587/114 in someone's current code, send the author a link to this note.

update 1

Commenters keep asking for examples. But this is not as simple as it seems.

If you take an arbitrary picture and convert it to black and white in two ways, that proves nothing at all. The pictures will just be slightly different, and the viewer can only judge which one looks subjectively prettier. But that is not the point! The question is which option is more correct, that is, more accurate.

After a little thought, I sketched the following: codepen.io/dom1n1k/pen/LZWjbj

The script generates two sets of 100 random colors, choosing the components so that all the tiles theoretically have the same luminance (Y = 0.5). That is, each field should be perceived as uniformly as possible (uniform specifically in brightness, disregarding the different hues).
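The demo's own source lives on CodePen. The Python sketch below shows one possible way to pick such colors, not necessarily the author's. It draws R and G at random, solves the luminance equation for B, and rejects draws where B falls outside 0..1. Like the first demo, it treats component values as-is, without gamma. All names, including the fixed seed, are hypothetical.

```python
import random

LEGACY_601 = (0.299, 0.587, 0.114)
SRGB_709 = (0.2126, 0.7152, 0.0722)


def random_color_with_luminance(weights, rng, target=0.5):
    """Random (R, G, B) in 0..1 whose weighted sum equals target.

    R and G are drawn uniformly; B is solved from the luminance equation.
    Draws where B falls outside 0..1 are rejected and retried.
    """
    w_r, w_g, w_b = weights
    while True:
        r, g = rng.random(), rng.random()
        b = (target - w_r * r - w_g * g) / w_b
        if 0.0 <= b <= 1.0:
            return (r, g, b)


def to_css(rgb):
    """Format as a CSS rgb() string (8-bit rounding shifts Y slightly)."""
    return "rgb({}, {}, {})".format(*(round(c * 255) for c in rgb))


rng = random.Random(2016)  # fixed seed so the field is reproducible
left = [random_color_with_luminance(LEGACY_601, rng) for _ in range(100)]
right = [random_color_with_luminance(SRGB_709, rng) for _ in range(100)]
print(to_css(left[0]), to_css(right[0]))
```

Rejection sampling keeps the code trivial. Mid-gray (0.5, 0.5, 0.5) satisfies the equation for any weights that sum to 1, so a valid color always exists and the loop terminates.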

On the left is the old “wrong” formula, on the right the new “correct” one. The right side is indeed noticeably more uniform. It is not perfect, of course: for greater accuracy, the perceptual lightness L* would have to be computed.

update 2.1

Another question came up about gamma. At least three people have raised it already, so I will address it in an update as well. The question is actually not simple and even partly philosophical (it could easily fill a separate article).

Strictly speaking, yes: to convert an image to black and white, the gamma encoding should be decoded first. But in practice (in tasks that do not involve accurate colorimetry) this step is often skipped for simplicity and performance. For example, Photoshop accounts for gamma when converting to grayscale, but the CSS filter of the same name (MDN) does not.
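The difference between the two approaches can be sketched in a few lines of Python. The sketch assumes the standard sRGB transfer curve, with thresholds 0.04045 and 0.0031308, slope 12.92 and exponent 2.4. The function names are hypothetical, and it is not a reproduction of Photoshop's actual algorithm. The "naive" path applies the weights directly to gamma-encoded values, the shortcut the text attributes to the CSS filter. The "linear" path decodes gamma, weights in linear light, then re-encodes.

```python
def srgb_to_linear(c):
    """Decode one sRGB component (0..1) to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Encode a linear-light value (0..1) back onto the sRGB curve."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055


WEIGHTS = (0.2126, 0.7152, 0.0722)


def gray_naive(rgb):
    """Weights applied directly to gamma-encoded values (the shortcut)."""
    return sum(w * c for w, c in zip(WEIGHTS, rgb))


def gray_linear(rgb):
    """Decode gamma, weight in linear light, re-encode for display."""
    y = sum(w * srgb_to_linear(c) for w, c in zip(WEIGHTS, rgb))
    return linear_to_srgb(y)


pure_blue = (0.0, 0.0, 1.0)
print(round(gray_naive(pure_blue), 4), round(gray_linear(pure_blue), 4))
```

For pure blue, the naive version yields a gray of 0.0722, while the gamma-aware version yields roughly 0.3 once re-encoded for display. That is a substantial visible difference, independent of which weights are chosen.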

As far as the correctness of the result is concerned, the choice of weights and gamma decoding are complementary: both affect it. The only difference is that gamma requires additional computation, while the correct coefficients come for free.

Here is the second version of the demo, which takes gamma into account (the first one is still available): codepen.io/dom1n1k/pen/PzpEQX

The result is, of course, more accurate.