Downloading tracks from Autotravel.ru

Like many travel lovers, I look up the coordinates of city sights on autotravel.ru (hereinafter "the site"). For my own needs I wrote a small utility that downloads the files with attractions so they can be uploaded to a navigator. The program is extremely simple, but it does exactly what I needed. It also implements the simplest way to save loading time and traffic: caching.

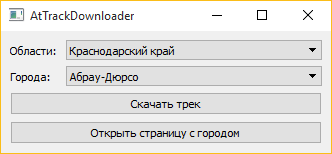

The program, which I called AtTrackDownloader, is written in Python 3 using Beautiful Soup, a library for parsing HTML files. PyQt is used for the GUI, simply because I am familiar with Qt.

The core of the program is the autotravel class. There is no point in describing all of its methods in full (especially since a link to the git repository is given at the end), so I will only outline the basic logic.

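```python
import urllib.parse
import urllib.request as urllib2  # assumed alias: Python 3 has no urllib2 module

def __load_towns_page(self, letter):
    # AutoTravelHttp is a module-level constant with the site's base URL
    url = AutoTravelHttp + '/towns.php'
    params = {'l': letter.encode('cp1251')}
    # Supplying a request body makes urllib send a POST request
    req = urllib2.Request(url, urllib.parse.urlencode(params).encode('cp1251'))
    response = urllib2.urlopen(req)
    return response.read().decode('utf-8')
```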

The `__load_towns_page` method loads the site page listing the cities whose names start with the given letter. More precisely, that is how it worked a few months ago; since then the site has changed its page addresses, and instead of the letter itself the pages are now addressed by 'a' plus the letter's number. For example: А - 'a01', Б - 'a02', ..., Я - 'a30' (letters of the Russian alphabet).
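The new addressing scheme can be sketched as follows. This is only an illustration, not the code from the repository: the names `page_codes` and `load_all_towns` are hypothetical, and `load_page` and `parse_page` are stand-ins for the class's private page-loading and page-parsing methods.

```python
def page_codes(letter_count=30):
    """Return the page identifiers 'a01' .. 'a30', one per index letter."""
    return [f"a{number:02d}" for number in range(1, letter_count + 1)]


def load_all_towns(load_page, parse_page):
    """Fetch and parse every index page, yielding the parsed records.

    load_page and parse_page are hypothetical callables standing in for
    the class's private page-loading and page-parsing methods.
    """
    for code in page_codes():
        yield from parse_page(load_page(code))


codes = page_codes()
print(codes[0], codes[1], codes[-1])  # prints: a01 a02 a30
```

Passing the loader and parser in as arguments keeps the sketch runnable without network access.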

Accordingly, to load the cities for every letter, this method is called in a loop from the `__load_all_towns` method. The text of each downloaded page is passed to the `__load_towns_from_url` method:

```python
from bs4 import BeautifulSoup

def __load_towns_from_url(self, html_src):
    # The page source is parsed one line at a time
    for line in html_src.splitlines():
        soup = BeautifulSoup(line, 'html.parser')
        area = soup.find('font', {'class': 'travell0'})
        # Town links carry one of two CSS classes
        town = soup.find('a', {'class': 'travell5'})
        if town is None:
            town = soup.find('a', {'class': 'travell5c'})
        if town is None:
            continue
        town_name = town.get_text().strip()
        # Drop the first and last characters surrounding the area name
        area_name = area.get_text().strip()[1:-1]
        town_href = town.get('href')
        yield {'area': area_name, 'town': town_name, 'href': town_href}
```

This method does all the main work of parsing cities. Each parsed city is yielded as a dictionary with the keys 'area' (region or country), 'town' (the name of the city) and 'href' (a short link to the city, relative to the site); the caller collects these dictionaries into a list.
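To show how records of this shape can be consumed, here is a minimal sketch that groups town names by area. The helper `group_by_area` is hypothetical and the sample values are made up; only the three keys come from the description above.

```python
from collections import defaultdict

# Sample records in the shape described above; all values are made up.
towns = [
    {'area': 'Region A', 'town': 'Town 1', 'href': '/town1.php'},
    {'area': 'Region B', 'town': 'Town 2', 'href': '/town2.php'},
    {'area': 'Region A', 'town': 'Town 3', 'href': '/town3.php'},
]


def group_by_area(records):
    """Map each area to the list of its town names, in input order."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record['area']].append(record['town'])
    return dict(grouped)


print(group_by_area(towns))
# prints: {'Region A': ['Town 1', 'Town 3'], 'Region B': ['Town 2']}
```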

Thus, all cities from the site are loaded into memory. It takes 10 seconds on my computer. Naturally, that is too slow, so the idea of caching the loaded cities came up. Python lets you serialize a list in one line, unlike the other languages I know, which is great. The method that saves the cities to the cache file looks like this:

```python
import pickle

def __save_to_cache(self, data):
    # Serialize the whole list of towns into a binary cache file
    with open('attd.cache', 'wb') as f:
        pickle.dump(data, f)
```

Cache loading is performed in the class constructor:

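```python
def __init__(self):
    try:
        # Reuse the cached list of towns if the cache file exists
        with open('attd.cache', 'rb') as f:
            self.__all_towns = pickle.load(f)
    except FileNotFoundError:
        # First run: download every town page, then cache the result
        self.__all_towns = list(self.__load_all_towns())
        self.__save_to_cache(self.__all_towns)
```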

As a result, we get a ten-second delay only at the first start.
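The same load-or-build pattern can be tried in isolation. The sketch below is a standalone illustration rather than the class's code: `load_or_build` and `slow_build` are hypothetical names, and a temporary directory is used so that no real cache file is touched.

```python
import pickle
import tempfile
from pathlib import Path


def load_or_build(cache_path, build):
    """Return the cached object, or build it and cache it on a miss."""
    path = Path(cache_path)
    try:
        with path.open('rb') as f:
            return pickle.load(f)
    except FileNotFoundError:
        data = build()  # the slow step, e.g. downloading every page
        with path.open('wb') as f:
            pickle.dump(data, f)
        return data


calls = []


def slow_build():
    # Stand-in for the ten-second download; records each invocation
    calls.append(1)
    return [{'area': 'Region A', 'town': 'Town 1', 'href': '/town1.php'}]


with tempfile.TemporaryDirectory() as tmp:
    cache_file = Path(tmp) / 'demo.cache'
    first = load_or_build(cache_file, slow_build)   # builds and writes the cache
    second = load_or_build(cache_file, slow_build)  # served from the cache

print(len(calls))  # prints: 1
```

Note that a cache like this never expires: if the site adds cities, the cache file has to be deleted by hand to pick them up.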

Links to the track files are collected by the `get_towns_track_links` method, which takes the address of a city page:

```python
def get_towns_track_links(self, href):
    req = urllib2.Request(href)
    response = urllib2.urlopen(req)
    soup = BeautifulSoup(response.read().decode('utf-8'), 'html.parser')
    # Map each available track format to its absolute download URL
    r = {}
    for link in soup.find_all('a', {'class': 'travell5m'}):
        if link.get_text() == 'GPX':
            r['gpx'] = AutoTravelHttp + link.get('href')
        elif link.get_text() == 'KML':
            r['kml'] = AutoTravelHttp + link.get('href')
        elif link.get_text() == 'WPT':
            r['wpt'] = AutoTravelHttp + link.get('href')
    return r
```

Since a city page may, in theory, lack one of the track formats (and this has happened before), the available track types and the links to them are stored in a dictionary. That dictionary is then used to filter files in the file save dialog.
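One way such a dictionary could drive the dialog is by turning its keys into a Qt name filter string, where entries are separated by `;;`. This is a sketch under that assumption; the helper `build_save_filter` is hypothetical and the actual GUI code in the repository may do it differently. The URLs below are placeholders.

```python
def build_save_filter(track_links):
    """Build a Qt-style name filter listing only the available formats."""
    return ';;'.join(
        f"{ext.upper()} files (*.{ext})" for ext in sorted(track_links)
    )


# Placeholder links: this hypothetical city page offers no KML track
links = {
    'wpt': 'https://example.invalid/track.wpt',
    'gpx': 'https://example.invalid/track.gpx',
}

print(build_save_filter(links))
# prints: GPX files (*.gpx);;WPT files (*.wpt)
```

The resulting string can be passed as the filter argument of a Qt file save dialog, so the user is only offered formats that the city page actually provides.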

I don't see the point of describing how the interface is built: that part is even simpler, and anyone interested can look in the repository. For everyone else, the screenshot above shows the result.

The full source code is on GitHub.